Deep in a quiet suburb, a man who cannot walk and can barely speak sits before a computer screen. Without moving a muscle, and with no mouse or keyboard, he smoothly moves a cursor across the screen, selects icons, types text, and navigates apps. His voice is faint, yet his actions speak volumes. The silent power behind it? A revolutionary brain-computer interface (BCI) that reads signals from the speech motor cortex, a brain region that BCIs had previously tapped only to decode speech.

Researchers at the University of California, Davis have crossed a frontier in neurotechnology, building a system that lets people silenced by disease reconnect with the world, not only through speech but through full control of a computer. This advance, published in the Journal of Neural Engineering, isn’t science fiction. It’s the story of how neural whispers from a paralyzed man’s brain became digital commands, all from a single implant site.

When the Body Stops, the Brain Continues

Neurological diseases like amyotrophic lateral sclerosis (ALS) and stroke are cruel in their efficiency. They can leave cognition largely intact while erasing the body’s ability to respond. For individuals with ALS, the relentless degeneration of upper and lower motor neurons means progressive paralysis: limbs fail, speech slurs, and eventually communication becomes an immense challenge. But crucially, the mind often remains aware, alert, and capable.

Traditional BCIs have sought to bridge this tragic divide. By detecting electrical activity from the brain, these devices translate thought into action: controlling robotic arms, moving cursors, typing words. Most of these systems tap into the dorsal motor cortex, the brain region responsible for planning and executing movements of the arms and hands. When a user imagines reaching out or clicking a mouse, this area activates, and a well-trained decoder can translate those signals into cursor movements or mouse clicks.

But herein lies a limitation. The dorsal motor cortex is specialized for arm and hand movement, not speech. BCIs based on this area can drive a pointer or an on-screen keyboard, but they do not decode what a person is attempting to say. Speech decoding relies on an entirely different brain region.

The Speech Motor Cortex: An Overlooked Gateway

Enter the ventral precentral gyrus, the speech motor cortex. This region coordinates the facial, tongue, and vocal movements that produce speech. BCIs targeting it have shown promising results for decoding speech directly from neural activity. But no one had shown that it could also support non-speech motor tasks like cursor control.

Until now.

The UC Davis team asked a daring question: What if the speech motor cortex could multitask? Could the same area that controls spoken language also support precise motion control of a cursor? By decoding subtle neural activity from just one implant site—where speech lives—could patients gain both communication and navigation abilities?

To test this, they designed an elegant experiment using state-of-the-art brain implants and cutting-edge neural decoding algorithms, all performed in the participant’s own home.

Meet the Mind Behind the Experiment

At the heart of this story is a 45-year-old man living with ALS. With paralysis in all four limbs and severe speech impairment, he was unable to use traditional computers. Yet his mind was sharp, curious, and eager to engage with the world.

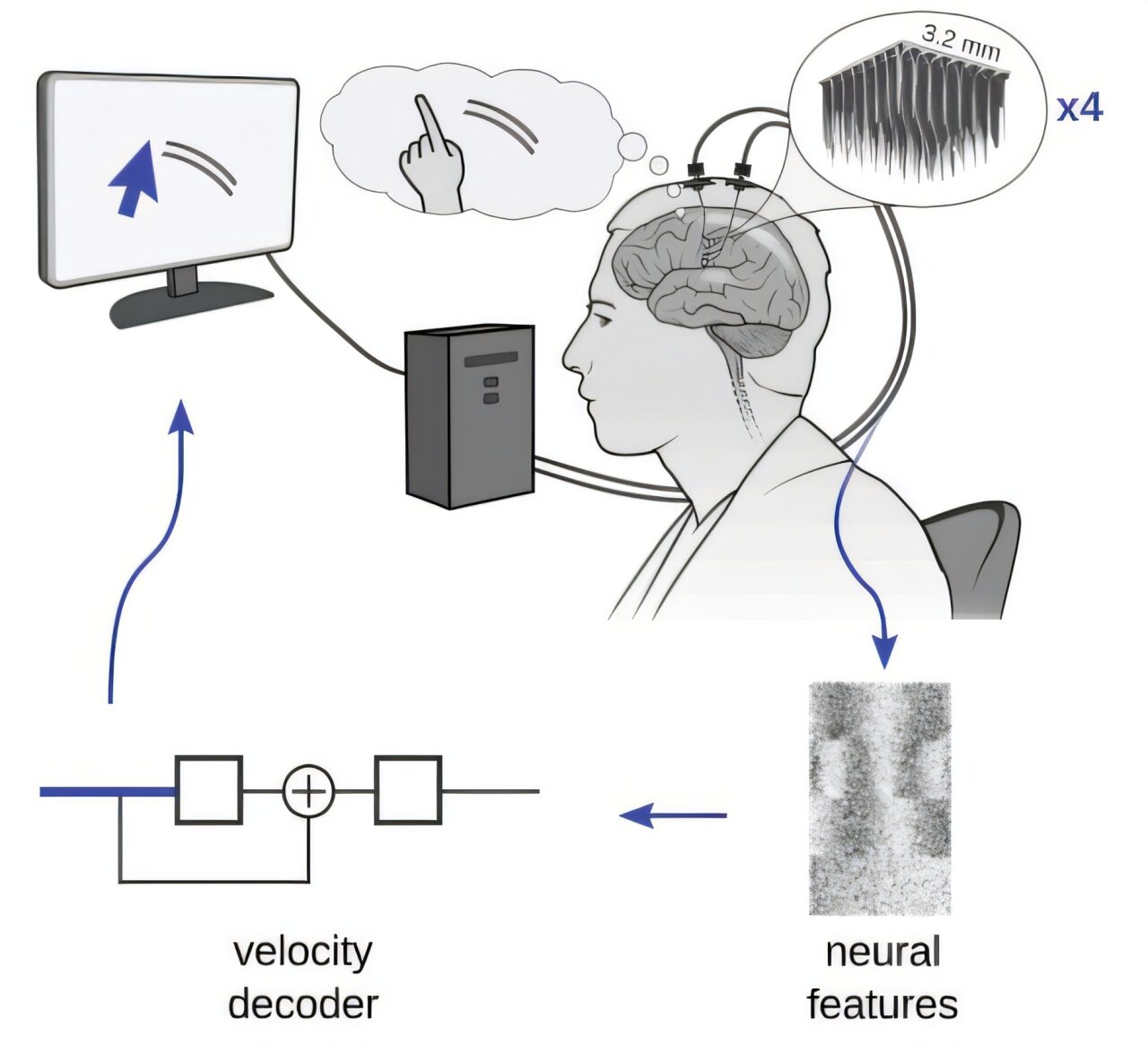

In a delicate surgical procedure, neurosurgeons implanted four 64-electrode arrays (256 electrodes in total) into his speech motor cortex. The placement was carefully planned using preoperative MRI scans aligned with the Human Connectome Project’s cortical mapping. The arrays were placed to decode attempted speech, and the participant had already used them to operate a speech BCI in an earlier study. The new question: could the same arrays also drive a cursor and clicks, with no electrodes in the dorsal motor cortex at all?

The results were astonishing.

Decoding Thought: From Electrode to Cursor

Neural signals are faint electrical fluctuations generated by populations of neurons. The UC Davis system sampled these signals 30,000 times per second and filtered them between 250 and 5,000 Hz to isolate spiking activity. From the filtered signal it extracted two features per electrode, threshold crossings (moments when the voltage dips below a set level, a proxy for neuron spikes) and spike band power, and combined them into a 512-dimensional feature vector (256 electrodes × 2 features) updated every 10 milliseconds.
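
To make the feature-extraction step concrete, here is a minimal Python sketch using NumPy. The 30 kHz sampling rate, the 250 to 5,000 Hz band, the 10 ms bins, and the 256 electrodes come from the description above; everything else is an assumption. The FFT mask is an offline stand-in for the causal filter a real-time system would use, the threshold of −4.5 × RMS is a common convention in intracortical BCI work rather than a value reported for this study, and the input is random noise, not real recordings.

```python
import numpy as np

FS = 30_000          # samples per second, as described in the article
BIN_MS = 10          # feature update interval in milliseconds, as described
BAND = (250, 5_000)  # band-pass edges in Hz, as described
THRESH_MULT = -4.5   # assumption: threshold = -4.5 x per-channel RMS


def bandpass_fft(x, fs=FS, band=BAND):
    """Zero out frequency components outside `band` along the last axis.

    Offline stand-in for the causal filter a real-time BCI would use.
    """
    spec = np.fft.rfft(x, axis=-1)
    freqs = np.fft.rfftfreq(x.shape[-1], d=1.0 / fs)
    spec[..., (freqs < band[0]) | (freqs > band[1])] = 0
    return np.fft.irfft(spec, n=x.shape[-1], axis=-1)


def extract_features(raw):
    """raw: (channels, samples) at FS Hz -> (bins, 2 * channels) features."""
    filt = bandpass_fft(raw)
    n_ch, n_samp = filt.shape
    bin_len = FS * BIN_MS // 1000            # 300 samples per 10 ms bin
    n_bins = n_samp // bin_len
    usable = n_bins * bin_len                # drop any partial final bin

    # Threshold crossings: samples where the signal first drops below the
    # per-channel threshold (RMS computed over the whole recording here).
    thresh = THRESH_MULT * np.sqrt(np.mean(filt ** 2, axis=1))
    below = filt < thresh[:, None]
    onset = np.zeros_like(below)
    onset[:, 1:] = below[:, 1:] & ~below[:, :-1]
    tx = onset[:, :usable].reshape(n_ch, n_bins, bin_len).sum(axis=2)

    # Spike band power: mean squared amplitude of the filtered signal per bin.
    sbp = (filt[:, :usable] ** 2).reshape(n_ch, n_bins, bin_len).mean(axis=2)

    # Per bin: [crossings for every channel, power for every channel].
    return np.concatenate([tx, sbp], axis=0).T


rng = np.random.default_rng(0)
raw = rng.normal(size=(256, FS // 10))      # 100 ms of noise on 256 channels
features = extract_features(raw)
print(features.shape)                        # (10, 512)
```

Two features on each of 256 electrodes is what yields the 512 dimensions, and 100 ms of data produces ten 10 ms feature vectors.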

The real magic happened in the decoder. Using linear regression for cursor movement and logistic regression for clicking, the system continuously adapted to the participant’s brain patterns. The decoder didn’t just translate thoughts—it learned from them, recalibrating in real time to improve speed and accuracy.
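
The two decoders can be sketched in a few lines. This hypothetical Python/NumPy example fits a ridge-regularized linear map from features to 2-D cursor velocity, plus a logistic-regression click classifier, on synthetic data. The ridge penalty, the choice of velocity as the regression target, gradient-descent fitting, and all function names are illustrative assumptions, not the study’s code; 4,000 bins simply corresponds to 40 seconds of 10 ms updates. The sketch does not model the ongoing recalibration.

```python
import numpy as np

rng = np.random.default_rng(1)
N_FEAT = 512  # feature dimension described in the article


def add_bias(X):
    """Append a constant column so each model learns an intercept."""
    return np.hstack([X, np.ones((len(X), 1))])


def fit_linear_velocity(X, V, lam=1.0):
    """Ridge least squares (penalty also on the bias, for simplicity).

    Returns W with shape (N_FEAT + 1, 2) mapping features to (vx, vy).
    """
    Xb = add_bias(X)
    A = Xb.T @ Xb + lam * np.eye(Xb.shape[1])
    return np.linalg.solve(A, Xb.T @ V)


def fit_logistic_click(X, y, lr=0.1, steps=500):
    """Logistic regression for P(click | features), fit by batch gradient descent."""
    Xb = add_bias(X)
    w = np.zeros(Xb.shape[1])
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-Xb @ w))
        w -= lr * Xb.T @ (p - y) / len(y)  # gradient of mean log-loss
    return w


def decode_step(x, W_vel, w_click, threshold=0.5):
    """One 10 ms update: (velocity, click?) from a single feature vector."""
    xb = np.append(x, 1.0)
    p_click = 1.0 / (1.0 + np.exp(-xb @ w_click))
    return xb @ W_vel, p_click > threshold


# Synthetic "calibration" data: 40 s at one bin per 10 ms = 4,000 bins.
n = 4000
X = rng.normal(size=(n, N_FEAT))
true_W = rng.normal(scale=0.1, size=(N_FEAT, 2))
V = X @ true_W + rng.normal(scale=0.1, size=(n, 2))   # intended velocity
y = (X[:, 0] + X[:, 1] > 0).astype(float)             # intended click labels

W_vel = fit_linear_velocity(X, V)
w_click = fit_logistic_click(X, y)
vel, click = decode_step(X[0], W_vel, w_click)
```

Linear models like these are cheap enough to refit in seconds, which is one reason short calibration periods are feasible.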

Calibration took just 40 seconds. Within a minute, the participant was moving a cursor across the screen using only brain signals from his speech cortex.

Performance and Precision: Numbers That Speak for Themselves

Over months of testing, the system evolved from concept to capability. Three task paradigms were used: Radial8 Calibration, Grid Evaluation, and Simultaneous Speech and Cursor tasks. Across all, the participant’s performance was extraordinary.

In over 1,200 trials, he achieved 93% accuracy in target selection: more than nine times out of ten, when he attempted to move and click with his mind, the system did what he intended. Information throughput rose from 1.67 bits per second in early trials to a peak of 3.16 bits per second in optimized sessions. A bit is the information carried by one yes/no choice, so 1 bit per second is equivalent to one error-free binary decision every second; crossing 3 bits per second is considered a strong result for BCI cursor control.
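
Cursor BCI studies commonly report throughput as an "achieved bitrate" from grid-selection tasks, B = log2(N − 1) × max(Sc − Si, 0) / t, where N is the number of selectable targets, Sc and Si are the correct and incorrect selections, and t is the elapsed time. We assume, without confirmation from the paper, that a metric of this form underlies the figures above. The 36-target grid and the selection counts below are hypothetical.

```python
import math


def achieved_bitrate(n_targets, n_correct, n_incorrect, seconds):
    """Achieved bitrate in bits/s: log2(N - 1) * max(Sc - Si, 0) / t.

    Each net correct selection among N targets is credited log2(N - 1) bits;
    every incorrect selection cancels a correct one, since it must be undone.
    """
    if n_targets < 3 or seconds <= 0:
        raise ValueError("need at least 3 targets and a positive duration")
    net = max(n_correct - n_incorrect, 0)
    return math.log2(n_targets - 1) * net / seconds


def net_selections_per_minute(bits_per_second, n_targets):
    """Invert the formula: net correct selections per minute at a given bitrate."""
    return 60 * bits_per_second / math.log2(n_targets - 1)


# Hypothetical one-minute block on a 36-target grid.
print(f"{achieved_bitrate(36, 30, 2, 60):.2f} bits/s")

# What the article's early and peak figures would mean on that same grid.
for bps in (1.67, 3.16):
    print(f"{bps} bits/s ~ {net_selections_per_minute(bps, 36):.1f} net selections/min")
```

Under these assumptions, the rise from 1.67 to 3.16 bits per second nearly doubles the number of net correct selections per minute. The metric is deliberately conservative: errors are subtracted, not just ignored.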

Click classification, a critical component for real-world use, exceeded chance when decoded from each array on its own. One array in particular carried the lion’s share of the decoding power, suggesting that well-targeted placement can yield robust performance even from a small neural sample.

Speech and Cursor: The Brain’s Balancing Act

Perhaps the most intriguing aspect of the study came when the participant attempted to control the cursor and speak simultaneously. Here, researchers uncovered a subtle but important challenge: speech production interfered with cursor control.

Target acquisition times increased by about a second during speech, suggesting that speaking and cursor control compete for overlapping neural resources. Still, the participant completed both tasks, and improved decoder designs may be able to reduce this interference and support seamless multimodal interaction.

Even more promising, the entire system functioned within the participant’s home environment—a critical step for real-world deployment. No hospital, no lab. Just a man, his mind, and a machine that listened.

Beyond the Lab: A Glimpse into the Future

This work opens up thrilling possibilities for the future of assistive technology. For individuals with ALS, stroke, spinal cord injury, or brainstem disorders, the prospect of controlling a computer, typing emails, or interacting on social media through a single BCI implant site is no longer a distant hope. It is moving toward reality.

Until now, patients and clinicians had to choose between BCIs optimized for speech or for motion. Dual-implant setups—targeting both dorsal and ventral motor cortices—are surgically complex and often infeasible. The UC Davis study shows that this trade-off might not be necessary. One implant site, when decoded with precision, can unlock multiple abilities.

It’s a proof-of-concept with profound implications: the brain is more flexible and interconnected than we ever imagined.

Ethical, Technical, and Emotional Dimensions

As with all neural technology, this research brings important ethical and technical questions to the fore. Who owns the neural data? How do we ensure privacy, agency, and consent when decoding thoughts? What happens when a device begins to act as an extension of the self?

Yet for all its complexity, the emotional dimension is clear. Restoring autonomy to someone locked inside their body is more than a technological achievement—it’s a human triumph. The UC Davis participant now uses his BCI daily to manage tasks that once required a caregiver. The simple act of clicking a file, opening a document, or writing a message becomes a powerful assertion of independence.

Reimagining Communication Itself

Brain-computer interfaces are no longer just tools for restoring lost function—they are beginning to redefine what communication means. By tapping into the speech motor cortex, this research hints at future systems that combine rapid neural speech decoding with intuitive computer control. Imagine dictating an email and navigating your inbox entirely by thought. Imagine art created directly from neural intention. Imagine silent conversations through decoded brain activity.

This is the dawn of multimodal BCIs—technologies that don’t just replace the functions we’ve lost, but expand them in directions we’ve never dreamed.

A Whisper Turns into a Voice

The man in the study cannot walk. He struggles to speak. But through this neural interface, his thoughts race across the screen, clear and directed. He’s no longer a passive observer of technology. He’s its pilot.

And that is the essence of this breakthrough. A whisper from the speech cortex has become a voice in the machine—a testament to human ingenuity, resilience, and the boundless capacity of the brain to adapt, even when the body cannot.

As we move forward, this work from UC Davis will undoubtedly inspire a new generation of BCIs—systems that speak for those who cannot, move for those who cannot, and connect those who have been isolated too long. In doing so, they remind us that the mind is still the greatest interface we’ve ever known.

Reference: Tyler Singer-Clark et al., "Speech motor cortex enables BCI cursor control and click," Journal of Neural Engineering (2025). DOI: 10.1088/1741-2552/add0e5. Preprint on bioRxiv, DOI: 10.1101/2024.11.12.623096