Imagine a world where machines don’t just compute—they think. Where computers don’t process instructions in a rigid, linear fashion, but adapt, learn, and respond in a manner ever closer to the fluid intelligence of living brains. This isn’t the plot of science fiction—it’s the frontier of modern technology. At the heart of this revolution are neuromorphic chips, hardware designed not merely to compute faster, but to process information like biological systems.

Neuromorphic computing stands apart from traditional digital computing. While conventional computers excel at arithmetic, logic, and deterministic tasks, they struggle with flexibility, pattern recognition, and energy-efficient learning—capabilities that human brains achieve effortlessly. Neuromorphic chips aim to bridge that gap by imitating the architecture and dynamics of neural systems. They don’t just simulate neurons in software; they embed the very principles of neural computation in physical circuits. In doing so, neuromorphic chips offer a new paradigm of information processing—one that could reshape everything from artificial intelligence to robotics, from healthcare to the nature of human-machine collaboration.

To truly appreciate the significance of this technology, we must explore its origins, its inspirations, its challenges, and its breathtaking potential. This is a journey through the mind and machine—an exploration of how we might build computers that don’t just compute, but think.

The Inspiration: Why Look to the Brain?

For centuries, the human brain has been a source of awe. This three-pound organ, composed of billions of neurons and trillions of connections, orchestrates perception, memory, emotion, creativity, and decision-making. It does so with remarkable efficiency. The brain consumes roughly 20 watts of power, less than a typical incandescent lightbulb, yet in tasks like pattern recognition and sensory integration it holds its own against supercomputers that draw megawatts.

Traditional computers are extraordinary for certain tasks, such as precise calculations, repetitive operations, and database retrieval, but they pale in comparison when it comes to contextual understanding, adaptation, and generalization. A supercomputer might beat a human in chess, but recognizing a friend’s face in a crowded room takes it extensive training and far more energy than the brain spends.

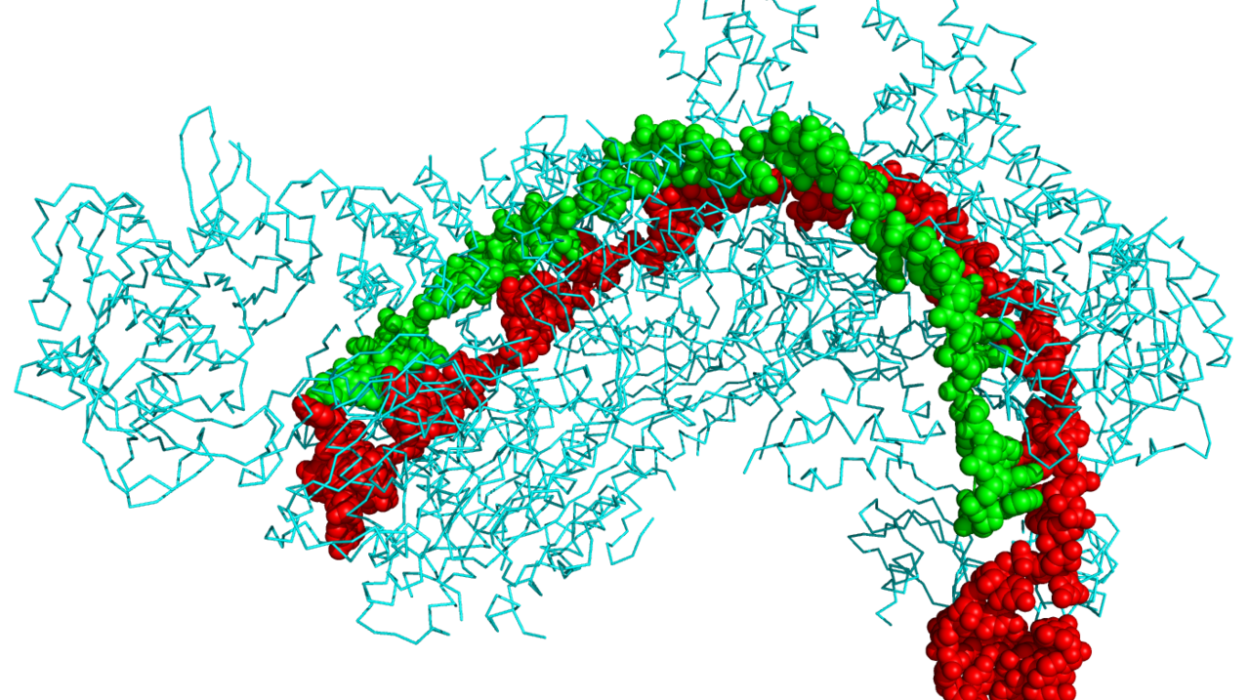

The human brain doesn’t compute by fetching instructions from memory and executing them sequentially. Instead, it processes information in parallel, through dynamic networks of neurons that transmit signals via electrochemical pulses. These neurons adjust their connections based on experience—a process known as synaptic plasticity—forming the basis of learning and memory.

Neuromorphic engineering brings this biological blueprint into the realm of hardware design. By rethinking computation from the ground up, engineers hope to capture the brain’s strengths—parallelism, adaptability, and efficiency—without merely mimicking its outward behaviors.

A New Kind of Architecture

At the core of neuromorphic chips is a radical departure from traditional computing architectures. In conventional systems, memory and processing are separate: data is stored in one place, and the processor fetches and manipulates it. This separation creates a bottleneck known as the von Neumann bottleneck. Data shuttles back and forth, consuming time and energy.

Neuromorphic chips collapse this divide. They embed memory and computation into the same physical locations, echoing the structure of neural networks where synapses both store information and compute signals. In neuromorphic hardware, each artificial “neuron” can process signals and adjust its connections without needing a centralized controller or a separate memory bank.

The communication among these units also differs. Instead of values refreshed on every tick of a global clock, neuromorphic circuits often use spikes: brief pulses that resemble the action potentials of biological neurons. These spikes are event-driven; they occur only when there is something to signal. This sparse, asynchronous communication can dramatically reduce energy consumption because components are active only when processing meaningful information.
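To make the event-driven idea concrete, here is a minimal Python sketch. It does not model any particular chip. It simply counts how much work a clocked scheme does (every input examined at every time step) against an event-driven scheme (work only when a spike arrives). The function names, the spike trains, and the use of "updates" as a stand-in for energy are all illustrative assumptions.

```python
def clocked_updates(spike_trains):
    """Count work if every input is examined at every time step."""
    return sum(len(train) for train in spike_trains)


def event_driven_updates(spike_trains):
    """Count work if only actual spikes (1s) trigger processing."""
    return sum(sum(train) for train in spike_trains)


# Hypothetical activity: 4 inputs over 10 time steps (1 = spike, 0 = silence).
spike_trains = [
    [0, 0, 1, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 1, 0, 0],
    [0, 0, 0, 0, 0, 0, 0, 0, 0, 0],
    [1, 0, 0, 0, 0, 1, 0, 0, 0, 0],
]

dense = clocked_updates(spike_trains)
sparse = event_driven_updates(spike_trains)
print(f"clocked: {dense} updates, event-driven: {sparse} updates")
# prints "clocked: 40 updates, event-driven: 4 updates"
```

The gap widens as activity gets sparser. An update count is only a rough proxy, though; real energy savings depend on the circuit and the workload.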

Neuromorphic chips thus embody a philosophy: computation should be efficient, adaptive, and capable of responding to the world as it unfolds—just like a living brain.

Building Blocks: Neurons and Synapses in Silicon

To translate biological principles into silicon, engineers must define hardware equivalents of neurons and synapses. In neuromorphic chips, artificial neurons are electronic circuits that integrate incoming signals and fire a spike when a threshold is reached. Synapses—connections between neurons—are implemented with devices that can change strength over time, thereby encoding memory.
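The integrate-and-fire behavior described above can be sketched in a few lines of Python. This is a simplified leaky integrate-and-fire model in discrete time, offered as an illustration rather than a description of any specific chip. The function name `simulate_lif` and the values chosen for `threshold`, `leak`, and `reset` are assumptions made for the example.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Leaky integrate-and-fire neuron in discrete time.

    Each step the membrane potential decays by the factor `leak`,
    adds the incoming signal, and emits a spike (then resets)
    once it reaches `threshold`.
    """
    potential = reset
    spikes = []
    for current in input_current:
        potential = leak * potential + current  # integrate with leak
        if potential >= threshold:
            spikes.append(1)                    # fire
            potential = reset                   # start over after a spike
        else:
            spikes.append(0)
    return spikes


# Hypothetical input: two bursts of weak signals separated by silence.
inputs = [0.5, 0.5, 0.5, 0.0, 0.0, 0.5, 0.5, 0.5]
print(simulate_lif(inputs))
# prints [0, 0, 1, 0, 0, 0, 0, 1]
```

No single input here is strong enough to trigger a spike. The neuron fires only once enough signal has accumulated, which is the thresholding behavior the hardware circuits are built to reproduce.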

These synaptic weights are crucial. In biological brains, learning is the process of strengthening or weakening synaptic connections based on experience. In neuromorphic systems, similar adaptation is achieved through mechanisms that adjust synaptic weights in response to spiking patterns. Some implementations even use emerging nanoscale devices, such as memristors, whose resistance changes based on past electrical activity, much as a biological synapse changes strength with use.

Memristors and other novel devices promise not just efficiency but density. They can pack vast numbers of synapses into tiny areas, offering the potential for brain-scale complexity in hardware. The dream is not to replicate every detail of a human brain but to harness its principles to build systems that learn and adapt dynamically.

Learning in Neuromorphic Systems

One of the defining features of intelligence is the ability to learn from experience. Neuromorphic chips are inherently suited for learning because their architecture supports dynamic adaptation. Unlike traditional AI systems—where learning happens in software, often on powerful external servers—neuromorphic hardware can learn on the edge. This means devices can adapt to new information in real time, without relying on cloud connectivity or massive energy resources.

Learning in neuromorphic systems often draws on models like spike-timing-dependent plasticity (STDP), where the timing of spikes influences whether synaptic connections strengthen or weaken. If a presynaptic neuron fires just before a postsynaptic one, the connection tends to strengthen, reinforcing patterns that occur predictably; if it fires just after, the connection tends to weaken. This is a timing-sensitive form of Hebbian learning, often summarized as “neurons that fire together, wire together.”
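One common way to write this timing rule down is a pair-based update with an exponential window: the closer together two spikes are, the larger the weight change, and the sign depends on which neuron fired first. The Python sketch below follows that textbook form. The names `stdp_delta` and `apply_stdp`, the constants `a_plus`, `a_minus`, and `tau`, and the weight bounds are illustrative assumptions, not parameters of any real device.

```python
import math


def stdp_delta(dt, a_plus=0.05, a_minus=0.055, tau=20.0):
    """Weight change for one spike pair, where dt = t_post - t_pre (ms)."""
    if dt > 0:   # presynaptic spike came first: strengthen
        return a_plus * math.exp(-dt / tau)
    if dt < 0:   # postsynaptic spike came first: weaken
        return -a_minus * math.exp(dt / tau)
    return 0.0   # simultaneous spikes: left unchanged in this sketch


def apply_stdp(weight, dt, w_min=0.0, w_max=1.0):
    """Apply the update and keep the weight inside [w_min, w_max]."""
    return min(w_max, max(w_min, weight + stdp_delta(dt)))


w = 0.5
w = apply_stdp(w, dt=5.0)    # pre fired 5 ms before post: w increases
w = apply_stdp(w, dt=-5.0)   # post fired 5 ms before pre: w decreases
print(round(w, 4))
```

Clipping the weight to a fixed range is a design choice made here to keep the example stable; physical synapses have their own limits, which vary by technology.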

This ability to learn in situ enables neuromorphic systems to tackle tasks that are difficult for traditional AI. For instance, a neuromorphic sensor network could adapt to changing environmental conditions, recognize patterns in noisy data, and adjust its responses without needing explicit reprogramming.

Why Neuromorphic Computing Matters

Neuromorphic computing promises transformative advances across many domains because it addresses some of the most persistent challenges in computing.

Traditional AI systems require enormous computational resources and energy. Training a single state-of-the-art deep learning model can consume as much energy as several households use in a year. In contrast, neuromorphic chips excel at energy-efficient processing. Their event-driven nature and localized computation mean they activate only when necessary. This makes them ideal for battery-powered devices, autonomous robots, and distributed sensor networks.

Energy efficiency is not merely a convenience—it’s essential. As data proliferates and AI becomes ubiquitous, the energy footprint of computation becomes a global concern. Neuromorphic computing offers a path to sustainable intelligence, where machines can learn and interact without draining resources.

Moreover, neuromorphic systems bring intelligence closer to the physical world. Traditional AI often relies on centralized servers and cloud computing, which introduces latency and dependency on network connectivity. Neuromorphic chips, embedded directly in sensors or devices, can process information locally in real time. This opens new possibilities for real-time decision-making in robotics, autonomous vehicles, smart cities, and wearable technology.

Applications: From Perception to Action

Neuromorphic computing is already finding applications that showcase its unique strengths.

In sensory processing, neuromorphic vision sensors capture visual information not as a stream of redundant frames, but as events—changes in light intensity. This mirrors how biological eyes work, responding to motion and contrast rather than static scenes. Coupled with neuromorphic processors, these sensors can detect movement and patterns with minimal energy, enabling drones and robots to navigate complex environments with agility and speed.
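The difference between frames and events can be illustrated with a small Python sketch that turns a sequence of frames into change events. This is a deliberate simplification: real event-based sensors typically work asynchronously per pixel rather than from stored frames. The function name `frames_to_events`, the `threshold` value, and the tiny 2x2 "images" are assumptions made for the example.

```python
def frames_to_events(frames, threshold=0.2):
    """Convert frames (2D lists of brightness values) into change events.

    An event (t, row, col, polarity) is emitted when a pixel differs from
    its last reported value by at least `threshold`. Polarity is +1 for
    brighter and -1 for darker.
    """
    reference = [row[:] for row in frames[0]]  # last reported brightness
    events = []
    for t, frame in enumerate(frames[1:], start=1):
        for r, row in enumerate(frame):
            for c, value in enumerate(row):
                diff = value - reference[r][c]
                if abs(diff) >= threshold:
                    events.append((t, r, c, 1 if diff > 0 else -1))
                    reference[r][c] = value
    return events


# Hypothetical scene: a bright spot appears, stays put, then moves.
frames = [
    [[0.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.5], [0.0, 0.0]],
    [[0.0, 0.5], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 0.5]],
]
print(frames_to_events(frames))
# prints [(1, 0, 1, 1), (3, 0, 1, -1), (3, 1, 1, 1)]
```

The unchanged third frame produces no events at all, which is where the savings come from: a static scene generates no data to transmit or process.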

Robotics stands to benefit profoundly. Traditional robots follow predefined instructions and struggle with unpredictable environments. Neuromorphic systems, by contrast, can adapt on the fly. A neuromorphic robot could learn to adjust its gait on uneven terrain, recognize objects through tactile feedback, and coordinate behaviors with fluidity reminiscent of biological creatures.

Healthcare could be another major beneficiary. Neuromorphic chips could power advanced prosthetics that respond to neural signals, offering more natural movement and control for amputees. They could drive wearable health monitors that detect subtle physiological changes, learning personal patterns to alert users of potential issues. In neuroscience research itself, neuromorphic platforms can serve as testbeds for theories of brain function, enabling scientists to model and experiment with large-scale neural systems in real time.

Even communication networks could be transformed. Traditional networks route information based on predefined protocols. Neuromorphic networks could adapt to traffic patterns, prioritize essential data, and recover from disruptions in ways that mirror biological resilience.

The Emotional Core of Technology

At first glance, neuromorphic chips may seem like dry circuitry and abstract algorithms. But beneath the technical terminology lies a deeply human story. This technology reflects our enduring desire to understand ourselves—to uncover the mysteries of cognition, learning, and consciousness. Every neuromorphic breakthrough brings us a little closer to answering ancient questions: How does the mind work? Can intelligence be distilled into physical processes? What does it mean to think?

Neuromorphic computing is also a testament to human creativity and ingenuity. It represents a shift from brute computational power to elegant efficiency. It challenges us to rethink what computing can be, not just what it has been. In doing so, it ignites our imagination and invites us to envision a future where machines don’t just calculate, but participate in learning and discovery.

This emotional resonance is what makes the field compelling—not just to engineers and scientists, but to anyone who wonders about the nature of intelligence, the essence of innovation, and the future of human-machine collaboration.

Challenges and Limitations

For all its promise, neuromorphic computing remains an emerging field with significant challenges. Building hardware that truly captures the complexity of biological systems is no easy feat. Biological brains are not just networks of neurons; they are shaped by evolution, chemistry, and development in ways that hardware can only approximate.

Scaling neuromorphic systems to brain-like complexity presents engineering hurdles. While memristors and other novel devices offer density, reliability and yield remain concerns. Fabricating vast arrays of synapses that behave consistently and predictably is a technological challenge that researchers are still tackling.

Programming neuromorphic chips is another frontier. Traditional computing has decades of software tools, languages, and development frameworks. Neuromorphic systems require new paradigms for programming and training. Instead of writing step-by-step instructions, developers need to think in terms of spikes, plasticity, and distributed learning—concepts that are unfamiliar to many programmers.

Integration with existing infrastructure also poses questions. How do neuromorphic chips coexist with traditional systems? What interfaces and protocols will allow seamless collaboration between conventional processors and neuromorphic units? These are active areas of research and development, and their solutions will shape how widely the technology is adopted.

Ethical considerations loom as well. As machines become better at tasks once thought uniquely human—pattern recognition, decision-making, adaptation—we must confront questions about autonomy, accountability, and the nature of intelligence. Neuromorphic computing does not itself endow consciousness, but as systems grow more complex and interactive, the line between tool and agent becomes harder to define.

The Broader Landscape: AI and Beyond

Neuromorphic computing does not exist in isolation. It is part of a broader ecosystem of artificial intelligence and advanced computation. Today’s AI systems, many based on deep learning and large neural networks, have already transformed industries. They excel at recognizing speech, translating languages, and generating creative content. But these systems are typically trained and deployed on conventional hardware, with heavy reliance on powerful GPUs and cloud infrastructure.

Neuromorphic chips offer a complementary path. Instead of running large neural networks in software, they embody neural computation in hardware. This could enable more efficient, responsive, and decentralized AI. Rather than processing data in distant data centers, devices at the edge—smart sensors, robots, wearables—could think locally, respond instantly, and learn continuously.

The synergy between AI and neuromorphic computing points toward hybrid systems. Conventional AI could handle large-scale learning and global coordination, while neuromorphic modules manage real-time perception, adaptation, and low-power decision-making. This combination could unlock capabilities that neither approach achieves alone.

Neuromorphic Computing in Society

As neuromorphic technology matures, its impact will ripple through society. In education, it could power adaptive learning systems that tailor instruction to individual students, responding to their strengths and challenges in real time. In transportation, neuromorphic processors could help autonomous vehicles navigate safely and efficiently, even in complex environments. In environmental monitoring, sensor networks could track changes in ecosystems, learning to detect anomalies and predict risks before they escalate.

In industry, smart manufacturing systems could use neuromorphic controllers to optimize processes, reduce waste, and adapt to shifting demands. In entertainment and human-computer interaction, responsive systems could create immersive experiences that feel intuitive and alive.

These applications are not distant fantasies—they are emerging realities. As prototypes evolve into commercial products, neuromorphic chips will start to appear in everyday devices, quietly reshaping how we interact with technology and how technology interacts with the world.

A Human Future with Thinking Machines

Neuromorphic computing invites us to imagine machines not as tools that merely obey instructions, but as systems that participate in a form of learning and adaptation. This does not mean creating consciousness or replacing human agency. Rather, it means building machines that complement our strengths, augment our capabilities, and expand the realm of what is possible.

The journey is as much philosophical as technical. What does it mean to think? Can learning be divorced from embodiment? How do we ensure that intelligent systems align with human values? These questions are woven into the fabric of neuromorphic research, reminding us that technology is not just about circuits and code—it is about meaning, purpose, and human flourishing.

In the end, neuromorphic chips are not just a new class of hardware. They are a testament to humanity’s deepest drive: to understand the mind and to create systems that reflect that understanding. They embody our yearning to transcend limitations, to craft machines that resonate with the rhythms of life itself.

As we venture forward, we will encounter challenges and surprises. We will refine our models of intelligence, confront ethical dilemmas, and redefine what it means to be human in a world where machines can learn, adapt, and think. Through it all, neuromorphic computing will not just change technology; it will change our story.

The Dawn of Thinking Machines

Neuromorphic chips mark a bold step in our ongoing quest to bridge the gap between biology and computation. They challenge us to rethink what computing can be, to seek systems that are not only powerful but alive with adaptive potential. In doing so, they illuminate the path toward a future where machines and minds co-evolve, where intelligence is not confined to silicon or neurons, but flows between them in ways we are only beginning to imagine.

This is the story of neuromorphic computing—an unfolding narrative of discovery, innovation, and human aspiration. It reminds us that the greatest leaps in technology come not from incremental speedups, but from new ways of thinking. As we build machines that think more like us, we learn more about what it means to think at all.