Artificial intelligence has long been a dream of human imagination: building machines that could think, reason, and perhaps one day rival the human mind. For decades, researchers have tried to capture intelligence in algorithms, and their work has divided into two powerful traditions. Symbolic reasoning models intelligence through logic and rules, while neural networks imitate the brain’s ability to learn from patterns. Each approach has achieved remarkable things, but each has struggled with its own limitations.

Today, we are entering a transformative era where these two traditions no longer stand apart. They are being woven together into what is called neuro-symbolic AI—a hybrid approach that combines the adaptability of neural learning with the structure and reasoning of symbolic logic. It is not just another buzzword in the crowded landscape of artificial intelligence research. It is a bold attempt to create systems that are both flexible and understandable, both powerful and trustworthy.

To understand neuro-symbolic AI is to understand not only a new chapter in technology, but also a reflection of how the human mind itself balances instinct and reason, perception and thought.

The Symbolic Roots of Intelligence

Before deep learning took the spotlight, symbolic AI dominated the field. In the mid-20th century, researchers believed intelligence could be achieved by encoding human knowledge directly into machines. They built systems that worked with symbols—abstract representations of objects, concepts, and rules. For example, if you wanted a symbolic AI system to reason about family relationships, you could program it with rules like: “If X is the parent of Y, and Y is the parent of Z, then X is the grandparent of Z.”
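The family example can be made concrete with a minimal Python sketch of how a symbolic system might apply such a rule. The function applies the grandparent rule once to all known facts, which is one forward-chaining step. The (relation, subject, object) triple format, the function name `infer_grandparents`, and the people in it are illustrative assumptions and are not taken from any particular expert-system tool. Each derived fact also records the two facts that produced it, the kind of traceable justification symbolic systems are valued for.

```python
# One forward-chaining step over a tiny knowledge base of (relation, subject, object) facts.
# Rule: parent(X, Y) and parent(Y, Z)  =>  grandparent(X, Z)

def infer_grandparents(facts):
    """Return each derived grandparent fact mapped to the two facts that justify it."""
    parents = [(x, y) for (rel, x, y) in facts if rel == "parent"]
    derived = {}
    for x, y in parents:
        for y2, z in parents:
            if y == y2:  # the shared middle person links the two parent facts
                derived[("grandparent", x, z)] = (("parent", x, y), ("parent", y, z))
    return derived


facts = {
    ("parent", "alice", "bob"),
    ("parent", "bob", "carol"),
    ("parent", "bob", "dan"),
}

for conclusion, (first, second) in sorted(infer_grandparents(facts).items()):
    print(conclusion, "because", first, "and", second)
```

Nothing here was learned from data. Every conclusion follows from a hand-written rule, and the recorded premises explain it.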

This approach gave rise to expert systems in the 1970s and 1980s, which encoded the knowledge of doctors, engineers, or other professionals into sets of logical rules. Symbolic AI excelled at structured reasoning and could produce explanations for its conclusions, something that still eludes many modern machine learning systems.

But symbolic AI had weaknesses. It required enormous manual effort to encode knowledge, and it struggled with ambiguity, uncertainty, and the sheer messiness of real-world data. The world is not always cleanly described in logic. Cats do not always sit still to be categorized, and human language is full of nuance, exceptions, and contradictions. Symbolic systems proved brittle, unable to scale to the complexity of perception and everyday experience.

The Rise of Neural Networks

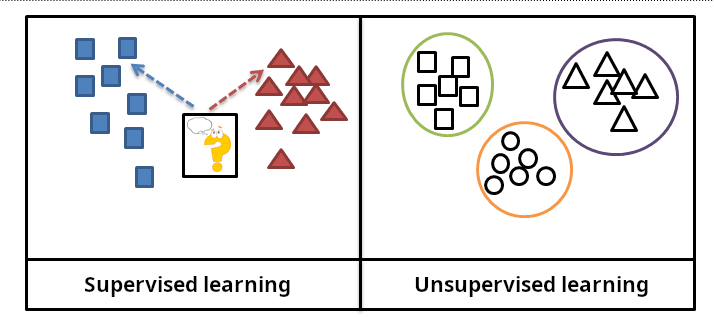

The limitations of symbolic AI set the stage for the resurgence of neural networks, a technology inspired by the way biological neurons process signals. Unlike symbolic systems, which relied on human-crafted rules, neural networks learned patterns directly from data. Feed enough labeled examples of cats and dogs into a neural network, and it could learn to distinguish them with astonishing accuracy—without ever being told what a “whisker” or “paw” was.
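Learning from labeled examples, rather than from hand-written rules, can be illustrated with a perceptron, one of the simplest neural models. The sketch below assumes each example has already been reduced to two numbers. The features, the data, and the cat/dog labels are made up for illustration, and real image classifiers learn from raw pixels with far larger networks. The model is never told what either feature means. It only nudges its weights whenever it misclassifies an example.

```python
# A toy perceptron: it learns a linear decision rule from labeled examples
# by adjusting its weights only when it makes a mistake.

def predict(w, b, x):
    activation = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if activation > 0 else -1


def train_perceptron(examples, epochs=20, lr=0.1):
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, label in examples:  # label is +1 or -1
            if predict(w, b, x) != label:
                # Shift the decision boundary toward classifying x correctly.
                w = [wi + lr * label * xi for wi, xi in zip(w, x)]
                b += lr * label
    return w, b


# Made-up two-feature examples: +1 stands for "cat", -1 for "dog".
examples = [
    ((0.9, 0.2), 1), ((0.8, 0.3), 1), ((0.7, 0.1), 1),
    ((0.2, 0.9), -1), ((0.3, 0.8), -1), ((0.1, 0.7), -1),
]

w, b = train_perceptron(examples)
print(predict(w, b, (0.85, 0.25)))  # 1
```

On this small, linearly separable dataset, the perceptron settles on weights that classify every training example correctly. The same weights then label the unseen point (0.85, 0.25) as +1.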

This ability to learn from raw data led to the explosive rise of deep learning in the 2010s. Convolutional neural networks transformed computer vision, recurrent networks captured the sequential flow of language, and transformers revolutionized natural language processing, powering systems like modern chatbots and translation tools. Performance kept improving as datasets and computing power grew, producing breakthroughs in areas once thought intractable.

But deep learning also has its shortcomings. Neural networks are often described as “black boxes”: they can make accurate predictions, but they rarely explain how they arrived at them. They require massive amounts of data and computing power. Most importantly, their capacity for reliable, systematic reasoning is limited. A deep learning model might recognize the objects in an image but fail to understand the relationships between them. It might generate fluent sentences without truly grasping meaning or context.

The Case for a Hybrid Approach

If symbolic AI is strong at reasoning but weak at perception, and neural networks are strong at perception but weak at reasoning, then the natural question arises: Why not combine them?

This is the essence of neuro-symbolic AI. By merging the statistical learning of neural networks with the structured logic of symbolic systems, researchers aim to build machines that can both learn from messy real-world data and reason abstractly about it. A neuro-symbolic system could look at an image, identify the objects within it, and then use symbolic reasoning to answer questions about relationships, causes, or consequences.

Consider a simple example: an image of a dog chasing a ball, a scene any child understands at a glance. A purely neural model might recognize “dog” and “ball” but struggle to explain the action. A symbolic system, given the concepts of chasing, dogs, and balls, could reason about what is happening, but only if someone first tells it what the image contains. A neuro-symbolic model could combine these abilities by learning to perceive the scene and then reasoning about its meaning.

This hybrid vision is not just about improving performance. It is also about creating AI systems that are more interpretable, trustworthy, and aligned with human values.

Lessons from the Human Mind

Neuro-symbolic AI does not only arise from practical engineering concerns. It also mirrors the dual nature of human cognition. Psychologists and neuroscientists often describe the mind as operating with two complementary systems: one fast, intuitive, and pattern-driven, the other slow, deliberate, and rule-based.

When you recognize a face in a crowd, you rely on the first system—an instinctive, neural-like ability to match patterns. When you solve a logic puzzle or plan a chess move, you rely on the second—a symbolic-like process of deliberate reasoning. Human intelligence emerges from the interplay of both.

By combining neural learning and symbolic logic, neuro-symbolic AI aspires to replicate this balance. It seeks not just to imitate one aspect of human thought, but to capture the richness of intelligence itself.

Building Blocks of Neuro-Symbolic Systems

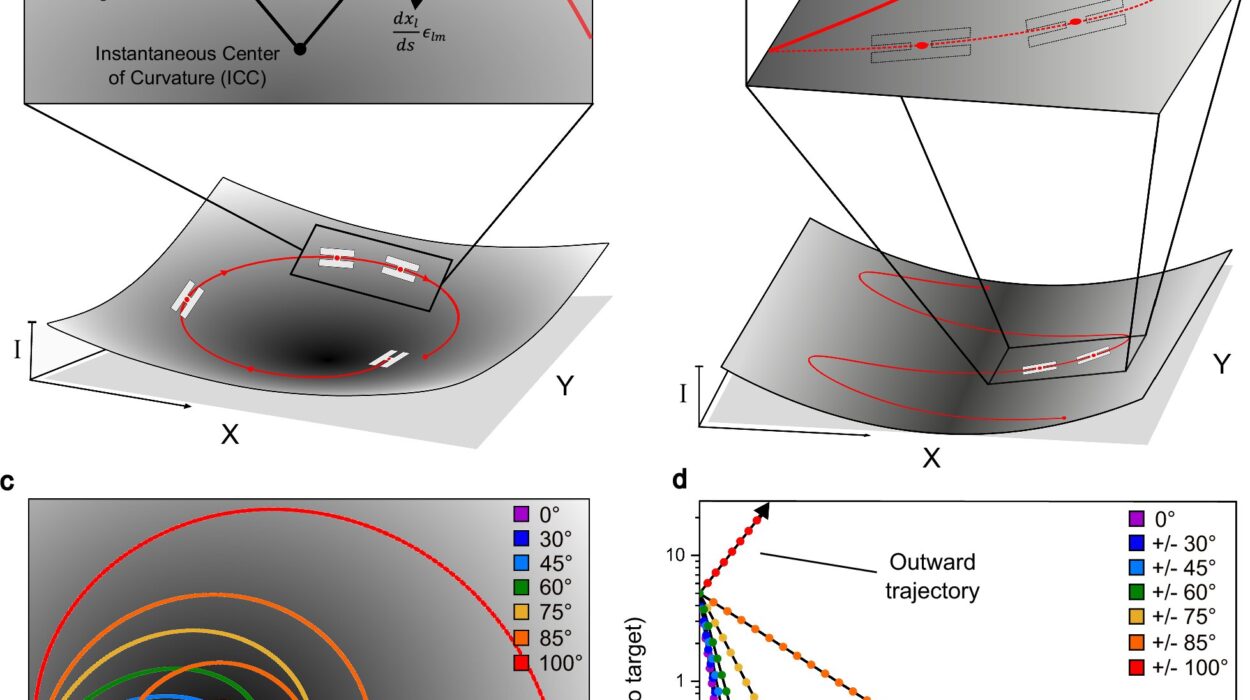

The architecture of neuro-symbolic AI varies widely, but certain principles guide its design. Neural networks often serve as the perceptual front-end, converting raw data—images, text, sound—into symbolic representations. These symbols can then be manipulated by a reasoning engine, which applies logic, rules, or constraints to draw inferences.

For instance, a vision model might identify objects in an image, and a symbolic engine could then reason about spatial relationships: “The cup is on the table,” or “The cat is under the chair.” In natural language processing, a neural model might parse sentences into grammatical structures, which a symbolic system could then use to reason about meaning or context.
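The step from perception to symbols can be sketched in Python. The bounding boxes stand in for the output of a hypothetical object detector. The geometric tests for “on” and “under” are simple hand-picked heuristics with an assumed 5-pixel tolerance, not a standard definition. The shape of the pipeline is what matters: continuous coordinates go in, and discrete relational facts that a reasoning engine could consume come out.

```python
from itertools import permutations

# Hypothetical detector output: label -> (x_min, y_min, x_max, y_max) in pixels.
# Image coordinates: y grows downward, so a larger y means lower in the picture.
detections = {
    "cup": (140, 80, 170, 120),
    "table": (50, 120, 300, 300),
    "chair": (400, 50, 500, 250),
    "cat": (420, 180, 480, 250),
}


def overlaps_horizontally(a, b):
    return a[0] < b[2] and b[0] < a[2]


def is_on(a, b, tol=5):
    # a's bottom edge rests within tol pixels of b's top edge
    return overlaps_horizontally(a, b) and abs(a[3] - b[1]) <= tol


def is_under(a, b):
    # a sits entirely within the lower half of b's box
    mid_b = (b[1] + b[3]) / 2
    return overlaps_horizontally(a, b) and a[1] >= mid_b and a[3] <= b[3]


def extract_relations(boxes):
    """Turn pairs of boxes into symbolic facts like ("on", "cup", "table")."""
    facts = set()
    for (name_a, box_a), (name_b, box_b) in permutations(boxes.items(), 2):
        if is_on(box_a, box_b):
            facts.add(("on", name_a, name_b))
        if is_under(box_a, box_b):
            facts.add(("under", name_a, name_b))
    return facts


print(sorted(extract_relations(detections)))
# [('on', 'cup', 'table'), ('under', 'cat', 'chair')]
```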

Sometimes the integration is tighter, with neural and symbolic components interwoven rather than separated. Neural networks can be guided by symbolic constraints during training, which can reduce the need for massive datasets and improve generalization. Conversely, symbolic systems can learn new rules by observing patterns discovered by neural models.
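One simple way to let a symbolic rule guide training is to add a penalty to the loss whenever the network's outputs violate the rule. This is one of several formulations proposed in the literature. The sketch below assumes a multi-label classifier that outputs probabilities for “cat” and “animal”. It encodes the rule “every cat is an animal” as a hinge penalty on P(cat) exceeding P(animal). The function names, the weighting, and the numbers are illustrative. Only the “cat” label is annotated here, yet the rule still supplies a training signal for “animal”.

```python
import math


def binary_cross_entropy(p, y, eps=1e-9):
    """Standard loss for one predicted probability p against a 0/1 label y."""
    return -(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps))


def implication_penalty(p_premise, p_conclusion):
    """Soft version of 'premise -> conclusion': penalize P(premise) exceeding P(conclusion)."""
    return max(0.0, p_premise - p_conclusion)


def total_loss(pred, labels, rule_weight=1.0):
    # Data term: only the labels that were actually annotated contribute.
    data_loss = sum(binary_cross_entropy(pred[k], y) for k, y in labels.items())
    # Rule term: "every cat is an animal", applied whether or not "animal" is labeled.
    rule_loss = implication_penalty(pred["cat"], pred["animal"])
    return data_loss + rule_weight * rule_loss


labels = {"cat": 1}                         # only "cat" was annotated
consistent = {"cat": 0.9, "animal": 0.95}  # respects the rule
violating = {"cat": 0.9, "animal": 0.2}    # confident cat, yet "not an animal"

print(total_loss(consistent, labels))
print(total_loss(violating, labels))
```

Both predictions fit the single annotated label equally well, so the gap in total loss comes entirely from the rule term. Minimizing this loss pushes the model toward predictions that are consistent with the rule.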

Applications That Bridge Learning and Logic

The potential applications of neuro-symbolic AI span many domains. In medicine, such systems could combine neural models that analyze medical images with symbolic reasoning that incorporates established medical knowledge. Instead of merely flagging a suspicious region in a scan, a neuro-symbolic system could explain why, referencing both patterns in the data and logical rules from medical practice.

In robotics, neuro-symbolic AI could allow machines to navigate complex environments more intelligently. A robot equipped with neural perception could recognize objects and people, while symbolic reasoning could help it plan tasks, follow ethical guidelines, or adapt to unexpected situations.

In education, neuro-symbolic tutors could understand both the words a student writes and the reasoning behind them, offering more personalized and meaningful feedback.

Even in law and policy, where reasoning about rules, principles, and fairness is essential, neuro-symbolic AI could support decision-making in ways that pure deep learning cannot.

The Promise of Explainability

One of the most compelling features of neuro-symbolic AI is its potential for explainability. Neural networks alone often make decisions that are difficult for humans to interpret. But when those decisions are grounded in symbolic reasoning, they can be explained in terms of rules and relationships.

For example, instead of simply outputting “This is a malignant tumor,” a neuro-symbolic system could say: “This region matches the learned pattern of a tumor, and its characteristics align with the rule that malignant tumors tend to grow irregularly and invade surrounding tissues.” This kind of explanation builds trust and accountability—qualities essential for AI systems in sensitive domains like healthcare, finance, and law.
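The following is a minimal sketch of how such an explanation might be assembled. It assumes the neural component outputs a pattern-match score and that some upstream step has already turned image characteristics into named boolean features. The feature names, the 0.8 threshold, and the single rule are hypothetical placeholders chosen to mirror the example above. They are not clinical criteria.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    conditions: list[str]  # boolean features that must all be present
    conclusion: str
    rationale: str         # human-readable statement of the rule


# Hypothetical rule mirroring the example in the text (not clinical guidance).
RULES = [
    Rule(
        conditions=["irregular_growth", "invades_surrounding_tissue"],
        conclusion="malignant",
        rationale="malignant tumors tend to grow irregularly and invade surrounding tissues",
    ),
]


def explain(pattern_score, features, threshold=0.8):
    """Combine a neural pattern-match score with symbolic rule checks into an explanation."""
    if pattern_score < threshold:
        return "No region matches the learned tumor pattern strongly enough."
    reasons = [f"This region matches the learned pattern of a tumor (score {pattern_score:.2f})"]
    verdict = "undetermined"
    for rule in RULES:
        if all(features.get(c, False) for c in rule.conditions):
            verdict = rule.conclusion
            reasons.append(f"its characteristics align with the rule that {rule.rationale}")
    return f"Verdict: {verdict}. " + ", and ".join(reasons) + "."


print(explain(0.93, {"irregular_growth": True, "invades_surrounding_tissue": True}))
```

If the score is high but no rule's conditions are met, the verdict stays “undetermined” and the explanation cites only the neural evidence. This makes the gap in justification visible instead of hiding it.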

Challenges on the Road Ahead

As promising as neuro-symbolic AI is, it is not without challenges. Integrating two fundamentally different paradigms—statistical learning and symbolic reasoning—is not straightforward. Neural networks are continuous and probabilistic, while symbolic systems are discrete and rule-based. Building a bridge between them requires innovative architectures, training methods, and representations.
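The mismatch between continuous outputs and discrete logic shows up in a few lines of Python. One common bridge, used here purely as an illustration, is fuzzy logic. The product t-norm replaces Boolean AND with multiplication of truth values in [0, 1]. The probabilities below are made-up detector confidences. If you threshold the inputs and then apply crisp AND, the result is flat almost everywhere, so it offers no gradient for training. The soft version does offer one.

```python
def crisp_and(p_a, p_b, threshold=0.5):
    """Discretize both confidences into True/False, then apply Boolean AND."""
    return p_a > threshold and p_b > threshold


def soft_and(p_a, p_b):
    """Product t-norm: a continuous relaxation of AND on truth values in [0, 1]."""
    return p_a * p_b


def numerical_derivative(f, x, h=1e-6):
    """Central finite difference, estimating how f responds to a small change in x."""
    return (f(x + h) - f(x - h)) / (2 * h)


p_dog, p_ball = 0.7, 0.6  # made-up confidences for "dog" and "ball"

print(crisp_and(p_dog, p_ball))  # True
print(soft_and(p_dog, p_ball))   # 0.42, up to floating-point rounding

# How strongly does each version react to nudging p_dog?
print(numerical_derivative(lambda x: float(crisp_and(x, p_ball)), p_dog))  # 0.0
print(numerical_derivative(lambda x: soft_and(x, p_ball), p_dog))  # close to 0.6
```

The soft result varies smoothly with each input, so a loss defined on it can be minimized by gradient descent. This is what allows logical structure to take part in neural training. The product t-norm is only one option, and other fuzzy-logic operators make different trade-offs.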

Scalability is another hurdle. While symbolic reasoning excels at structured problems, scaling it to the messy, high-dimensional realities of perception remains difficult. Similarly, ensuring that neural models produce reliable symbolic representations is an open challenge.

There are also philosophical questions. How much of intelligence can be captured by logic and learning alone? Does combining them bring us closer to true general intelligence, or are there other dimensions of cognition yet to be explored?

A Future Shaped by Neuro-Symbolic Thinking

Despite these challenges, the momentum is strong. A growing number of academic and industrial research groups are exploring neuro-symbolic AI, seeing it as a pathway to systems that are not only smarter but safer, more reliable, and more aligned with human needs.

The future of AI may well be defined by this convergence. Just as electricity transformed industries once powered by steam, neuro-symbolic AI could redefine what is possible when learning and reasoning are united. It could give rise to machines that do not merely process data, but understand it; systems that can explain their decisions, adapt to new situations, and reason ethically about their actions.

The Human Dimension

At its heart, the story of neuro-symbolic AI is not just about machines. It is about us. It reflects our attempt to capture in algorithms the dual nature of human thought—the balance of instinct and reason, pattern and logic, perception and meaning.

In building neuro-symbolic systems, we are not only creating more powerful AI. We are holding up a mirror to our own intelligence, asking what it means to know, to understand, to explain. We are building tools that may one day expand our reach, helping us solve problems from climate change to disease, from education to justice.

And perhaps, in blending learning and logic, we are not only shaping the future of machines but also deepening our understanding of ourselves.

Conclusion: Toward a More Human Intelligence

Neuro-symbolic AI is more than a technical innovation. It is a philosophical and emotional leap, an acknowledgment that intelligence is not one-dimensional but multifaceted. It is about creating machines that are not only accurate but meaningful, not only powerful but trustworthy.

By uniting the strengths of neural networks and symbolic reasoning, we move closer to an AI that reflects the richness of human thought. We move closer to systems that can learn from the world, reason about it, explain their actions, and align with human values.

The path ahead is full of challenges, but it is also full of promise. Just as the night sky has always beckoned astronomers to look upward, the mystery of intelligence now beckons us to look inward and forward. Neuro-symbolic AI is not just the best of learning and logic—it is the best of human aspiration and ingenuity, woven into the machines of tomorrow.