Imagine a world where artificial intelligence grows smarter every day, not by siphoning our personal data into distant servers, but by learning directly from the devices in our hands, the hospitals in our cities, and the businesses in our communities. This is not science fiction. This is federated learning—a new frontier in AI that redefines how machines learn while preserving the privacy and ownership of data. It is a revolution in both technology and trust, bridging the need for intelligent systems with the ethical imperative to protect individuals.

At its heart, federated learning is about collaboration without compromise. It allows multiple devices or organizations to train a shared machine learning model together without the need to pool sensitive data in one place. Each participant contributes knowledge, not raw information. In this way, federated learning empowers us to build smarter systems while respecting one of our era’s greatest concerns: data privacy.

From Centralized Learning to Federated Intelligence

For decades, the traditional approach to training artificial intelligence has been centralized. Data from millions of users is gathered, stored in massive servers, and then processed to train models. This method has driven many of the breakthroughs we take for granted today, from voice assistants that understand natural language to recommendation engines that anticipate our tastes.

But centralization carries risks. The more data that flows into one place, the greater the temptation for misuse and the higher the cost of breaches. In a world where data is as personal as fingerprints—medical histories, browsing patterns, voice recordings—the idea of funneling everything into distant servers has become a source of unease.

Federated learning emerged as a response to this tension. Instead of pulling all data into the cloud, it turns the process inside out. The AI model travels to where the data lives, learns from it locally, and returns only an updated version of the model's parameters. The raw information never leaves the device, so privacy is built into the design rather than added afterward.

The Spark of an Idea

The seeds of federated learning were sown in the mid-2010s when researchers at Google sought a way to improve predictive text on smartphones without compromising user privacy. They realized that while millions of users typed daily on their keyboards, uploading entire typing histories to servers was neither efficient nor ethical. What if the training process could happen directly on the devices?

The solution was elegant: send the model to the phone, train it with the local data, and then send back only the refined updates. When aggregated across millions of devices, these updates produced a powerful, global model—one that grew smarter without ever seeing the raw keystrokes. This was federated learning’s first major success, and it has since expanded into fields far beyond mobile keyboards.

How Federated Learning Works

To understand federated learning, imagine a classroom where students are solving math problems. Instead of each student handing their notebook to the teacher, every student works through the problems at their own desk and passes along only what they learned: a short note on which methods worked. The teacher compiles these notes into a single master notebook and shares it back with the class. No student ever has to reveal their full work; only the insights are shared.

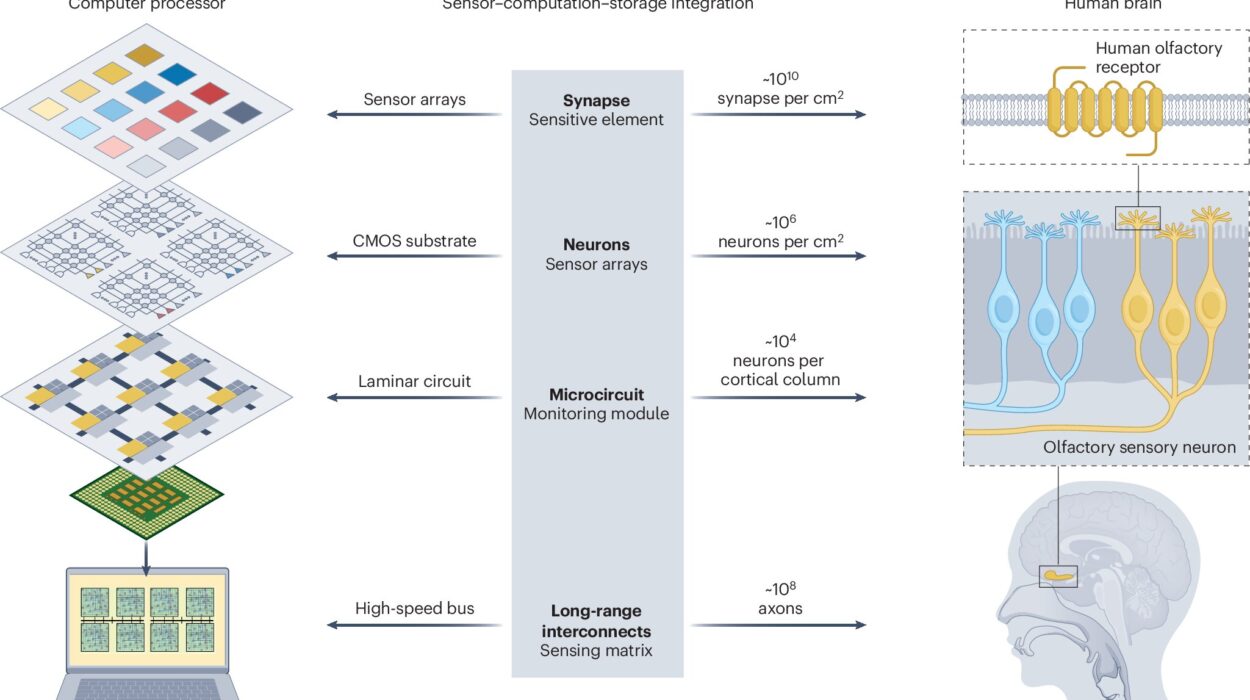

In practice, federated learning follows a cycle. A global model is initialized by a central server and sent to participating devices or organizations. Each participant trains the model on its local data, making improvements based on its own patterns. What comes back is not raw data but a mathematical update, often in the form of gradients or updated weights. The central server aggregates these updates, typically by averaging them with more weight given to participants that trained on more data, and uses the result to refine the global model. This cycle repeats over many rounds, with the model generally becoming more accurate over time.
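The cycle above can be sketched in a few lines of Python. This is a minimal illustration, not a production implementation: it assumes a toy linear model `y = w*x + b`, synthetic data for three hypothetical clients, and a sample-count-weighted average in the style of federated averaging. The function names (`local_train`, `federated_average`, `make_client_data`) and all hyperparameters are made up for this example.

```python
import random


def local_train(weights, data, lr=0.05, epochs=5):
    """Run a few epochs of gradient descent on one client's local data."""
    w, b = weights
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, y in data:
            err = (w * x + b) - y
            grad_w += 2 * err * x / len(data)
            grad_b += 2 * err / len(data)
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(client_weights, client_sizes):
    """Average client models, weighting each by its number of samples."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return (w, b)


def make_client_data(n, rng):
    """Synthetic local dataset (assumption): y = 3x + 1 plus small noise."""
    data = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        data.append((x, 3.0 * x + 1.0 + rng.gauss(0.0, 0.01)))
    return data


rng = random.Random(0)
# Three clients holding different amounts of data; the server never reads these lists.
clients = [make_client_data(n, rng) for n in (20, 50, 80)]

global_weights = (0.0, 0.0)  # the server initializes the global model
for round_idx in range(200):
    # Each client trains locally, starting from the current global model.
    updates = [local_train(global_weights, data) for data in clients]
    # The server only sees the returned weights and the sample counts.
    global_weights = federated_average(updates, [len(d) for d in clients])

print("learned w, b:", global_weights)
```

Only the `(w, b)` pairs and sample counts cross the client boundary here. Real systems also have to handle client sampling, dropped connections, and much larger models, none of which this sketch attempts.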

Much of the protection comes from aggregation. The server needs only the combined update, not any individual contribution, which makes it harder to trace what the model learned back to one participant. Techniques such as differential privacy and secure multiparty computation add further layers of protection, limiting what can be inferred about any single participant even from the aggregated result.
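One way to see how a server can compute a sum without seeing the individual terms is pairwise masking, an idea used in secure aggregation protocols. The sketch below is a simplified illustration under strong assumptions: updates are already quantized to integers, every client stays online, and the masks come from one seeded generator for reproducibility. In a real protocol each pair of clients would derive its shared mask through a key exchange, and dropouts would need extra handling. All names here are hypothetical.

```python
import random

MODULUS = 2**32  # arithmetic is done modulo a fixed value so masks wrap around


def pairwise_masks(num_clients, dim, seed=0):
    """Build one mask per client such that all masks sum to zero (mod MODULUS)."""
    rng = random.Random(seed)
    masks = [[0] * dim for _ in range(num_clients)]
    for i in range(num_clients):
        for j in range(i + 1, num_clients):
            # Clients i and j share a random vector: i adds it, j subtracts it.
            shared = [rng.randrange(MODULUS) for _ in range(dim)]
            for k in range(dim):
                masks[i][k] = (masks[i][k] + shared[k]) % MODULUS
                masks[j][k] = (masks[j][k] - shared[k]) % MODULUS
    return masks


def mask_update(update, mask):
    """What a client actually sends: its update hidden behind its mask."""
    return [(u + m) % MODULUS for u, m in zip(update, mask)]


def aggregate(masked_updates):
    """Server-side sum; the pairwise masks cancel, leaving the true total."""
    dim = len(masked_updates[0])
    return [sum(mu[k] for mu in masked_updates) % MODULUS for k in range(dim)]


# Integer-quantized updates from three hypothetical clients.
updates = [[1, 2, 3], [10, 20, 30], [100, 200, 300]]
masks = pairwise_masks(len(updates), dim=3)
masked = [mask_update(u, m) for u, m in zip(updates, masks)]
total = aggregate(masked)
print(total)  # the element-wise sum of the original updates
```

Each masked vector on its own looks like random numbers to the server, yet the sum is exact because every shared mask is added once and subtracted once.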

Why Federated Learning Matters

The significance of federated learning is profound because it addresses two pressing demands of our era: the hunger for smarter AI and the necessity of privacy. In an age where digital trust is fragile, technologies that prioritize both intelligence and protection are not just innovations—they are necessities.

Federated learning matters because it shifts the balance of power. It allows individuals, organizations, and societies to benefit from AI without surrendering their most personal resource: their data. This balance is crucial in healthcare, finance, government, and education, where sensitive information is both invaluable for training models and deeply private.

Federated Learning in Healthcare

Few fields illustrate the promise of federated learning more vividly than healthcare. Medical data is among the most sensitive information a person can have. Yet it is also among the most valuable for building AI systems that can detect diseases, recommend treatments, and predict outcomes. Traditional centralized training often stumbles on regulatory barriers like HIPAA in the United States or GDPR in Europe.

Federated learning offers a way forward. Imagine hospitals across the world training a shared model to detect rare cancers from medical images. Each hospital keeps its data within its secure systems. The global model learns from patterns across all hospitals, improving diagnostic accuracy, but no single patient’s scan ever leaves the premises.

This approach not only preserves privacy but also overcomes the problem of fragmented datasets. Rare diseases, by definition, have few examples at any one institution. By federating the learning across many, AI models can finally gain the breadth needed to recognize subtle and uncommon conditions.

Beyond Medicine: Finance, Industry, and Everyday Life

The reach of federated learning extends far beyond hospitals. In finance, it allows banks to detect fraudulent transactions by learning from patterns across institutions without exposing customer data. Each bank’s data remains secure, but the collective intelligence grows sharper.

In industry, federated learning enables manufacturers to optimize processes across multiple factories without centralizing proprietary production data. In telecommunications, mobile carriers can improve network performance by learning from device data while keeping customer activity private.

Even in daily life, federated learning is already at work. Smartphones refine speech recognition, predictive typing, and personalized recommendations using local data that never leaves the device. Each improvement feels seamless to the user, yet behind the scenes, federated learning orchestrates a symphony of local contributions into a global intelligence.

The Challenges Along the Path

While federated learning is transformative, it is not without challenges. Training models across distributed systems introduces new technical complexities. Devices may have varying computational power, unreliable internet connections, or differing amounts of data. Coordinating learning across this uneven landscape requires sophisticated algorithms.

Privacy, while central to the design, is not absolute. Model updates can leak information: attacks such as gradient inversion and membership inference try to reconstruct or identify training data from the updates a participant sends. To guard against this, researchers employ techniques such as differential privacy, which adds calibrated random noise to updates, and secure aggregation protocols, which keep individual contributions hidden from the server so that only their combined result is revealed.
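As a rough illustration of the differential privacy step, a common pattern is to clip each update to a maximum L2 norm and then add Gaussian noise scaled to that bound. The sketch below shows only this mechanical step. The names `clip_norm` and `noise_multiplier` and their values are assumptions chosen for the example, and the actual privacy guarantee depends on accounting across rounds, which is not shown.

```python
import math
import random


def clip_update(update, clip_norm):
    """Scale the update down so its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [v * scale for v in update]


def add_gaussian_noise(update, clip_norm, noise_multiplier, rng):
    """Add zero-mean Gaussian noise with std = noise_multiplier * clip_norm."""
    sigma = noise_multiplier * clip_norm
    return [v + rng.gauss(0.0, sigma) for v in update]


rng = random.Random(42)
raw_update = [3.0, 4.0]  # L2 norm is 5.0, above the clipping bound
clipped = clip_update(raw_update, clip_norm=1.0)
noisy = add_gaussian_noise(clipped, clip_norm=1.0, noise_multiplier=0.5, rng=rng)

print("clipped norm:", math.sqrt(sum(v * v for v in clipped)))
print("noisy update:", noisy)
```

Clipping bounds how much any single participant can move the model, and the noise masks what remains of that influence. Larger noise gives stronger protection at some cost in accuracy.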

Another challenge is fairness. Because federated learning aggregates across participants, there is a risk that models might favor the majority and underperform on underrepresented groups. Ensuring inclusivity and equity requires careful design, so that AI does not merely reflect existing biases but actively counteracts them.

The Future of Federated Learning

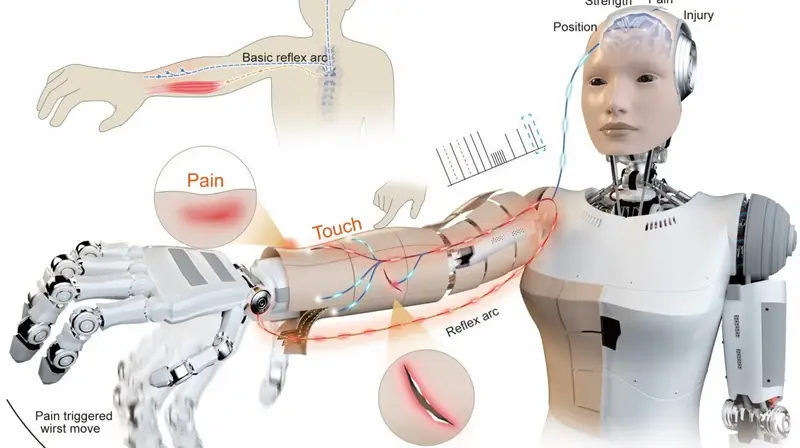

The future of federated learning is rich with possibility. As edge devices—from smartphones to smartwatches, from sensors to autonomous cars—proliferate, the potential to train models collaboratively will only grow. Instead of relying solely on massive centralized data centers, AI may evolve into a decentralized ecosystem, where intelligence emerges from billions of connected nodes.

Imagine self-driving cars sharing knowledge of road hazards in real time, without ever exposing drivers’ personal routes. Picture wearable health monitors that collaborate to detect early signs of epidemics without sending intimate medical readings to central servers. Consider educational platforms that adapt to diverse learning styles across schools worldwide, while respecting the privacy of every child.

In this vision, federated learning becomes not just a technical framework but a societal infrastructure—a way for humanity to build collective intelligence while safeguarding individuality.

Federated Learning and Human Values

Perhaps the most compelling aspect of federated learning is its alignment with human values. Technology has often demanded trade-offs: convenience at the expense of privacy, intelligence at the cost of autonomy. Federated learning challenges this narrative. It proposes that we can have both—that we can build powerful AI systems without eroding trust, that we can learn collectively without surrendering control.

This alignment matters because trust is the currency of the digital age. Without it, innovation stalls. With it, the potential for collaboration and discovery expands. Federated learning embodies the principle that technology should serve humanity, not exploit it.

A World of Shared Learning Without Shared Data

At its essence, federated learning redefines what it means to share knowledge. In traditional systems, sharing meant giving up control, handing over data to centralized authorities. Federated learning decouples knowledge from possession. It allows us to contribute to global intelligence without exposing personal truths.

This paradigm resonates beyond technology. It mirrors the way societies themselves grow—individuals retain their identities, yet through collaboration, collective wisdom emerges. Federated learning, in this sense, is not just an algorithmic innovation but a philosophical one. It teaches us that we can build common ground without erasing individuality.

Conclusion: Intelligence With Integrity

Federated learning is more than a technical milestone in artificial intelligence. It is a vision of how we might reconcile progress with principles, innovation with privacy, collaboration with autonomy. It allows AI to grow not by consuming everything in its path, but by listening, learning, and respecting the boundaries of those who contribute.

In a world increasingly shaped by data, federated learning offers a hopeful model: intelligence with integrity. It promises a future where AI is not built on the extraction of personal information, but on the respectful exchange of insights. It is a reminder that the most powerful technologies are those that do not force us to choose between what we need and what we value, but instead weave them together.

Federated learning is not just about training AI without sharing data. It is about reimagining what it means to learn in the digital age—together, securely, and with dignity.