In an era defined by artificial intelligence and the mass exchange of digital information, privacy concerns have reached new levels of complexity. Data is no longer simply personal; it is algorithmic, statistical, and predictive. The datasets used to train AI models—ranging from natural language processing corpora to medical imagery and financial data—represent some of the most valuable and sensitive assets in the modern digital economy. For organizations and individuals working in machine learning, the question of how to protect this data from unwanted surveillance, interception, or analysis has become increasingly urgent. One of the most common solutions proposed for maintaining data privacy during AI development is the use of a Virtual Private Network (VPN).

A VPN is widely understood as a tool that conceals a user’s online activity from Internet Service Providers (ISPs) and other intermediaries by encrypting network traffic and routing it through secure servers. However, the question remains: does a VPN truly hide your AI training data from ISPs? While the intuitive answer might be “yes,” the reality is far more nuanced. VPNs offer strong encryption and obfuscation capabilities, but they operate within specific boundaries. AI data pipelines are complex systems involving multiple endpoints, protocols, and layers of data handling. The protection a VPN offers depends not only on its encryption but also on the broader architecture of your machine learning infrastructure, including data sourcing, preprocessing, training, and deployment.

This article explores the technical truth behind this question in depth. We will examine what a VPN can and cannot do in the context of AI training data privacy, how ISPs handle encrypted traffic, what other entities can still infer from your data streams, and which additional security layers are needed to ensure comprehensive data protection in AI research and development.

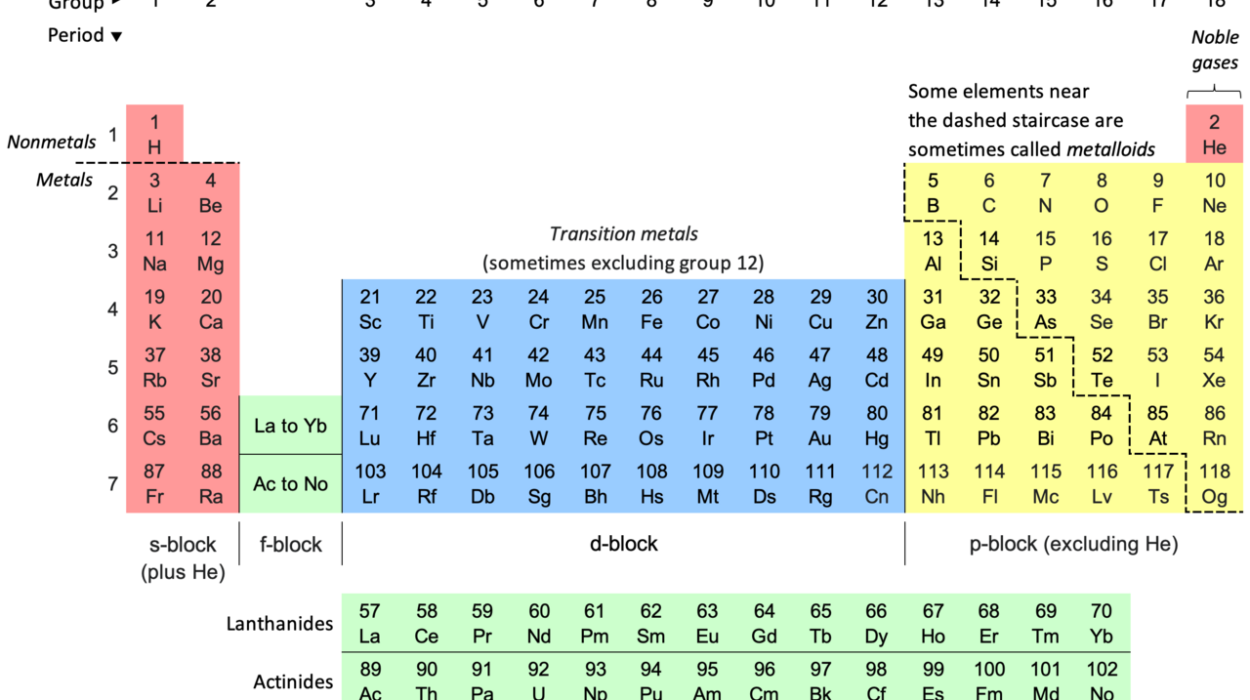

Understanding the Nature of AI Training Data

To understand whether a VPN can truly hide AI training data, one must first understand what AI training data consists of and how it flows across networks. Training data can be structured, such as numerical records or sensor readings; semi-structured, like logs or XML files; or unstructured, such as text, audio, video, and images. These datasets can range from gigabytes to petabytes in size, often stored across distributed cloud systems or local clusters.

When AI engineers train models, they typically need to move data between local machines, cloud storage, and compute nodes. For instance, a company may download datasets from a data provider’s API, preprocess them on local servers, and then upload them to a GPU cluster hosted on a cloud platform like AWS, Google Cloud, or Azure. During each of these steps, data travels over the public internet unless encapsulated within a private or dedicated network.

ISPs, as intermediaries, facilitate this data transmission. Every packet leaving a user’s local network must pass through an ISP before reaching its destination. This means that, without encryption, ISPs can observe the content of transmitted packets, monitor metadata such as IP addresses, ports, and timing information, and even infer the nature of user activities through traffic analysis.
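To make the metadata point concrete, the sketch below parses the fixed header fields that any network on the path can read from an IPv4/TCP packet. It uses only Python's standard library. The sample packet, its addresses (taken from the RFC 5737 documentation ranges), and the helper names are hypothetical. This minimal parser does not handle IPv6, UDP, or malformed input. TLS would hide a payload, but not these headers.

```python
import ipaddress
import struct


def build_sample_packet() -> bytes:
    """Build a hypothetical 40-byte IPv4/TCP SYN packet (no payload)."""
    ip_header = struct.pack(
        "!BBHHHBBH4s4s",
        0x45,  # version 4, header length 5 x 32-bit words
        0,     # DSCP/ECN
        40,    # total length: 20-byte IP header + 20-byte TCP header
        0, 0,  # identification, flags/fragment offset
        64,    # TTL
        6,     # protocol number 6 = TCP
        0,     # header checksum (left as zero in this sketch)
        ipaddress.IPv4Address("192.0.2.10").packed,     # source (client)
        ipaddress.IPv4Address("198.51.100.20").packed,  # destination (e.g. a cloud endpoint)
    )
    tcp_header = struct.pack(
        "!HHLLBBHHH",
        51512, 443,   # source port, destination port (443 = HTTPS)
        0, 0,         # sequence and acknowledgment numbers
        0x50,         # data offset: 5 x 32-bit words
        0x02,         # flags: SYN
        65535, 0, 0,  # window, checksum, urgent pointer
    )
    return ip_header + tcp_header


def parse_ipv4_tcp_metadata(packet: bytes) -> dict:
    """Return header fields that are readable without decrypting anything."""
    version_ihl = packet[0]
    ihl_bytes = (version_ihl & 0x0F) * 4  # IP header length in bytes
    (total_length,) = struct.unpack("!H", packet[2:4])
    protocol = packet[9]
    meta = {
        "version": version_ihl >> 4,
        "total_length": total_length,
        "protocol": protocol,
        "src": str(ipaddress.IPv4Address(packet[12:16])),
        "dst": str(ipaddress.IPv4Address(packet[16:20])),
    }
    if protocol == 6:  # TCP ports sit in the first 4 bytes after the IP header
        src_port, dst_port = struct.unpack("!HH", packet[ihl_bytes:ihl_bytes + 4])
        meta["src_port"], meta["dst_port"] = src_port, dst_port
    return meta


meta = parse_ipv4_tcp_metadata(build_sample_packet())
print(meta["dst"], meta["dst_port"])  # 198.51.100.20 443
```

With a VPN active, the same parser applied to the outer packet would report the VPN server's address as the destination. The original header travels inside the encrypted tunnel.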

AI training data often includes sensitive information—personally identifiable data, proprietary business data, or domain-specific content that represents intellectual property. Therefore, organizations must ensure that ISPs or other intermediaries cannot access or infer details from this traffic.

How VPNs Work at the Network Level

A Virtual Private Network is a secure communication channel established between a user’s device and a VPN server. When enabled, a VPN client encrypts outgoing internet traffic using cryptographic protocols such as OpenVPN, WireGuard, or IKEv2/IPsec. This encrypted traffic is then routed through a VPN server, which decrypts it and forwards it to the intended destination on the user’s behalf. The destination server, whether it is a cloud API, data storage platform, or compute node, sees only the VPN server’s IP address rather than the user’s original IP.
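The encapsulation and address rewriting described above can be modeled in a few lines. This is a conceptual sketch, not a VPN implementation. The `Sealed` type stands in for an encrypted blob, and no real cryptography is performed. The key identifier is a hypothetical placeholder for negotiated session keys, and the addresses come from documentation ranges.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Sealed:
    """Opaque stand-in for ciphertext; only a holder of `key_id` may open it."""
    key_id: str
    contents: Packet


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    payload: Union[bytes, Sealed]


def client_encapsulate(inner: Packet, client_ip: str, vpn_ip: str, key_id: str) -> Packet:
    # The whole original packet, including its real destination, goes inside the tunnel.
    return Packet(src=client_ip, dst=vpn_ip, payload=Sealed(key_id, inner))


def server_forward(outer: Packet, vpn_ip: str, key_id: str) -> Packet:
    sealed = outer.payload
    if not isinstance(sealed, Sealed) or sealed.key_id != key_id:
        raise ValueError("cannot open tunnel payload")
    inner = sealed.contents
    # NAT-style rewrite: the destination sees the VPN server as the source.
    return Packet(src=vpn_ip, dst=inner.dst, payload=inner.payload)


def isp_view(p: Packet) -> dict:
    # An on-path observer reads the outer headers; a Sealed payload stays opaque.
    return {"src": p.src, "dst": p.dst, "payload_visible": isinstance(p.payload, bytes)}


CLIENT, VPN, CLOUD = "192.0.2.10", "203.0.113.5", "198.51.100.20"
original = Packet(CLIENT, CLOUD, b"training batch 0001")
tunneled = client_encapsulate(original, CLIENT, VPN, key_id="session-1")
forwarded = server_forward(tunneled, VPN, key_id="session-1")
print(isp_view(tunneled))   # {'src': '192.0.2.10', 'dst': '203.0.113.5', 'payload_visible': False}
print(isp_view(forwarded))  # {'src': '203.0.113.5', 'dst': '198.51.100.20', 'payload_visible': True}
```

The second line shows what leaves the VPN server. The payload is whatever the client originally sent, so it is plaintext unless the application encrypted it.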

From an ISP’s perspective, all it can see is an encrypted stream of data traveling between the user and the VPN server. The ISP cannot read the contents of the traffic or easily determine which websites or services are being accessed. Modern VPN protocols also authenticate every packet. As a result, the ISP cannot alter AI training data in transit without the modification being detected, although it can still delay or drop the traffic.

However, this encryption only protects the data while it is in transit between the client and the VPN endpoint. Once the traffic reaches the VPN server and is decrypted for onward transmission to its final destination, it is potentially visible to other intermediaries. For example, if the connection between the VPN server and the cloud AI platform is not itself encrypted via HTTPS or another secure protocol, that segment of the data path remains exposed.

In essence, VPNs provide encryption and privacy on the “first mile” of the network path, the portion between the user’s system and the VPN gateway. They do not inherently secure the remaining segment between the VPN server and the destination. That segment is protected only if the services you access also encrypt traffic end to end, for example with TLS.
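The segment-by-segment exposure can be summarized as a small lookup. This is a simplified toy model with three assumptions: the party labels are hypothetical, TLS runs end to end between the client and the destination with properly validated certificates, and only payload contents (not metadata) are considered.

```python
from __future__ import annotations


def plaintext_observers(use_vpn: bool, use_tls: bool) -> set[str]:
    """Return the parties positioned to read the payload on some segment of the path."""
    if use_vpn:
        segments = [
            # (segment, parties that can observe it, protected by the VPN tunnel?)
            ("client -> VPN server", {"client ISP"}, True),
            ("inside VPN server", {"VPN provider"}, False),
            ("VPN server -> destination", {"VPN upstream ISP", "transit networks"}, False),
        ]
    else:
        segments = [("client -> destination", {"client ISP", "transit networks"}, False)]

    observers: set[str] = set()
    for _segment, parties, tunneled in segments:
        # End-to-end TLS covers every segment; otherwise only the tunnel hop is covered.
        if not (use_tls or tunneled):
            observers |= parties
    return observers


for vpn in (False, True):
    for tls in (False, True):
        print(f"vpn={vpn!s:5} tls={tls!s:5} ->", sorted(plaintext_observers(vpn, tls)))
```

In this model, a VPN alone moves the plaintext exposure from your ISP to the VPN provider and the networks beyond it. Only end-to-end TLS removes the exposure for every segment.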

What ISPs Can and Cannot See When You Use a VPN

To evaluate the privacy guarantees of VPNs, it is essential to distinguish between what ISPs can and cannot see when a VPN is active. ISPs can still observe that you are connected to a VPN service. They can see the IP address of the VPN server, the amount of data being transmitted, and timing information such as when the connection starts and ends. They cannot, however, see the contents of your encrypted packets or the final destinations of the data beyond the VPN. This holds only if DNS queries are also routed through the tunnel. A misconfigured client that sends DNS lookups to the ISP’s resolver leaks the names of the services you contact.

This distinction is critical in the context of AI training data. Suppose you are transferring a large dataset to a cloud training cluster while connected to a VPN. The ISP will see a continuous stream of encrypted traffic between your computer and the VPN server. The volume and frequency of the data might suggest large file transfers, but the actual contents—images, text, or numerical data—remain unreadable.

However, ISPs can still perform traffic analysis. Even without decrypting packets, they can infer patterns from metadata such as packet size, frequency, and timing. Suppose your training data transfers follow a predictable pattern, such as a nightly synchronization of large datasets. An ISP could recognize that recurring pattern even though it cannot inspect the data itself. In adversarial settings such as corporate espionage or state-level surveillance, this kind of metadata analysis can be valuable to an attacker.
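As an illustration of traffic analysis on metadata alone, the sketch below flags large transfers that recur on a regular schedule. It assumes the observer has per-connection flow records of `(start_time_seconds, byte_count)`, which an ISP can see even when payloads are encrypted. The function name, the 1 GB size threshold, and the 5% tolerance are hypothetical choices, not a standard detection method.

```python
from statistics import mean, pstdev


def detect_periodic_bulk_transfers(flows, min_bytes=10**9, tolerance=0.05):
    """Return the estimated period (seconds) of recurring large transfers, or None.

    flows: iterable of (start_time_seconds, byte_count) for one encrypted connection.
    """
    starts = sorted(t for t, size in flows if size >= min_bytes)
    if len(starts) < 3:  # need at least two gaps to judge regularity
        return None
    gaps = [later - earlier for earlier, later in zip(starts, starts[1:])]
    period = mean(gaps)
    # A fixed schedule produces gaps that barely deviate from their mean.
    return period if pstdev(gaps) <= tolerance * period else None


DAY = 86_400
jitter = [0, 60, -30, 45, 0, -60, 30]  # seconds of scheduling noise per night
nightly = [(d * DAY + 2 * 3600 + j, 50 * 10**9) for d, j in enumerate(jitter)]  # ~02:00, 50 GB
background = [(d * DAY + 14 * 3600, 2 * 10**6) for d in range(7)]  # small daytime flows
period = detect_periodic_bulk_transfers(nightly + background)
print(round(period / 3600, 2) if period else None)  # 24.0
```

Nothing in this input depends on payload contents. The schedule alone gives the workload away.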

Furthermore, while ISPs cannot decrypt VPN traffic, they may collaborate with or be compelled by regulatory bodies to log metadata. In some jurisdictions, ISPs are required to retain connection records that could reveal when and how often a user connected to a VPN. These records could, under certain legal conditions, be used to correlate activities with other network-level observations.

The Role of Encryption Protocols in Protecting AI Data

VPNs rely on encryption protocols to secure traffic. The strength of that protection depends heavily on the choice of protocol and the quality of its implementation. Common protocols include:

- OpenVPN, which uses TLS for authentication and key exchange.
- WireGuard, which employs modern cryptographic primitives such as Curve25519, ChaCha20, and Poly1305.
- IPsec, which operates at the network layer.

For AI data protection, the encryption algorithm’s robustness is critical. Strong encryption ensures that even if packets are intercepted, they remain unintelligible. However, encryption does not eliminate metadata leakage entirely. The size, timing, and frequency of encrypted packets can still reveal information about user activity patterns.
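One common mitigation for size-based leakage is to pad payloads to fixed size buckets before they are encrypted. Many different true lengths then produce the same observable length. The sketch below shows the idea with a hypothetical 4,096-byte bucket and a 4-byte length prefix, so the receiver can strip the padding. It does nothing about timing or volume patterns, and it trades bandwidth for privacy.

```python
import struct

HEADER = struct.Struct("!I")  # 4-byte big-endian length prefix


def pad(payload: bytes, bucket: int = 4096) -> bytes:
    """Frame the payload with its true length, then zero-fill to a bucket multiple."""
    framed = HEADER.pack(len(payload)) + payload
    target = -(-len(framed) // bucket) * bucket  # ceiling division
    return framed + b"\x00" * (target - len(framed))


def unpad(padded: bytes) -> bytes:
    (length,) = HEADER.unpack_from(padded)
    return padded[HEADER.size:HEADER.size + length]


for size in (100, 2_000, 4_000, 5_000):
    print(size, "->", len(pad(b"x" * size)))  # 4096, 4096, 4096, 8192
```

An observer watching the encrypted output would see only two lengths for these four payloads.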

When transmitting large AI datasets, organizations often use additional encryption layers on top of VPNs. For example, they might employ HTTPS, SSH tunnels, or SFTP for data transfers. These protocols ensure end-to-end encryption from the source to the destination, meaning that even if the VPN decrypts data at its exit point, the payload remains protected.
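The layering argument can be demonstrated with two independent encryption layers:

- An application layer, whose keys only the client and the destination share.
- A tunnel layer, whose keys the client shares with the VPN server.

To stay within Python's standard library, the sketch uses a toy encrypt-then-MAC construction: an HMAC-SHA256 keystream in counter mode plus an HMAC-SHA256 tag. It is for illustration only and is not a substitute for TLS, SFTP, or a vetted AEAD cipher such as AES-GCM or ChaCha20-Poly1305. Key exchange is not modeled; the keys are simply assumed to be pre-shared.

```python
from __future__ import annotations

import hashlib
import hmac
import secrets

NONCE_LEN, TAG_LEN = 16, 32


def new_keys() -> tuple[bytes, bytes]:
    """Independent encryption and MAC keys for one layer (toy construction)."""
    return secrets.token_bytes(32), secrets.token_bytes(32)


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    # HMAC-SHA256 used as a PRF in counter mode to produce `length` keystream bytes.
    blocks = [
        hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256).digest()
        for counter in range(-(-length // 32))
    ]
    return b"".join(blocks)[:length]


def seal(enc_key: bytes, mac_key: bytes, plaintext: bytes) -> bytes:
    nonce = secrets.token_bytes(NONCE_LEN)
    ks = _keystream(enc_key, nonce, len(plaintext))
    ciphertext = bytes(p ^ k for p, k in zip(plaintext, ks))
    tag = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()  # encrypt-then-MAC
    return nonce + ciphertext + tag


def open_sealed(enc_key: bytes, mac_key: bytes, blob: bytes) -> bytes:
    nonce, ciphertext, tag = blob[:NONCE_LEN], blob[NONCE_LEN:-TAG_LEN], blob[-TAG_LEN:]
    expected = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("authentication failed: data was modified or keys are wrong")
    ks = _keystream(enc_key, nonce, len(ciphertext))
    return bytes(c ^ k for c, k in zip(ciphertext, ks))


app_keys = new_keys()     # shared only by the client and the destination (like TLS/SFTP)
tunnel_keys = new_keys()  # shared by the client and the VPN server
record = b"sample training record"

outer = seal(*tunnel_keys, seal(*app_keys, record))   # app layer inside the tunnel layer
at_vpn_exit = open_sealed(*tunnel_keys, outer)        # VPN removes only its own layer
print(record in at_vpn_exit)                          # False: still app-layer ciphertext
print(open_sealed(*app_keys, at_vpn_exit) == record)  # True: only the destination can read it
```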

Ultimately, VPNs should be seen as one component of a layered security architecture. They keep traffic confidential between your device and the VPN server and hide its final destinations from your ISP. They cannot, however, guarantee data security throughout the entire AI pipeline.

Data Movement in AI Workflows and Its Security Implications

AI workflows are inherently distributed. Data rarely resides in one place. It is collected from multiple sources, preprocessed on different servers, and then used for training models on high-performance computing clusters. These operations often occur across geographically dispersed data centers.

When AI researchers rely on public or commercial networks to transfer data between these environments, each transmission represents a potential vulnerability. VPNs mitigate this risk by encrypting the data in transit, but they cannot control how data is stored, accessed, or processed once it reaches its destination.

For example, suppose an AI engineer uploads training data to a cloud platform through a VPN. The data is encrypted during transit but becomes subject to the cloud provider’s internal security policies once uploaded. If the provider’s storage systems are compromised, the VPN offers no protection. Therefore, true AI data privacy requires both network-level security and application-level encryption. One example is encrypting datasets on the client before upload, so that stored copies remain unreadable without the organization’s keys.

In secure AI pipelines, encryption should be applied at multiple layers: data should be encrypted at rest, during transmission, and, ideally, during processing. Techniques such as homomorphic encryption and secure multi-party computation are emerging as ways to allow computation on encrypted data without revealing its contents, offering future avenues for privacy-preserving AI.
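Secure multi-party computation can sound abstract. One of its simplest building blocks, additive secret sharing, can be shown on a secure sum. In this hypothetical scenario, several data owners each split a private count into random shares for three compute servers. Each server sees only uniformly random numbers, yet the servers' combined local sums reveal the total. The sketch assumes honest-but-curious servers that do not collude, and it uses a Mersenne prime as the field modulus. It is not homomorphic encryption, which is a different technique.

```python
from __future__ import annotations

import secrets

PRIME = 2**61 - 1  # field modulus (a Mersenne prime); inputs must lie in [0, PRIME)


def share(value: int, n_parties: int) -> list[int]:
    """Split `value` into n random shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def reconstruct(shares) -> int:
    return sum(shares) % PRIME


def secure_sum(private_inputs, n_servers: int = 3) -> int:
    per_server = [0] * n_servers
    for value in private_inputs:
        # Server j receives only the j-th share of each input: a uniformly random number.
        for j, s in enumerate(share(value, n_servers)):
            per_server[j] = (per_server[j] + s) % PRIME
    # Each server publishes only its local sum; combining them yields the total.
    return reconstruct(per_server)


print(secure_sum([120, 340, 75]))  # 535
```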

The Myth of Total Anonymity with VPNs

VPNs are often marketed as tools for total anonymity, but this claim does not hold up under technical scrutiny. VPNs can hide the destinations of your traffic from your ISP and your real IP address from the websites and services you reach, but they do not make you invisible. The VPN provider itself can, in theory, see your traffic after decryption at the exit node. Therefore, the security of your AI training data depends on the trustworthiness and privacy practices of your VPN provider.

If the VPN provider logs traffic or metadata, those records could expose your activities. A compromised or malicious VPN provider could inspect decrypted traffic as it exits their servers. This risk is greatest when the connection to the final destination is not secured with TLS or another encryption layer. Some VPN services claim to operate “no-log” policies, but verifying these claims can be difficult without independent third-party audits.

Furthermore, sophisticated network analysis techniques can sometimes correlate encrypted VPN traffic with external events. If an adversary observes network patterns at both the VPN ingress and the target destination, they can correlate the two data streams to infer activity timing, effectively deanonymizing the user. While this is an advanced and resource-intensive attack, it underscores that VPNs are not infallible shields.
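A simplified version of such a correlation attack compares byte counts per time bin on both sides of the VPN. It then searches for the delay at which the two series line up best. The sketch assumes the adversary already has both binned series. The sample numbers are invented for illustration, and real attacks use more robust statistics over far longer observations.

```python
def pearson(x, y) -> float:
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return 0.0 if vx == 0 or vy == 0 else cov / (vx * vy) ** 0.5


def best_lag(ingress, egress, max_lag: int = 5):
    """Return (lag_in_bins, correlation) maximizing correlation of egress shifted by lag."""
    best = (0, float("-inf"))
    for lag in range(max_lag + 1):
        n = min(len(ingress), len(egress) - lag)
        if n < 3:
            continue
        r = pearson(ingress[:n], egress[lag:lag + n])
        if r > best[1]:
            best = (lag, r)
    return best


# Bytes per 1-second bin (illustrative): user -> VPN, and VPN -> destination.
ingress = [5, 80, 3, 60, 7, 90, 2, 4, 70, 1]
egress = [0, 0] + [b + 1 for b in ingress[:-2]]  # same bursts, delayed 2 bins, plus overhead
lag, r = best_lag(ingress, egress)
print(lag, round(r, 3))  # 2 1.0
```

Encryption changes none of the quantities used here. Only padding, batching, or cover traffic would blur the match.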

VPNs vs. Dedicated Private Networks for AI Research

In large-scale AI research environments, organizations often rely not on consumer VPNs but on dedicated private networks or enterprise VPNs with managed encryption policies. These networks establish secure tunnels between corporate data centers and cloud providers, ensuring end-to-end control over data movement.

Dedicated private networks, such as AWS Direct Connect or Google Cloud Interconnect, bypass the public internet entirely, reducing exposure to ISP-level monitoring. Consumer VPNs route traffic through a third-party provider’s servers. These links, by contrast, are provisioned directly between the organization and the cloud provider, which provides stronger guarantees of isolation and performance. Such links are not encrypted by default, however. Organizations typically add IPsec or MACsec on top when confidentiality is required.

For AI training pipelines that handle regulated or proprietary data, dedicated connections or virtual private clouds (VPCs) are generally preferred. They provide network isolation, consistent latency, and strict access control, ensuring that AI training data never traverses untrusted networks.

Regulatory and Legal Considerations

Data privacy regulations such as the General Data Protection Regulation (GDPR) in the European Union, the California Consumer Privacy Act (CCPA), and other regional frameworks impose strict requirements on how data—especially personal data—can be transmitted and stored. Using a VPN may help meet certain compliance requirements by encrypting data in transit, but it does not guarantee full legal protection.

Organizations handling AI training data that includes personal information must ensure that encryption keys, storage policies, and access controls comply with data protection standards. Furthermore, the use of VPNs can introduce jurisdictional complexities. If a VPN routes data through servers located in different countries, it may inadvertently expose the data to foreign legal jurisdictions. This is particularly critical for AI applications that rely on sensitive datasets, such as medical or biometric data.

VPNs thus provide technical privacy but not necessarily regulatory compliance. Comprehensive data protection requires a combination of legal, organizational, and technical controls.

Alternatives and Complements to VPNs

While VPNs are powerful tools, they are only one part of the data privacy toolkit. Other technologies can complement or even surpass VPNs in specific contexts. End-to-end encryption ensures that only the communicating parties can decrypt the data, regardless of intermediate networks. Secure Shell (SSH) tunnels provide similar transport-layer encryption for command-line and file transfer operations.

For distributed AI systems, technologies such as zero-trust networking, private data enclaves, and federated learning offer additional layers of protection. Federated learning allows AI models to be trained across multiple devices or servers without sharing raw data. Only model updates cross the network, which greatly reduces exposure to interception. Those updates can still leak some information, however, so they should themselves be protected.
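The core loop of federated learning can be sketched with the Federated Averaging (FedAvg) algorithm. Each client runs gradient descent on its own data, and a coordinating server averages the resulting weights, weighted by each client's number of examples. The following are hypothetical choices for illustration:

- The two clients and their data points, drawn from the line y = 2x + 1.
- The one-variable linear model.
- The learning rate.

The model weights still cross the network, so they should travel over an encrypted channel.

```python
def local_update(weights, data, lr=0.1, epochs=1):
    """Client-side step: gradient descent on mean squared error for y = w0 + w1 * x."""
    w0, w1 = weights
    for _ in range(epochs):
        g0 = g1 = 0.0
        for x, y in data:
            err = (w0 + w1 * x) - y
            g0 += 2 * err / len(data)
            g1 += 2 * err * x / len(data)
        w0, w1 = w0 - lr * g0, w1 - lr * g1
    return (w0, w1)


def federated_averaging(global_w, client_datasets, rounds=200):
    for _ in range(rounds):
        # Each client trains locally; only the updated weights leave the client.
        results = [(local_update(global_w, data), len(data)) for data in client_datasets]
        total = sum(n for _, n in results)
        # The server computes a size-weighted average of the weights; it never sees (x, y).
        global_w = tuple(sum(w[i] * n for w, n in results) / total for i in range(2))
    return global_w


client_a = [(0.0, 1.0), (0.5, 2.0), (1.0, 3.0)]
client_b = [(1.5, 4.0), (2.0, 5.0)]
w0, w1 = federated_averaging((0.0, 0.0), [client_a, client_b])
print(round(w0, 2), round(w1, 2))  # 1.0 2.0
```

With one local epoch per round, this weighted average is mathematically the same as a full-batch gradient step on the pooled data. The raw points never leave their clients.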

Another important approach is differential privacy. It adds calibrated statistical noise to computations on a dataset, such as query results or training gradients, so that the presence or absence of any individual record cannot be reliably inferred. While differential privacy does not secure data in transit, it reduces the risk of sensitive information being extracted from trained models, complementing network-level protections like VPNs.
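The standard way to add calibrated noise to a counting query is the Laplace mechanism. Adding or removing one record changes a count by at most 1 (its sensitivity). Adding Laplace noise with scale 1/ε therefore makes the released count ε-differentially private. The sketch below, with hypothetical records and ε, samples Laplace noise as the difference of two exponential draws. It is illustrative only. Production systems should use a vetted differential-privacy library, since naive floating-point noise sampling has known weaknesses.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. Exponential(rate = 1/scale) draws is Laplace(0, scale).
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Release an epsilon-DP count; a count has sensitivity 1, so scale = 1 / epsilon."""
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(0)  # fixed seed only to make the demo reproducible; not for real releases
records = list(range(1_000))  # hypothetical records; the true count of even values is 500
print(private_count(records, lambda r: r % 2 == 0, epsilon=0.5, rng=rng))
```

Smaller values of ε mean a larger noise scale and stronger privacy, at the cost of less accurate answers.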

The Future of AI Data Privacy Beyond VPNs

As AI systems continue to expand, so too does the sophistication of data protection techniques. VPNs will remain valuable for securing connections, but future privacy strategies will rely on deeper integration between networking, cryptography, and machine learning.

Emerging trends include the use of decentralized VPNs (dVPNs), where traffic is routed through distributed peer-to-peer networks rather than centralized providers. This reduces the risk of single-point compromise, although users must then trust unknown peers who operate exit nodes. Additionally, AI-driven network monitoring tools can detect and mitigate potential data leaks in real time, automatically adjusting routing or encryption based on threat intelligence.

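Quantum-safe encryption is another frontier. A sufficiently powerful quantum computer running Shor’s algorithm could break the public-key key exchange that VPNs use today, such as elliptic-curve Diffie-Hellman. Traffic recorded now would then be exposed to “harvest now, decrypt later” attacks. Symmetric ciphers such as AES and ChaCha20 are considered far less affected. Post-quantum algorithms such as ML-KEM, standardized by NIST in 2024, are being integrated into key exchange to protect the long-term confidentiality of AI training data.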
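One widely discussed transition strategy is a hybrid key exchange. The session key is derived from both a classical shared secret and a post-quantum one, so it stays secret as long as either exchange remains unbroken. The sketch below implements HKDF-SHA256 (RFC 5869) with Python's standard library and feeds it the concatenation of two secrets. Both secrets are random placeholders, standing in for an ECDH result and an ML-KEM shared secret. The `info` label is hypothetical, and this is not the key schedule of any particular VPN protocol. WireGuard's optional pre-shared key, mixed into its handshake, follows a related idea.

```python
import hashlib
import hmac
import secrets

HASH_LEN = 32  # SHA-256 output size in bytes


def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF (RFC 5869): extract a pseudorandom key, then expand it to `length` bytes."""
    if length > 255 * HASH_LEN:
        raise ValueError("requested output is too long for HKDF-SHA256")
    prk = hmac.new(salt or b"\x00" * HASH_LEN, ikm, hashlib.sha256).digest()  # extract
    okm, block = b"", b""
    for counter in range(1, -(-length // HASH_LEN) + 1):  # expand: T(1), T(2), ...
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
    return okm[:length]


def hybrid_session_key(classical_secret: bytes, pq_secret: bytes) -> bytes:
    # Both secrets form the input keying material, so an attacker
    # must recover both to reconstruct the derived key.
    return hkdf_sha256(classical_secret + pq_secret, salt=b"", info=b"hypothetical-hybrid-vpn-v1")


ecdh_secret = secrets.token_bytes(32)   # placeholder for a classical (e.g. X25519) shared secret
mlkem_secret = secrets.token_bytes(32)  # placeholder for a post-quantum (e.g. ML-KEM) shared secret
session_key = hybrid_session_key(ecdh_secret, mlkem_secret)
print(len(session_key))  # 32
```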

In the longer term, privacy-preserving computation techniques such as homomorphic encryption and secure enclaves may render traditional network-level protections less critical. Homomorphic encryption allows computation directly on encrypted data. Secure enclaves decrypt data only inside hardware-isolated memory that the host operating system cannot read. In both cases, an adversary who intercepts the data in transit or on the host gains nothing usable.
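Computing on encrypted data is easiest to see with an additively homomorphic scheme. The sketch below is a textbook version of the Paillier cryptosystem, in which multiplying two ciphertexts yields an encryption of the sum of their plaintexts. A server could, for example, total encrypted values it cannot read. Paillier supports only addition and multiplication by known constants, unlike fully homomorphic schemes. The fixed small Mersenne primes here are for reproducibility only. Real deployments use randomly generated primes with a modulus of at least 2048 bits and a vetted library. Secure enclaves work differently and are not modeled.

```python
import math
import secrets

# Toy parameters: two known Mersenne primes (far too small and too structured for real use).
P, Q = 2**31 - 1, 2**61 - 1


def keygen(p: int = P, q: int = Q):
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    # With generator g = n + 1, L(g^lam mod n^2) = lam mod n, so mu = lam^-1 mod n.
    mu = pow(lam, -1, n)
    return n, (lam, mu)


def encrypt(n: int, m: int) -> int:
    n2 = n * n
    while True:  # random r in [1, n) coprime to n
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2


def decrypt(n: int, priv, c: int) -> int:
    lam, mu = priv
    u = pow(c, lam, n * n)
    return ((u - 1) // n * mu) % n  # L(u) = (u - 1) / n, then multiply by mu


def add_encrypted(n: int, c1: int, c2: int) -> int:
    # Multiplying ciphertexts modulo n^2 adds the underlying plaintexts modulo n.
    return (c1 * c2) % (n * n)


n, priv = keygen()
total = add_encrypted(n, encrypt(n, 17), encrypt(n, 25))
print(decrypt(n, priv, total))  # 42
```

The party computing `add_encrypted` needs only the public modulus `n`. It never holds the private values `lam` and `mu`.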

Conclusion

A VPN can effectively conceal your AI training data from ISPs in transit by encrypting the network connection and masking destination endpoints. It prevents ISPs from inspecting or tampering with the contents of data packets, offering a strong layer of privacy at the transport level. However, VPNs do not provide absolute protection. They do not secure data once it leaves the VPN server, nor do they prevent metadata analysis or jurisdictional exposure.

True protection of AI training data requires a multi-layered security approach that extends beyond VPNs. Encryption must be applied end-to-end, data governance must adhere to regulatory standards, and AI workflows must incorporate privacy-preserving techniques such as federated learning and differential privacy.

VPNs are essential tools in the modern privacy stack, but they are not silver bullets. They protect the path between you and the network, not the entire lifecycle of your AI data. To truly secure AI training pipelines, organizations must think holistically—combining network encryption, data governance, cryptographic computation, and regulatory compliance. Only then can we ensure that the data powering artificial intelligence remains private, secure, and ethically protected from unauthorized observation.