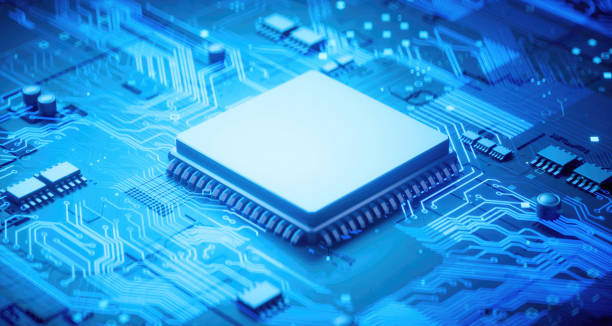

The Central Processing Unit, commonly known as the CPU, is the core component of every computing device—from massive data servers powering the internet to the smartphones in our pockets. Often referred to as the “brain” of the computer, the CPU is responsible for executing instructions, performing calculations, and managing data flow within the system. It is the central element that transforms digital information into meaningful operations, enabling all software and hardware components to function together in harmony.

The concept of the CPU dates back to the earliest days of electronic computing, when machines first began automating mathematical and logical tasks. Over the decades, the CPU has evolved from room-sized assemblies of vacuum tubes into a complex, high-speed microprocessor capable of performing billions of operations per second. Understanding what a CPU is, how it works, and why it matters provides deep insight into how modern computing functions.

The Role and Importance of the CPU

At its most fundamental level, a computer is a machine designed to process information according to a set of instructions. The CPU performs this task by fetching, decoding, and executing instructions from memory. Every time you open a file, click an icon, type a document, or run a program, the CPU is the component that makes it happen.

The CPU coordinates all other parts of the computer, ensuring that input devices, memory, and output systems communicate efficiently. It decodes binary instructions (strings of 1s and 0s) and carries them out as concrete actions, such as arithmetic calculations, data transfers, or logical comparisons. These basic operations are executed millions or even billions of times per second, depending on the speed of the processor.

The importance of the CPU cannot be overstated. Without it, a computer would be nothing more than an inert collection of circuits. The CPU not only powers everyday computing but also enables complex scientific simulations, artificial intelligence, and real-time data analysis that underpin modern civilization.

The Historical Evolution of the CPU

The evolution of the CPU reflects the broader history of computing itself. Early computers, developed in the mid-20th century, did not have what we now consider a CPU. Instead, they were built with separate modules for arithmetic, logic, and control. The stored-program concept, in which both data and instructions reside in the same memory, was described by John von Neumann in his 1945 “First Draft of a Report on the EDVAC.” This design, known as the von Neumann architecture, became the foundation for virtually all modern CPUs.

The first generation of CPUs used vacuum tubes, which were large, fragile, and consumed enormous power. Machines like the ENIAC and UNIVAC filled entire rooms while performing calculations at speeds that would seem primitive today. The transistor, invented at Bell Labs in 1947, began replacing vacuum tubes in computers in the late 1950s, allowing CPUs to become smaller, faster, and more reliable.

By the 1970s, integrated circuits made it possible to place thousands of transistors on a single silicon chip. This led to the birth of the microprocessor—an entire CPU condensed into a tiny chip of silicon. Intel’s 4004, released in 1971, is considered the first commercial microprocessor. It had a clock speed of 740 kHz and could execute about 92,000 instructions per second, which was revolutionary for its time.

Over the following decades, CPUs became exponentially more powerful thanks to advances in semiconductor technology, particularly the trend described by Moore’s Law—the observation that the number of transistors on a chip doubles approximately every two years. From the early Intel 8086 processor, which introduced the x86 architecture in 1978, to modern processors with billions of transistors and multiple cores, the CPU’s evolution has defined the progress of digital technology.
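
As a rough illustration of what “doubling every two years” implies, the sketch below projects transistor counts forward from the Intel 4004, which had roughly 2,300 transistors (a figure not stated above). The function name `projected_transistors` is hypothetical, and the output is an idealized trend line, not the transistor count of any real chip.

```python
def projected_transistors(start_count: float, start_year: float, year: float,
                          doubling_period_years: float = 2.0) -> float:
    """Idealized Moore's Law projection: the count doubles every `doubling_period_years`."""
    doublings = (year - start_year) / doubling_period_years
    return start_count * 2 ** doublings


# Assumed starting point: about 2,300 transistors on the Intel 4004 in 1971.
for year in (1971, 1981, 1991, 2001, 2011, 2021):
    print(year, f"{projected_transistors(2300, 1971, year):,.0f}")
```

Each decade adds five doublings, a factor of 32, which is why the projected counts grow so quickly.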

The Architecture of the CPU

A CPU’s architecture refers to its internal design and organization. The most widely used model of computer organization is the von Neumann architecture, which describes five primary components: the Control Unit (CU), the Arithmetic Logic Unit (ALU), registers, memory, and input/output (I/O) interfaces. The first three reside within the CPU itself, while memory and I/O sit outside it and connect to it.

The Control Unit directs the operation of the processor. It fetches instructions from memory, decodes them to determine what actions are required, and orchestrates the movement of data between the CPU’s internal components. The Arithmetic Logic Unit (ALU) performs mathematical calculations such as addition, subtraction, multiplication, and division, as well as logical operations like AND, OR, and NOT.
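
A minimal Python sketch of an ALU, assuming a hypothetical 8-bit word size, shows how a single unit can select among arithmetic and logical operations and wrap results to a fixed width the way hardware registers do. The function `alu` and its operation names are illustrative only. Real ALUs also report status flags (for example, carry or zero), which this sketch omits.

```python
WORD_BITS = 8
MASK = (1 << WORD_BITS) - 1  # 0xFF: keeps every result within an 8-bit word


def alu(op: str, a: int, b: int = 0) -> int:
    """Toy 8-bit ALU: apply an arithmetic or logical operation to unsigned operands."""
    if op == "ADD":
        result = a + b
    elif op == "SUB":
        result = a - b
    elif op == "MUL":
        result = a * b
    elif op == "DIV":
        if b == 0:
            raise ZeroDivisionError("division by zero")
        result = a // b  # integer division, discarding any remainder
    elif op == "AND":
        result = a & b
    elif op == "OR":
        result = a | b
    elif op == "NOT":
        result = ~a  # unary operation: b is ignored
    else:
        raise ValueError(f"unknown operation: {op}")
    return result & MASK  # wrap around like a fixed-width register


print(alu("ADD", 200, 100))          # 300 wraps to 44 in 8 bits
print(f"{alu('NOT', 0b00001111):08b}")  # 11110000
```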

The CPU also contains registers, which are small, high-speed memory units that temporarily store data and instructions being processed. Registers allow the CPU to access critical information instantly without waiting for data retrieval from slower memory systems.

While the ALU and CU are the main functional blocks, modern CPUs also include additional structures, such as on-chip cache memory, instruction pipelines, and dedicated instruction decoders, all designed to increase speed and efficiency.

The Fetch–Decode–Execute Cycle

Every action the CPU performs can be broken down into a repeating sequence known as the Fetch–Decode–Execute cycle, sometimes called the instruction cycle. This cycle represents the fundamental operation of all modern processors.

During the fetch phase, the CPU retrieves the instruction stored at the memory address held in the program counter (PC), the register that tracks the next instruction to be executed. The instruction is then copied into the instruction register for processing.

In the decode phase, the control unit interprets the fetched instruction, determining which operation is required and which data or registers will be involved.

Finally, in the execute phase, the CPU performs the operation. This may involve performing arithmetic calculations in the ALU, moving data from one register to another, or sending a command to an input/output device.

The program counter is then advanced to the next instruction (in many designs this already happens during the fetch phase), unless a jump or branch instruction loads it with a new address. The cycle repeats continuously, millions or billions of times per second.
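
To make the cycle concrete, here is a minimal Python sketch of a hypothetical accumulator machine. The opcodes `LOAD`, `ADD`, `STORE`, `JUMP`, and `HALT`, and the function `run`, are invented for illustration and do not belong to any real instruction set; instructions are stored as readable tuples rather than binary words. In this sketch the program counter is incremented during fetch, and a jump simply overwrites it.

```python
def run(memory: list, max_steps: int = 1000) -> list:
    """Run a toy accumulator machine until HALT and return the final memory."""
    memory = list(memory)  # work on a copy so the caller's program is unchanged
    pc = 0   # program counter: address of the next instruction
    acc = 0  # accumulator register
    for _ in range(max_steps):
        # Fetch: copy the instruction at address PC into the instruction register.
        ir = memory[pc]
        pc += 1  # point PC at the next sequential instruction

        # Decode: split the instruction into an opcode and an operand address.
        opcode, operand = ir

        # Execute: carry out the operation.
        if opcode == "LOAD":
            acc = memory[operand]
        elif opcode == "ADD":
            acc = acc + memory[operand]
        elif opcode == "STORE":
            memory[operand] = acc
        elif opcode == "JUMP":
            pc = operand  # a jump replaces the program counter
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode!r} at address {pc - 1}")
    raise RuntimeError("step limit reached without HALT")


# Instructions and data share one memory, as in the von Neumann model.
program = [
    ("LOAD", 5),   # 0: acc = memory[5]
    ("ADD", 6),    # 1: acc = acc + memory[6]
    ("STORE", 7),  # 2: memory[7] = acc
    ("HALT", 0),   # 3: stop
    None,          # 4: unused
    2,             # 5: data
    3,             # 6: data
    0,             # 7: the result is stored here
]
print(run(program)[7])  # prints 5
```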

CPU Clock and Performance

The pace of the CPU’s internal operations is set by its clock frequency, measured in hertz (Hz). The clock provides a steady series of electrical pulses that synchronize the operations within the processor. One hertz represents one cycle per second, so a 3.0 GHz (gigahertz) processor completes three billion cycles every second, with each cycle lasting about one third of a nanosecond.

However, clock speed alone does not determine performance. Other factors, such as the number of cores, cache size, instruction set efficiency, and microarchitecture, also play crucial roles. For example, two CPUs with the same clock speed may perform differently depending on how many instructions each completes per clock cycle (IPC).
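
The interplay between clock speed and per-instruction efficiency is often summarized by the textbook CPU performance equation: CPU time = instruction count × average cycles per instruction (CPI) ÷ clock rate. The sketch below applies it to two hypothetical processors running the same program at 3.0 GHz; the CPI values and the function name `cpu_time_seconds` are assumptions chosen for illustration.

```python
def cpu_time_seconds(instructions: int, cycles_per_instruction: float,
                     clock_hz: float) -> float:
    """CPU time = instruction count x average cycles per instruction / clock rate."""
    return instructions * cycles_per_instruction / clock_hz


clock = 3.0e9                  # 3.0 GHz
cycle_time_ns = 1e9 / clock    # length of one clock cycle in nanoseconds
print(f"cycle time: {cycle_time_ns:.3f} ns")

# Two hypothetical CPUs at the same clock, running the same 1-billion-instruction program.
instructions = 1_000_000_000
time_a = cpu_time_seconds(instructions, 1.0, clock)  # averages 1 cycle per instruction
time_b = cpu_time_seconds(instructions, 2.0, clock)  # averages 2 cycles per instruction
print(f"CPU A: {time_a:.3f} s, CPU B: {time_b:.3f} s")
```

Despite identical clock speeds, CPU B takes twice as long because each instruction costs it twice as many cycles.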

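Modern CPUs also work on several instructions at once. Pipelining overlaps the fetch, decode, and execute stages of successive instructions; superscalar designs issue more than one instruction per clock cycle; and out-of-order execution runs instructions as soon as their input data is ready rather than strictly in program order. Together, these techniques dramatically improve performance. Additionally, multicore processors contain multiple processing units (cores) within a single chip, allowing for true parallel computation.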
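
The benefit of pipelining can be estimated with simple cycle counting. The sketch below assumes an idealized pipeline in which every stage takes one cycle and no stalls occur; real pipelines lose cycles to data dependencies and mispredicted branches. The 5-stage depth and the function names are hypothetical.

```python
def unpipelined_cycles(n_instructions: int, n_stages: int) -> int:
    """Each instruction passes through every stage before the next one starts."""
    return n_instructions * n_stages


def pipelined_cycles(n_instructions: int, n_stages: int) -> int:
    """Ideal pipeline: fill it once, then complete one instruction per cycle."""
    if n_instructions == 0:
        return 0
    return n_stages + (n_instructions - 1)


n, k = 100, 5  # 100 instructions on a hypothetical 5-stage pipeline
print(unpipelined_cycles(n, k), pipelined_cycles(n, k))  # 500 104
```

For long instruction streams the speedup approaches the number of stages, here close to 5×.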

CPU Cores and Multithreading

Originally, CPUs contained a single core, meaning they could execute only one thread or sequence of instructions at a time. However, as computing demands increased, manufacturers began adding multiple cores to processors, each capable of handling its own tasks independently.

A dual-core CPU can process two threads simultaneously, a quad-core four, and so on. High-end desktop CPUs commonly have 16 or more cores, and server-grade processors feature dozens of cores, with some exceeding one hundred.

In addition to multiple cores, many CPUs use simultaneous multithreading (SMT), which Intel markets as Hyper-Threading. SMT allows each core to handle two or more threads at once, increasing throughput by putting execution resources that would otherwise sit idle to work.

The combination of multiple cores and multithreading allows modern CPUs to handle complex multitasking workloads, such as running several applications at once, rendering 3D graphics, or processing massive datasets.
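
The sketch below shows the basic pattern software uses to exploit several cores: split a workload into one chunk per logical CPU, process the chunks concurrently, and combine the partial results. It uses Python’s standard `os.cpu_count()`, which reports logical CPUs (on SMT systems this counts hardware threads, not physical cores), and `concurrent.futures.ThreadPoolExecutor`. The function `parallel_sum` is hypothetical. In standard CPython, threads running pure-Python code do not execute in parallel, so this sketch illustrates how the work is divided rather than delivering a real speedup; processes or a language without that restriction would be needed for that.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def parallel_sum(values: list[int], workers: int = 0) -> int:
    """Split `values` into one chunk per worker, sum the chunks concurrently, then combine.

    `workers=0` means "use one worker per logical CPU reported by the OS".
    """
    if workers <= 0:
        workers = os.cpu_count() or 1  # cpu_count() may return None
    size = max(1, -(-len(values) // workers))  # ceiling division; at least 1 to avoid a zero step
    chunks = [values[i:i + size] for i in range(0, len(values), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = pool.map(sum, chunks)  # each worker sums its own chunk
        return sum(partial_sums)              # combine the partial results


print(os.cpu_count(), "logical CPUs reported")
print(parallel_sum(list(range(1_000_001))))  # 500000500000
```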

The Instruction Set Architecture (ISA)

The Instruction Set Architecture (ISA) defines the set of commands that a CPU can understand and execute. It acts as the interface between software and hardware, dictating how programs communicate with the processor. Each CPU family has its own ISA, which determines how instructions are encoded, how memory is accessed, and how operations are executed.
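
To show what “how instructions are encoded” means in practice, the sketch below packs and unpacks instructions in a hypothetical 16-bit format: a 4-bit opcode followed by three 4-bit register numbers. This format, the opcode values, and the functions `encode` and `decode` are invented for illustration; real ISAs such as x86 and ARM use their own, far richer encodings.

```python
# Hypothetical 16-bit instruction format (not any real ISA):
#   bits 15-12: opcode | bits 11-8: destination register | bits 7-4: source 1 | bits 3-0: source 2
OPCODES = {"ADD": 0x1, "SUB": 0x2, "AND": 0x3, "OR": 0x4}
MNEMONICS = {code: name for name, code in OPCODES.items()}


def encode(mnemonic: str, rd: int, rs1: int, rs2: int) -> int:
    """Pack an instruction into a 16-bit word."""
    for reg in (rd, rs1, rs2):
        if not 0 <= reg <= 15:
            raise ValueError(f"register r{reg} does not fit in 4 bits")
    return (OPCODES[mnemonic] << 12) | (rd << 8) | (rs1 << 4) | rs2


def decode(word: int) -> tuple[str, int, int, int]:
    """Unpack a 16-bit word back into its fields."""
    opcode = (word >> 12) & 0xF
    rd = (word >> 8) & 0xF
    rs1 = (word >> 4) & 0xF
    rs2 = word & 0xF
    return MNEMONICS[opcode], rd, rs1, rs2


word = encode("ADD", 3, 1, 2)  # meaning: r3 = r1 + r2
print(f"{word:016b}")          # 0001001100010010
print(decode(word))            # ('ADD', 3, 1, 2)
```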

The two most prominent ISAs in modern computing are x86 (used by Intel and AMD) and ARM (used in mobile devices and increasingly in servers and desktops).

The x86 architecture, originally introduced by Intel in 1978, has evolved through several generations, maintaining backward compatibility while adding new features. ARM, on the other hand, is known for its energy efficiency, making it the preferred choice for smartphones, tablets, and embedded systems.

There are two main design philosophies behind ISAs: Complex Instruction Set Computing (CISC) and Reduced Instruction Set Computing (RISC). CISC architectures, such as x86, use complex, variable-length instructions, some of which perform multiple operations (for example, loading a value from memory and adding it to a register) in a single command. RISC architectures, such as ARM, use a smaller set of simpler instructions and access memory only through dedicated load and store instructions, which makes them easier to decode and pipeline efficiently. Each approach has advantages depending on the intended application.
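
The difference in philosophy can be sketched with a tiny interpreter. Below, the same task, adding the value at memory location `y` into location `x`, is written once as a single CISC-style memory-to-memory instruction and once as a RISC-style load/load/add/store sequence. The mnemonics `ADDM`, `LOAD`, `ADD`, and `STORE` and the function `execute` are hypothetical and are not real x86 or ARM instructions.

```python
def execute(program: list[tuple], memory: dict[str, int]) -> dict[str, int]:
    """Interpret a list of toy instructions against a copy of `memory`."""
    mem = dict(memory)
    regs = {}
    for op, *args in program:
        if op == "ADDM":    # CISC-style: read two memory operands, add, write back
            dst, src = args
            mem[dst] = mem[dst] + mem[src]
        elif op == "LOAD":  # RISC-style: only loads and stores touch memory
            reg, addr = args
            regs[reg] = mem[addr]
        elif op == "ADD":   # RISC-style: arithmetic works on registers only
            rd, rs1, rs2 = args
            regs[rd] = regs[rs1] + regs[rs2]
        elif op == "STORE":
            reg, addr = args
            mem[addr] = regs[reg]
        else:
            raise ValueError(f"unknown instruction {op!r}")
    return mem


memory = {"x": 7, "y": 5}
cisc_program = [("ADDM", "x", "y")]
risc_program = [("LOAD", "r1", "x"), ("LOAD", "r2", "y"),
                ("ADD", "r1", "r1", "r2"), ("STORE", "r1", "x")]

print(len(cisc_program), execute(cisc_program, memory))  # 1 {'x': 12, 'y': 5}
print(len(risc_program), execute(risc_program, memory))  # 4 {'x': 12, 'y': 5}
```

Both programs produce the same result. The CISC version needs fewer instructions, but each one does more work; the RISC version uses more instructions, each simple and uniform.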

Cache Memory and Data Access

The CPU relies on memory to fetch instructions and data, but accessing main system memory (RAM) is relatively slow compared to the speed of the processor. To bridge this gap, CPUs use cache memory, which stores frequently accessed data close to the processor cores.

Cache memory is organized into multiple levels: L1, L2, and L3. L1 cache is the smallest and fastest and is built into each processor core. L2 cache is larger and somewhat slower, and in many modern designs is also private to each core, while L3 cache is larger still and shared among multiple cores.

The hierarchy ensures that the CPU has rapid access to the most frequently used data, significantly reducing latency. Effective cache management is one of the key factors determining overall CPU performance.
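
How a cache turns repeated accesses into fast hits can be sketched with a direct-mapped cache, the simplest placement policy, in which each memory block may occupy exactly one cache line. Real CPU caches are usually set-associative, and the 8-line, 16-byte-block configuration here is hypothetical, as is the function name `simulate_direct_mapped`. The sketch tracks only tags, not the cached data itself.

```python
def simulate_direct_mapped(addresses: list[int], num_lines: int,
                           block_size: int) -> tuple[int, int]:
    """Count hits and misses for a direct-mapped cache (tags only; no data is stored)."""
    lines = [None] * num_lines  # each entry holds the tag of the cached block, or None
    hits = misses = 0
    for addr in addresses:
        block = addr // block_size  # which memory block contains this byte
        index = block % num_lines   # the single cache line this block may occupy
        tag = block // num_lines    # distinguishes blocks that share that line
        if lines[index] == tag:
            hits += 1
        else:
            misses += 1
            lines[index] = tag      # load the block from memory, evicting the old one
    return hits, misses


# Read 64 consecutive bytes twice, with 16-byte blocks and 8 cache lines.
trace = list(range(64)) * 2
hits, misses = simulate_direct_mapped(trace, num_lines=8, block_size=16)
print(hits, misses, f"hit rate {hits / len(trace):.1%}")  # 124 4 hit rate 96.9%
```

Only the first access to each 16-byte block misses; every later access to nearby or repeated addresses hits.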

Power Efficiency and Thermal Design

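As CPUs become more powerful, they also generate more heat. Managing power consumption and thermal output is essential to maintaining performance and reliability. Manufacturers specify a Thermal Design Power (TDP), expressed in watts, which indicates the sustained heat output that a processor’s cooling system should be designed to dissipate. It is a design guideline rather than a measurement of the chip’s actual power draw at any given moment.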

Manufacturers use advanced fabrication techniques, such as moving to smaller process nodes, to improve energy efficiency. Process nodes are named in nanometers (nm), although in recent generations these names no longer correspond directly to any single physical dimension of the transistor. Smaller transistors allow more of them to fit on a chip, and each one needs less energy to switch and can switch faster.

Additionally, modern CPUs incorporate dynamic voltage and frequency scaling (DVFS), which adjusts the clock speed and voltage to match workload demands, and power gating, which cuts power to idle parts of the chip. These techniques balance performance with energy efficiency, extending battery life in mobile devices and reducing power costs in data centers.
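
Why lowering voltage and frequency together saves so much energy can be seen from the standard first-order model of CMOS switching power, P ≈ α · C · V² · f, where α is the fraction of the circuit switching each cycle, C the switched capacitance, V the supply voltage, and f the clock frequency. The sketch below ignores leakage (static) power, and its operating points and the function name `dynamic_power` are hypothetical.

```python
def dynamic_power(capacitance_f: float, voltage_v: float, frequency_hz: float,
                  activity: float = 1.0) -> float:
    """First-order CMOS switching power in watts: activity * C * V^2 * f (leakage ignored)."""
    return activity * capacitance_f * voltage_v ** 2 * frequency_hz


# Hypothetical operating points: full speed vs. a DVFS step that lowers both V and f by 20%.
full = dynamic_power(1e-9, 1.0, 3.0e9)
scaled = dynamic_power(1e-9, 0.8, 2.4e9)
print(f"full: {full:.2f} W, scaled: {scaled:.2f} W, ratio: {scaled / full:.3f}")
```

Because voltage enters squared, a 20% reduction in both voltage and frequency cuts switching power to about 51% of its original value (0.8³ ≈ 0.512) while costing only 20% of the clock speed.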

The Manufacturing Process

CPU manufacturing is one of the most complex engineering processes in the world. It involves creating billions of microscopic transistors on a silicon wafer using photolithography, ion implantation, and chemical vapor deposition.

The process begins with purified silicon, which is grown into a single-crystal ingot and sliced into thin wafers. Patterns representing the transistor layout are projected onto the wafer using ultraviolet light (extreme ultraviolet, or EUV, at the most advanced nodes) and etched with precision at the nanometer scale. Each layer adds new structures, such as gates and interconnects, forming a complete microprocessor after hundreds of processing steps.

After manufacturing, each chip undergoes rigorous testing to ensure functionality and performance. Chips that fail to meet specifications are discarded or repurposed for lower-end products. The complexity of modern CPU fabrication pushes the limits of physics and materials science, with each generation representing a major technological leap.

Modern CPU Technologies and Trends

In recent years, CPU design has focused on parallelism, efficiency, and integration. The rise of multicore processors, heterogeneous computing, and specialized accelerators reflects the need for handling increasingly complex workloads such as artificial intelligence, machine learning, and 3D rendering.

Technologies such as chiplet architectures, in which multiple smaller dies are combined into one package, allow manufacturers to increase performance while controlling costs and improving yields. AMD’s EPYC server processors and many of its Ryzen desktop processors are prime examples of chiplet-based design.
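
Why smaller dies improve yields can be illustrated with a simple Poisson yield model, a common first-order approximation in which the fraction of defect-free dies is e^(−D·A) for defect density D and die area A. The defect density and die sizes below are hypothetical, and the sketch ignores packaging cost and the extra interconnect that chiplets require. It assumes each chiplet is tested before assembly, so a defect scraps only one small die instead of an entire large one.

```python
import math


def poisson_yield(die_area_cm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies expected to have zero defects under a simple Poisson model."""
    return math.exp(-defects_per_cm2 * die_area_cm2)


DEFECT_DENSITY = 0.1  # hypothetical defects per cm^2

monolithic = poisson_yield(6.0, DEFECT_DENSITY)  # one large 600 mm^2 die
chiplet = poisson_yield(0.75, DEFECT_DENSITY)    # one of eight 75 mm^2 chiplets
print(f"monolithic die yield: {monolithic:.1%}")
print(f"per-chiplet yield:    {chiplet:.1%}")
```

Under these assumptions roughly 45% of the large dies would be discarded, while only about 7% of the small chiplets would be.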

Another major development is the integration of graphics processing units (GPUs) and neural processing units (NPUs) alongside CPU cores on the same chip or package, creating hybrid designs capable of handling both general-purpose and specialized tasks efficiently. Apple’s M-series chips and Intel’s Core Ultra processors exemplify this trend toward unified architectures.

CPUs in Everyday Life

Although most people never see a CPU directly, it is the driving force behind nearly every digital experience. In personal computers, it runs operating systems, applications, and games. In smartphones, it powers everything from the touchscreen interface to voice recognition.

Servers and data centers rely on powerful CPUs to manage cloud computing, artificial intelligence, and massive data processing. Embedded CPUs control vehicles, household appliances, medical equipment, and industrial machines. Even tiny devices like smartwatches and sensors contain CPUs designed for specific tasks.

The Future of CPU Development

The future of CPU technology is both exciting and challenging. As transistor sizes approach physical limits, engineers are exploring new materials, architectures, and computing paradigms.

Quantum computing, neuromorphic processors, and optical computing represent emerging frontiers that could one day complement traditional silicon-based CPUs or outperform them on specific kinds of problems. In the near term, innovations such as three-dimensional chip stacking, advanced cooling systems, and hybrid architectures will continue to push performance boundaries.

Artificial intelligence is also influencing CPU design, driving the creation of processors optimized for machine learning workloads. The convergence of CPU, GPU, and AI accelerators points toward a future where computing is more efficient, adaptable, and integrated than ever before.

Conclusion

The Central Processing Unit is the beating heart of modern computing, the component that transforms data into action. From the simplest microcontroller to the most powerful supercomputer, every digital system depends on the CPU’s ability to execute instructions quickly and accurately.

Its evolution from room-sized machines to microprocessors built from nanometer-scale transistors represents one of humanity’s greatest technological achievements. The CPU embodies the fusion of physics, mathematics, and engineering: a masterpiece of precision and logic that continues to shape the modern world.

As technology advances, the CPU will remain at the center of innovation, driving the next generation of discoveries in science, communication, and artificial intelligence. Understanding the CPU is not just about knowing how computers work; it is about understanding the fundamental mechanisms that power the digital age and define the future of human progress.