When people imagine machine learning, they often think of breakthrough models, cutting-edge algorithms, or clever architectures. The public imagination is captivated by stories of neural networks defeating grandmasters or algorithms predicting diseases before symptoms appear. But hidden behind every one of these feats lies something less glamorous yet utterly indispensable: the machine learning pipeline.

This pipeline is the invisible machinery that carries a raw, messy idea from the chaos of data into a living, breathing product in the real world. It’s the bridge between the laboratory and the market, between the model’s accuracy in a Jupyter notebook and its resilience under millions of daily requests. Without it, even the most sophisticated AI remains a fragile prototype.

In truth, building a production ML pipeline is as much an art as it is a science. It requires technical skill, but also patience, design thinking, and a deep respect for the realities of messy human and machine systems. This is the journey from data to deployment — and it’s one of the most fascinating journeys in modern technology.

From the Spark of an Idea to a Measurable Goal

Every production ML pipeline begins not with code, but with a problem worth solving. In real-world businesses, that means translating a vague ambition — “We want to predict customer churn” — into something measurable, testable, and valuable. This stage demands collaboration between data scientists, domain experts, and business stakeholders.

The language here matters. Saying “We want an accurate model” is meaningless without defining what “accurate” means in context. Does a false positive cost more than a false negative? Is interpretability more important than raw predictive power? Will the model’s decisions require human oversight?
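One way to make “accurate” concrete is to attach a cost to each kind of error. The sketch below is purely illustrative: the function name `expected_cost` and the cost figures (5 for a needless retention offer, 100 for a missed churner) are assumptions chosen for the example, not numbers from any real business.

```python
def expected_cost(y_true, y_pred, fp_cost, fn_cost):
    """Average per-example cost of errors when the two error types differ in price."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("need at least one example")
    false_pos = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    false_neg = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return (false_pos * fp_cost + false_neg * fn_cost) / len(y_true)


# 1 = customer churned, 0 = customer stayed (toy labels).
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
model_a = [1, 0, 0, 1, 0, 0, 0, 0]  # cautious: misses two churners
model_b = [1, 1, 1, 1, 0, 1, 1, 0]  # eager: raises two false alarms

# Assumed costs: a wasted offer costs 5, a lost customer costs 100.
cost_a = expected_cost(y_true, model_a, fp_cost=5, fn_cost=100)
cost_b = expected_cost(y_true, model_b, fp_cost=5, fn_cost=100)
```

Both hypothetical models get six of eight predictions right, yet under these assumed costs the second one is far cheaper per customer. That is the kind of distinction a bare accuracy target hides.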

This phase is also where constraints surface. Maybe the model must return results in under 100 milliseconds. Maybe it needs to run on devices with limited memory. Maybe the data arrives in real time, or maybe it’s batch-loaded once a day. These realities shape the entire architecture of the pipeline before a single dataset is touched.

The Foundation: Data Collection and Ingestion

Once the goal is set, the first tangible step is getting the data. In an academic paper, datasets arrive neatly packaged, ready for training. In the real world, they arrive like an unfiltered stream from a firehose — messy, inconsistent, and often incomplete.

Data can come from transactional databases, API calls, IoT devices, web logs, sensors, customer surveys, or third-party providers. The ingestion layer must pull it in reliably, handle scale, and often comply with strict privacy regulations. For production pipelines, ingestion is rarely a one-off action; it’s an ongoing heartbeat that must run as long as the model is alive.

Here, architecture decisions emerge:

- Should ingestion be batch-based, with large chunks of data processed periodically, or streaming, where data flows in near real time?

- How will failures be handled so that one corrupted file doesn’t collapse the whole process?

- Where will the data land first — in a staging area, a raw storage bucket, or a message queue?

For teams, this stage is a delicate balancing act: moving fast enough to get to model-building, but not so fast that the foundation crumbles under future load.
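The failure-handling question above can be sketched for the batch case. This is a minimal illustration that assumes newline-delimited JSON input and a required `customer_id` field; both are assumptions for the example, as is the function name `ingest_batch`. Bad records are set aside in a quarantine list instead of aborting the run.

```python
import json


def ingest_batch(lines):
    """Parse newline-delimited JSON, separating usable records from bad ones."""
    accepted, quarantined = [], []
    for line_no, line in enumerate(lines, start=1):
        try:
            record = json.loads(line)
            if not isinstance(record, dict) or "customer_id" not in record:
                raise ValueError("missing customer_id")
            accepted.append(record)
        except ValueError as exc:  # json.JSONDecodeError is a ValueError subclass
            # Keep the raw line and the reason so the record can be inspected later.
            quarantined.append({"line": line_no, "raw": line, "error": str(exc)})
    return accepted, quarantined


raw_lines = [
    '{"customer_id": 1, "amount": 9.5}',
    '{"customer_id": 2, "amount"',  # truncated record
    '{"amount": 3.0}',               # valid JSON, missing the required field
]
accepted, quarantined = ingest_batch(raw_lines)
```

In a real pipeline the quarantine list would typically be written to its own storage location and counted, so that a sudden rise in rejected records shows up as an alert.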

Cleaning the Real World Out of the Data

Raw data reflects the world, and the world is messy. Fields are missing. Numbers are mistyped. Different systems label the same category in wildly inconsistent ways. In many real projects, data cleaning takes far longer than model training.

This stage involves:

- Handling missing values intelligently — sometimes imputing them, sometimes discarding the affected rows, and sometimes acknowledging that “missing” is itself a signal.

- Removing duplicates without deleting legitimately repeating patterns.

- Standardizing formats for dates, currencies, and measurement units.

- Detecting outliers — not simply as noise, but as potential goldmines of insight.

For a production pipeline, cleaning can’t be a manual one-time operation. It must be automated and repeatable, because the pipeline will encounter new data every day, and the cleaning logic must apply consistently.
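A repeatable cleaning step might look like the sketch below. The field names (`order_id`, `order_date`, `age`), the two accepted date formats, and the default used for imputation are all assumptions made for illustration. Note that the function records whether a value was missing, so that “missing” can still act as a signal.

```python
from datetime import datetime

# Assumed input formats; a real pipeline would list the ones its sources emit.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def parse_date(value):
    """Return an ISO date string, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    return None


def clean_records(records, default_age):
    """Deduplicate by order_id, standardize dates, and impute missing ages."""
    seen = set()
    cleaned = []
    for rec in records:
        key = rec["order_id"]
        if key in seen:  # exact repeat of an order already processed
            continue
        seen.add(key)
        age = rec.get("age")
        cleaned.append({
            "order_id": key,
            "order_date": parse_date(rec["order_date"]),
            "age": default_age if age is None else age,
            "age_was_missing": age is None,  # keep the missingness as a feature
        })
    return cleaned


rows = [
    {"order_id": "A1", "order_date": "2024-02-03", "age": 34},
    {"order_id": "A1", "order_date": "2024-02-03", "age": 34},  # duplicate
    {"order_id": "B2", "order_date": "03/02/2024", "age": None},
]
cleaned = clean_records(rows, default_age=40)
```

Because the logic lives in one function, the same rules apply to every new batch, and the function itself serves as documentation of what was changed.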

Cleaning is also where bias can sneak in unnoticed. If your cleaning process disproportionately removes certain categories or ignores certain signals, your model will inherit those blind spots. Responsible teams document every transformation so that data lineage is preserved and any biases can be traced and addressed.

Feature Engineering: Sculpting the Raw Material

If raw data is stone, feature engineering is sculpture. This is the stage where raw variables are transformed into meaningful representations that help the model learn patterns.

Sometimes, this involves simple scaling and encoding — turning categorical variables into numbers, normalizing continuous values, creating binary flags. Other times, it’s a deeply creative act: designing composite features that capture domain-specific relationships. For example, instead of simply using “number of purchases” and “days since signup,” you might create “average purchases per week since signup,” which tells the model something richer.
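The composite feature described above could be computed roughly as follows. The function name and the choice to treat accounts younger than one week as one week old (to avoid inflated rates for brand-new users) are assumptions of this sketch.

```python
from datetime import date


def purchases_per_week(num_purchases, signup_date, as_of):
    """Average purchases per week between signup and the as_of date."""
    days = (as_of - signup_date).days
    if days < 0:
        raise ValueError("as_of must not be earlier than signup_date")
    # Assumed policy: floor the account age at one week.
    weeks = max(days / 7, 1.0)
    return num_purchases / weeks


established = purchases_per_week(12, date(2024, 1, 1), date(2024, 1, 29))
brand_new = purchases_per_week(2, date(2024, 1, 1), date(2024, 1, 4))
```

Passing `as_of` explicitly, instead of reading the current clock inside the function, keeps the feature reproducible when the same historical data is processed again.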

In production, feature engineering must balance performance with cost. Complex features may improve accuracy, but if they require heavy computation or rely on data unavailable at inference time, they can break the pipeline.

A key concept here is feature stores — centralized systems where features are computed, stored, and served to models in both training and production, ensuring consistency. Without this, teams risk the dreaded “training-serving skew,” where the features at training time differ subtly from those at deployment, leading to degraded performance.
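The core idea behind avoiding training-serving skew can be shown without any feature store product: define each feature once and call the same code from both paths. The registry below is a toy stand-in, and every name in it (`FEATURES`, `feature`, `build_vector`, the two example features) is hypothetical.

```python
FEATURES = {}


def feature(name):
    """Register a feature function under a stable name."""
    def register(fn):
        FEATURES[name] = fn
        return fn
    return register


@feature("items_per_order")
def items_per_order(row):
    return row["items"] / max(row["orders"], 1)


@feature("is_new_customer")
def is_new_customer(row):
    return 1.0 if row["days_since_signup"] < 30 else 0.0


def build_vector(row):
    # Sorting the names gives the same column order in every environment.
    return [FEATURES[name](row) for name in sorted(FEATURES)]


def build_training_matrix(rows):
    """Offline path: one vector per historical row."""
    return [build_vector(r) for r in rows]


def handle_request(payload):
    """Online path: the same code, applied to a single live request."""
    return build_vector(payload)


row = {"items": 12, "orders": 4, "days_since_signup": 10}
```

A real feature store adds storage, backfills, and low-latency serving on top, but the guarantee it provides is the one visible here: training and inference cannot disagree about how a feature is computed.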

Choosing and Training the Model

By this stage, the pipeline has prepared the canvas. Now comes the painting: selecting and training the model itself.

For some problems, the choice is straightforward — a linear regression for a simple continuous prediction, a gradient boosting machine for tabular classification, a convolutional neural network for image recognition. In other cases, experimentation is necessary. Hyperparameter tuning frameworks, cross-validation strategies, and evaluation metrics all come into play.

Crucially, the pipeline must treat training not as a one-time event but as a reproducible process. This means logging the model version, the data snapshot it was trained on, the hyperparameters used, and the evaluation results. Tools like MLflow, Weights & Biases, or custom tracking systems become part of the pipeline’s DNA.
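As a rough picture of what such tracking records, here is a minimal custom tracker built only on the standard library. It is not the MLflow or Weights & Biases API; the function names and the record layout are assumptions of this sketch.

```python
import hashlib
import json


def fingerprint(rows):
    """Deterministic SHA-256 digest of a JSON-serializable data snapshot."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def make_run_record(model_version, rows, hyperparams, metrics):
    """Bundle everything needed to say which data and settings made a model."""
    return {
        "model_version": model_version,
        "data_sha256": fingerprint(rows),
        "n_rows": len(rows),
        "hyperparams": hyperparams,
        "metrics": metrics,
    }


def append_run(path, record):
    """Append one run as a JSON line to a local log file."""
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps(record, sort_keys=True) + "\n")


snapshot = [{"x": 1.0, "y": 0}, {"x": 2.5, "y": 1}]
record = make_run_record(
    model_version="churn-0.1",  # hypothetical version label
    rows=snapshot,
    hyperparams={"learning_rate": 0.1, "max_depth": 3},
    metrics={"auc": 0.81},  # placeholder value, not a real result
)
```

If two runs report different metrics, comparing their `data_sha256` values immediately shows whether the data changed or only the settings did.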

In production ML, the best model isn’t always the most accurate. Latency, memory footprint, interpretability, and robustness to edge cases can outweigh a few percentage points in accuracy. A lightweight model that delivers predictions instantly may serve users far better than a heavy, slow, marginally more accurate one.

Evaluation in the Real World

A model that shines in offline validation can falter in the wild. Before deployment, the pipeline must put it through rigorous evaluation — not just with random test splits, but with time-based validation (to mimic real-world drift), stress testing (to simulate extreme inputs), and fairness audits (to ensure performance is equitable across groups).
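Time-based validation is simple to express: everything before a cutoff is used for training, everything from the cutoff onward for testing. The field name `event_date` and the toy records below are assumptions for illustration.

```python
from datetime import date


def time_based_split(records, cutoff, time_key="event_date"):
    """Train on records strictly before the cutoff, test on the rest."""
    train = [r for r in records if r[time_key] < cutoff]
    test = [r for r in records if r[time_key] >= cutoff]
    return train, test


events = [
    {"event_date": date(2024, 3, 1), "churned": 0},
    {"event_date": date(2024, 1, 15), "churned": 1},
    {"event_date": date(2024, 2, 10), "churned": 0},
    {"event_date": date(2024, 3, 20), "churned": 1},
]
train, test = time_based_split(events, cutoff=date(2024, 3, 1))
```

Unlike a random split, this never lets the model see records from the period it is being tested on, which is closer to how it will be used after deployment.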

In a production setting, evaluation is about trust. Stakeholders must believe the model’s outputs are reliable enough to drive decisions that affect revenue, customers, or even lives. That trust is built on transparency, repeatable testing, and clear communication of limitations.

Packaging for Deployment

Once the model is trained and evaluated, it must be packaged in a way that the production environment can use. This may involve serializing it into a format like Pickle, ONNX, or TensorFlow SavedModel. But packaging is not just about file formats — it’s about creating an inference pipeline that can receive data, apply the same preprocessing steps as training, run the model, and return results in the required format.
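The sketch below shows the shape of such an inference pipeline: preprocessing parameters and model parameters travel together in one artifact. It uses a hand-rolled logistic model and JSON as a stand-in for the serialization formats named above; the class name, its fields, and the numbers are all assumptions.

```python
import json
import math


class InferencePipeline:
    """Bundles standardization and a logistic model so they ship as one unit."""

    def __init__(self, means, stds, weights, bias):
        self.means = means
        self.stds = stds
        self.weights = weights
        self.bias = bias

    def preprocess(self, raw):
        # Same standardization that was fitted at training time.
        return [(x - m) / s for x, m, s in zip(raw, self.means, self.stds)]

    def predict(self, raw):
        features = self.preprocess(raw)
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        return 1.0 / (1.0 + math.exp(-z))

    def to_json(self):
        return json.dumps(self.__dict__)

    @classmethod
    def from_json(cls, text):
        return cls(**json.loads(text))


pipeline = InferencePipeline(
    means=[30.0, 200.0], stds=[10.0, 50.0], weights=[0.8, -0.4], bias=0.0
)
restored = InferencePipeline.from_json(pipeline.to_json())
```

Because the scaling constants are stored next to the weights, a service that loads the artifact cannot accidentally apply different preprocessing from the one used in training.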

For many teams, this means wrapping the model in a microservice — often using frameworks like FastAPI, Flask, or gRPC — and containerizing it with Docker. This encapsulation makes the model portable, scalable, and easy to deploy across environments.

The Deployment Moment

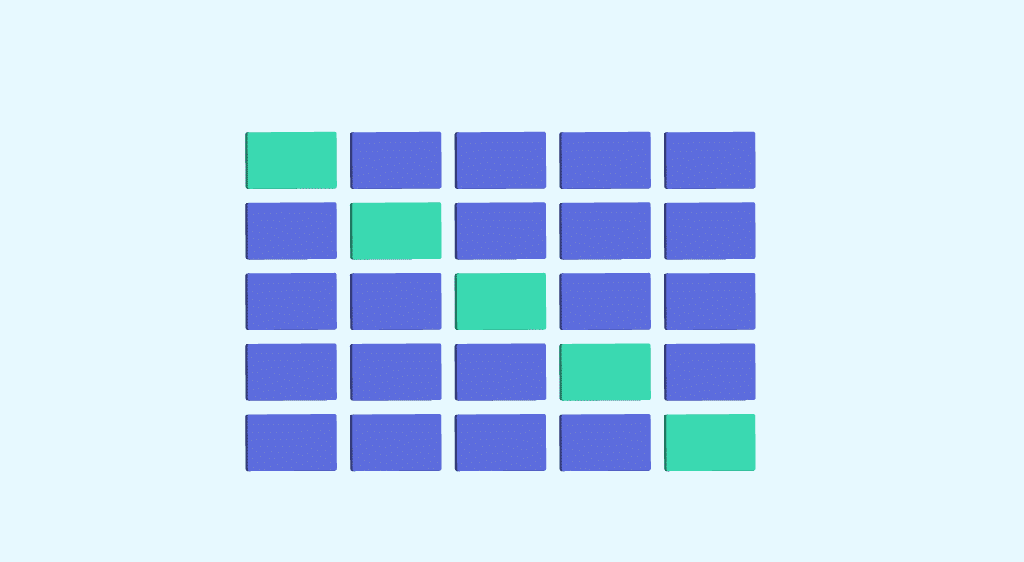

Deployment is the most visible step, but in reality, it’s just the midpoint of the pipeline’s lifecycle. This is where the model moves from theory to practice, from test data to real-world inputs. The deployment strategy might involve a full rollout or a cautious phased release — A/B testing, canary deployments, or shadow mode, where the model runs alongside the old system without influencing outcomes, allowing teams to measure its behavior safely.
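Shadow mode in particular is easy to sketch. In the hypothetical wrapper below, the candidate model sees every request, but only the live model's answer is returned, and a failure in the candidate never reaches the user. The function name and the in-memory log are assumptions; a real system would write to durable storage.

```python
def serve_with_shadow(request, live_model, shadow_model, shadow_log):
    """Answer with the live model; record what the shadow model would have said."""
    live_prediction = live_model(request)
    entry = {"request": request, "live": live_prediction}
    try:
        entry["shadow"] = shadow_model(request)
    except Exception as exc:  # the shadow path must never break serving
        entry["shadow_error"] = repr(exc)
    shadow_log.append(entry)
    return live_prediction


def old_model(request):
    return 0.2  # stand-in for the current production model


def new_model(request):
    return 0.7  # stand-in for the candidate


def broken_model(request):
    raise RuntimeError("candidate crashed")


log = []
first = serve_with_shadow({"user": 1}, old_model, new_model, log)
second = serve_with_shadow({"user": 2}, old_model, broken_model, log)
```

Comparing the `live` and `shadow` fields offline shows how often the two models disagree before any user is exposed to the new one.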

At this stage, infrastructure decisions are critical: Will the model run in the cloud, on-premises, or at the edge? Will it serve predictions synchronously via APIs or asynchronously through batch jobs?

The best deployment pipelines are built for reversibility. If something goes wrong, they can roll back to a previous stable version within minutes.
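Reversibility mostly comes down to remembering what was live before. The toy registry below keeps a promotion history so that a rollback is a single call; the class and method names are hypothetical, and real registries also store the model artifacts themselves.

```python
class ModelRegistry:
    """Tracks which model version is live and allows stepping back."""

    def __init__(self):
        self._history = []

    @property
    def live(self):
        return self._history[-1] if self._history else None

    def promote(self, version):
        self._history.append(version)

    def rollback(self):
        if len(self._history) < 2:
            raise RuntimeError("no previous version to roll back to")
        self._history.pop()  # discard the faulty release
        return self._history[-1]


registry = ModelRegistry()
registry.promote("v1")
registry.promote("v2")
restored_version = registry.rollback()
```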

Monitoring: The Lifeblood of Production ML

A deployed model is not a static artifact — it’s a living system interacting with a dynamic world. Data distributions shift. User behavior changes. Sensors drift. Competitors alter the landscape. Without continuous monitoring, a model that was once stellar can silently degrade into uselessness.

Monitoring covers:

- Performance metrics: accuracy, precision, and recall (once ground-truth labels arrive, which is often delayed), plus latency and throughput.

- Data drift detection: identifying when input distributions diverge from the training set.

- Bias and fairness monitoring: ensuring the model continues to treat different groups equitably over time.

- Operational health: server uptime, memory usage, error rates.

This is where production ML truly differs from academic ML. The job is never done. Monitoring is not a reactive process — it’s proactive, with alerts, dashboards, and automated retraining triggers.
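For the data drift item, one widely used measure is the Population Stability Index (PSI), which compares how a feature is distributed across bins in training data versus live data. The implementation below is a sketch under stated assumptions: the bin edges are chosen by hand, empty bins are floored at a small `eps`, and the 0.2 alert level is a commonly quoted rule of thumb, not a universal standard.

```python
import math
from bisect import bisect_right


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between two samples over shared bin edges."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")

    def shares(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect_right(edges, v)] += 1
        # Floor empty bins so the logarithm below stays defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


training_values = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
live_values = [v + 5 for v in training_values]  # simulated upward shift
edges = [4, 7]  # assumed, sorted bin boundaries

stable_score = psi(training_values, training_values, edges)
shifted_score = psi(training_values, live_values, edges)
ALERT_THRESHOLD = 0.2  # rule-of-thumb level; tune for the feature at hand
```

Running a check like this per feature on a schedule turns drift from something discovered after complaints into something that raises an alert.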

The Feedback Loop and Continuous Improvement

In a mature production pipeline, monitoring feeds back into retraining. New data, labeled by users or human reviewers, is ingested into the system, cleaned, and used to update the model. This feedback loop keeps the model relevant, adapts it to changing conditions, and continually improves performance.

Some pipelines run this retraining automatically on a schedule; others trigger it based on drift thresholds or performance drops. The challenge is balancing agility with stability — updating often enough to stay sharp, but not so often that the system becomes unstable.
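These triggers can be combined into a single decision function. The thresholds below (a 7-day cooldown, a 0.2 drift limit, a 0.05 allowed metric drop, a 30-day maximum age) are placeholder assumptions meant to show the structure, not recommended values.

```python
def should_retrain(
    days_since_training,
    drift_score,
    current_metric,
    baseline_metric,
    *,
    min_days_between=7,
    drift_threshold=0.2,
    max_metric_drop=0.05,
    max_age_days=30,
):
    """Return (decision, reason) for whether to start a retraining run."""
    # Stability first: never retrain more often than the cooldown allows.
    if days_since_training < min_days_between:
        return False, "cooldown"
    if drift_score > drift_threshold:
        return True, "drift"
    if baseline_metric - current_metric > max_metric_drop:
        return True, "performance_drop"
    if days_since_training >= max_age_days:
        return True, "schedule"
    return False, "healthy"
```

The cooldown check comes first on purpose: it is the piece that keeps an agile pipeline from thrashing when a noisy metric crosses a threshold several days in a row.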

Security, Ethics, and Trust

Production ML operates in the real world, where stakes are high and mistakes have consequences. That means security and ethics can’t be afterthoughts. Models can be attacked — through adversarial inputs designed to fool them, or through data poisoning during training. Pipelines must include safeguards, from input validation to model hardening techniques.
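Input validation is the simplest of these safeguards to illustrate. The sketch below checks that every expected field is a number within an allowed range and rejects unexpected fields. The schema, its ranges, and the function name are assumptions; checks like these catch malformed or absurd inputs but do not by themselves stop carefully crafted adversarial examples that stay within range.

```python
def validate_request(payload, schema):
    """Return a list of problems; an empty list means the payload is acceptable."""
    errors = []
    for name, (low, high) in schema.items():
        value = payload.get(name)
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            errors.append(f"{name}: expected a number")
        elif not low <= value <= high:  # also rejects NaN, which fails comparisons
            errors.append(f"{name}: outside [{low}, {high}]")
    for name in sorted(set(payload) - set(schema)):
        errors.append(f"{name}: unexpected field")
    return errors


# Hypothetical schema: field name -> (minimum, maximum).
SCHEMA = {"age": (0, 120), "monthly_spend": (0.0, 100000.0)}

good = validate_request({"age": 35, "monthly_spend": 42.5}, SCHEMA)
bad = validate_request(
    {"age": -4, "monthly_spend": float("nan"), "admin": True}, SCHEMA
)
```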

Ethically, production ML teams carry the responsibility of ensuring their models don’t perpetuate harm. This means auditing for bias, explaining decisions to affected users when possible, and being transparent about limitations. Regulations such as GDPR and the EU AI Act are turning some of these practices from good advice into legal obligations, depending on jurisdiction and use case.

The Art of Building for Scale

A final truth: a production ML pipeline is not just a collection of steps — it’s an ecosystem. It must be scalable, both in technical terms (handling larger data volumes, more requests) and in human terms (onboarding new team members, adapting to new problem domains).

This requires thoughtful architecture: modular components that can evolve independently, clear documentation that outlives individual engineers, and tooling that empowers data scientists and engineers alike.

At its best, a production ML pipeline is like a well-tended garden. Data flows through it like water, models bloom, and users reap the harvest. But it needs constant care — pruning, fertilizing, and occasionally redesigning entire sections to meet the needs of the future.

The Journey Never Ends

From the first spark of an idea to the hum of servers serving predictions in milliseconds, building a production ML pipeline is a journey of transformation — for the data, for the model, and for the team itself.

It demands the precision of an engineer, the curiosity of a scientist, and the adaptability of a storyteller who knows the ending will keep changing. The reward is not just a working system, but the quiet satisfaction of knowing that somewhere, a user’s life just got a little better because of the invisible architecture you built.