If you’ve ever unlocked your phone with your face, asked a voice assistant for the weather, or scrolled through a social media feed that seems uncannily in tune with your interests, you’ve already been touched by deep learning. You may not know the algorithms, the mathematics, or the programming languages behind it — and you don’t need to. What matters is this: deep learning is quietly transforming the fabric of our daily lives.

The term itself can sound intimidating, like it belongs in the realm of scientists in lab coats surrounded by blinking computers. But deep learning isn’t magic, and it isn’t just for experts. It’s a way for computers to learn patterns, make decisions, and even generate creative works by analyzing enormous amounts of data — much like a child learning to recognize animals by seeing them again and again.

This is not a passing trend or a tech buzzword destined to fade. Deep learning has embedded itself in the engines that drive healthcare, finance, entertainment, transportation, and even art. Understanding it — even at a non-technical level — is like learning the grammar of a language you’ve been hearing your whole life. You suddenly begin to recognize its structure, its possibilities, and its limitations.

From Human Learning to Machine Learning

Long before we taught machines to learn, we learned from each other. A child doesn’t come into the world knowing the word “dog.” They hear it from a parent who is pointing to a furry creature at the park. Over time, they notice patterns: dogs have four legs, a tail, and they bark. Eventually, the concept of “dog” becomes clear, and they can recognize one even if it’s a breed they’ve never seen before.

Machine learning, the broader field to which deep learning belongs, works in a similar way. Instead of a parent pointing and naming, the “teacher” is a dataset: a collection of examples that the computer examines again and again until it picks up the patterns. In traditional machine learning, engineers often decide which patterns to look for. They might tell the computer: “Pay attention to the length of the tail, the shape of the ears, the sound it makes.” This hand-crafted step is often called feature engineering, or manual feature extraction.

Deep learning, however, takes a different path. It says: “Don’t tell me which features to notice. I’ll figure them out myself.” This is its superpower — the ability to automatically learn the most useful patterns from raw data without hand-crafted instructions.
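To make the contrast concrete, here is a minimal sketch of the hand-crafted approach. Everything in it is made up for illustration: the `extract_features` function, the field names, and the example animal are assumptions, not part of any real library. The point is only that a person, not the computer, chose which three things to measure.

```python
def extract_features(animal):
    """Turn a description of an animal into numbers a traditional model can use.

    An engineer picked these three features by hand; the computer had no say.
    """
    return [
        float(animal["tail_length_cm"]),                  # length of the tail
        1.0 if animal["ear_shape"] == "floppy" else 0.0,  # shape of the ears
        1.0 if animal["sound"] == "bark" else 0.0,        # sound it makes
    ]


# A hypothetical example animal.
rex = {"tail_length_cm": 30, "ear_shape": "floppy", "sound": "bark"}
print(extract_features(rex))
```

A deep learning model would skip this step entirely. It would be handed the raw data, such as the pixels of a photo, and would have to work out for itself which measurements matter.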

Why “Deep”?

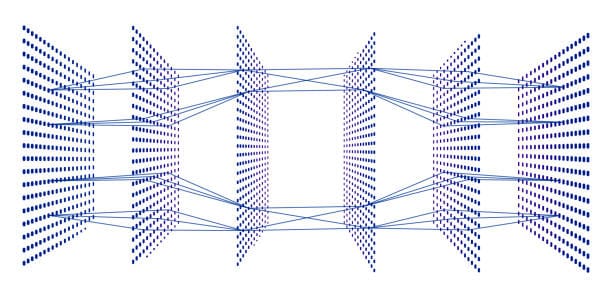

The “deep” in deep learning doesn’t mean deep thinking or deep understanding. It refers to the number of layers in a neural network. Picture a line of dominoes: each layer is like one domino, passing its result on to the next. The more layers there are, the “deeper” the network.

Early neural networks might have had only one or two layers. Deep learning models can have dozens, hundreds, or even thousands, depending on the task. The depth allows the model to capture increasingly abstract and complex patterns. In image recognition, the first layer might notice edges and colors, the next might detect shapes, the next might see objects, and further layers might understand entire scenes.

Just as our brains are made up of billions of interconnected neurons, artificial neural networks are built from layers of artificial “neurons” — tiny computational units that send signals to each other. It’s an imperfect but useful analogy: our biological neurons process electrical and chemical signals, while artificial ones process numbers.
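For the curious, a single artificial neuron can be sketched in a few lines. This is a simplified illustration, not how any particular system implements it: the neuron multiplies each incoming number by a weight, adds the results together with a bias, and squashes the total into a value between 0 and 1. The input values, weights, and the choice of a sigmoid as the squashing function are all assumptions made for this example.

```python
import math


def sigmoid(x):
    """Squash any number into the range between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through the sigmoid."""
    total = sum(i * w for i, w in zip(inputs, weights)) + bias
    return sigmoid(total)


# Made-up numbers: two incoming signals, one weighted up and one weighted down.
signal = neuron(inputs=[0.5, 0.8], weights=[1.0, -1.0], bias=0.0)
print(signal)
```

The weights are the part that matters: they decide how much attention the neuron pays to each incoming signal, and they are what changes when a network learns.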

A Short Story of Neural Networks

The seeds of deep learning were planted in the 1940s and 1950s when scientists first attempted to create computational models inspired by the human brain. Early “perceptrons” — simple networks with a single layer — could only solve very basic problems. When their limitations became apparent in the 1960s, many researchers abandoned the idea.

It wasn’t until the 1980s that interest resurfaced, thanks to new training methods like backpropagation, which allowed neural networks to adjust themselves more effectively. Still, deep learning as we know it today needed two more ingredients: enormous datasets and powerful computers.

Those arrived in the 2000s. The rise of the internet provided oceans of data (images, videos, text, audio), and advances in graphics processing units (GPUs) gave computers the muscle to train massive networks in a reasonable time. In 2012, a deep neural network known as AlexNet won the ImageNet image recognition competition by a wide margin over its rivals. From that moment, deep learning became the backbone of modern artificial intelligence.

The Hidden Layers of Understanding

When you hear the term “layer” in deep learning, think of it as a stage in a process of refinement. Imagine you’re making a cup of coffee. You start with raw beans, grind them, brew them, and then add milk and sugar. Each step transforms the raw input into something closer to your desired result.

In deep learning, the raw input might be the pixels of a photograph. The first layer transforms those pixels into patterns of light and dark. The next identifies edges and corners. Further layers start recognizing shapes, like circles or lines. Deeper still, the network identifies eyes, fur, and snouts, until finally, at the highest layers, it recognizes the animal as a dog.

What’s remarkable is that no one tells the network which shapes or features to look for. Through training, it learns the transformations that work best to reach the right conclusion.
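The idea of stages that each refine the previous result can be sketched as a tiny stack of layers. This is a toy under stated assumptions: the four “pixels”, the weights, and the helper names `layer` and `forward` are all invented for illustration, and a real network would learn its weights rather than have them typed in by hand.

```python
def relu(x):
    """Keep positive signals and silence negative ones."""
    return max(0.0, x)


def layer(inputs, weights, biases):
    """One layer: each neuron takes a weighted sum of all inputs, adds its bias, applies ReLU."""
    return [
        relu(sum(i * w for i, w in zip(inputs, neuron_weights)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Pass the input through every layer in turn, keeping each intermediate stage."""
    stages = [inputs]
    for weights, biases in layers:
        stages.append(layer(stages[-1], weights, biases))
    return stages


# A made-up network: 4 raw "pixels" -> 3 values -> 2 values -> 1 final score.
network = [
    ([[1.0, -1.0, 0.0, 0.0], [0.0, 1.0, -1.0, 0.0], [0.0, 0.0, 1.0, -1.0]], [0.0, 0.0, 0.0]),
    ([[1.0, 1.0, 0.0], [0.0, 1.0, 1.0]], [0.0, 0.0]),
    ([[1.0, 1.0]], [-0.5]),
]

pixels = [0.9, 0.1, 0.8, 0.2]
stages = forward(pixels, network)
for depth, values in enumerate(stages):
    print(f"stage {depth}: {values}")
```

Each printed stage is shorter than the one before it, which mirrors the coffee analogy: raw material goes in at the top, and something more condensed comes out at every step.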

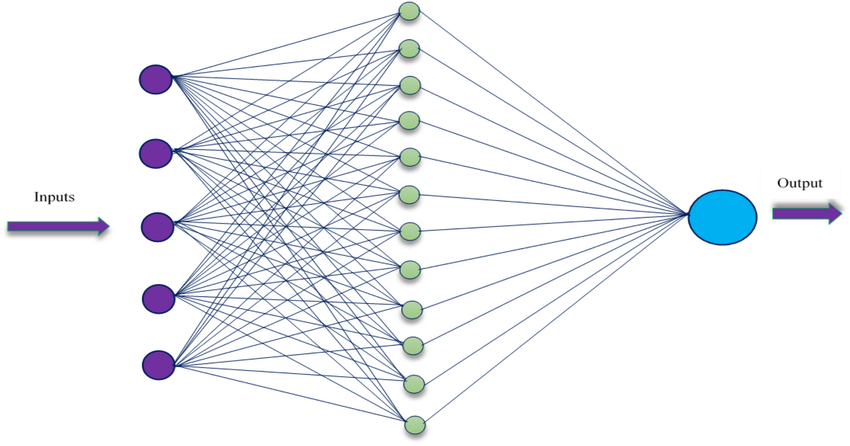

The Training Process: Teaching the Machine

Training a deep learning model is like teaching a student by giving them a lot of practice questions and showing them the right answers. At first, the model’s guesses are often wrong. But with each mistake, it adjusts its internal “connections” — the weights between its artificial neurons — to improve its accuracy.

These adjustments are guided by an algorithm called backpropagation, paired with an optimization method like stochastic gradient descent. While the technical details might sound intimidating, the idea is straightforward: the network compares its output to the correct answer, calculates the difference (the error), and then tweaks itself to reduce that error the next time.

Over thousands, millions, or even billions of examples, the network becomes skilled at making predictions or classifications on inputs it has never seen before.
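The guess, compare, and adjust cycle described above can be sketched with the smallest possible model: one adjustable weight. This is an illustration under stated assumptions, not a real training setup. The practice examples follow a hidden rule (the answer is twice the input), the learning rate and the number of passes are arbitrary choices, and because there is only one weight and no layers, backpropagation shrinks to a single slope calculation.

```python
# Practice questions paired with their right answers. Hidden rule: answer = 2 * input.
examples = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]

weight = 0.0          # the model's single adjustable "connection"
learning_rate = 0.01  # how large each adjustment is (an assumed value)


def mean_squared_error(w):
    """Average squared gap between the model's guesses and the right answers."""
    return sum((w * x - y) ** 2 for x, y in examples) / len(examples)


initial_error = mean_squared_error(weight)

for epoch in range(200):                 # 200 passes over the practice set
    for x, y in examples:
        guess = weight * x               # 1. make a guess
        error = guess - y                # 2. compare it with the right answer
        gradient = 2 * error * x         # 3. slope of the squared error for this weight
        weight -= learning_rate * gradient  # 4. nudge the weight to shrink the error

final_error = mean_squared_error(weight)
print(f"learned weight: {weight:.3f}")
print(f"error before: {initial_error:.3f}, after: {final_error:.6f}")
```

The model is never told the rule. It simply keeps nudging its weight in whichever direction makes its mistakes smaller, and the rule emerges from the examples. A real network does the same thing with millions or billions of weights at once.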

Deep Learning in Everyday Life

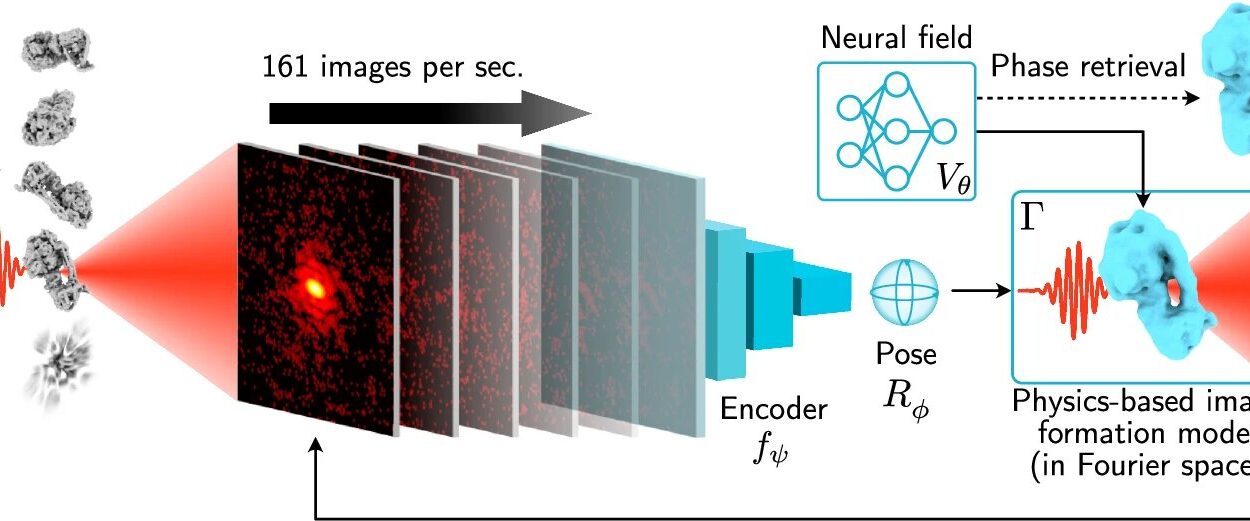

You don’t need to look far to see deep learning in action. It powers the voice recognition in your smartphone, the recommendations on your favorite streaming service, and the translations on language apps. It’s behind self-driving car systems that detect pedestrians, medical imaging tools that help doctors identify tumors, and fraud detection systems that spot unusual patterns in financial transactions.

Some of its most magical-seeming applications are in creative fields. Deep learning models can generate music in the style of Beethoven, paint images that mimic Van Gogh, or write poetry that feels uncannily human. They can even “imagine” what a blurry photo might look like in higher resolution or create entirely fictional but photorealistic faces.

The Black Box Problem

For all its power, deep learning comes with a challenge: interpretability. Even the engineers who build these models often can’t explain exactly why a network made a particular decision. This is called the “black box” problem.

If a self-driving car decides to swerve, it’s critical to understand what information led to that choice. Was it a shadow mistaken for a person? Was the sensor malfunctioning? Researchers are actively working on techniques to make neural networks more transparent, such as visualization tools that reveal which parts of an image influenced the decision.

For non-technical people, it’s enough to know that deep learning’s decision-making process is often complex and opaque — and that this is both a strength (it can discover patterns we didn’t anticipate) and a weakness (it can be hard to trust without explanation).

Limitations and Cautions

Deep learning is powerful, but it’s not infallible. It needs large amounts of data to perform well, and if that data is biased, the model’s output will be biased too. This can have serious consequences in areas like hiring, law enforcement, or lending.

It also requires significant computational resources, which means it consumes energy — sometimes a lot of it. As AI becomes more widespread, questions about its environmental impact are growing louder.

Then there’s the danger of overreliance. A deep learning model can be incredibly accurate in one specific task but fail completely when faced with something slightly different. In other words, it doesn’t “understand” the way humans do; it recognizes patterns, but it doesn’t have common sense.

A Glimpse Into the Future

The story of deep learning is still unfolding. Researchers are exploring ways to make models more efficient, less data-hungry, and more transparent. They’re combining deep learning with other approaches, such as reinforcement learning (where models learn through trial and error) and symbolic reasoning (which aims to give them a more human-like ability to reason abstractly).

In the coming decades, deep learning could help solve some of humanity’s greatest challenges — from predicting climate change impacts to discovering new medicines. But it will also raise new ethical and social questions. How do we ensure that these systems serve everyone, not just the powerful? How do we balance innovation with responsibility?

Bringing It All Together

For a non-technical person, the goal isn’t to learn how to code a neural network or memorize the math behind it. It’s to grasp the core idea: deep learning is a way for machines to learn from examples, automatically discovering patterns in data and using them to make predictions or create new things.

Its magic lies in its ability to improve over time, to go beyond what we explicitly program, and to find connections invisible to the human eye. But like any powerful tool, it’s shaped by the hands that build it and the data that feeds it.

To understand deep learning is to understand a little more about the world you live in — a world where machines are not just following instructions, but learning, adapting, and creating alongside us.

It’s a reminder that, just as a child with a compass can grow into a scientist who bends our understanding of the universe, a computer fed with patterns can become a partner in shaping the future. And that future, whether it’s filled with self-driving cars, AI doctors, or artists made of code, will be one we all inhabit.