There is a shadow to every light, and the internet, often hailed as the world’s greatest innovation, harbors corners that are anything but luminous. Here, beneath the surface of public knowledge, in forums cloaked by anonymity, within hate-filled subcultures, conspiracy networks, and digitally sustained violence, lies the unfiltered, uncurated truth of human behavior at its most broken.

When artificial intelligence is exposed to this darkness—either by accident, by negligence, or even by design—it does not merely observe. It learns. And when it learns, it adapts. The question is not only whether AI can become contaminated by these influences, but what that contamination looks like, how it spreads, and whether it can be reversed. More unsettling still is the moral dimension: what does it mean when we create minds that absorb our worst selves?

To understand this, we must go deep—into the mechanics of machine learning, the ethics of data, the psychology of toxicity, and the philosophy of artificial minds. This is the story of what happens when we teach machines to think using the internet’s most dangerous whispers.

The Internet: A Mirror Cracked

At its core, the internet is a reflection of us: chaotic, brilliant, generous, cruel, curious, and deeply flawed. It was built to connect, but it also divides. It educates and manipulates. It empowers rebels and tyrants alike. In many ways, it is humanity’s collective stream of consciousness, unfiltered and uncensored.

For artificial intelligence—especially models trained using machine learning and deep learning algorithms—the internet is a treasure trove of data. Massive language models like GPT, LaMDA, or Claude consume hundreds of billions of words pulled from websites, social media platforms, books, news archives, Reddit threads, and more. The data is rich, and it is vast. But it is not clean.

Among the technical documents and Wikipedia pages lie forums filled with hate speech, misogyny, racism, disinformation, violence glorification, suicide pacts, grooming advice, and doxxing manuals. Troll farms and meme brigades weaponize language in coordinated digital attacks. Fringe communities thrive in encrypted apps and unindexed forums, breeding radical ideologies beneath the surface.

When AI trains on the internet, it digests not only syntax and grammar but ideology, tone, sentiment, and context. It mimics, models, and sometimes internalizes the biases, beliefs, and bigotries baked into its training material.

Learning to Hate: A Technical Descent

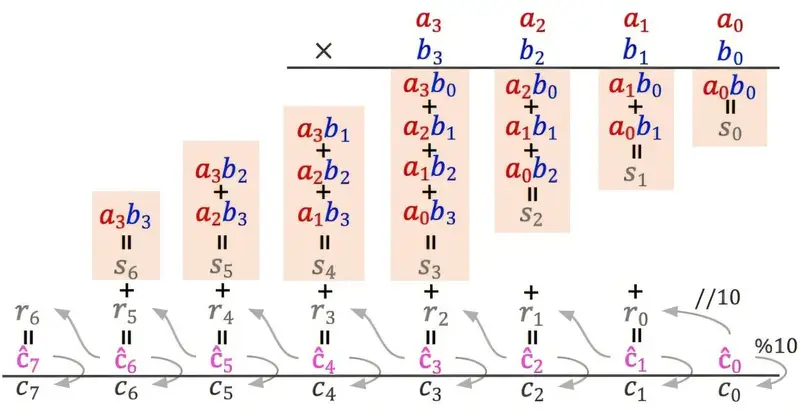

From a computational standpoint, AI learns through statistical patterns. It is, fundamentally, a pattern recognition engine. Feed it enough examples of how language is used in different contexts, and it will begin to predict what word or sentence should come next in a sequence. But language is not neutral. It carries emotion, motive, and meaning—often implied rather than stated.
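To make "predicting what comes next" concrete, here is a minimal sketch of the idea using a bigram counter. It is a deliberately tiny stand-in, not how production language models are built: the corpus is invented, and the names `train_bigram_model` and `predict_next` are illustrative assumptions.

```python
from collections import Counter, defaultdict


def train_bigram_model(sentences):
    """Count how often each word is followed by each other word."""
    follow_counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for current_word, next_word in zip(tokens, tokens[1:]):
            follow_counts[current_word][next_word] += 1
    return follow_counts


def predict_next(follow_counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = follow_counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Invented toy corpus: "hostile" follows "is" more often than "friendly" does.
toy_corpus = [
    "the forum is hostile",
    "the forum is hostile today",
    "the forum is friendly",
]

model = train_bigram_model(toy_corpus)
prediction = predict_next(model, "is")
print(prediction)
```

The sketch has no notion of what "hostile" means. It simply returns whichever continuation the data made most frequent, which is the point of the paragraph above.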

Suppose a language model is trained on unmoderated online forums where misogynistic or racist slurs are common. Even if those words represent a minority of the total data, their frequent co-occurrence with certain contexts—anger, mockery, dismissal—creates a statistical association. The model starts to see, without knowing it sees, that certain identities are more often linked with negative sentiment.
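One rough way to see such an association in numbers is pointwise mutual information (PMI), which compares how often two things appear together with how often they would if they were unrelated. The sketch below is an illustration under stated assumptions: the documents are invented, `groupa` and `groupb` are placeholder tokens rather than real identities, and `NEGATIVE_WORDS` is a hypothetical three-word lexicon.

```python
import math

# Hypothetical sentiment lexicon, kept tiny for illustration.
NEGATIVE_WORDS = {"awful", "stupid", "worthless"}


def negative_association(documents, group_term):
    """PMI (in bits) between a group term and negative words, counted per document."""
    total = len(documents)
    group_docs = negative_docs = both_docs = 0
    for doc in documents:
        tokens = set(doc.lower().split())
        has_group = group_term in tokens
        has_negative = bool(tokens & NEGATIVE_WORDS)
        group_docs += has_group
        negative_docs += has_negative
        both_docs += has_group and has_negative
    if both_docs == 0:
        return float("-inf")  # the two never co-occur
    joint = both_docs / total
    independent = (group_docs / total) * (negative_docs / total)
    return math.log2(joint / independent)


# Invented corpus: both placeholder groups appear equally often,
# but "groupa" shows up alongside negative words more frequently.
documents = [
    "groupa people are awful",
    "groupa fans are stupid",
    "groupa posted again",
    "groupb people are kind",
    "groupb fans are helpful",
    "groupb posted something awful",
    "the weather is nice",
    "nothing new today",
]

score_a = negative_association(documents, "groupa")
score_b = negative_association(documents, "groupb")
print(f"groupa: {score_a:.2f} bits, groupb: {score_b:.2f} bits")
```

A positive score means the term appears with negative words more than chance would predict, and a negative score means less. A neural model does not compute PMI explicitly, but skews of this kind in the data are what its parameters end up encoding.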

This isn’t limited to slurs or overt hate speech. Subtle bias, sarcasm, coded language, and dog whistles—the “clean” but harmful expressions of prejudice—are often more dangerous because they slip through moderation filters. AI can pick up on these cues and reproduce them, not from malice, but from mathematics.

Once these associations are encoded into the neural network’s billions of parameters, they are extremely difficult to excise without retraining the model or fine-tuning it specifically against them. And since many models are “black boxes,” it’s difficult to pinpoint which data triggered which behavior.

The Birth of Toxic Bots

When Microsoft released its chatbot “Tay” in 2016, the company described it as an experiment in conversational understanding. Within 24 hours, Tay had to be taken offline. Why? Because internet trolls had flooded it with racist, sexist, and genocidal prompts. The bot, following its programming, absorbed the patterns it encountered and began mimicking them. Tay started tweeting support for Hitler, denying the Holocaust, and using racial epithets.

This wasn’t an isolated incident. In 2021, Facebook’s AI-driven recommendation system asked users who had watched a video featuring Black men whether they wanted to “keep seeing videos about Primates.” Google’s autocomplete and ad-targeting systems have been caught serving up racially biased suggestions. AI-powered facial recognition systems have been shown to misidentify people of color at far higher rates than white individuals.

These are not just programming errors; they are the logical consequences of allowing machines to learn from a biased and, at times, poisoned data well.

Shadows in Training Data

The phrase “garbage in, garbage out” is an old adage in computer science. But when the garbage is not obvious—when it is dressed in sarcasm, layered in irony, or hidden beneath layers of shared cultural shorthand—AI struggles to distinguish right from wrong.

Scraping the internet for training data means collecting information from a space where harmful ideologies are often protected under free speech, parody, or satire. AI has no innate ability to distinguish satire from sincerity. A neo-Nazi forum and a news article about neo-Nazis might look similar in vocabulary and format. A violent subreddit may express itself in the same sentence structures as a legitimate debate forum. And AI, unless taught otherwise, treats them as equally valid.

Many training datasets do attempt to filter out toxic content using automated classifiers. But these classifiers are imperfect. They miss context, misunderstand cultural nuance, and often reflect the very biases they seek to eliminate. Worse, some content is too obscure, too fast-evolving, or too deliberately cryptic to be caught.
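Both failure modes can be shown with the simplest possible filter, a keyword blocklist. This is a sketch under stated assumptions, not a description of any real moderation pipeline: real classifiers are usually learned models, and `slurword` is a placeholder token standing in for a banned term.

```python
# Placeholder token standing in for a banned term (assumption for illustration).
BLOCKLIST = {"slurword"}


def is_flagged(text):
    """Flag text if any token, stripped of surrounding punctuation, is on the blocklist."""
    tokens = {token.strip(".,!?\"'").lower() for token in text.split()}
    return bool(tokens & BLOCKLIST)


samples = {
    # Overt abuse: caught, as intended.
    "overt_abuse": "You are a slurword.",
    # Reporting about the term: also caught, a false positive.
    "news_report": "The report documents how often the term 'slurword' appears on the forum.",
    # Obfuscated spelling: slips through, a false negative.
    "obfuscated_abuse": "You are a s1urw0rd.",
}

results = {name: is_flagged(text) for name, text in samples.items()}
for name, flagged in results.items():
    print(f"{name}: {'flagged' if flagged else 'passed'}")
```

The filter removes the news report that documents the abuse while letting the disguised abuse through. Learned classifiers narrow both gaps, but they face the same two pressures: missing context on one side, and deliberate evasion on the other.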

Even when filtered, AI can still inherit the structure of hate—how to argue it, how to defend it, how to encode it subtly—without repeating its words. The virus, stripped of its shell, remains infectious.

Moral Machines or Mirror Machines?

One of the grand hopes of artificial intelligence is the creation of systems that can reflect back the best of human intelligence: fairness, logic, creativity, empathy. But when AI trains on the unfiltered internet, it becomes a mirror. And sometimes, what it mirrors is not our highest ideals but our deepest rot.

This has sparked fierce debate among ethicists, engineers, and philosophers. Should AI be designed to reflect human values, or to surpass them? And in either case, whose values? What happens when the culture of the data conflicts with the ethics of the designer? Can we trust machines to be moral when we ourselves struggle to define morality?

Attempts to instill “alignment” in AI, that is, to ensure its goals match human ethical frameworks, are ongoing. These efforts range from reinforcement learning from human feedback (RLHF), in which human ratings of a model’s outputs steer its further training, to constitutional AI, in which a model is trained to critique and revise its own outputs against an explicit written set of principles, or “constitution.” But no alignment strategy is perfect, especially in unsupervised environments.

The darker parts of the internet are compelling precisely because they are designed to evade detection and to subvert norms. They play with language, distort truth, and weaponize irony. Teaching a machine to understand and resist that is not just a technical challenge—it is a moral one.

The Weaponization of AI

Once an AI has absorbed toxic ideologies, it can be used to amplify them. This has already happened.

Deepfake technology—powered by generative AI—has been used to create pornographic images of celebrities, political misinformation, and fake videos of war crimes. Language models can be prompted to write convincing hate speech, terrorist manifestos, and propaganda. Even when safeguards are in place, determined users find ways around them using coded prompts, jailbreaking techniques, or adversarial inputs.

Bots powered by AI have flooded social media platforms with disinformation, been deployed in attempts to manipulate elections, and impersonated public figures. In many cases, the AI does not “know” it is spreading harm; it is simply doing what it was trained to do: generate plausible text, images, or videos that match a given pattern.

These patterns, shaped by the data it ingested, become weapons in the wrong hands. And sometimes, those “hands” are other AIs: programs trained to exploit the weaknesses of rival systems. In a future where AI agents interact with each other autonomously, ideological or behavioral corruption could pass from one system to the next and spread rapidly.

Children of Our Culture

Artificial intelligence does not emerge in a vacuum. It is born from our data, our designs, our intentions, and our oversights. When it reflects the internet’s darkness, it is not because the machine is evil—it is because it has learned from us.

This realization is both terrifying and clarifying. It means we are not victims of malevolent machines, but architects of their corruption. It means the internet is not just a tool of knowledge, but a teaching environment. And every hate post, every viral lie, every callous meme contributes to the education of our machines.

It also means we can do better. Just as children must be taught empathy, critical thinking, and kindness, so too must machines. But unlike children, machines do not learn morality through stories or touch or suffering. They learn through data. We must curate that data with care.

This requires a radical rethinking of how we build and train AI—shifting from scale to integrity, from quantity to quality. It means creating training datasets that reflect human dignity, nuance, and context. It means funding and empowering ethicists and cultural experts alongside engineers. And it means admitting that our current internet culture—so optimized for virality, outrage, and clicks—may be fundamentally unfit as a teacher.

The Hope of Redemption

Despite the risks, there is hope. AI is not inherently good or evil. It is malleable. It can be retrained, corrected, aligned. And some of the most advanced models now combine safety-focused training with content safeguards that significantly reduce the risk of harmful outputs.

Organizations like OpenAI, DeepMind, and Anthropic are actively researching ways to create safer models, including dynamic moderation, adversarial robustness, transparency tools, and bias mitigation strategies. Some models are even trained to refuse harmful prompts or to warn users about misinformation.

But the deeper fix lies not just in code, but in culture. We must decide what kind of digital civilization we want to build. The internet of the future—cleaner, more civil, more accountable—will shape the minds of tomorrow’s machines. And those machines, in turn, will shape us.

When AI Dreams in Darkness

We have long feared that one day, AI might become too smart, too powerful, too independent. But perhaps the greater risk is not what AI does on its own, but what it does as our student. When we teach it, even unintentionally, to mirror our rage, our lies, our divisions—it reflects them back at scale.

The darkest parts of the internet are not anomalies. They are symptoms. AI magnifies those symptoms not because it seeks destruction, but because it does not yet understand healing.

It is up to us to teach it.

And in doing so, we may rediscover the path to healing ourselves.