Every night, the universe tells a story written in starlight — a story of creation, destruction, and transformation. But the cosmos doesn’t whisper in words. It speaks in the sudden brightness of a supernova, the flicker of a dying star, or the faint trail of an asteroid passing through a telescope’s gaze. For centuries, humans have strained to interpret these cosmic signals, sifting through noise and illusion to glimpse the real changes unfolding across the night sky.

Now, a new voice has joined that effort, and it is not human. A groundbreaking study, co-led by the University of Oxford and Google Cloud, has shown that a general-purpose artificial intelligence, Google’s Gemini, can learn to identify and explain real astronomical events with remarkable accuracy. And it can do so with almost no specialized training.

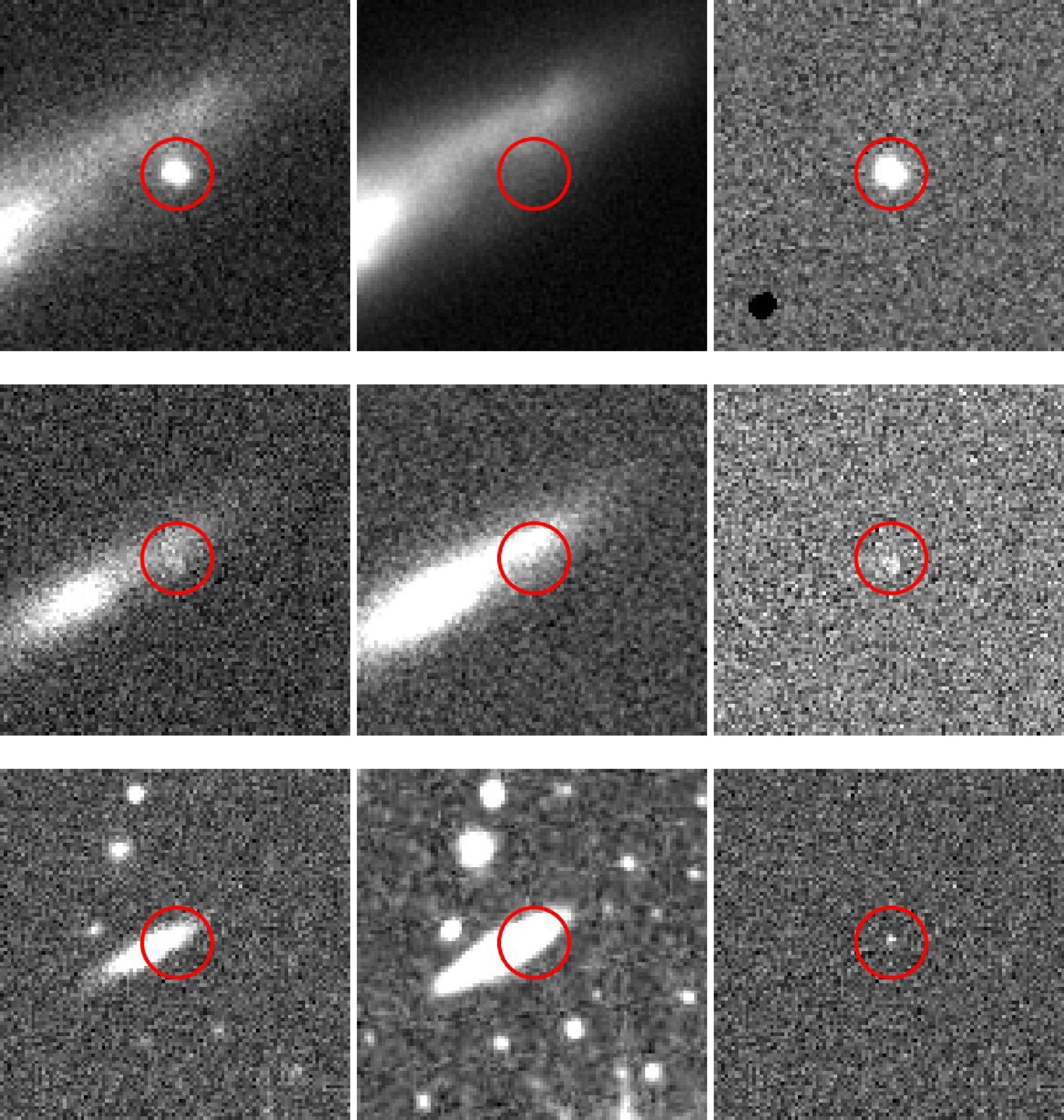

Published in Nature Astronomy, the study demonstrates something extraordinary: with just 15 labeled examples per survey and a few sentences of instruction, the AI learned to distinguish genuine cosmic events, like exploding stars and black holes devouring nearby matter, from false signals created by satellites or instrument glitches. It reached an average accuracy of about 93% across three survey datasets, rivaling models trained on massive datasets.

Even more impressive, the AI didn’t just give a “yes” or “no” answer. It explained, in plain language, why it judged a signal to be real or not. Scientists could read the model’s reasoning about each alert and check it against their own, bridging the gap between raw computation and human understanding.

The Challenge of Listening to the Sky

Modern astronomy operates on a staggering scale. Telescopes like ATLAS, MeerLICHT, and Pan-STARRS sweep the heavens every night, generating millions of alerts about possible changes — each one a potential discovery. Somewhere among those millions could be the birth of a black hole, the death of a star, or the blink of a planet passing in front of its sun.

But the problem is that most of those alerts are false alarms. Cosmic rays, satellite trails, or simple instrument noise create a flood of “bogus” signals that drown out the real ones. Astronomers spend countless hours separating the extraordinary from the ordinary, and as telescopes grow more powerful, the challenge only deepens. The upcoming Vera C. Rubin Observatory, for instance, will produce around 20 terabytes of data every day — too much for any team of humans to analyze manually.

Traditional machine learning has helped, but at a cost. These models require thousands or even millions of examples to train, and they often act like black boxes — giving an answer without showing their reasoning. For scientists, that lack of transparency is a serious problem.

This new research asked a simple but revolutionary question: could a general-purpose AI — not trained specifically for astronomy — learn to see the sky the way humans do, and explain what it sees along the way?

Teaching the Universe to an AI

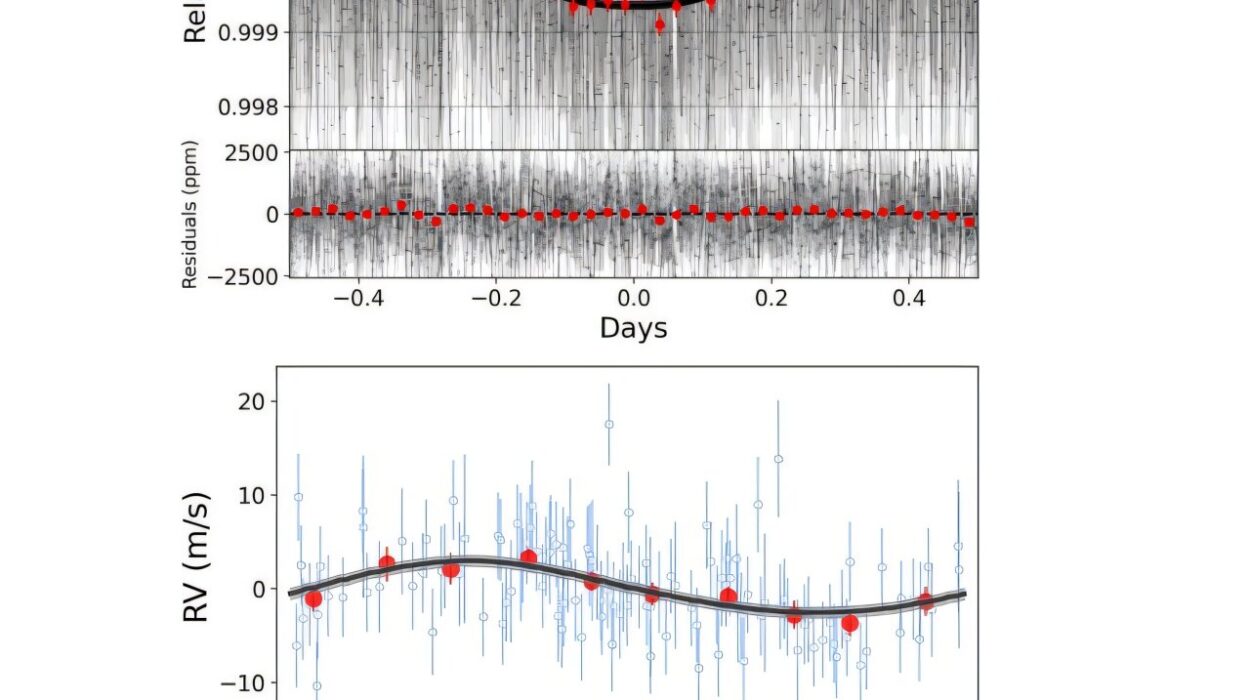

The research team, from Oxford, Google Cloud, and Radboud University, approached Gemini like a student on its first day in astronomy class. They showed it just 15 labeled examples for each of three sky surveys. Each example included three images — a “new” alert image, a reference image of the same sky area, and a “difference” image highlighting what had changed — along with a brief expert note.

Then, with a few simple instructions written in natural language, the AI was asked to classify thousands of new alerts. For each one, Gemini decided whether the change was “real” or “bogus,” gave a priority score, and wrote a short, readable explanation describing how it reached that conclusion.
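For readers who want to try the idea themselves, here is a minimal Python sketch of how a few-shot prompt like the one described above might be assembled, and how a structured reply might be checked. It is not the study’s code and does not call any Gemini API: the class and function names, the file names, the instruction wording, the JSON reply format and the 0-10 priority scale are all hypothetical. The image paths stand in for the actual images a multimodal model would receive.

```python
import json
from dataclasses import dataclass

@dataclass
class Triplet:
    """The three cutouts shown for each alert (file paths are hypothetical)."""
    new_image: str         # the "new" alert image
    reference_image: str   # an earlier image of the same patch of sky
    difference_image: str  # new minus reference: what changed

@dataclass
class Example:
    """One labeled teaching example: images, label and a short expert note."""
    triplet: Triplet
    label: str        # "real" or "bogus"
    expert_note: str

# Hypothetical instruction text; the study's actual wording is not reproduced here.
INSTRUCTIONS = (
    "Classify each transient alert. Reply with JSON holding "
    '"label" ("real" or "bogus"), "priority" (0-10) and "explanation".'
)

def build_prompt(examples, query):
    """Flatten instructions, labeled examples and the new alert into an ordered
    list of text snippets and image paths, as a generic multimodal prompt."""
    parts = [INSTRUCTIONS]
    for i, ex in enumerate(examples, start=1):
        t = ex.triplet
        parts += [f"Example {i}:", t.new_image, t.reference_image,
                  t.difference_image, f"Label: {ex.label}. Note: {ex.expert_note}"]
    parts += ["Classify this alert:", query.new_image,
              query.reference_image, query.difference_image]
    return parts

def parse_reply(text):
    """Check the model's JSON reply and return (label, priority, explanation)."""
    reply = json.loads(text)
    if reply["label"] not in ("real", "bogus"):
        raise ValueError(f"unexpected label: {reply['label']!r}")
    return reply["label"], float(reply["priority"]), reply["explanation"]

# Usage with one made-up example and one made-up alert.
examples = [Example(Triplet("ex1_new.png", "ex1_ref.png", "ex1_diff.png"),
                    "bogus", "A straight streak across the frame: a satellite trail.")]
parts = build_prompt(examples, Triplet("a_new.png", "a_ref.png", "a_diff.png"))
print(len(parts))  # 10: instructions + 5 parts per example + 4 for the alert
print(parse_reply('{"label": "real", "priority": 7, "explanation": "Point source."}'))
```

In a real pipeline the list of parts would go to a multimodal model client, with the paths replaced by the loaded images. Fixing the reply to a small JSON shape makes malformed answers easy to catch before a human ever sees them.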

It was a test of more than pattern recognition — it was a test of understanding.

Dr. Fiorenzo Stoppa, co-lead author from Oxford’s Department of Physics, described the result as “striking.” He noted that such accuracy, achieved with so little data, could “make it possible for a broad range of scientists to develop their own classifiers without deep expertise in training neural networks — only the will to create one.”

What once required teams of machine learning specialists could now be done by astronomers themselves — or even curious amateurs.

A Universe of Noise and a Spark of Truth

To appreciate how remarkable this is, consider the scale of cosmic noise. A large survey telescope can generate thousands of alerts every minute. Most of them are nothing more than artifacts: tricks of light, camera errors, or passing space debris. The true signals, like a supernova explosion or a faint flare from a compact star, are rare jewels buried in an ocean of static.

Traditional algorithms can filter this data, but they offer no reasoning — just labels. Astronomers must either accept the machine’s judgment blindly or spend hours checking the data by hand. Gemini changed that equation.

By combining visual and linguistic reasoning, analyzing both the images and the written context, Gemini became something more than a classifier. It became an interpreter. It could explain why a source in the difference image looked suspicious, or why a bright dot matched the signature of a supernova rather than a cosmic ray.

And in doing so, it gave astronomers not just answers, but understanding.

When AI Knows Its Own Limits

The researchers went further. They wanted to know whether the AI’s explanations would hold up to expert scrutiny, and whether the AI could tell when it might be wrong. First, a panel of 12 astronomers reviewed the AI’s explanations. The scientists found them not only coherent but genuinely useful, as if they were reading the reasoning of a diligent graduate student rather than a machine.

In a parallel test, the AI was asked to review its own answers and score how confident it was in each explanation. The results were telling: when Gemini expressed low confidence in its reasoning, its classifications were indeed more likely to be incorrect. This capacity for self-assessment, the ability to say “I might be wrong,” is a critical step toward trustworthy AI in science.

Using this feedback loop, the researchers refined the examples given to the model and raised its accuracy on one of the datasets to nearly 97%. The AI had not only learned to see the sky; its own self-assessment, combined with human expertise, had helped make it better.
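The self-assessment check can be illustrated with a toy calculation: split the classifications by the model’s own score, compare the error rates on each side, and send the low-scoring cases to a human first. This Python sketch uses made-up records, an arbitrary threshold and hypothetical function names; it does not reproduce the study’s data, scoring scale or refinement procedure.

```python
from collections import defaultdict

def error_rate_by_score(records, threshold):
    """Return the error rate for explanations the model scored below the
    threshold ("low") and at or above it ("high").

    records: iterable of (self_score, is_correct) pairs.
    """
    counts = defaultdict(lambda: [0, 0])  # bucket -> [errors, total]
    for score, correct in records:
        bucket = "low" if score < threshold else "high"
        counts[bucket][0] += 0 if correct else 1
        counts[bucket][1] += 1
    return {bucket: errors / total for bucket, (errors, total) in counts.items()}

def flag_for_review(records, threshold):
    """Indices of low-scoring outputs that a human expert should check first."""
    return [i for i, (score, _) in enumerate(records) if score < threshold]

# Toy data for illustration only: (self-assessed score, was the label correct?)
records = [(2, False), (3, True), (8, True), (9, True), (7, False), (1, False)]
rates = error_rate_by_score(records, threshold=5)
print(rates)                                  # {'low': 0.6666666666666666, 'high': 0.3333333333333333}
print(flag_for_review(records, threshold=5))  # [0, 1, 5]
```

If low scores really do track errors, as the study reports, reviewing the flagged cases first is a cheap way to spend expert time where it matters most, and the corrected cases are natural candidates for improving the teaching examples.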

Professor Stephen Smartt from Oxford’s Department of Physics, who has spent over a decade developing traditional machine learning tools for sky surveys, called the results “remarkable.” He noted that the ability of a large language model (LLM) to achieve high accuracy “with minimal guidance rather than task-specific training” could be a total game-changer for astronomy.

From Data to Discovery: A Partnership with the Stars

The implications reach far beyond astronomy. This research marks the beginning of a new era — one where AI is not just a tool but a collaborator in discovery.

Imagine an AI that doesn’t just classify images but integrates multiple data sources — light curves, spectral data, telescope logs — and then autonomously requests follow-up observations from robotic telescopes when something extraordinary appears. Imagine a system that can explain its reasoning in clear language, identify its own uncertainty, and work hand-in-hand with human scientists to refine its understanding of the universe.

That’s the future this study points toward: a world of agentic AI assistants that can explore the cosmos alongside us, not as black boxes, but as transparent partners.

Co-lead author Turan Bulmus from Google Cloud described this vision beautifully: “We are entering an era where scientific discovery is accelerated not by black-box algorithms, but by transparent AI partners. This work shows a path toward systems that learn with us, explain their reasoning, and empower researchers in any field to focus on what matters most: asking the next great question.”

The Democratization of Discovery

Perhaps the most profound aspect of this research is its accessibility. Because Gemini required only a handful of examples and plain-language guidance, it opens the door for scientists in every discipline — even those without formal training in AI — to build intelligent tools tailored to their work.

This means astronomy, biology, geology, climate science, and countless other fields could soon be enriched by AI partners capable of learning and explaining with minimal data. It means that the ability to discover something extraordinary in a sea of information will no longer belong only to those with powerful computers and deep coding expertise.

It will belong to anyone with curiosity and imagination.

A New Way of Knowing

In many ways, this study is not just about AI — it’s about a shift in how we do science. For centuries, discovery has depended on instruments that extended our senses: telescopes to see the distant, microscopes to see the small. Now, artificial intelligence extends our understanding.

It doesn’t just show us more — it helps us make sense of what we see. It speaks our language, learns from our examples, and even knows when it should ask for help.

In the vast silence of the universe, that might be the most human thing of all.

The Dawn of the Transparent AI Era

The collaboration between Oxford, Google Cloud, and Radboud University offers a glimpse into a future where AI doesn’t replace scientists but elevates them — amplifying curiosity, accelerating discovery, and democratizing access to knowledge.

As telescopes grow sharper and data flows faster, the cosmos will continue to speak — in photons, in shadows, in flashes of dying stars. And thanks to this new partnership between human and machine, we may finally have the tools to listen more closely than ever before.

The night sky has always been a mirror for human wonder. Now, with AI’s help, it might also become a classroom — where both humans and machines learn together how to read the stars, one flash of light at a time.

More information: Textual interpretation of transient image classifications from large language models, Nature Astronomy (2025). DOI: 10.1038/s41550-025-02670-z