Every day, you wake up and reach for your phone. The moment you unlock it, an invisible force begins to guide you—suggesting the news you read, the videos you watch, the ads you see, and even the people you meet online. You may not notice it, but it notices you. It observes what you click, what you ignore, how long you linger, and even when you put your phone down.

This unseen presence is not human. It does not have emotions, desires, or consciousness. Yet, it quietly shapes your reality. It is artificial intelligence—AI—not the flashy humanoid robots we often imagine, but the silent algorithms that power the digital world. We rarely see them, but they are everywhere, weaving themselves into the fabric of modern life.

To ask “What is the AI we don’t see?” is to ask how invisible algorithms influence not only our choices but also our societies, economies, and even our understanding of truth.

What Exactly Are Algorithms?

At its simplest, an algorithm is a set of instructions: a recipe for solving a problem. If you follow a recipe for baking bread, you are executing an algorithm. In the digital realm, algorithms are precise, step-by-step procedures that a computer follows to turn input data into outputs, such as a sorted list, a search result, or a prediction.

Artificial intelligence algorithms go further. They learn patterns from data and adapt over time. For instance, if you watch a video on cooking pasta, YouTube’s recommendation system learns that you may also enjoy recipes for sauces, desserts, or Italian cuisine in general. Over millions of interactions, these systems build detailed models of individual preferences.
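The kind of learning described above can be illustrated with a toy “people who watched this also watched” recommender. The sketch below is a minimal Python illustration, assuming simple co-occurrence counting over hypothetical viewing histories. It is not YouTube’s actual system, and the video names and the functions `build_cooccurrence` and `recommend` are invented for this example.

```python
from collections import Counter, defaultdict
from itertools import permutations


def build_cooccurrence(histories):
    """Count how often each pair of videos appears in the same viewer's history."""
    co = defaultdict(Counter)
    for history in histories:
        # Every ordered pair of distinct videos watched by the same person.
        for a, b in permutations(set(history), 2):
            co[a][b] += 1
    return co


def recommend(co, watched, k=3):
    """Suggest the k unseen videos that most often co-occur with what was watched."""
    scores = Counter()
    for video in watched:
        scores.update(co.get(video, Counter()))
    for video in watched:
        scores.pop(video, None)  # don't recommend what the viewer already saw
    return [video for video, _ in scores.most_common(k)]


# Hypothetical viewing histories from other users.
histories = [
    ["pasta", "tomato_sauce", "tiramisu"],
    ["pasta", "tomato_sauce"],
    ["pasta", "tomato_sauce", "tiramisu"],
    ["pasta", "pesto"],
    ["football", "highlights"],
]

co = build_cooccurrence(histories)
print(recommend(co, ["pasta"], k=2))  # → ['tomato_sauce', 'tiramisu']
```

Nothing in the code knows what pasta or tiramisu is; the suggestion emerges purely from what other viewers happened to watch together.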

Traditional software follows rules that programmers write out by hand. Modern machine-learning systems instead derive much of their behavior from data: rather than being told what to do in every case, they learn to recognize patterns, infer, and predict. The more they observe, the better they tend to become at anticipating human behavior, sometimes even before we consciously know what we want.

The Hidden Infrastructure of Everyday Life

Mention AI and most people picture futuristic robots, but the reality is much more subtle. AI is not something we encounter as a separate entity. It is embedded into the very infrastructure of our lives, hiding behind every app, service, and platform.

When you check the weather forecast, computer models, increasingly aided by machine learning, predict temperature and rainfall. When you navigate with GPS, routing software recalculates your route in real time using predicted traffic. When you swipe your credit card, AI fraud-detection systems evaluate whether the transaction is legitimate. At many airports, AI-powered facial recognition confirms identities in seconds.

These systems operate silently in the background, so seamlessly integrated that we rarely pause to consider their presence. We notice electricity only when it fails, and AI has become similar—an invisible utility that fuels the digital age.

The Personalization of Reality

Perhaps the most profound influence of invisible AI lies in personalization. Social media feeds, online shopping platforms, music streaming services, and search engines do not present information randomly. They curate it for you.

This curation is not neutral. Algorithms infer your preferences (your politics, your hobbies, your fears, your moods) and filter the world through them. The search results you see on Google may differ from what your friend sees, even if you type the same query. The stories in your news feed are often ranked less by how important they are than by how likely they are to keep you engaged.
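To make the point about ranking concrete, here is a minimal Python sketch, assuming each story carries an editorial importance score and a per-user click probability estimated by some model. All titles, users, and scores are hypothetical, and real feed-ranking systems weigh many more signals than this.

```python
from dataclasses import dataclass, field


@dataclass
class Story:
    title: str
    importance: float  # hypothetical editorial importance, 0 to 1
    # Hypothetical per-user click probabilities that a model might estimate.
    predicted_engagement: dict[str, float] = field(default_factory=dict)


def rank_by_importance(stories):
    """Order stories the way an editor might: most important first."""
    return [s.title for s in sorted(stories, key=lambda s: s.importance, reverse=True)]


def rank_by_engagement(stories, user):
    """Order stories by how likely this user is to click, ignoring importance."""
    ranked = sorted(
        stories,
        key=lambda s: s.predicted_engagement.get(user, 0.0),
        reverse=True,
    )
    return [s.title for s in ranked]


stories = [
    Story("Budget vote in parliament", 0.9, {"ana": 0.10, "ben": 0.40}),
    Story("Celebrity feud escalates", 0.2, {"ana": 0.65, "ben": 0.05}),
    Story("Local flood warning", 0.8, {"ana": 0.30, "ben": 0.20}),
]

print(rank_by_importance(stories))
# → ['Budget vote in parliament', 'Local flood warning', 'Celebrity feud escalates']
print(rank_by_engagement(stories, "ana"))
# → ['Celebrity feud escalates', 'Local flood warning', 'Budget vote in parliament']
print(rank_by_engagement(stories, "ben"))
# → ['Budget vote in parliament', 'Local flood warning', 'Celebrity feud escalates']
```

Sorted by importance, both users would see the budget vote first. Sorted by predicted engagement, Ana’s feed leads with the celebrity feud while Ben’s leads with the budget vote: the same pool of stories produces two different pictures of the day.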

This personalization is convenient, even delightful. But it also raises troubling questions: If everyone lives inside a customized digital bubble, do we lose a shared reality? When algorithms feed us only what we already like, do we grow narrower in our perspectives, trapped inside echo chambers of our own making?

The Economy of Attention

Invisible AI does not exist in isolation. It thrives within an economy where human attention is the most valuable resource. Many tech companies do not simply sell services; their business rests on selling our attention to advertisers. The more time we spend scrolling, the more valuable we become to them.

Algorithms are designed to maximize engagement. They learn which colors, words, and video lengths keep us hooked. They nudge us toward one more click, one more video, one more purchase. In doing so, they shape habits, influence moods, and sometimes exploit vulnerabilities.

This economy is not inherently malicious, but it is relentless. An AI system does not “care” about human well-being; it simply optimizes for the goal it was given, whether that goal is maximizing ad revenue, recommending songs, or predicting purchases. In this way, invisible AI becomes a mirror of human desire, but one that magnifies, distorts, and sometimes manipulates it.

The Politics of Algorithms

Beyond personal life, AI quietly shapes the public sphere. Political campaigns use algorithms to target voters with personalized messages. Governments employ predictive policing tools to identify “high-risk” areas for crime. Immigration agencies use facial recognition to screen travelers.

Algorithms decide who is eligible for loans, who gets job interviews, and who may be flagged for additional scrutiny at airports. These decisions, often invisible to those affected, can have life-altering consequences.

The danger is not only bias but opacity. Many modern AI systems are “black boxes”—their decision-making processes so complex that even their creators cannot fully explain why they reached a particular outcome. When invisible algorithms influence justice, finance, or governance, the stakes rise far beyond personal convenience.

The Emotional Dimension

Invisible AI is not only technical; it is emotional. It shapes how we feel about ourselves and others. Social media algorithms, tuned to maximize engagement, often elevate content that provokes strong reactions—anger, fear, or outrage—because those emotions drive clicks and shares.

This does not mean the algorithms are malicious; they are indifferent. But indifference can be dangerous. When outrage spreads more efficiently than calm dialogue, societies can grow polarized. When people measure their self-worth through likes and shares, mental health can suffer.

On a more personal level, AI can infer emotions in ways that feel almost intimate. Some voice assistants attempt to detect frustration in our tone. Streaming services learn what we watch when we are sad or restless. Dating apps analyze swipes to predict attraction patterns. Algorithms do not feel, but they can estimate how we feel, and they act on that estimate.

The Promise of Good

Yet it would be unfair to paint invisible AI as only manipulative or dangerous. These same algorithms can save lives, expand opportunity, and unlock knowledge at scales never before possible.

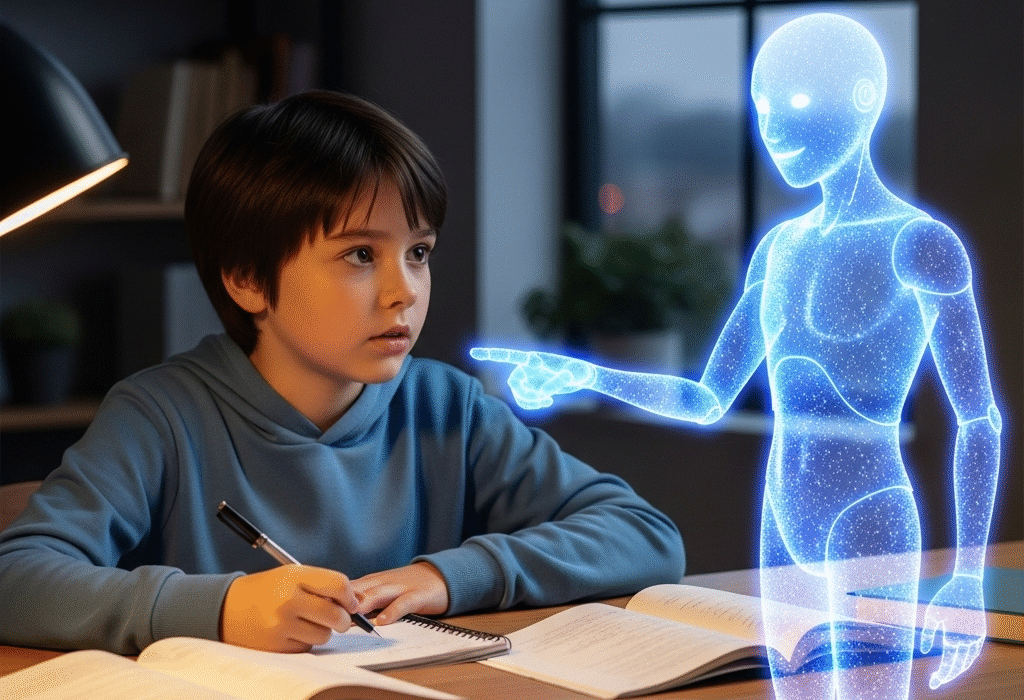

In healthcare, AI systems analyze medical images and, in some studies, have detected certain cancers as accurately as, or earlier than, specialist doctors. In environmental science, AI models predict climate patterns and optimize renewable energy grids. In education, AI tutors provide personalized learning experiences for students across the world.

Even in everyday life, AI reduces friction. It helps us find music that resonates, connects us with distant friends, and translates languages instantly. For many, these invisible systems are not chains but wings, opening doors that would otherwise remain closed.

The Struggle for Transparency

The challenge is not to reject AI but to govern it wisely. As algorithms grow more powerful, societies must grapple with questions of transparency, accountability, and fairness. Should companies be required to explain how their AI works? Who is responsible when an algorithm makes a harmful mistake? How do we ensure that invisible systems serve the public good rather than exploit vulnerabilities?

Scholars and policymakers argue for “explainable AI”—systems that can justify their decisions in understandable terms. Others advocate for algorithmic audits, ethical guidelines, and stronger data privacy laws. The struggle is ongoing, a tug-of-war between innovation, profit, ethics, and human rights.

The Future of the Unseen

As AI evolves, it is likely to become even more invisible. Smart homes may anticipate needs before we speak. Wearable devices may track health continuously and suggest adjustments to diet and exercise. Autonomous cars may choose the safest routes without our input. Algorithms will not only filter information but increasingly mediate our relationship with the world.

Many researchers are pursuing general intelligence: systems that can learn across domains rather than mastering a single narrow task. If such systems emerge, they may become not only invisible but omnipresent, woven so deeply into our environments that distinguishing between human choice and machine suggestion will grow nearly impossible.

This prospect is both thrilling and unsettling. Will invisible AI free us from routine tasks, allowing more time for creativity and connection? Or will it erode autonomy, making us passive passengers in a world driven by unseen logic?

Seeing the Invisible

To answer the question “What is the AI we don’t see?” we must first admit that we are already living with it. It is the algorithm recommending the next video, the system approving a loan, the voice assistant answering questions, the model detecting fraud. It is everywhere and nowhere, present yet hidden, intimate yet impersonal.

The danger is not that AI is invisible but that we remain blind to it. The first step toward agency is awareness—recognizing that every digital interaction is shaped by algorithms with goals that may not align with our own. Once we see the invisible, we can demand better systems, more ethical design, and more human-centered technology.

The Human Question

In the end, the story of invisible AI is not about machines but about us. What kind of world do we want to build with these tools? Will we allow algorithms to deepen divides, or will we harness them to build bridges? Will we design systems that exploit human weakness, or ones that cultivate human strength?

Artificial intelligence is not destiny. It is a mirror—reflecting our values, our priorities, and our vision for the future. The AI we don’t see will continue to shape us, but we hold the power to shape it in return.

The challenge is profound, but so is the opportunity. The invisible algorithms guiding our lives are not gods or demons; they are creations. And like all human creations, they carry within them the possibility of harm or hope.

The future will depend on whether we dare to open our eyes to the unseen, to question what lies beneath the surface of our screens, and to demand that the intelligence we build reflects not only our ingenuity but also our humanity.