For decades, quantum computing has promised to revolutionize the way we solve problems, design drugs, discover new materials, and crack codes. But until now, that promise lived mostly on paper—a thrilling theoretical dream constantly delayed by an inescapable nuisance: noise. These microscopic whispers of error, generated during the fragile dance of qubits inside a quantum processor, have long kept quantum computers trailing behind their classical cousins.

Now, in a remarkable leap forward, a team of researchers led by Daniel Lidar at the University of Southern California has changed the game. They’ve demonstrated, for the first time, an unconditional exponential speedup using real quantum hardware—an achievement once confined to mathematical proofs and theoretical labs.

Their study, published in the prestigious journal Physical Review X, marks a pivotal moment in the race toward usable quantum advantage.

“This is not a simulation. This is not a prediction. This is not a speedup that depends on any ‘if’ or ‘maybe’ about classical algorithms,” said Lidar, a professor of engineering, chemistry, and physics at USC and co-founder of Quantum Elements, Inc. “This is a demonstration of quantum performance that scales exponentially better than anything classical computing can offer for this task.”

The Problem with Quantum’s Promise

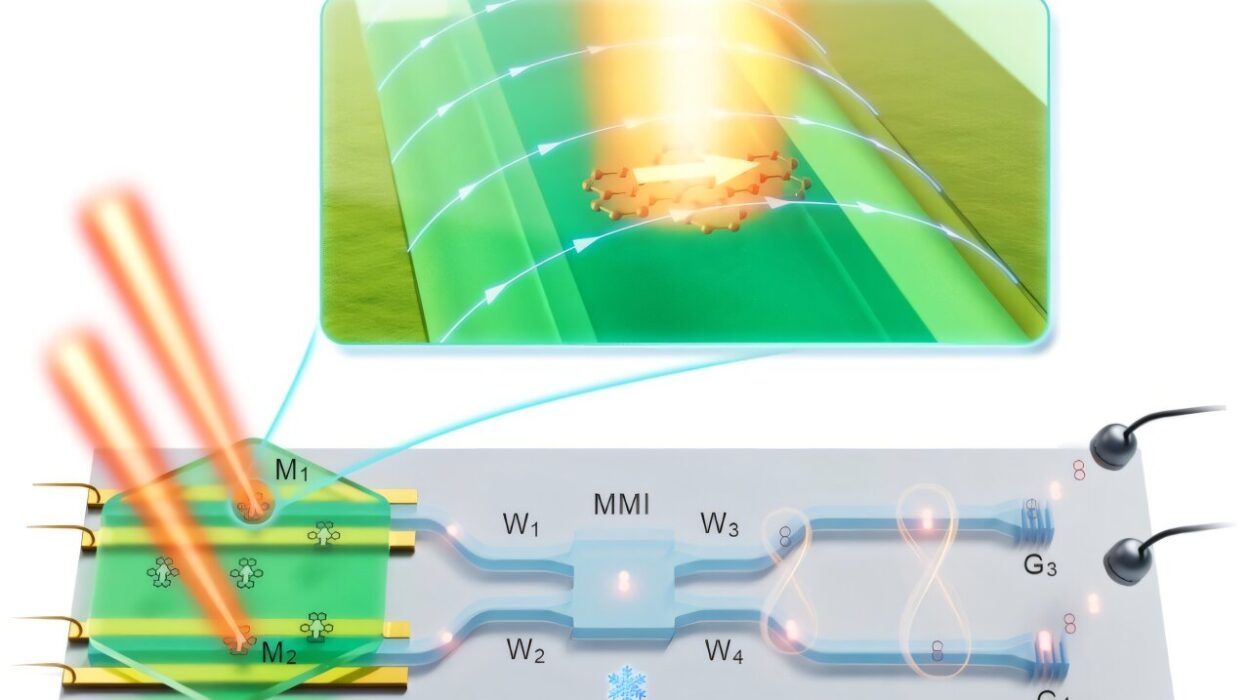

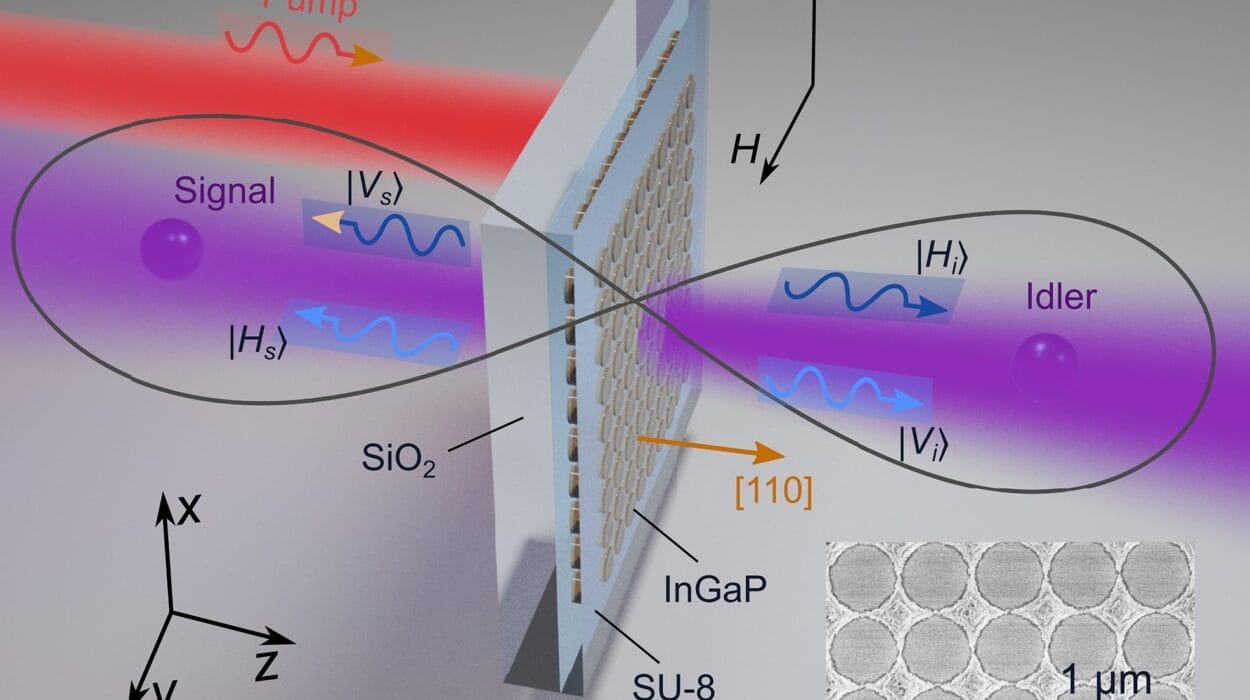

Quantum computers operate on principles alien to the deterministic world of classical computing. Instead of binary bits that are either 0 or 1, quantum computers use qubits—subatomic particles that can exist in multiple states at once, thanks to the phenomena of superposition and entanglement.

In theory, this gives quantum machines the ability to explore vast numbers of solutions simultaneously. But the real world isn’t so kind. Quantum systems are infamously fragile—qubits lose coherence quickly, and quantum operations are easily disturbed by the environment. The resulting noise causes errors that can snowball out of control during complex calculations.

To date, most quantum computing milestones have shown only modest advantages, often labeled “polynomial speedups.” These are impressive but limited gains, often relying on assumptions that classical algorithms couldn’t do better.

Lidar’s team has now done something far more ambitious: they proved an exponential speedup, and they did it unconditionally—meaning the result doesn’t rest on hypothetical claims or the assumption that no better classical algorithm exists.

A Guessing Game with Quantum Stakes

To achieve this milestone, the team turned to a challenge known as Simon’s problem, a foundational puzzle in quantum computing.

Imagine a black box—a game host, of sorts—that has a secret number embedded within a function. Players can feed numbers into the box, and it returns outputs that hint at the secret. The goal is to find the hidden pattern that defines the function. Classical computers can solve this problem, but only after slogging through an exponential number of guesses.

Quantum computers, however, can win this guessing game exponentially faster. This was proven theoretically decades ago, and Simon’s problem later inspired Shor’s famous algorithm, which could one day break widely used cryptographic codes.

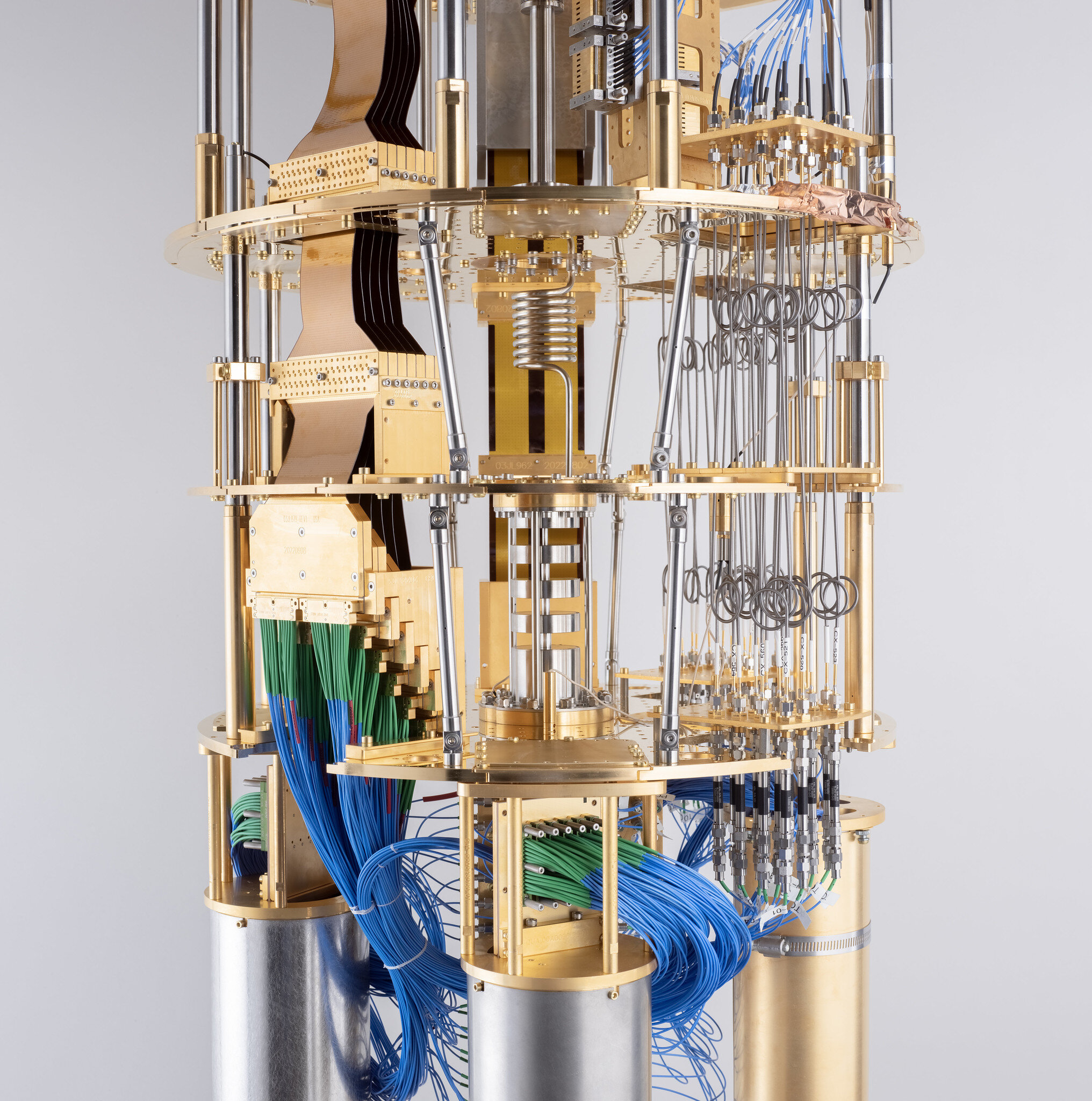

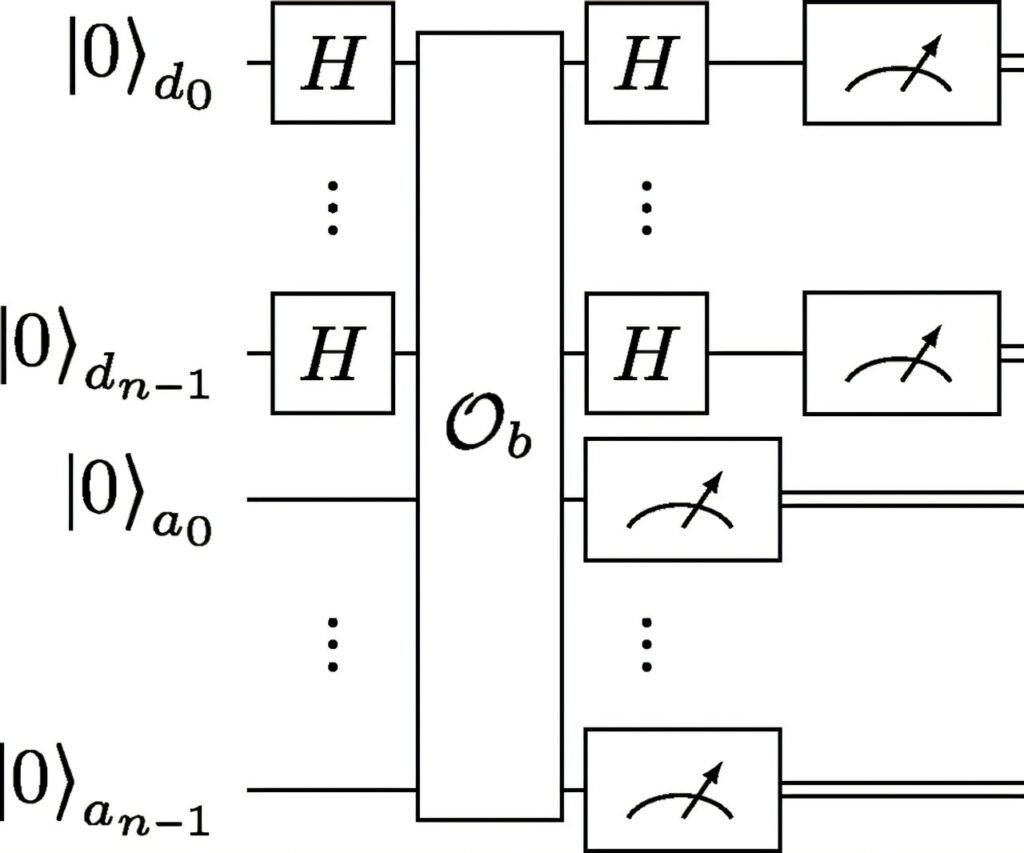

What Lidar and his team did was translate this theoretical edge into a real-world triumph. Using two 127-qubit IBM Quantum Eagle processors over the cloud, they tweaked and optimized a version of Simon’s problem to run on today’s noisy quantum devices—and then proved, unequivocally, that the quantum approach scaled exponentially faster.

Engineering Victory from Fragility

Achieving this wasn’t just about picking the right algorithm. It was about wrestling performance from quantum hardware still in its technological adolescence.

Phattharaporn Singkanipa, a USC doctoral researcher and lead author of the study, described their approach as “squeezing every ounce of performance from the hardware.” And they did it using a suite of advanced strategies:

First, they limited the complexity of the input data, reducing the burden on the quantum circuits. Next, they compressed the number of logic operations needed using transpilation—a technique that essentially rewrites the algorithm to run more efficiently on the specific quantum machine.

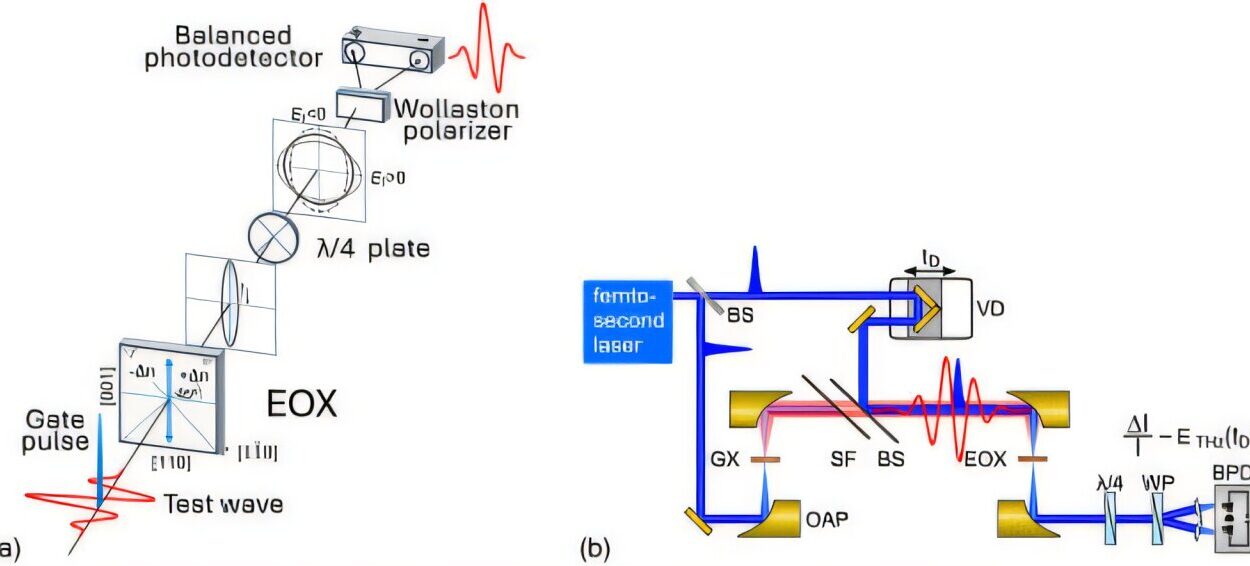

But the true hero of the experiment was dynamical decoupling—a method of shielding qubits from environmental noise using carefully timed pulses. This technique, akin to rhythmic CPR for quantum bits, kept the system alive and coherent long enough to reach the finish line.

Lastly, they cleaned up what remained with measurement error mitigation, correcting for any final glitches when reading the qubits’ states.

Together, these methods didn’t just make the quantum computer usable—they made it indisputably superior for the task at hand.

Exponential and Unconditional: Why That Changes Everything

To understand the weight of this achievement, consider what “exponential speedup” actually means. It doesn’t imply being just ten or a hundred times faster. It means that as the size of a problem increases—say, by adding more variables—the quantum solution’s advantage grows faster than fast. The performance gap doesn’t just widen; it explodes.

Even more crucial is the term “unconditional.” Past quantum speedup claims often rested on the assumption that classical computers couldn’t someday find a smarter way to solve the problem. In other words, they were vulnerable to being disproven.

Not this time.

“There’s no better classical algorithm hiding around the corner,” said Lidar. “The performance separation cannot be reversed.”

It’s like watching a new kind of engine win a race and knowing—with mathematical certainty—that no amount of tuning the old engine could have kept up.

From Theory to Hardware, the Quantum Era Deepens

This experiment doesn’t mean quantum computers are ready to tackle climate modeling or crack cryptographic codes tomorrow. Simon’s problem, after all, is a toy problem—relevant for testing principles, but with no direct real-world use. Lidar acknowledges this.

“This result doesn’t have practical applications beyond winning guessing games,” he said. “Much more work remains to be done.”

But that shouldn’t diminish its importance. The field of quantum computing has now reached a threshold many believed was still years away: real hardware, solving a real problem, better than any classical computer could—and proving it without question.

That proof doesn’t just validate decades of quantum theory. It opens the door for new confidence in what comes next.

A New Dawn for Quantum Ambition

The race to quantum advantage is no longer theoretical. The USC-led team has shown that with clever engineering, careful optimization, and a clear understanding of where quantum can shine, exponential gains are now achievable—even with today’s imperfect machines.

As quantum processors grow in size and stability, and as error-correcting methods evolve, the playground will expand. Tasks once unimaginable may become routine. And the vision of quantum computing—once blurry and wrapped in caveats—is finally starting to come into focus.

This study isn’t the finish line. It’s the green light at the beginning of the quantum highway.

Because now, the promise of exponential speedup is not just a theory.

It’s a fact.

Reference: Phattharaporn Singkanipa et al, Demonstration of Algorithmic Quantum Speedup for an Abelian Hidden Subgroup Problem, Physical Review X (2025). DOI: 10.1103/PhysRevX.15.021082