In a quiet lab within Meta’s Reality Labs division, a team of researchers is reimagining what it means to touch technology. But instead of screens or keyboards, they’re placing their bet on something much more intimate—the subtle twitch of a muscle, the whisper of a nerve, the invisible thread between thought and action.

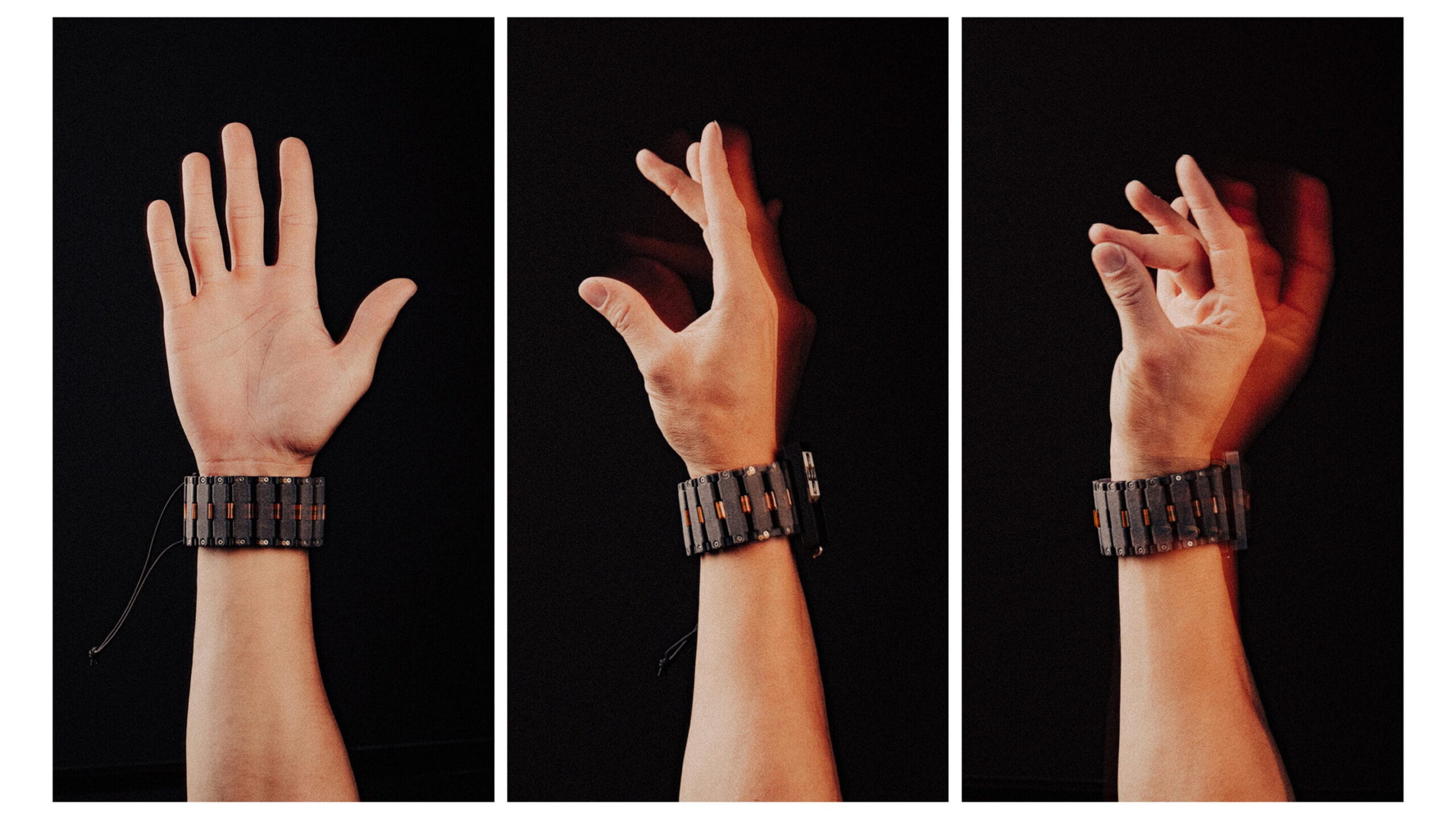

Their experimental wristband doesn’t scream with blinking lights or futuristic shapes. It sits gently on the forearm, almost like a watch. But what it promises is nothing short of revolutionary. Using surface electromyography (sEMG), artificial intelligence, and the natural language of the human body, Meta’s wristband reads the electrical signals your muscles produce as a movement begins. A flick of the fingers, a subtle squeeze of the palm, even a movement too slight to see is translated into a digital command.

If perfected, it could render mice, keyboards, and even touchscreens obsolete. The interface of the future may no longer live in your pocket. It may rest on your wrist, listening to the signals beneath your skin.

Decoding the Language of Intention

At the core of this technology lies a profound insight: every movement we make begins not with action, but with intention. When you reach for a glass of water, the command doesn’t begin in your fingers—it begins in your brain. A cascade of electrical signals flows down your spinal cord and into the nerves that control your muscles. Even the subtlest thought of movement leaves a trace.

Meta’s wristband captures these traces using surface electromyography, a technique that records, from the surface of the skin, the electrical activity muscles produce when motor nerves activate them. Unlike earlier approaches to gesture control, which relied on cameras, gloves, or mechanical sensors, sEMG interfaces tap directly into the body’s internal conversation.

What’s remarkable is how little movement is required. Even when a hand appears motionless to the naked eye, the wristband can pick up the faint muscle activation behind an intended gesture and translate it into digital input. A ghost of a gesture becomes an action. A virtual keystroke. A swipe. A command.
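To make the idea of a "ghost of a gesture" concrete, here is a minimal sketch of how a faint burst of muscle activity might be separated from resting noise. It is an illustration under simplifying assumptions, not Meta's method: the signal is synthetic, and the function names, window size, and threshold factor `k` are all hypothetical. The real system decodes gestures with neural networks rather than a fixed threshold.

```python
import math
import random

def rms_envelope(samples, window):
    """Root-mean-square amplitude over consecutive non-overlapping windows."""
    return [
        math.sqrt(sum(x * x for x in samples[i:i + window]) / window)
        for i in range(0, len(samples) - window + 1, window)
    ]

def detect_activity(samples, window, baseline_windows, k=3.0):
    """Flag windows whose RMS exceeds k times the resting baseline RMS.

    The baseline is estimated from the first `baseline_windows` windows,
    which are assumed to contain only resting noise.
    """
    envelope = rms_envelope(samples, window)
    baseline = sum(envelope[:baseline_windows]) / baseline_windows
    return [value > k * baseline for value in envelope]

# Synthetic single-channel signal (an assumption, not recorded data):
# resting noise, a short burst of stronger activity, then rest again.
rng = random.Random(0)
rest_before = [rng.gauss(0.0, 1.0) for _ in range(1000)]
burst = [rng.gauss(0.0, 5.0) for _ in range(200)]
rest_after = [rng.gauss(0.0, 1.0) for _ in range(800)]
signal = rest_before + burst + rest_after

flags = detect_activity(signal, window=100, baseline_windows=5)
active_windows = [i for i, flag in enumerate(flags) if flag]
print(active_windows)
```

Only the windows covering the burst are flagged. A practical decoder would go much further, combining many electrode channels and learning which patterns correspond to which gestures.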

In tests, users could move cursors, launch applications, and even “write” in midair—all without touching a device. With current prototypes, handwriting transcription from air movements reached speeds of 20.9 words per minute. This is just the beginning.

Training the Machine to Understand You

Gesture detection isn’t a new concept. Engineers and scientists have been trying to build intuitive human-machine interfaces for decades. What has always stood in the way is the human body’s inherent variability. Muscles behave differently in every person. Hand sizes, skin conductivity, nerve pathways—they all differ from individual to individual. Traditional systems had to be calibrated per user, often requiring time-consuming training.

What Meta has done differently is to turn this challenge into an advantage. By applying deep learning algorithms to data from thousands of test subjects, the company trained its AI system to recognize common signal patterns across the human population. This allowed them to create a kind of universal gesture model.

When a new user puts on the wristband, the device doesn’t start from scratch. It taps into this shared neural language. The AI rapidly adjusts and personalizes itself based on your unique signals, but it starts from a foundation built on the collective movement of many others. This is what makes it “performant out-of-the-box,” as Meta researchers described in their Nature publication. To their knowledge, that is a first for any high-bandwidth neuromotor interface.
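The pattern described here, a shared starting point refined by a few examples from the wearer, can be sketched in a few lines. This is a toy stand-in and not the published model, which relies on deep neural networks trained on data from thousands of participants. The nearest-centroid classifier, the two-number feature vectors, the gesture names, and the blending `weight` below are all assumptions chosen for illustration.

```python
import math

def nearest_gesture(centroids, features):
    """Return the gesture whose centroid is closest to the feature vector."""
    return min(centroids, key=lambda g: math.dist(centroids[g], features))

def personalize(centroids, user_examples, weight=0.5):
    """Shift each population centroid toward the mean of the user's examples.

    Gestures with no user examples keep their population centroid.
    """
    adapted = dict(centroids)
    for gesture, examples in user_examples.items():
        n = len(examples)
        user_mean = [sum(column) / n for column in zip(*examples)]
        adapted[gesture] = [
            (1 - weight) * c + weight * u
            for c, u in zip(centroids[gesture], user_mean)
        ]
    return adapted

# Hypothetical population model: one centroid per gesture, built from many people.
population = {"pinch": [1.0, 0.0], "swipe": [0.0, 1.0]}

# A hypothetical wearer whose pinch looks unusual compared with the population.
sample = [0.4, 0.6]
before = nearest_gesture(population, sample)

# Two labeled pinch examples from this wearer are enough to adapt the model.
user_examples = {"pinch": [[0.5, 0.7], [0.3, 0.5]]}
adapted = personalize(population, user_examples, weight=0.5)
after = nearest_gesture(adapted, sample)

print(before, "->", after)
```

Before adaptation the wearer's pinch is mistaken for a swipe; after blending in two of their own examples, it is recognized correctly, while the swipe centroid stays exactly as the population defined it.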

A Bridge for Those Left Behind

While the vision of seamlessly controlling your phone with a hand twitch might thrill tech enthusiasts, Meta’s team is looking beyond the everyday consumer. Their eyes are on those whose hands cannot twitch—those living with paralysis, neurodegenerative diseases, or spinal cord injuries. For these individuals, a simple act like sending a text or navigating a computer can be impossible.

Here, the wristband’s sensitivity becomes a lifeline. Because it doesn’t require visible movement, even the faintest muscle signal—a trace of an intention—can be enough to activate the system. In early collaborations with Carnegie Mellon University, researchers are exploring how the wristband could empower people with severe physical disabilities to interact with computers and smart devices independently.

Imagine a person with severe hand paralysis able to send an email, turn on lights, or control a robotic arm just by attempting to move their fingers, as long as a faint muscle signal still reaches the forearm. That’s not science fiction. That’s what this interface is designed to do.

This noninvasive approach also avoids the ethical and surgical complexities of invasive technologies like Elon Musk’s Neuralink, which implants chips in the brain. Meta’s wristband, in contrast, sits harmlessly on the skin, relying on muscle signals rather than brain implants, and offers a more accessible, lower-risk solution with faster potential rollout.

Blurring the Line Between Thought and Action

The implications of this technology extend far beyond disability access or gaming. They touch the very way we define interaction. Until now, interacting with machines has been fundamentally external. We touch screens. We type on keyboards. We speak to voice assistants.

But what if the next interface doesn’t require outward expression at all? What if your thoughts alone, translated by your own nervous system, could control the world around you?

Meta’s wristband brings us closer to a future where our physical devices fade into the background, replaced by an almost telepathic interface—something that doesn’t ask you to adapt to the machine, but allows the machine to adapt to you.

The result is something that feels natural, seamless, and intimate. It’s not about commanding a computer. It’s about extending your will into the digital realm with the same ease with which you reach out to pick up a pencil.

A Future Closer Than You Think

While many cutting-edge neurotechnologies remain years—if not decades—away from practical use, Meta’s wristband might be much closer. According to the researchers, this technology is not bound by the same obstacles that hold back invasive brain-machine interfaces. The sensors are already manufacturable. The AI algorithms are continually improving. And public familiarity with wearables is growing rapidly.

The vision isn’t just futuristic. It’s feasible. A commercial version could appear in the coming years, perhaps integrated with Meta’s AR glasses or other smart devices.

As augmented and virtual reality environments become more prevalent, intuitive control systems like this will be critical. No one wants to fumble with physical controllers in the metaverse. The ideal interface will be invisible—an extension of the body, as natural as breathing.

Rewiring Connection and Control

There’s something almost poetic in this shift. The same hands that once carved stone, played piano, or wrote letters now have a new language—a digital one, communicated not through touch but through intention. Meta’s wristband doesn’t just represent a new kind of interface. It represents a shift in how we relate to our machines and, by extension, to the world.

Instead of our reaching for technology, technology reaches back toward us, listening not to our voices or our fingertips but to the electricity within us: the quiet pulse of intention that precedes every action.

Whether for a person living with paralysis, a gamer immersed in a virtual universe, or an everyday user trying to simplify their digital life, this technology suggests a more human kind of computing. One where devices fade into the background, and the boundary between thought and command, between desire and response, becomes nearly invisible.

A Gesture Toward Tomorrow

Meta’s wristband isn’t just a new gadget. It’s a gesture toward a world where interaction is intuitive, accessible, and deeply personal. A world where our bodies speak directly to our tools, and where intention itself becomes the interface.

We may not be ready to discard our smartphones or keyboards just yet. But the seeds of a new era are being planted—invisible, electric, and wrapped quietly around the wrist. If Meta succeeds, the way we connect to our digital lives may soon feel less like work and more like thought made visible.

And it will all begin with a flick of the wrist.

Reference: Patrick Kaifosh et al., A generic non-invasive neuromotor interface for human-computer interaction, Nature (2025). DOI: 10.1038/s41586-025-09255-w