Once upon a digital dawn, the world of software was guided by certainty. Every line of code was a rule etched by human hands, an instruction meant to tame silicon into submission. This was the era of traditional programming—a world where the computer was a tool, not a partner; a servant, not a thinker.

In this world, programmers were the ultimate artisans of logic. They told the computer exactly what to do, how to do it, and what to expect. If the user clicked a button, the code responded precisely. If the database needed querying, it was summoned line by line through structured, procedural rituals. Everything was human-defined, predictable, and engineered with a kind of digital craftsmanship that echoed the elegance of architecture and the precision of mathematics.

For decades, this model built our digital civilization. Operating systems, word processors, spreadsheets, games, websites—each was constructed like a machine, with nuts, bolts, gears, and engines formed from conditionals, loops, variables, and classes. Code was king, and the programmer its sovereign.

But then, something began to stir. A new paradigm crept in, neither silently nor with a single bang. It didn’t knock on the doors of computing and ask to be let in. It seeped through research labs, grew in the wombs of data centers, and matured in the cloud. Its name was machine learning, and it didn’t ask what the rules were.

It asked: What does the data say?

The Shift from Instructions to Inference

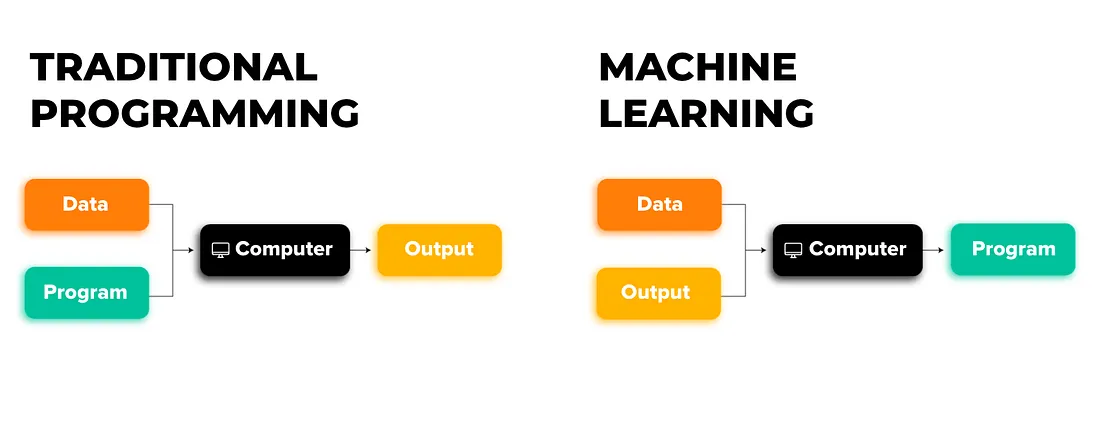

The fundamental distinction between machine learning and traditional programming lies in how decisions are made. In traditional programming, humans write the logic: if this, then that. Every possibility, every edge case, every behavior must be explicitly encoded. The program is a mirror of human intent, distilled into logical syntax.

But machine learning does not follow this path. Instead of telling the computer what to do, we give it examples and ask it to figure out the logic on its own. The code doesn’t declare: “If the temperature is above 30°C, turn on the fan.” It learns, through hundreds of samples, that when certain conditions appear in the data, the fan should come on.
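
To make the contrast concrete, here is a minimal sketch in Python. The sample readings, the function names, and the idea of searching for a single temperature cutoff are all assumptions made for illustration; real systems learn from far more data and far richer models. The first function is the hand-written rule. The second never sees the number 30 and recovers a cutoff from labeled examples alone.

```python
def rule_based_fan(temp_c: float) -> bool:
    """Traditional programming: a human chose the 30 degree rule."""
    return temp_c > 30.0


def learn_threshold(samples: list[tuple[float, bool]]) -> float:
    """Learning from examples: pick the cutoff that misclassifies the fewest samples."""
    temps = sorted(temp for temp, _ in samples)
    # Candidate cutoffs sit halfway between neighbouring observed temperatures.
    candidates = [(low + high) / 2 for low, high in zip(temps, temps[1:])]

    def mistakes(threshold: float) -> int:
        return sum((temp > threshold) != fan_on for temp, fan_on in samples)

    return min(candidates, key=mistakes)


# Hypothetical observations: (temperature in Celsius, was the fan on?)
samples = [
    (18.0, False), (22.5, False), (27.0, False), (29.0, False),
    (31.0, True), (33.5, True), (38.0, True),
]

threshold = learn_threshold(samples)
print(threshold)  # 30.0, halfway between the warmest "off" and the coolest "on" sample


def learned_fan(temp_c: float) -> bool:
    return temp_c > threshold
```

Nobody typed the cutoff into `learned_fan`. Change the examples, and the behavior changes with them.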

This shift from instruction to inference is nothing short of revolutionary. It means we’re not just automating tasks—we’re teaching machines to generalize, to recognize patterns, to develop a form of judgment that, in narrow domains, can rival or surpass that of humans.

For the first time in the history of software, we are beginning to write programs whose behavior is learned from examples rather than spelled out line by line.

From Engineers to Mentors

This change transforms the role of the programmer. No longer merely an architect of logical systems, the modern developer working with machine learning becomes a kind of mentor—a guide who curates data, shapes models, and tunes parameters, hoping to coax intelligence from entropy.

Instead of obsessing over edge cases in a switch statement, the programmer now focuses on whether their dataset reflects reality, whether the model is overfitting, whether their features are meaningful. It’s less about logic and more about intuition, statistics, and empathy for data.
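
Overfitting is easier to recognize than to define, so a small sketch may help. Everything here is hypothetical: the fan readings, the deliberately mislabeled training sample, and the two toy models. One model memorizes its training data; the other is a single cutoff, fixed at 30.0 purely for illustration. The warning sign is the gap between performance on the data a model has seen and the data it has not.

```python
def accuracy_of(model, data):
    """Fraction of (temperature, label) pairs the model labels correctly."""
    return sum(model(temp) == label for temp, label in data) / len(data)


# Hypothetical readings: (temperature in Celsius, fan_on).
train = [
    (20.0, False), (25.0, False),
    (28.0, True),   # a noisy, mislabeled sample
    (31.0, True), (35.0, True),
]
validation = [(21.0, False), (27.5, False), (29.0, False), (32.0, True), (36.0, True)]


def memorizer(temp):
    """Answers with the label of the closest training temperature, noise included."""
    nearest = min(train, key=lambda pair: abs(pair[0] - temp))
    return nearest[1]


def simple_cutoff(temp):
    """A one-parameter model; the 30.0 cutoff is an assumption for this sketch."""
    return temp > 30.0


for name, model in [("memorizer", memorizer), ("simple_cutoff", simple_cutoff)]:
    print(name, accuracy_of(model, train), accuracy_of(model, validation))
```

The memorizer scores perfectly on the training set and stumbles on the validation set because it has also memorized the noise. The simpler model gets the noisy training sample wrong and does better on data it has never seen.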

In traditional programming, the correctness of your code is usually treated as binary: for a given input, it produces the specified output, or it doesn’t. But in machine learning, performance is statistical. Your model is, say, 92% accurate on data it has not seen before. It fails sometimes, and that’s expected. There is no perfect answer, only a better approximation.
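
A figure like 92% is nothing more exotic than a count of hits over a count of tries. The sketch below, with made-up labels and predictions, shows the arithmetic. The `accuracy` helper is an illustrative name, not a library function.

```python
def accuracy(predictions, labels):
    """Share of predictions that match the true labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


# Hypothetical test set of 25 examples.
labels = [True] * 15 + [False] * 10

# Pretend model output: identical to the labels except for two mistakes.
predictions = list(labels)
predictions[3] = False   # a miss
predictions[20] = True   # a false alarm

score = accuracy(predictions, labels)
print(f"{score:.0%}")  # 92%
```

Two wrong answers out of twenty-five would fail a unit test. Here they are simply part of the score.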

This acceptance of imperfection is both liberating and unsettling. It means letting go of control. It means trusting the machine to find meaning where we might not see it. It means embracing the unknown.

The Cognitive Leap of Machines

To understand how profound this shift is, imagine training a model to detect cats in photos. In traditional programming, you’d have to define exactly what a cat looks like: two ears, fur, whiskers, oval eyes. But how do you describe “fur” in pixels? What about cats in shadows, or cats at angles, or cats hiding in laundry baskets?

The traditional path quickly collapses under the weight of variation. But with machine learning, you don’t write rules. You show it 10,000 labeled pictures, some with cats and some without, and it learns what a cat looks like: not through hand-written logic, but through layers of abstraction.

Neural networks, the workhorses of modern machine learning, don’t see like we do. They receive matrices of numbers, activate artificial neurons in response, and slowly learn to extract meaning. Early layers detect edges. Mid-level layers pick up textures and shapes. Higher layers respond to ears, faces, and ultimately the whole cat.

This cognitive layering is not pre-programmed. It is learned, through exposure and feedback. It loosely echoes the way our own brains process the world, though the resemblance is an analogy rather than a blueprint. And this is why machine learning isn’t just another tool in the software toolkit. It’s a new language of computation.

The Fragility of the Old Order

In traditional programming, complexity is the enemy. Every new requirement, every exception, makes the code longer, harder to maintain, and more prone to bugs. This is why building large-scale software systems is so hard—human logic doesn’t scale easily.

But in the era of machine learning, complexity becomes a friend. The more data, the richer the patterns. The more varied and representative the examples, the better the model can generalize. Complexity, once feared, becomes fuel.

This doesn’t mean traditional programming is obsolete. It still powers operating systems, banking systems, infrastructure, hardware drivers—domains where rules are fixed, performance is critical, and ambiguity is dangerous.

Yet in areas where uncertainty reigns—language, vision, prediction, personalization—the old methods struggle. You can’t write rules to detect sarcasm. You can’t hardcode empathy into a chatbot. These are spaces where machine learning thrives, not despite uncertainty, but because of it.

The rigid scaffolding of traditional programming is giving way to systems that bend, learn, and adapt. The future isn’t just about writing better code. It’s about writing less code and teaching machines to write the rest.

The Emotional Weight of Letting Go

This transition is not just technical. It is deeply emotional. For many programmers, the shift feels like a loss of control, a betrayal of craft. How do you debug a neural network? How do you trust a black box? How do you explain to a client that the model is “mostly” right?

There’s a vulnerability in working with systems that you don’t fully understand. Even the best machine learning engineers can’t always explain why a deep model made a certain decision. Interpretability becomes a challenge. Accountability becomes blurred. Ethics becomes essential.

But in this vulnerability lies a kind of creative rebirth. The programmer, once a deterministic rule-setter, becomes a data artist, a model whisperer, a problem explorer. The relationship with the machine changes. It becomes collaborative, even conversational.

And with that shift comes a profound humility. The machine is not just a servant anymore. It is a mirror. It learns what we show it. It reveals our biases. It mimics our patterns. In teaching the machine, we begin to understand ourselves.

The Rise of the Data-Driven World

Already, machine learning is everywhere. Your email filters spam. Your phone recognizes your voice. Your social feed curates your interests. Your navigation app predicts traffic. Your bank flags fraud. These are not programmed systems in the traditional sense. They are trained systems.

What powers this revolution is not just algorithms—it’s data. The lifeblood of machine learning is the constant stream of real-world examples. Every click, every swipe, every purchase is a datapoint. And with enough data, machines become not just reactive, but predictive.

This is why machine learning represents more than a shift in programming—it signals a fundamental rewiring of the human-digital interface. In the old model, we adapted to software. In the new model, software adapts to us.

It learns our preferences. It anticipates our needs. It changes in response to behavior. Code, once static and universal, becomes personalized and evolving.

A Future Written in Probabilities

So where does this leave us? Is traditional programming dead? Hardly. It remains the foundation of the digital world. The CPU still runs instructions. The database still needs queries. The hardware still speaks in drivers.

But increasingly, the crown jewels of user experience—the intelligence, the personalization, the magic—come from models, not logic. The next great product is not the one with the most features. It’s the one that learns fastest.

This means that the future of code is not deterministic—it’s probabilistic. It’s not about perfect rules, but about likely outcomes. It’s not about being right every time, but being useful most of the time.

This future requires a new mindset. We must embrace uncertainty. We must think statistically. We must become curators of data, not just authors of logic. And we must build systems that are not just efficient, but ethical, transparent, and fair.

When the Machine Writes Back

In recent years, large language models like GPT, image generators like DALL·E, and video creators like Sora have shown us a new frontier: machines that not only learn but create. These systems don’t just analyze—they imagine. They generate poetry, code, art, music, stories. They autocomplete our thoughts and inspire our next move.

This is no longer programming in the old sense. This is co-creation. And it changes everything.

Soon, you won’t write all your code. You’ll describe what you want, and the machine will suggest snippets, test cases, and even architectures. You’ll debug not just syntax, but reasoning. You’ll design systems that learn continuously from their environment, evolving long after the first deployment.

You’ll stop seeing yourself as the sole creator. You’ll become part of a dialogue—a conversation between human and machine, between logic and learning, between certainty and surprise.

The Human Touch in a Machine World

As machines get smarter, the role of the human becomes more important, not less. Because while machines can learn from data, they don’t know what to care about. They don’t know what matters. They don’t understand suffering, joy, fairness, justice. They only know what they’re shown.

It is the human who must decide what goals are worth pursuing. It is the human who defines the loss function. It is the human who sees the unintended consequences, the hidden biases, the ethical boundaries.
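
What it means to define the loss function can be shown in a few lines. The sketch below is a toy under stated assumptions: the fraud scores, the labels, the two cost settings, and the grid of candidate cutoffs are all invented for illustration. The data never changes. Only the human judgment about which mistake is worse changes, and the chosen behavior follows it.

```python
def weighted_loss(threshold, data, miss_cost, false_alarm_cost):
    """Total cost of flagging every transaction whose score is at or above the threshold."""
    loss = 0.0
    for score, is_fraud in data:
        flagged = score >= threshold
        if is_fraud and not flagged:
            loss += miss_cost          # fraud slipped through
        elif flagged and not is_fraud:
            loss += false_alarm_cost   # honest customer was blocked
    return loss


def best_threshold(data, miss_cost, false_alarm_cost):
    """Pick the cutoff from 0.1 to 0.9 with the lowest total cost (first one wins ties)."""
    candidates = [i / 10 for i in range(1, 10)]
    return min(candidates, key=lambda t: weighted_loss(t, data, miss_cost, false_alarm_cost))


# Hypothetical (model score, actually fraud?) pairs.
data = [
    (0.15, False), (0.32, False), (0.35, True), (0.45, False),
    (0.55, False), (0.62, True), (0.81, True), (0.92, True),
]

# Both mistakes cost the same.
print(best_threshold(data, miss_cost=1, false_alarm_cost=1))   # 0.6

# Missing a fraud is judged ten times worse than a false alarm.
print(best_threshold(data, miss_cost=10, false_alarm_cost=1))  # 0.2
```

The machine does the searching in both cases. What it searches for was decided by a person.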

In this sense, the future of programming is not just technical—it is moral. It asks us to be not just better coders, but better people.

Writing the Future Together

We are at the dawn of a new epoch in computation. Traditional programming gave us structure, precision, and control. Machine learning gives us adaptability, creativity, and intelligence. Together, they form the two wings of the future.

The best systems will not choose one over the other. They will blend them. Rules where necessary. Learning where possible. Code that adapts, models that obey, software that understands.
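
One way to picture such a blend, offered as a sketch rather than a recipe: hard rules guard the cases where ambiguity is dangerous, and a learned score handles everything else. The function names, the payment scenario, the 0.5 cutoff, and the stand-in scoring function are all hypothetical. In practice the score would come from a trained model.

```python
from typing import Callable


def approve_payment(
    amount: float,
    account_frozen: bool,
    fraud_score: Callable[[float], float],
    score_cutoff: float = 0.5,
) -> bool:
    # Rules where necessary: these cases are never left to a model.
    if account_frozen or amount <= 0:
        return False
    # Learning where possible: defer to the model's estimate of risk.
    return fraud_score(amount) < score_cutoff


def toy_fraud_score(amount: float) -> float:
    """Stand-in for a trained model: larger payments look riskier, capped at 1.0."""
    return min(amount / 10_000.0, 1.0)


print(approve_payment(100.0, False, toy_fraud_score))    # True
print(approve_payment(100.0, True, toy_fraud_score))     # False, the rule wins
print(approve_payment(9_000.0, False, toy_fraud_score))  # False, the model objects
```

Passing the model in as an argument keeps the rules testable on their own, and lets the learned part be retrained or replaced without touching them.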

As we step into this future, let us do so not with fear, but with awe. For we are no longer just builders of machines. We are teachers of minds, curators of knowledge, architects of possibility.

The future of code is not written yet.

It is learning, just like we are.
