Software systems are the invisible engines of the modern world. Every action taken on a computer, smartphone, or embedded device — from typing a message to running a complex simulation — is driven by millions of lines of code that work together in intricate harmony. Yet, beneath the surface of graphical interfaces and seamless user experiences lies a universe of abstractions, logic, data structures, and physical interactions between hardware and software. Understanding how code becomes functionality reveals the profound layers of transformation that occur between a programmer’s written instructions and a system’s tangible behavior. It is a journey that spans human logic, mathematical precision, machine execution, and distributed collaboration between countless interacting components.

The process of transforming code into functionality is not a single step but an ongoing dialogue between ideas and implementation. It begins in the human mind with the conception of a problem and culminates in a digital system capable of performing tasks with astonishing precision. From programming languages to compilers, from operating systems to microprocessors, every layer contributes to turning abstract thought into mechanical action.

The Nature of Software Systems

At its core, a software system is a structured collection of programs, data, and interfaces that work together to perform a specific set of tasks. It is both an artifact and a process — an engineered creation designed to solve real-world problems through computational means. Software systems range from small utilities with a few hundred lines of code to massive distributed architectures powering global networks, databases, and artificial intelligence models.

Unlike physical systems, software systems are intangible. They exist as layers of logical constructs that can be replicated infinitely at negligible cost. Yet they interact with the physical world through hardware — processors, memory, storage, and input/output devices — which execute and respond to the instructions encoded in software. Every click, tap, or command initiates a cascade of computational events that ultimately produce the output a user perceives as functionality.

A software system must balance complexity, scalability, maintainability, and efficiency. As it grows, so does the need for structure and organization. Modern systems are composed of layers — from low-level firmware to high-level applications — that encapsulate functionality and isolate complexity. This layered design enables developers to modify, extend, or optimize parts of a system without breaking others.

From Idea to Algorithm

Before code exists, there is intent. The creation of a software system begins with the identification of a problem or goal. Engineers and designers analyze what the system should do, how it should behave, and what constraints it must operate under. These requirements form the foundation upon which algorithms are constructed — formalized methods that describe step-by-step how a task should be completed.

An algorithm is the bridge between abstract reasoning and executable code. It defines logic independent of programming language or hardware architecture. For example, an algorithm to sort a list of numbers may specify comparisons and swaps without referencing the exact syntax or data types used in implementation.
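As a rough illustration of the sorting example, the comparisons and swaps can be written out in Python. Bubble sort is used here only because it maps directly onto "compare and swap"; the function name is a choice made for this sketch, and production code would normally call the language's built-in sort.

```python
def bubble_sort(values):
    """Return a sorted copy of `values` using only comparisons and swaps."""
    items = list(values)  # work on a copy so the caller's list is untouched
    for end in range(len(items) - 1, 0, -1):
        swapped = False
        for i in range(end):
            if items[i] > items[i + 1]:  # comparison
                items[i], items[i + 1] = items[i + 1], items[i]  # swap
                swapped = True
        if not swapped:  # a pass without swaps means the list is in order
            break
    return items


print(bubble_sort([5, 2, 9, 1]))  # [1, 2, 5, 9]
```

The same steps could be expressed in any language; only the syntax would change, not the algorithm.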

Designing effective algorithms requires understanding computational complexity — how resources like time and memory scale with input size. Efficient algorithms reduce redundancy and ensure scalability, allowing systems to perform well under heavy loads. Once an algorithm has been defined, the next step is to express it in a programming language that a machine can interpret or compile into executable instructions.
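One way to make scaling concrete is to count the steps two search strategies take on sorted input. The sketch below is illustrative only: the function names are invented for this example, and "steps" simply counts how many elements each strategy inspects.

```python
def linear_search_steps(sorted_values, target):
    """Count elements inspected when scanning from the start."""
    steps = 0
    for value in sorted_values:
        steps += 1
        if value == target:
            break
    return steps


def binary_search_steps(sorted_values, target):
    """Count elements inspected when halving the search range each time."""
    steps = 0
    low, high = 0, len(sorted_values) - 1
    while low <= high:
        steps += 1
        mid = (low + high) // 2
        if sorted_values[mid] == target:
            break
        if sorted_values[mid] < target:
            low = mid + 1
        else:
            high = mid - 1
    return steps


for size in (1_000, 1_000_000):
    data = range(size)  # sorted integers 0..size-1
    target = size - 1   # worst case for the linear scan
    print(size, linear_search_steps(data, target), binary_search_steps(data, target))
```

The linear scan grows in proportion to the input size, while the binary search needs at most about 20 steps even for a million elements.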

Programming Languages: The Vocabulary of Machines

Programming languages serve as the medium through which humans communicate with computers. They transform logical thought into structured syntax that can be parsed and executed by machines. Early programming required writing directly in machine code (binary instructions understood by hardware) or in assembly, a thin mnemonic notation for those same instructions. Modern languages provide multiple layers of abstraction that make code more expressive and maintainable.

High-level programming languages such as Python, Java, and C++ allow developers to focus on algorithms and data rather than hardware details. These languages are closer to human language, providing constructs like loops, conditionals, and object-oriented structures that simplify complex logic. They enable programmers to describe “what” the software should do without specifying “how” it should be done at the machine level.

Each language implementation comes with a compiler or interpreter that bridges the gap between human-readable code and machine-executable instructions. Compilers translate entire programs into machine code before execution, typically optimizing the code for performance along the way. Interpreters, on the other hand, execute the program directly, statement by statement or through an internal representation, offering flexibility and rapid feedback during development. Some languages, like Java, compile to an intermediate representation called bytecode, which runs on a virtual machine (the JVM) for portability across different platforms.
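Bytecode is not unique to Java. CPython, the reference Python implementation, also compiles source to bytecode before running it, and the standard `dis` module can display the result. The sketch below lists the instruction names for a small function; the exact names vary between Python versions, so no particular output is assumed.

```python
import dis


def add(a, b):
    return a + b


# CPython compiled `add` to bytecode when the function was defined;
# dis.get_instructions walks over those instructions.
opnames = [instruction.opname for instruction in dis.get_instructions(add)]
print(opnames)
```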

Through this translation process, abstract human instructions are gradually refined into precise machine operations. Every variable, function, and class in a program ultimately becomes a sequence of binary commands executed by a processor.

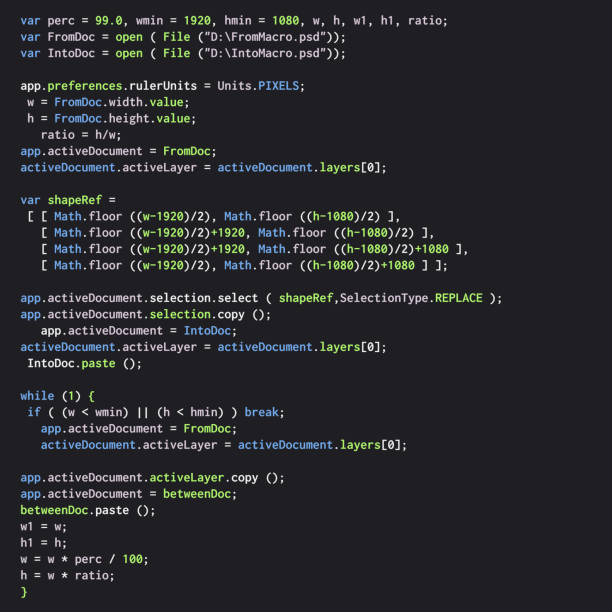

Compilation: Turning Code into Machine Instructions

The process of compilation is central to how code becomes functionality. When a programmer writes code in a language like C++, the compiler parses the source code, checks for errors, and converts it into object code — a set of low-level instructions specific to a target architecture.

Compilation typically occurs in several stages. First, lexical analysis breaks the source code into tokens — basic elements like keywords, identifiers, and symbols. Then, syntax analysis constructs a parse tree that represents the grammatical structure of the program. Semantic analysis checks for logical consistency, ensuring variables are used correctly and functions receive valid arguments.
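The first of these stages, lexical analysis, can be sketched in a few lines. The token categories and the `tokenize` function below are assumptions made for illustration, not the design of any particular compiler; real lexers handle keywords, literals, comments, and source positions as well.

```python
import re

# Each token kind is paired with the regular expression that recognizes it.
TOKEN_SPEC = [
    ("NUMBER", r"\d+"),
    ("IDENT", r"[A-Za-z_]\w*"),
    ("OP", r"[+\-*/=()]"),
    ("SKIP", r"\s+"),
    ("MISMATCH", r"."),
]
TOKEN_RE = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def tokenize(source):
    """Split source text into (kind, text) pairs, dropping whitespace."""
    tokens = []
    for match in TOKEN_RE.finditer(source):
        kind = match.lastgroup
        if kind == "SKIP":
            continue
        if kind == "MISMATCH":
            raise SyntaxError(f"unexpected character {match.group()!r}")
        tokens.append((kind, match.group()))
    return tokens


print(tokenize("total = price * 3"))
# [('IDENT', 'total'), ('OP', '='), ('IDENT', 'price'), ('OP', '*'), ('NUMBER', '3')]
```

A parser would then consume this token list to build the parse tree described above.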

Once the program passes these stages, the compiler performs optimization. Optimization algorithms analyze the code to remove redundancies, reorder instructions, and reduce execution time or memory usage without altering functionality. The result is an optimized intermediate representation that can be translated into machine code.
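Constant folding is one of the simplest optimizations of this kind: subexpressions whose operands are all known at compile time are evaluated once, ahead of execution. The sketch below assumes a toy expression tree of `(operator, left, right)` tuples, a representation invented for this example rather than one used by a real compiler.

```python
import operator

OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def fold_constants(node):
    """Replace constant subexpressions with their computed value."""
    if not isinstance(node, tuple):
        return node  # leaf: an integer constant or a variable name
    op, left, right = node
    left, right = fold_constants(left), fold_constants(right)
    if isinstance(left, int) and isinstance(right, int):
        return OPS[op](left, right)  # both sides known: compute now
    return (op, left, right)


# x + 2 * (3 + 4)
expr = ("+", "x", ("*", 2, ("+", 3, 4)))
print(fold_constants(expr))  # ('+', 'x', 14)
```

The folded tree computes the same result for every value of `x` but needs one operation at run time instead of three.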

Finally, the compiler generates binary instructions — sequences of bits corresponding to operations like addition, branching, or memory access. These instructions are linked with system libraries to produce an executable file. When executed, this file interacts with the operating system and hardware to perform the desired actions.

Through compilation, a human’s abstract logic becomes a precise sequence of instructions that a processor can carry out at a rate of billions of instructions per second.

The Operating System: The Mediator Between Code and Hardware

The operating system (OS) plays a crucial role in transforming compiled code into functionality. It acts as the intermediary between software applications and the hardware that executes them. The OS manages memory, schedules processes, handles input/output operations, and provides system-level services that applications rely on.

When an executable file runs, the operating system creates a process: an isolated running instance of the program that holds its state, code, and data in memory. The OS allocates memory for variables, assigns CPU time for execution, and tracks file and network access. It also enforces boundaries between processes, ensuring that one program cannot directly interfere with another.
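Process creation can be observed from within a program. The sketch below uses Python's standard `subprocess` module to ask the OS to start a second interpreter and report its process ID; it assumes `sys.executable` points at a usable Python interpreter, which is the usual case.

```python
import os
import subprocess
import sys

# Ask the OS to launch a separate process running a one-line program.
child = subprocess.run(
    [sys.executable, "-c", "import os; print(os.getpid())"],
    capture_output=True,
    text=True,
    check=True,
)
child_pid = int(child.stdout.strip())

# The child ran in its own process, so its ID differs from ours.
print(child_pid != os.getpid())  # True
```

Each process has its own address space, so variables defined in the parent are not visible to the child unless they are passed explicitly.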

Through system calls and APIs (Application Programming Interfaces), software communicates with the OS to perform tasks like reading files, sending data over a network, or displaying graphics on the screen. The OS abstracts away hardware differences, providing a consistent interface that allows programs to run on various devices without modification.

For example, when a program requests to write data to a disk, it does not directly manipulate hardware sectors. Instead, it issues a system call to the OS, which interacts with the file system driver to perform the operation. This layered structure allows software to remain portable and secure, even in complex environments with diverse hardware configurations.
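Python's `os` module exposes thin wrappers over these calls, which makes the layering visible. The helper name `write_via_os` and the file name are choices made for this sketch; `os.open`, `os.write`, and `os.close` correspond closely to the underlying system calls on POSIX systems.

```python
import os
import tempfile


def write_via_os(path, payload):
    """Write bytes through OS-level file descriptors; return bytes written."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    try:
        return os.write(fd, payload)  # the OS and its drivers reach the disk
    finally:
        os.close(fd)


with tempfile.TemporaryDirectory() as directory:
    target = os.path.join(directory, "example.txt")
    count = write_via_os(target, b"hello, disk")
    with open(target, "rb") as handle:
        contents = handle.read()

print(count, contents)  # 11 b'hello, disk'
```

At no point does the program name a disk sector or a device; it only names a path and hands over bytes.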

Memory and Data Representation

To understand how code becomes functionality, one must grasp how information is represented and manipulated in memory. Computers store data as binary — sequences of zeros and ones that correspond to electrical states in hardware. Each binary digit, or bit, can represent two possible values, and groups of bits form bytes, words, or larger structures.

When a program runs, the operating system loads its instructions and data into memory. Each variable in the code corresponds to a location in memory, identified by an address. The CPU reads and modifies these values through load and store instructions that reference those addresses.

Different data types (integers, floating-point numbers, characters, arrays, and objects) are represented in specific binary formats. For example, a 32-bit signed integer occupies four bytes of memory and typically represents values using two’s complement notation. In C, strings are stored as arrays of characters terminated by a null byte that marks the end of the sequence; many other languages store an explicit length instead.
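These layouts can be inspected with Python's standard `struct` module. The sketch below packs a negative number as a 32-bit little-endian integer and builds a C-style string by hand; the byte order is an assumption chosen for the example, since it differs between architectures.

```python
import struct

# "<i" means: little-endian, 32-bit signed integer.
packed = struct.pack("<i", -2)
print(len(packed), packed.hex())  # 4 feffffff

# Reading the same four bytes as unsigned exposes the two's complement pattern.
(unsigned,) = struct.unpack("<I", packed)
print(unsigned == 2**32 - 2)  # True

# A C-style string: the character bytes followed by a terminating null byte.
c_string = "hi".encode("ascii") + b"\x00"
print(list(c_string))  # [104, 105, 0]
```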

Pointers and references allow programs to access and modify memory dynamically. This flexibility enables the creation of complex data structures such as linked lists, trees, and hash tables, which underpin the functionality of most software systems. However, it also introduces challenges like memory leaks and segmentation faults if used incorrectly, highlighting the need for careful memory management.
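A singly linked list shows how references chain separate pieces of memory into one structure. This is a minimal sketch with names chosen for illustration. Python manages memory automatically, so the dangling-pointer problems mentioned above would only appear in a language with manual memory management, such as C.

```python
class Node:
    """One element of a singly linked list."""

    def __init__(self, value, next_node=None):
        self.value = value
        self.next = next_node  # reference to the following node, or None


def prepend(head, value):
    """Return a new head node that points at the old list."""
    return Node(value, head)


def to_list(head):
    """Follow the chain of references and collect the values."""
    values = []
    current = head
    while current is not None:
        values.append(current.value)
        current = current.next
    return values


head = None
for value in (3, 2, 1):
    head = prepend(head, value)
print(to_list(head))  # [1, 2, 3]
```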

Execution: How the CPU Runs Code

Once the code has been compiled and loaded into memory, execution begins. The central processing unit (CPU) is responsible for decoding and executing machine instructions. Each instruction specifies an operation (arithmetic, logic, control flow, or data movement) that the CPU performs in one or more clock cycles.

The CPU follows the fetch-decode-execute cycle. It fetches the next instruction from memory, decodes it to determine what action to perform, and executes the operation. This cycle repeats billions of times per second, and modern processors multiply that throughput further by pipelining instructions and running several cores in parallel.

During execution, registers — small, high-speed storage units within the CPU — hold intermediate values and addresses. The instruction pointer (or program counter) tracks the next instruction to execute. Control flow instructions like jumps, branches, and function calls alter this pointer, allowing for loops, conditionals, and modular function execution.
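The cycle and the role of the program counter can be imitated with a toy machine. The instruction set below (`LOAD`, `ADD`, `JNZ`, `HALT`) is invented for this sketch and does not correspond to any real processor.

```python
def run(program):
    """Run (opcode, operand) pairs on a one-register toy machine.

    Returns the final accumulator value and the number of instructions executed.
    """
    acc = 0       # the single register
    pc = 0        # program counter: index of the next instruction
    executed = 0
    while pc < len(program):
        opcode, operand = program[pc]  # fetch
        pc += 1                        # by default, continue with the next one
        executed += 1
        if opcode == "LOAD":           # decode and execute
            acc = operand
        elif opcode == "ADD":
            acc += operand
        elif opcode == "JNZ":          # jump if the accumulator is non-zero
            if acc != 0:
                pc = operand
        elif opcode == "HALT":
            break
        else:
            raise ValueError(f"unknown opcode {opcode!r}")
    return acc, executed


countdown = [
    ("LOAD", 3),     # 0: acc = 3
    ("ADD", -1),     # 1: acc -= 1
    ("JNZ", 1),      # 2: loop back to instruction 1 while acc != 0
    ("HALT", None),  # 3: stop
]
print(run(countdown))  # (0, 8)
```

The loop exists only because `JNZ` overwrites the program counter, which is how branches and loops work on real hardware as well.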

The CPU interacts closely with the memory hierarchy, which includes caches, main memory, and storage. Caches store frequently used data near the processor to reduce latency, while main memory provides larger but slower access. This layered design optimizes performance and ensures efficient utilization of resources.
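The benefit of a cache depends on how often a program revisits the same data. The sketch below simulates a small least-recently-used cache in software and measures its hit rate for two access patterns. It is a simplified model with an assumed replacement policy, not a description of how any specific CPU cache is built.

```python
from collections import OrderedDict


def hit_rate(addresses, capacity):
    """Fraction of accesses served by an LRU cache holding `capacity` entries."""
    cache = OrderedDict()
    hits = 0
    for address in addresses:
        if address in cache:
            hits += 1
            cache.move_to_end(address)     # mark as most recently used
        else:
            cache[address] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used
    return hits / len(addresses)


looping = [0, 1, 2, 3] * 25   # small working set, reused many times
streaming = list(range(100))  # every address touched exactly once
print(hit_rate(looping, 4), hit_rate(streaming, 4))  # 0.96 0.0
```

Code that keeps its working set small and reuses it tends to run from cache, while code that streams through memory once gains little from it.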

Through these micro-level operations, the CPU brings software to life, translating binary instructions into meaningful activity. Whether rendering an image, processing a database query, or training a neural network, every computation traces back to these fundamental execution cycles.

Abstraction Layers and Modularity

As software systems grow, they become too complex to manage as monolithic blocks of code. Abstraction and modularity are principles that allow developers to break systems into manageable components. Each module encapsulates a specific functionality and interacts with others through well-defined interfaces.

Abstraction hides implementation details, allowing programmers to reason about systems at different levels. For example, a file-handling module exposes operations like “open,” “read,” and “write” without revealing how data is stored on disk. Similarly, high-level frameworks abstract away network protocols or memory management, enabling developers to focus on business logic.
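A small sketch of this idea: callers depend on an abstract storage interface and never see how the data is kept. The names `Storage`, `InMemoryStorage`, and `save_report` are hypothetical and exist only for this example.

```python
from abc import ABC, abstractmethod


class Storage(ABC):
    """What callers may rely on: named blobs can be written and read back."""

    @abstractmethod
    def write(self, name, data):
        ...

    @abstractmethod
    def read(self, name):
        ...


class InMemoryStorage(Storage):
    """One possible implementation; a disk-backed one could replace it."""

    def __init__(self):
        self._blobs = {}

    def write(self, name, data):
        self._blobs[name] = bytes(data)

    def read(self, name):
        return self._blobs[name]


def save_report(storage, text):
    # This code knows only the interface, not where the bytes end up.
    storage.write("report.txt", text.encode("utf-8"))


store = InMemoryStorage()
save_report(store, "all systems nominal")
print(store.read("report.txt"))  # b'all systems nominal'
```

Swapping in a different `Storage` subclass would not require any change to `save_report`.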

Object-oriented programming (OOP) extends this principle by organizing code into classes and objects that represent real-world entities. Encapsulation, inheritance, and polymorphism provide mechanisms for code reuse and extensibility. In functional programming, abstraction occurs through pure functions and higher-order operations that manipulate data without side effects.

These abstractions not only simplify design but also make software systems more resilient. When modules are independent, developers can modify or replace them without disrupting the entire system. This modular architecture is foundational to modern software engineering, from microservices in cloud computing to component-based user interfaces in web applications.

Data Flow and Control Flow

Every software system has two fundamental aspects: data flow and control flow. Data flow describes how information moves through a system — from input to processing to output. Control flow determines the order in which operations are executed, guided by conditionals, loops, and function calls.

In procedural programming, control flow is explicit: the program executes statements sequentially unless directed otherwise by control structures. In event-driven architectures, such as graphical user interfaces or web servers, control flow is determined by external events like user actions or network requests.
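In an event-driven design, the program registers handlers and lets incoming events decide what runs next. The `EventBus` class and the event names below are assumptions made for this sketch; GUI toolkits and web frameworks provide their own, richer equivalents.

```python
from collections import defaultdict


class EventBus:
    """Map event names to handler functions and invoke them on demand."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, event_name, handler):
        self._handlers[event_name].append(handler)

    def publish(self, event_name, payload):
        for handler in self._handlers[event_name]:
            handler(payload)


log = []
bus = EventBus()
bus.subscribe("click", lambda payload: log.append(f"clicked {payload}"))
bus.subscribe("request", lambda payload: log.append(f"GET {payload}"))

# The order of execution is decided by the events that arrive,
# not by the order in which the handlers were written.
for name, payload in [("request", "/home"), ("click", "save"), ("request", "/about")]:
    bus.publish(name, payload)

print(log)  # ['GET /home', 'clicked save', 'GET /about']
```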

Data flow architectures, common in parallel and reactive systems, focus on how data transforms as it passes through a network of operators. These systems can handle continuous streams of input, making them ideal for real-time analytics and sensor processing.
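Python generators give a compact way to sketch such a pipeline: each stage consumes values from the previous one and passes results on as they become available. The stage names and the sample temperature readings are invented for illustration.

```python
def readings(samples):
    """Source: emit raw sensor values one at a time."""
    for sample in samples:
        yield sample


def to_celsius(stream):
    """Transform: convert Fahrenheit readings to Celsius."""
    for fahrenheit in stream:
        yield (fahrenheit - 32) * 5 / 9


def above(stream, threshold):
    """Filter: keep only values greater than the threshold."""
    for value in stream:
        if value > threshold:
            yield value


pipeline = above(to_celsius(readings([32, 212, 50, 104])), 20)
print(list(pipeline))  # [100.0, 40.0]
```

Because each stage pulls one value at a time, the same structure works for an unbounded stream as well as for a short list.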

Both flows converge in modern software, where asynchronous operations, callbacks, and message queues manage complex interactions. Understanding and optimizing these flows is critical for achieving responsive, efficient systems.

The Role of APIs and Interfaces

Software rarely operates in isolation. APIs (Application Programming Interfaces) define the boundaries through which different parts of a system communicate. They specify the format of requests and responses, allowing interoperability between modules, services, and even entirely different systems.

APIs can be internal, facilitating communication within a single application, or external, providing access to third-party services. For example, a weather application might use an external API to fetch real-time forecasts from a remote server. REST, GraphQL, and gRPC are among the most common approaches to structuring these interactions.

Interfaces are the contracts that guarantee consistent behavior. They enable loose coupling — the ability to change one component without breaking others — and promote scalability. In large systems, well-designed APIs become the backbone of maintainability and evolution, allowing teams to work independently on different parts of the software.

Testing and Validation

Once code has been written and integrated, it must be tested to ensure correctness and reliability. Testing verifies that the software behaves as intended under various conditions. It is an integral part of transforming code into dependable functionality.

Unit testing focuses on individual functions or modules, ensuring that each component works in isolation. Integration testing examines how components interact, while system testing validates the entire software against its requirements. Automated testing frameworks allow continuous verification as the code evolves, detecting regressions early in the development cycle.
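A unit test in practice can be as small as the sketch below, which uses Python's standard `unittest` framework. The function under test, `apply_discount`, is a hypothetical example rather than part of any real system.

```python
import unittest


def apply_discount(price, percent):
    """Return the price after a percentage discount, rounded to cents."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (100 - percent) / 100, 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(200.0, 120)


# Run the tests programmatically; a project would usually rely on a test runner.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
print(result.wasSuccessful())  # True
```

Running such tests automatically on every change is what lets regressions surface early.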

Beyond functional correctness, performance testing evaluates speed and scalability, and security testing identifies vulnerabilities. Together, these practices ensure that the functionality users experience is not only correct but also robust, secure, and efficient.

Deployment and Execution in the Real World

After development and testing, software must be deployed into a real-world environment. Deployment translates code into operational systems accessible to users. Modern deployment pipelines automate this process through continuous integration and continuous delivery (CI/CD), ensuring smooth transitions from development to production.

In distributed systems, deployment involves orchestrating multiple services across servers or cloud instances. Tools like Docker package software and its dependencies into containers, and orchestrators like Kubernetes schedule and manage those containers, enabling consistent behavior across environments. Monitoring tools track performance and detect failures, and the orchestration layer can then restart or scale services so that the system recovers with little manual intervention.

Once deployed, the software becomes part of a living ecosystem. It interacts with users, other applications, and changing data sources. Feedback loops from user behavior and analytics inform future updates, making functionality an evolving process rather than a static endpoint.

The Interplay of Software and Hardware

Though software operates in the abstract world of logic, its execution depends entirely on physical hardware. Processors execute instructions through electrical signals, memory stores data as charge states, and storage devices use magnetic or solid-state mechanisms to preserve information.

This deep interplay means that software design determines how well hardware is used, and hardware capabilities in turn shape how software is written. Compilers optimize code for specific architectures, while hardware evolves to support advanced instruction sets and parallelism. Graphics processing units (GPUs), for instance, were originally built for rendering and became the backbone of machine learning computations because of their massively parallel architecture.

Understanding this synergy allows engineers to write software that fully leverages hardware potential, achieving higher performance and energy efficiency.

The Human Element in Software Systems

Despite its technical foundations, software is ultimately a human endeavor. Every line of code reflects choices, trade-offs, and creativity. Teams collaborate through version control systems, communicate through documentation, and evolve systems over years of maintenance and refinement.

Good software design emphasizes readability, simplicity, and intent. Code is not only written for machines to execute but also for humans to understand and modify. This human-centric perspective ensures that functionality remains adaptable, sustainable, and aligned with the evolving needs of users.

Emergent Complexity and Evolution

As software systems grow, emergent complexity becomes inevitable. Interactions between modules can lead to behaviors that were not explicitly programmed, from performance bottlenecks to unpredictable bugs. Managing this complexity requires architectural foresight, rigorous testing, and continuous refactoring.

Over time, systems evolve through new requirements, technologies, and paradigms. Legacy code, written for outdated environments, often persists because it continues to provide essential functionality. Modernization efforts — refactoring, rewriting, or containerizing — breathe new life into old systems, ensuring their relevance in changing technological landscapes.

Conclusion

When we say code becomes functionality, we are describing a remarkable transformation. It begins with human thought — the abstract conception of an idea — and travels through layers of logic, language, compilation, and execution until it manifests as tangible behavior on a machine. Each layer, from algorithms to hardware, contributes to this metamorphosis, turning symbols into motion, data into decisions, and intention into outcome.

Software systems embody the fusion of creativity and precision. They are built upon mathematical rigor yet shaped by human imagination. Every time an application runs, it enacts a symphony of interactions among logic, memory, and energy — a dance that brings code to life as functionality. Understanding this process not only deepens appreciation for technology but also reveals the profound beauty of how ideas become reality in the digital age.