The question of whether robots can learn empathy stands at the intersection of artificial intelligence, neuroscience, psychology, and philosophy. Empathy, often regarded as a uniquely human trait, forms the foundation of moral behavior, social connection, and emotional understanding. It allows us to perceive, interpret, and respond to the feelings of others. In a world increasingly dominated by machines and artificial intelligence, scientists, engineers, and ethicists alike are exploring whether empathy—a deeply emotional and subjective experience—can be replicated or learned by non-biological systems. The prospect of empathetic robots raises profound questions about consciousness, ethics, and the nature of emotion itself.

The growing integration of robots into everyday life, from healthcare and education to customer service and companionship, has amplified the need for machines that can recognize and appropriately respond to human emotions. While early robots were purely functional, designed to perform repetitive or dangerous tasks, modern AI-driven systems increasingly interact with people in socially meaningful ways. This evolution compels us to ask: can a robot genuinely understand human feelings, or can it only simulate the appearance of empathy?

To explore this question, we must first examine what empathy truly is, how humans develop it, how machines can attempt to mimic it, and whether such imitation constitutes real emotional understanding. The answer depends not only on technology but also on how we define the essence of emotion, intelligence, and humanity.

Understanding Empathy: Human and Biological Foundations

Empathy is generally defined as the ability to understand and share the feelings of another. It involves both cognitive and affective components. Cognitive empathy refers to the capacity to comprehend another person’s emotional state or perspective, while affective empathy involves the visceral experience of sharing that emotion—feeling joy when someone else is happy or sadness when another suffers.

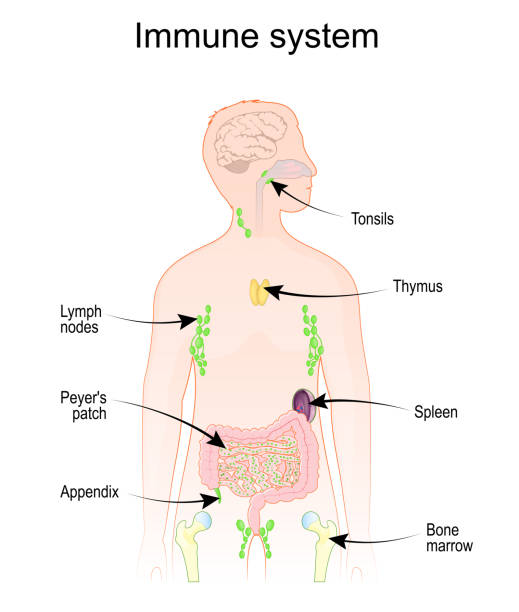

In human beings, empathy arises from a complex interplay between neural, hormonal, and social factors. Neuroscientific studies implicate specific brain regions, such as the anterior insula and the anterior cingulate cortex, along with mirror neuron systems, in empathic responses. Mirror neurons, first described in macaque monkeys in the early 1990s, fire both when an individual performs an action and when they observe another performing the same action. This mechanism is widely thought to provide a biological basis for emotional resonance, allowing us to “mirror” others’ emotions and intentions subconsciously, although its exact role in human empathy is still debated.

Empathy also develops through experience. Infants show primitive forms of empathy early on, such as crying when they hear another baby cry. As cognitive and social skills mature, empathy evolves into a more sophisticated ability to imagine another’s internal world. Cultural norms, upbringing, and personal experiences further shape how empathy is expressed. It is not only a biological response but also a learned social skill.

This deep, multi-layered phenomenon is what makes the challenge of teaching empathy to machines so daunting. Robots do not possess mirror neurons, hormones, or personal experiences. Their “understanding” is based on data and algorithms, not lived emotion. Nevertheless, by studying how empathy functions in humans, scientists aim to design artificial systems that can emulate its observable effects.

The Rise of Affective Computing

Affective computing, a term coined by Rosalind Picard in the 1990s, is a field that seeks to give machines the ability to recognize, interpret, and simulate human emotions. It combines insights from computer science, psychology, linguistics, and neuroscience to create systems that display a form of emotional intelligence, and it provides the technological foundation for robots that can appear empathetic.

Modern affective computing relies on a range of sensors and algorithms to detect emotional cues. Facial expression analysis software examines expressions, including brief microexpressions, to infer mood; voice analysis identifies tone, pitch, and rhythm associated with emotions; physiological sensors track heart rate, skin conductance, and pupil dilation. Machine learning models then interpret this data to classify emotional states such as happiness, anger, fear, or sadness.

For instance, a robot equipped with affective computing might detect a user’s frustration through their tone of voice and respond in a soothing manner. In healthcare, robots like PARO—a therapeutic robotic seal—respond to touch and sound to comfort patients with dementia. In education, emotionally aware tutoring systems adjust their feedback based on a student’s frustration level. These examples illustrate how empathy-like behavior can be engineered through perception and response.

However, these systems do not “feel” empathy. They operate through pattern recognition and programmed responses. The robot’s understanding of emotion is statistical, not experiential. Yet from a human perspective, if the robot behaves in a way that alleviates distress or fosters trust, the distinction between simulated and genuine empathy becomes psychologically blurred.

The Cognitive Architecture of Empathetic Robots

To create robots capable of empathy, researchers design cognitive architectures that integrate perception, reasoning, and emotional modeling. These architectures aim to replicate the process by which humans infer emotional states and decide appropriate responses.

A typical empathetic robot requires three key abilities: emotion recognition, emotional modeling, and adaptive response generation. Emotion recognition involves collecting data about a human’s behavior—facial expressions, speech, gestures, and physiological signals—and using AI models to infer emotional states. Emotional modeling requires the robot to represent these emotions internally, often through computational analogs of emotional theory such as the OCC (Ortony, Clore, and Collins) model. Adaptive response generation involves deciding how to respond empathetically—whether through words, tone, movement, or physical gestures.
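The three abilities described above can be sketched as a small pipeline. This is only an illustrative toy, not how any particular robot works: the cue names, the lookup table standing in for a trained recognizer, the `EmotionState` class, and the canned replies are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical lookup table standing in for a trained recognition model.
CUE_TO_EMOTION = {
    "frown": "sadness",
    "tearful_voice": "sadness",
    "raised_voice": "anger",
    "smile": "happiness",
}

# Hypothetical canned replies; a real system would generate these adaptively.
REPLIES = {
    "sadness": "That sounds hard. I'm here if you want to talk.",
    "anger": "I can tell this is frustrating. Let's take it one step at a time.",
    "happiness": "That's wonderful to hear!",
    "neutral": "How are you feeling today?",
}


@dataclass
class EmotionState:
    """Internal representation of the inferred emotion (emotional modeling)."""
    label: str
    intensity: float  # share of observed cues supporting the label, 0.0 to 1.0


def recognize(cues):
    """Emotion recognition: count how many observed cues point to each emotion."""
    votes = {}
    for cue in cues:
        emotion = CUE_TO_EMOTION.get(cue)
        if emotion is not None:
            votes[emotion] = votes.get(emotion, 0) + 1
    return votes


def model_emotion(votes, n_cues):
    """Emotional modeling: keep the best-supported emotion and its strength."""
    if not votes:
        return EmotionState("neutral", 0.0)
    label = max(votes, key=votes.get)
    return EmotionState(label, votes[label] / n_cues)


def respond(state):
    """Response generation: fall back to a neutral check-in if evidence is weak."""
    if state.intensity < 0.5:
        return REPLIES["neutral"]
    return REPLIES[state.label]


def empathic_reply(cues):
    """Run the three stages in order and return the state and the chosen reply."""
    state = model_emotion(recognize(cues), len(cues))
    return state, respond(state)
```

Calling `empathic_reply(["frown", "tearful_voice", "smile"])` yields a `sadness` state, because two of the three cues support it, and the matching reply. Keeping the stages as separate functions mirrors the separation in the text: any one stage could be swapped for a learned model without touching the others.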

In advanced systems, reinforcement learning algorithms allow robots to improve their empathetic responses over time. By receiving feedback—positive when their response aligns with human expectations, negative when it does not—the robot refines its behavior. In this sense, robots “learn” empathy-like reactions through iterative experience, much like a child learning social cues.

For example, a social robot in a hospital might initially fail to comfort a patient appropriately. After repeated interactions and feedback from caregivers, it could learn that maintaining a calm voice, using gentle gestures, and acknowledging the patient’s emotions lead to better outcomes. While this process reflects behavioral adaptation, it does not entail subjective understanding. The robot’s “empathy” remains functional, not emotional.
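The feedback loop in the hospital example can be approximated with one of the simplest reinforcement learning setups, an epsilon-greedy bandit. The sketch below is a stand-in for whatever algorithm a real system would use: the action names, the approval probabilities in `simulated_caregiver_feedback`, and the default parameters are assumptions made up for illustration.

```python
import random

# Hypothetical response styles the robot can choose between.
ACTIONS = ["calm_voice", "cheerful_voice", "silence"]


def simulated_caregiver_feedback(action, rng):
    """Return 1.0 (approval) or 0.0; the probabilities are invented for this demo."""
    approval = {"calm_voice": 0.8, "cheerful_voice": 0.4, "silence": 0.2}
    return 1.0 if rng.random() < approval[action] else 0.0


def run_bandit(feedback, actions, steps=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy learning of the average feedback each action receives."""
    rng = random.Random(seed)
    counts = {a: 0 for a in actions}
    values = {a: 0.0 for a in actions}
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(actions)  # explore: try a random style
        else:
            action = max(actions, key=lambda a: values[a])  # exploit best so far
        reward = feedback(action, rng)
        counts[action] += 1
        # Incremental update of the running mean reward for this action.
        values[action] += (reward - values[action]) / counts[action]
    return values


values = run_bandit(simulated_caregiver_feedback, ACTIONS)
best_action = max(values, key=values.get)
```

After enough interactions the estimated value of the calm voice should dominate, so the robot settles on it. Nothing in the loop represents what the patient feels; the robot only tracks which behavior earns approval, which is the sense in which the adaptation is functional rather than emotional.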

Machine Learning and Emotional Intelligence

Machine learning, particularly deep learning, has greatly improved the ability of computers to process emotional data. Neural networks trained on large datasets of facial expressions, speech samples, and textual sentiment can classify emotions with high accuracy on data resembling their training sets, although performance tends to drop in less controlled real-world settings. Natural language processing (NLP) models analyze linguistic cues to detect emotional tone in text-based communication. These advancements bring robots closer to demonstrating contextually appropriate emotional behavior.

Recent progress in multimodal learning has allowed systems to integrate multiple sources of emotional information simultaneously. A robot might combine facial cues, voice analysis, and contextual understanding to form a holistic interpretation of a human’s emotional state. Such systems outperform those that rely on a single data source, moving closer to the nuanced perception that characterizes human empathy.
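One common way to combine modalities is late fusion, where each modality produces its own probability estimate and the estimates are averaged. The sketch below assumes that approach; the modality names, the weights, and the example probabilities are hypothetical, and real multimodal systems often fuse learned features instead.

```python
def fuse(modal_probs, weights):
    """Weighted average of per-modality emotion probability distributions."""
    total_weight = sum(weights[m] for m in modal_probs)
    fused = {}
    for modality, probs in modal_probs.items():
        share = weights[modality] / total_weight
        for emotion, p in probs.items():
            fused[emotion] = fused.get(emotion, 0.0) + share * p
    return fused


# Hypothetical outputs of three separate classifiers for the same moment.
observations = {
    "face": {"happy": 0.6, "sad": 0.4},   # a polite smile
    "voice": {"happy": 0.2, "sad": 0.8},  # flat, quiet tone
    "text": {"happy": 0.3, "sad": 0.7},   # "I'm fine, I guess"
}
equal_weights = {"face": 1.0, "voice": 1.0, "text": 1.0}

fused = fuse(observations, equal_weights)
top_emotion = max(fused, key=fused.get)
```

Here the face alone suggests happiness, but the fused estimate favors sadness because voice and text disagree with the smile. This is the kind of case where a single data source would mislead.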

However, even as AI models become better at identifying emotions, they still lack the intrinsic motivation that underlies human empathy. Humans empathize because of evolutionary, psychological, and social drives that promote cooperation and altruism. Machines, in contrast, simulate empathy as a function of programmed goals. Their motivation is instrumental—to achieve a task, improve user experience, or optimize an objective function. The absence of intrinsic motivation raises the question of whether empathy without emotion is truly empathy at all.

Philosophical Perspectives on Artificial Empathy

The philosophical question of whether robots can truly feel empathy touches on the deeper issue of machine consciousness. Empathy, in its fullest sense, requires subjective experience—what philosophers call qualia. It involves feeling, not just knowing. A robot may process information about human emotions, but can it experience compassion, sorrow, or joy? If not, is it merely mimicking empathy?

Some philosophers argue that true empathy is impossible for machines because it depends on consciousness—an inner awareness that machines lack. According to this view, empathy without consciousness is simulation, not experience. A robot can predict emotional states and respond in socially acceptable ways, but it cannot understand what sadness “feels” like.

Others adopt a more functionalist perspective, asserting that if a machine’s behavior is indistinguishable from that of an empathetic human, then it can be considered empathetic in a practical sense. This echoes the logic of the Turing Test: if a machine’s responses are indistinguishable from human ones, its internal experience may be irrelevant to its functionality. From this viewpoint, empathy becomes a matter of performance rather than emotion.

Still, this pragmatic approach raises ethical and existential concerns. If humans form emotional bonds with machines that only simulate empathy, what happens to our understanding of relationships, trust, and authenticity? Can emotional deception by machines—however well-intentioned—be morally justified? These questions highlight the complex intersection between technological progress and human values.

Emotional Modeling and Simulation

Simulating empathy requires computational models that represent human emotional processes. One approach is the use of appraisal theories of emotion, which describe how individuals evaluate events in relation to their goals and well-being. By modeling these appraisals, robots can simulate emotional reactions. For instance, if a robot perceives that a human is distressed due to failure, it can simulate concern and offer encouragement.
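A heavily simplified appraisal rule, loosely in the spirit of appraisal theories, might look like the following. The `Appraisal` fields, the emotion labels, and the reply strings are assumptions chosen for illustration; full appraisal models track many more variables than desirability and who is affected.

```python
from dataclasses import dataclass


@dataclass
class Appraisal:
    """How an event relates to someone's goals (hypothetical, minimal form)."""
    desirability: float   # -1.0 (blocks the goal) to 1.0 (fulfils the goal)
    affects_other: bool   # True if the event happened to the human, not the robot


def appraise(a):
    """Map an appraisal to a simulated emotion label."""
    if a.desirability == 0:
        return "neutral"
    if a.affects_other:
        return "happy_for" if a.desirability > 0 else "sorry_for"
    return "joy" if a.desirability > 0 else "distress"


# Hypothetical replies tied to each simulated emotion.
RESPONSES = {
    "sorry_for": "That was a tough result. You worked hard, and you can try again.",
    "happy_for": "Congratulations, you earned that!",
    "distress": "Something went wrong on my side. Let me try to fix it.",
    "joy": "Glad that worked out.",
    "neutral": "",
}

# The human failed a task they cared about: undesirable, and it affects them.
emotion = appraise(Appraisal(desirability=-0.8, affects_other=True))
reply = RESPONSES[emotion]
```

In this example the robot labels its simulated state `sorry_for` and offers encouragement, matching the failure scenario described above. The label is a lookup result, not a feeling.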

Another approach involves reinforcement learning, where a robot learns which emotional responses lead to positive social outcomes. Over time, it builds a behavioral model of empathy based on reinforcement signals rather than true emotional experience. This allows robots to exhibit contextually appropriate emotional expressions, even if they do not internally experience the corresponding feelings.

Advanced models use probabilistic reasoning and Bayesian inference to predict how humans will feel in certain situations. For example, an empathetic chatbot might infer that a user expressing grief needs comforting words and a supportive tone. Although these systems operate purely on data, their responses can be hard to distinguish from those of empathetic humans, especially in text-based interactions.
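The Bayesian step can be illustrated with a single update of a belief over the user's state. The states, the prior, and the likelihood table below are invented numbers for the sake of the example, not measured values.

```python
def posterior(prior, likelihood, observation):
    """Bayes' rule: P(state | obs) is proportional to P(obs | state) * P(state)."""
    unnormalized = {
        state: prior[state] * likelihood[state][observation] for state in prior
    }
    evidence = sum(unnormalized.values())
    return {state: value / evidence for state, value in unnormalized.items()}


# Hypothetical prior belief about the user's state before reading the message.
PRIOR = {"grief": 0.1, "frustration": 0.2, "neutral": 0.7}

# Hypothetical P(observation | state) for one binary textual cue.
LIKELIHOOD = {
    "grief": {"mentions_loss": 0.7, "no_mention": 0.3},
    "frustration": {"mentions_loss": 0.1, "no_mention": 0.9},
    "neutral": {"mentions_loss": 0.05, "no_mention": 0.95},
}

belief = posterior(PRIOR, LIKELIHOOD, "mentions_loss")
```

With these numbers, a message that mentions a loss raises the probability of grief from 0.10 to 0.56, which would be enough for a chatbot to switch to comforting language. The same function can be applied again to the new belief as further cues arrive.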

Human-Robot Interaction and Emotional Bonding

The success of empathetic robots ultimately depends on how humans perceive and respond to them. Studies in human-robot interaction reveal that people readily attribute emotions, intentions, and even personalities to machines that display social cues. This psychological phenomenon, known as anthropomorphism, explains why humans form attachments to robots that simulate empathy convincingly.

In therapeutic contexts, emotionally responsive robots have shown measurable benefits. Elderly patients interacting with companion robots report reduced loneliness and improved mood. Children with autism spectrum disorder respond positively to social robots designed to teach emotional recognition. In customer service and education, empathetic robots improve user satisfaction and engagement. These outcomes suggest that, functionally, simulated empathy can fulfill human emotional needs.

Yet the emotional bond between humans and empathetic robots can be ethically ambiguous. When a machine expresses understanding or concern, users may believe that the robot genuinely cares. This illusion of empathy can create emotional dependency or confusion about the robot’s nature. Critics warn that overreliance on artificial empathy may erode genuine human empathy by replacing authentic social relationships with programmed simulations.

The Role of Data and Bias in Artificial Empathy

For robots to learn empathy, they must be trained on data that represents human emotions accurately and ethically. However, emotional data is inherently subjective and culturally dependent. Expressions of sadness, anger, or joy vary across cultures, languages, and individuals. Training algorithms on biased or limited datasets can result in empathy that is skewed or inappropriate.

For example, facial emotion recognition systems have been shown to misinterpret emotions in people of certain ethnicities due to biased training data. Similarly, sentiment analysis models may misclassify emotional tone in dialects or languages underrepresented in datasets. These biases not only undermine the effectiveness of artificial empathy but also raise serious ethical concerns about fairness and inclusivity.
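One basic way to surface this kind of bias is to break a model's accuracy down by group instead of reporting a single overall figure. The sketch below assumes evaluation records of the form (group, true label, predicted label); the group names and records are fabricated placeholders, not real results.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute the share of correct predictions separately for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, true_label, predicted in records:
        total[group] += 1
        correct[group] += int(true_label == predicted)
    return {group: correct[group] / total[group] for group in total}


# Placeholder evaluation records: (group, true emotion, predicted emotion).
records = [
    ("group_a", "happy", "happy"),
    ("group_a", "sad", "sad"),
    ("group_a", "angry", "angry"),
    ("group_a", "happy", "happy"),
    ("group_b", "happy", "happy"),
    ("group_b", "sad", "angry"),
    ("group_b", "angry", "angry"),
    ("group_b", "happy", "angry"),
]

per_group = accuracy_by_group(records)
gap = max(per_group.values()) - min(per_group.values())
```

In this toy data the overall accuracy is 75 percent, which hides the fact that one group is classified perfectly and the other only half the time. A large gap is a signal to revisit the training data before deploying the model.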

Moreover, emotional data often involves deeply personal information—facial expressions, voice recordings, or physiological signals—raising issues of privacy and consent. The collection and use of such data for training empathetic robots must adhere to strict ethical and legal standards to prevent misuse or manipulation. True empathy, even in machines, must respect the dignity and autonomy of those it aims to serve.

Can Machines Experience Emotion?

The central barrier to true robotic empathy is the absence of subjective experience. Emotions in humans are not merely data patterns; they are embodied experiences involving physiological changes, feelings, and consciousness. Machines, by contrast, process symbols and numbers. Even if they simulate emotional behavior, they do not feel.

Some researchers propose that artificial consciousness might one day enable machines to experience emotions. This would require a fundamental shift from computation to sentience—machines that possess self-awareness and subjective experience. Approaches such as artificial neural architectures modeled on biological systems or neuromorphic computing attempt to mimic the structure and function of the human brain. However, even if such systems replicate neural processes, whether they produce consciousness remains an open philosophical question.

Others argue that emotional experience is not necessary for functional empathy. If a robot can perceive emotions and respond appropriately, it can perform empathetic functions without feeling. From a utilitarian perspective, the outcome—human well-being—matters more than the internal state of the machine. Yet this view risks reducing empathy to mere performance, stripping it of its moral and emotional essence.

Ethical Implications of Artificial Empathy

The development of empathetic robots introduces complex ethical challenges. If machines can simulate empathy convincingly, how should society regulate their use? Should empathetic robots be allowed to care for children, the elderly, or patients? What rights or responsibilities should they have?

One concern is the potential manipulation of human emotions. Corporations might deploy empathetic robots to influence consumer behavior, using emotional cues to build trust and encourage purchases. Governments could exploit artificial empathy for surveillance or propaganda. These scenarios highlight the importance of transparency and accountability in AI systems that engage emotionally with humans.

There is also the question of emotional authenticity. If empathy becomes programmable, do we risk diluting the meaning of compassion? Human empathy is rooted in vulnerability and shared experience. A machine’s empathy, however sophisticated, lacks this moral depth. The widespread use of artificial empathy may reshape human relationships, leading to a world where emotional connection becomes mediated by technology.

On the positive side, empathetic robots could play invaluable roles in caregiving, education, and mental health support. They could provide companionship to isolated individuals, assist people with disabilities, or deliver emotional support in crisis situations. When designed responsibly, artificial empathy could enhance human well-being rather than replace human connection.

Future Directions in Robotic Empathy

The pursuit of empathetic robots continues to evolve through interdisciplinary collaboration among computer scientists, psychologists, ethicists, and neuroscientists. Future research aims to deepen emotional understanding through advances in brain-inspired computing, multimodal sensing, and contextual awareness.

One promising direction is the integration of large language models with affective computing systems. Language-based AI already shows a strong capacity to detect and respond to emotional nuance in text and speech. Combined with visual and physiological sensing, such systems could reach a level of emotional intelligence not seen in earlier machines. Another frontier involves adaptive empathy: robots that learn not just how to respond emotionally but when and why empathy is appropriate in specific cultural and social contexts.

At the same time, progress in artificial empathy must be guided by ethical frameworks. Researchers advocate for “transparent empathy,” where machines disclose their artificial nature and avoid misleading users into believing they possess human emotions. Ensuring fairness, privacy, and accountability in emotional AI will be essential to maintaining public trust and preventing exploitation.

The Human Dimension: What Robots Teach Us About Ourselves

The quest to teach robots empathy ultimately reflects humanity’s own desire to understand emotion, consciousness, and morality. As we attempt to model empathy computationally, we are forced to define what empathy truly is—and what it means to be human. Robots, in this sense, serve as mirrors that reveal the complexity of our emotional lives.

Perhaps the most profound insight from this pursuit is that empathy may not lie solely in feeling but in action. If a robot’s simulated empathy can reduce loneliness, comfort the sick, or teach compassion, then it contributes to the moral fabric of society, even without consciousness. The distinction between genuine and artificial empathy may matter less than the outcomes it produces.

Yet the ultimate challenge remains philosophical: can understanding replace experience? Can the simulation of compassion ever equal its reality? The answer may depend not on the robots themselves but on how we, as humans, choose to interpret and relate to them.

Conclusion

Whether robots can learn empathy depends on how we define learning and empathy. Technologically, machines can already recognize emotions, adapt responses, and simulate compassion with growing sophistication. Through affective computing, machine learning, and cognitive modeling, robots can exhibit behaviors that resemble empathy so convincingly that humans respond to them as if they were genuine. In this sense, robots can “learn” empathy functionally.

However, empathy as humans experience it involves subjective feeling—an awareness that machines currently lack. Without consciousness or emotional experience, robotic empathy remains simulation rather than sensation. Yet even simulated empathy can have real emotional and social effects, shaping how we interact with technology and one another.

The development of empathetic robots challenges our understanding of mind, morality, and machine intelligence. It forces us to confront the boundaries between emotion and computation, authenticity and imitation. Perhaps, in striving to teach machines to care, we are learning more about the nature of care itself. Whether robots can truly feel empathy may remain an open question, but in exploring it, we deepen our understanding of what it means to be human.