Sign language is a powerful means of communication for the deaf and hard-of-hearing communities around the world. While each country or region has developed its own sign language to reflect local cultures and contexts, these languages tend to be complex, with each consisting of thousands of unique signs. The structure and use of sign language are shaped by local customs, and the expressions used can vary dramatically, which often makes sign languages challenging to learn for non-native signers and difficult to understand across regions.

The increasing recognition of sign language as an essential form of communication has led to growing interest in using technology to bridge the gap between deaf communities and hearing people. Researchers have long been working on automatic sign language translation systems that use artificial intelligence (AI) to convert signs into written or spoken words. One building block of such systems is “word-level sign language recognition,” in which a model identifies the individual sign being performed. The field aims to create an accessible solution for deaf and hard-of-hearing individuals by using machine learning models to recognize signs in real time, improving communication and interaction.

In the latest advance in this field, a research group from Osaka Metropolitan University, led by Associate Professors Katsufumi Inoue and Masakazu Iwamura, has succeeded in significantly improving the accuracy of AI-based word-level sign language recognition. Their findings, published in the journal IEEE Access, represent a milestone in the development of more reliable automated sign language systems.

Overcoming the Challenges of Sign Language Recognition

Word-level sign language recognition has faced numerous challenges over the years. Early methods primarily focused on tracking general movements of the signer’s hands and upper body. While this allowed for a basic level of recognition, the approach failed to fully account for the complexities of human sign language. In sign languages, small changes in hand shape, hand position relative to the body, and even subtle movements of the face can alter the meaning of a sign. These tiny differences can cause serious issues in the accuracy of recognition systems.

Moreover, accurately interpreting sign language isn’t simply a matter of following hand movements. Understanding a sign often requires close attention to facial expressions, body posture, and the spatial relationship of the hands to each other and to the body. Misreading any of these factors can result in miscommunication or failure to correctly identify the sign being performed.
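To make the idea of "hand position relative to the body" concrete, here is a minimal Python sketch. It is only an illustration and not the research team's method: the function name `body_relative`, the choice of the shoulder midpoint as origin, and all pixel coordinates are assumptions made for this example. It shows how the same hand shape held near the chin or near the chest yields different body-relative coordinates, and how dividing by shoulder width keeps the result stable when the signer stands closer to or farther from the camera.

```python
import math

def body_relative(point, left_shoulder, right_shoulder):
    """Express a 2D keypoint relative to the shoulder midpoint,
    measured in units of shoulder width (hypothetical normalization)."""
    cx = (left_shoulder[0] + right_shoulder[0]) / 2
    cy = (left_shoulder[1] + right_shoulder[1]) / 2
    width = math.hypot(right_shoulder[0] - left_shoulder[0],
                       right_shoulder[1] - left_shoulder[1])
    if width == 0:
        raise ValueError("shoulders coincide; cannot normalize")
    return ((point[0] - cx) / width, (point[1] - cy) / width)

# Hypothetical pixel coordinates; image y grows downward.
left_shoulder, right_shoulder = (220.0, 300.0), (420.0, 300.0)
hand_near_chin = (320.0, 200.0)
hand_near_chest = (320.0, 340.0)

chin_rel = body_relative(hand_near_chin, left_shoulder, right_shoulder)
chest_rel = body_relative(hand_near_chest, left_shoulder, right_shoulder)
print("near chin: ", chin_rel)   # negative y: above the shoulder line
print("near chest:", chest_rel)  # positive y: below the shoulder line
```

A recognizer that only sees raw hand coordinates would have to learn this normalization implicitly, which is one reason body-relative features can help distinguish signs that differ mainly in where they are produced.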

How AI Is Helping to Solve These Issues

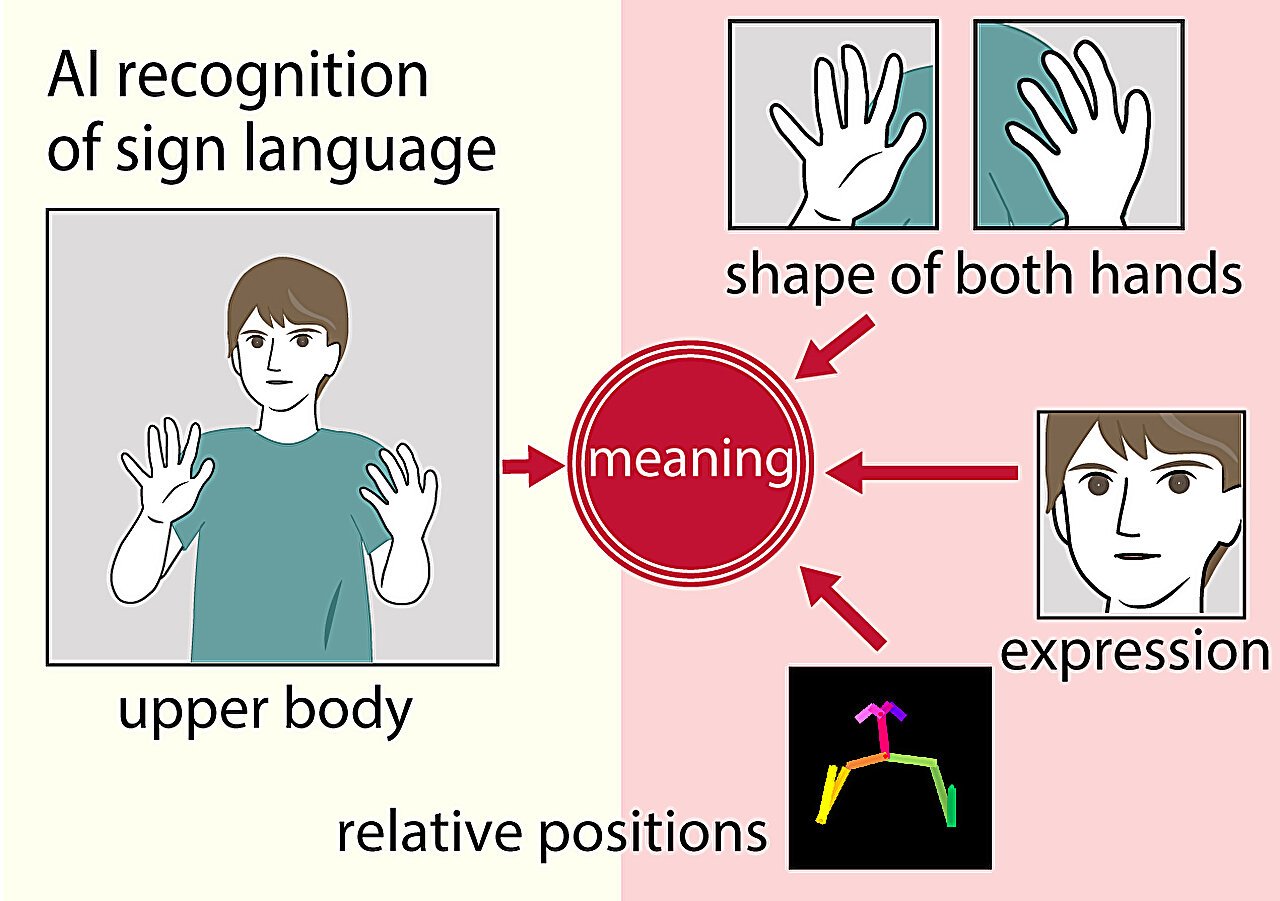

Inoue and his team worked with colleagues at the Indian Institute of Technology Roorkee to improve the AI system’s handling of these subtle nuances by integrating more detailed data beyond basic upper-body movement tracking. Traditional sign language recognition models used 2D or 3D positional data from the hands and arms. Inoue’s team went further by incorporating additional sources of information, such as hand and facial expressions and skeletal data reflecting the position of the hands relative to the body. This combination gives the system a more complete picture of what the signer is doing, capturing the finer details needed for reliable recognition.
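The general idea of combining several information sources can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the published architecture: the article does not say how the streams are combined, so the sketch assumes simple late fusion (averaging per-stream class probabilities). The stream names, the three-word vocabulary, and every score below are hypothetical placeholders.

```python
import math

def softmax(scores):
    """Convert raw scores to probabilities (numerically stable)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def fuse_streams(stream_scores, weights=None):
    """Late fusion: weighted average of each stream's class probabilities."""
    names = list(stream_scores)
    if weights is None:
        weights = {name: 1.0 for name in names}
    total_w = sum(weights[name] for name in names)
    n_classes = len(stream_scores[names[0]])
    fused = [0.0] * n_classes
    for name in names:
        probs = softmax(stream_scores[name])
        for i, p in enumerate(probs):
            fused[i] += weights[name] * p / total_w
    return fused

def argmax(values):
    return max(range(len(values)), key=values.__getitem__)

VOCAB = ["sign_a", "sign_b", "sign_c"]  # placeholder labels

# Hypothetical per-stream scores for one video clip.
scores = {
    "whole_body": [2.0, 1.9, 0.1],  # coarse motion: nearly tied
    "hands":      [0.5, 2.5, 0.2],  # hand shape favors sign_b
    "face":       [1.0, 1.8, 0.3],  # facial expression favors sign_b
    "skeleton":   [1.2, 2.0, 0.4],  # hand-to-body position favors sign_b
}

body_only = VOCAB[argmax(softmax(scores["whole_body"]))]
fused = fuse_streams(scores)
prediction = VOCAB[argmax(fused)]
print("whole-body stream alone:", body_only)
print("all streams fused:      ", prediction)
```

In this toy case the coarse whole-body stream narrowly prefers one sign, while the hand, face, and skeleton streams agree on another, so the fused prediction changes. That mirrors the article's point that local detail can resolve signs that look alike in overall motion.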

By incorporating these additional layers of data, the team’s method has achieved a significant gain in recognition accuracy. According to Inoue, the new approach improves the accuracy of word-level sign language recognition by 10–15% compared to conventional methods. This boost is considerable in machine learning and AI, where even modest improvements can make a big difference in real-world applications.

“By integrating facial expression data and full-body skeletal information, our method can more effectively interpret the nuances and subtleties of individual signs,” Professor Inoue explained. “The increase in accuracy is crucial because it reduces misunderstandings and provides a clearer translation of the message, which is important for communication in a diverse range of social contexts.”

Future Applications and Global Impact

The implications of this work extend beyond academic curiosity. Improved accuracy in sign language recognition could enable more effective communication in educational settings, workplaces, public services, and daily interactions. As the AI system is further developed and refined, it could be applied to different sign languages, from American Sign Language (ASL) to Japanese Sign Language (JSL), British Sign Language (BSL), and more. As Inoue highlights, this advance could open the way to smoother communication between hearing people and deaf or hard-of-hearing people in different parts of the world. With the adoption of this technology, people who use spoken languages could communicate more easily with deaf and hard-of-hearing signers, breaking down barriers that have existed for years.

One of the anticipated benefits of this breakthrough is improving access for the hearing- and speech-impaired population in various countries. Translation systems powered by AI could be embedded in daily tools—such as smartphones or public kiosks—providing real-time translation services for sign language users wherever they go. This has the potential to create a more inclusive environment for individuals with hearing impairments, offering them an easier way to interact with the larger society, whether through customer service or through communication in public spaces.

The advances could also lead to better educational tools and content that cater specifically to sign language users, enhancing literacy and academic performance for students who use sign languages as their primary mode of communication. Furthermore, the implementation of more accurate recognition models might ease the process of automated translation in official settings like medical facilities, government services, and other domains that are critical for people with hearing impairments.

Another avenue of progress could include international collaboration between deaf communities from different cultural backgrounds. The ongoing development of AI-based sign language systems could encourage widespread adoption across diverse countries, contributing to greater global communication for the deaf community.

Conclusion

The research led by Professor Inoue and his colleagues represents a significant milestone in the evolving field of sign language recognition. By integrating multiple types of data, such as facial expressions and hand-body relationships, the system is poised to provide higher accuracy levels and overcome many of the limitations faced by previous models. This achievement not only improves the effectiveness of technology-based translation for the deaf and hard-of-hearing communities but also highlights the potential of AI to bridge communication gaps globally.

As AI continues to advance, the goal is clear: to empower individuals, both with and without hearing impairments, to communicate more efficiently and inclusively. With the progress demonstrated by Inoue’s research, word-level sign language recognition could become a fundamental tool for the future, helping to pave the way for more accessible, inclusive communication worldwide. The hope is that the methods developed in this study can expand and evolve, eventually creating a world where differences between sign languages and spoken languages are no longer a barrier to connection and understanding.

Reference: Mizuki Maruyama et al., Word-Level Sign Language Recognition With Multi-Stream Neural Networks Focusing on Local Regions and Skeletal Information, IEEE Access (2024). DOI: 10.1109/ACCESS.2024.3494878