The human brain is a remarkable organ—capable of abstract reasoning, imagination, memory, and creativity. It has built civilizations, composed symphonies, and sent spacecraft to distant planets. Yet despite its power, the brain is far from perfect. Beneath its brilliance lies a deeply flawed decision-maker that constantly takes shortcuts, misinterprets information, and makes systematic errors. These errors are not random; they are predictable and deeply ingrained in how our minds evolved to process information. Scientists call them cognitive biases—mental distortions that shape our perception, influence our judgments, and guide our behavior in ways we rarely recognize.

Understanding cognitive biases is essential not only for psychology and neuroscience but for everyday life. Whether we are making financial decisions, forming political opinions, or judging other people, our biases silently steer our thoughts. Recognizing them does not make us immune, but it gives us a crucial advantage: the ability to think more clearly and make better choices.

The Origins of Cognitive Biases

To understand why our brains are biased, we must go back to the roots of human evolution. The human mind did not evolve to be an objective truth-finding machine; it evolved to help us survive and reproduce in a complex and often dangerous environment. Our ancestors faced countless decisions that required rapid judgments—whether to flee or fight, whom to trust, what to eat, and how to interpret the behavior of others.

The brain developed heuristics, or mental shortcuts, to handle this overwhelming flow of information. Heuristics are efficient—they allow us to make quick decisions without extensive analysis. For example, if a rustle in the bushes once signaled a predator, reacting instantly rather than pausing to evaluate could mean the difference between life and death. However, these shortcuts, while useful for survival, can misfire in the modern world where threats are psychological, social, or abstract rather than physical.

The term cognitive bias was introduced by psychologists Amos Tversky and Daniel Kahneman in the 1970s. Their research showed that people systematically deviate from rationality when making judgments under uncertainty. Instead of weighing evidence logically, we rely on intuitive patterns that are often flawed. These biases influence how we interpret reality, recall memories, and make predictions.

The Brain’s Shortcuts and the Illusion of Rationality

The brain’s primary goal is not accuracy but efficiency. Every second, it processes an enormous amount of sensory information—visual images, sounds, smells, and tactile sensations. To manage this flood of data, the brain simplifies. It filters out irrelevant details, fills in gaps, and relies on patterns from past experiences to make sense of the present. This process is usually effective but occasionally deceptive.

Cognitive biases arise when these simplifications distort reality. We think we are rational, weighing evidence and drawing conclusions based on logic, but our perceptions are shaped by subconscious preferences and emotional influences. Even highly intelligent people fall victim to these mental illusions because they stem from the fundamental structure of the mind, not from ignorance or lack of intelligence.

The Confirmation Trap

One of the most pervasive biases is confirmation bias—the tendency to seek out, interpret, and remember information that confirms our existing beliefs while ignoring evidence that contradicts them. This bias is deeply intertwined with how we construct our sense of identity and worldview.

When faced with conflicting information, the brain experiences cognitive dissonance—psychological discomfort caused by inconsistency between beliefs and evidence. To reduce this discomfort, the mind selectively interprets data to support what it already believes. For example, if someone believes a particular diet is healthy, they are more likely to notice articles and testimonials that support that view and dismiss scientific studies that contradict it.

Confirmation bias explains why political debates often entrench opinions rather than change them. In an age of information overload, people can easily find sources that align with their views, reinforcing their biases and creating echo chambers. This bias not only distorts personal reasoning but also fuels social polarization, misinformation, and resistance to scientific evidence.

The Availability Illusion

Another powerful distortion is the availability heuristic, a mental shortcut that relies on immediate examples that come to mind. If something is easy to recall, we assume it must be common or likely to occur. This bias is why people often overestimate the probability of dramatic events like airplane crashes or shark attacks—they are vivid and heavily covered in the media, so they dominate our memory.

The availability illusion affects how we assess risk, make plans, and evaluate danger. After seeing news reports of natural disasters or violent crimes, people may feel the world is more dangerous than it statistically is. Conversely, because mundane threats like heart disease or poor diet do not receive the same attention, we underestimate their risks.

This bias illustrates how emotion and memory interact in decision-making. Events that evoke strong emotions such as fear, anger, or surprise are stored more vividly in memory, which makes them easier to recall and therefore makes them seem more probable. Our minds equate ease of recall with likelihood, blurring the line between what is memorable and what is real.

Anchoring and the Power of First Impressions

Human judgment is often anchored by the first piece of information encountered. This anchoring bias influences decisions in subtle but powerful ways. For example, if a car dealer first mentions a high price, all subsequent negotiations are unconsciously measured against that number, even if it is arbitrary. Similarly, when estimating the population of a city, the first figure that comes to mind acts as a reference point that shapes the final estimate.

Anchoring affects not only economic decisions but also social perception. The first impression of a person—based on appearance, voice, or behavior—creates an initial anchor that colors all subsequent evaluations. Once formed, these impressions are hard to change, even when contradictory evidence emerges.

Experiments show that even completely irrelevant numbers can bias judgment. In a classic study by Tversky and Kahneman, a wheel of fortune was spun in front of participants before they estimated the percentage of African countries in the United Nations. Those shown a higher number gave higher estimates, even though the number had no logical connection to the question. This demonstrates how easily the mind latches onto arbitrary anchors, revealing our vulnerability to suggestion.

The Halo Effect and the Shadows of First Impressions

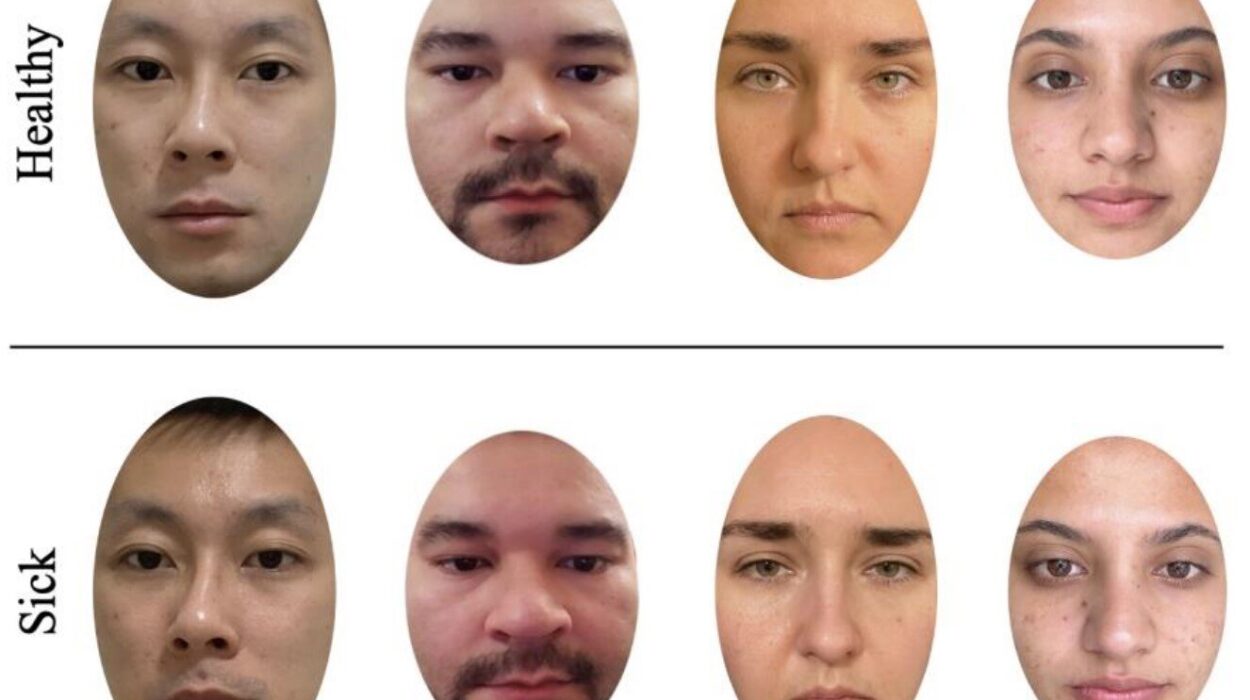

The halo effect describes the tendency to let one positive trait of a person or thing influence our overall perception of them. If someone is physically attractive, we are more likely to assume they are intelligent, kind, and competent. Conversely, the horn effect occurs when one negative trait leads us to view someone unfavorably in general.

These biases are deeply rooted in social cognition. Our ancestors needed to make quick judgments about others’ intentions—friend or foe, trustworthy or dangerous. The halo effect simplifies this process by allowing a single attribute to stand in for a complex evaluation. However, in the modern world, it can lead to unjust assumptions and poor decision-making.

Employers, teachers, and even juries are often influenced by these subconscious biases. Attractive defendants tend to receive lighter sentences, charismatic speakers tend to be perceived as more credible, and students who make a good first impression may be graded more generously. The halo effect reminds us how emotion and appearance can distort our perception of truth.

The Overconfidence Illusion

Perhaps the most ironic cognitive bias is overconfidence: our tendency to overestimate our knowledge, abilities, and control over outcomes. Most people believe they are above-average drivers, more intelligent than average, and less likely than others to make mistakes. Statistically, this cannot be true of everyone, since at most half of any group can rank above its median, but psychologically, it feels true.
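The statistical point can be checked with a few lines of Python. This is only an illustrative sketch: the skill scores are simulated, and the helper name `share_above` is hypothetical rather than taken from any study. It shows that no more than half of a group can exceed the group's median, while a skewed group can still have a majority above its mean.

```python
import random
import statistics

def share_above(scores, benchmark):
    """Fraction of scores strictly greater than the benchmark value."""
    return sum(1 for s in scores if s > benchmark) / len(scores)

# Hypothetical skill scores for 1,000 drivers (simulated, not real data).
rng = random.Random(0)
skill = [rng.gauss(50, 10) for _ in range(1000)]

# At most half of any group can sit strictly above its own median.
print(share_above(skill, statistics.median(skill)))

# The mean is different: one very poor driver drags it down, so most of
# this small made-up group ends up "above average".
skewed = [0, 10, 10, 10, 10]
print(share_above(skewed, statistics.mean(skewed)))  # 0.8
```

The second case is why the claim is best read in terms of the median: surveys in which a large majority rate themselves above the typical driver cannot all be accurate.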

Overconfidence arises because our brains reward certainty. Confidence signals competence in social settings and has evolutionary benefits—it helps leaders inspire followers and individuals take risks that can lead to success. However, in modern contexts, it often leads to costly errors. Investors overestimate their ability to predict markets, doctors sometimes overdiagnose due to certainty in their expertise, and individuals make poor life decisions based on inflated self-assurance.

The overconfidence effect is closely linked to the illusion of control, where people believe they can influence outcomes that are purely random. Gamblers, for example, feel they can “beat the odds” with skill, and managers may attribute success entirely to their strategies rather than to luck or external conditions. These illusions can be comforting but dangerously misleading.

The Dunning–Kruger Effect

A particularly striking manifestation of overconfidence is the Dunning–Kruger effect, named after psychologists David Dunning and Justin Kruger. Their research showed that people with low ability in a particular area tend to overestimate their competence because they lack the knowledge to recognize their own mistakes. Meanwhile, experts, aware of the complexity of their field, often underestimate their relative skill.

This effect explains why unskilled individuals may appear more confident than true experts. It also highlights a paradox of knowledge: understanding more makes us aware of how much we do not know. The Dunning–Kruger effect contributes to misinformation, as confident but uninformed voices often dominate public discussions, while genuinely knowledgeable experts may sound less certain.

The Status Quo Bias and Resistance to Change

Humans are creatures of habit. The status quo bias refers to our preference for things to remain the same rather than change, even when change might bring improvement. This bias arises from the brain’s desire for stability and predictability. Change introduces uncertainty, which the mind often interprets as risk.

People tend to stick with default options—be it retirement plans, software settings, or social norms—simply because doing nothing feels safer. This inertia is exploited in marketing, politics, and policy design. For instance, countries that automatically enroll citizens in organ donation programs have significantly higher participation rates than those requiring individuals to opt in.

Status quo bias also explains why societies resist social and technological shifts. Innovations often face skepticism not because they are flawed, but because they disrupt familiar patterns. The brain’s preference for cognitive ease—the comfort of the known—creates resistance even to beneficial change.

The Sunk Cost Fallacy and the Fear of Loss

Another cognitive trap is the sunk cost fallacy, the tendency to continue investing time, money, or effort into something simply because we have already invested in it. This bias violates rational decision-making principles, which dictate that past costs should not influence future choices. Yet emotionally, abandoning an investment feels like admitting failure.
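The principle that past costs should not influence future choices can be made concrete with a small calculation. The following is a minimal sketch with made-up figures and hypothetical function names (`net_outcome`, `should_continue`). It compares the total outcome of continuing against stopping and shows that the amount already spent cancels out of the comparison.

```python
def net_outcome(sunk_cost, future_cost, future_benefit, keep_going):
    """Total result of a project, counting money already spent."""
    total = -sunk_cost  # spent either way, whether we continue or stop
    if keep_going:
        total += future_benefit - future_cost
    return total

def should_continue(sunk_cost, future_cost, future_benefit):
    """Continue only if doing so leaves us better off than stopping now."""
    go = net_outcome(sunk_cost, future_cost, future_benefit, keep_going=True)
    stop = net_outcome(sunk_cost, future_cost, future_benefit, keep_going=False)
    return go > stop

# Illustrative project: 80,000 already spent, 50,000 more needed,
# expected payoff on completion 30,000.
print(should_continue(80_000, 50_000, 30_000))  # False

# The answer is the same whatever was spent in the past.
print(should_continue(0, 50_000, 30_000))       # False
```

Because the sunk cost appears in both branches, the decision reduces to whether the remaining benefit exceeds the remaining cost.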

People stay in unfulfilling jobs, failing projects, or unhealthy relationships because they “don’t want their effort to go to waste.” Businesses pour resources into failing ventures for the same reason. The sunk cost fallacy is driven by loss aversion: the finding that a loss typically feels about twice as painful as an equivalent gain feels pleasurable.

Loss aversion was first described by Kahneman and Tversky in their prospect theory, which showed that people evaluate outcomes not by absolute value but by potential gains and losses relative to a reference point. This asymmetry explains why people prefer avoiding losses over acquiring gains, leading to overly cautious or irrational behavior.
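The asymmetry between gains and losses can be sketched as a value function measured from a reference point. The code below is an illustration, not a definitive model: the function name `prospect_value` is hypothetical, and the default parameters (curvature 0.88 and a loss-aversion coefficient of 2.25) are the estimates Tversky and Kahneman reported in their 1992 follow-up work, not figures stated in this article.

```python
def prospect_value(x, alpha=0.88, beta=0.88, loss_aversion=2.25):
    """Subjective value of an outcome x relative to the reference point (x = 0).

    Gains are valued as x**alpha. Losses are valued as
    -loss_aversion * (-x)**beta, so they loom larger than equal gains.
    Parameter defaults are assumed estimates, not universal constants.
    """
    if x >= 0:
        return x ** alpha
    return -loss_aversion * ((-x) ** beta)

gain = prospect_value(100)    # felt value of winning 100
loss = prospect_value(-100)   # felt value of losing 100

# With equal curvature for gains and losses, the ratio equals the
# loss-aversion coefficient: the loss weighs a bit more than twice the gain.
print(abs(loss) / gain)
```

Under these assumed parameters, a 50/50 bet to win or lose the same amount has negative subjective value, which is consistent with people turning down such bets.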

The Hindsight Illusion

After an event occurs, people often believe they “knew it all along.” This hindsight bias makes outcomes seem more predictable than they actually were. Once we know the result of an election, a medical diagnosis, or a market crash, it becomes difficult to recall our previous uncertainty.

This bias distorts our memory and understanding of causality. It makes us overestimate our foresight and underestimate the role of randomness. In research, medicine, and law, hindsight bias can have serious consequences, leading to unfair blame or overconfidence in predictive abilities.

Our brains reconstruct memories, not replay them like recordings. This reconstruction is influenced by current knowledge and emotions, so the past is constantly being rewritten in light of the present. Recognizing hindsight bias is crucial for learning from mistakes without distorting the lessons they teach.

The Group Mind: Social and Cultural Biases

Cognitive biases are not limited to individuals—they operate on social and cultural levels as well. Groupthink, for instance, occurs when the desire for harmony in a group leads members to suppress dissenting opinions, resulting in poor collective decisions. Historical events like corporate scandals or political blunders often reveal how group dynamics can override rational analysis.

Another social bias is the in-group/out-group effect, the tendency to favor those who belong to our own group while distrusting outsiders. This bias has evolutionary roots in tribal survival but contributes to modern problems such as prejudice, discrimination, and polarization. Our brains are wired to categorize quickly, creating mental divisions that can distort judgment and empathy.

Cultural factors also shape biases. What seems rational in one culture may appear biased in another because beliefs, values, and social norms influence perception. For example, collectivist societies may exhibit stronger conformity biases, while individualist cultures may emphasize self-serving interpretations of success.

Memory Distortions and the Constructed Self

Memory, one of the most treasured human faculties, is also one of the most unreliable. We like to think of memory as a faithful record of past events, but in reality, it is reconstructive and malleable. Each time we recall an event, we rebuild it from fragments, often altering details without realizing it.

The false memory effect demonstrates how easily memory can be manipulated. Studies by psychologist Elizabeth Loftus showed that people can be led to remember events that never occurred through suggestive questioning. This phenomenon has major implications for eyewitness testimony and legal reliability.

Biases also shape how we remember ourselves. The self-serving bias leads us to attribute successes to our abilities and failures to external factors, preserving self-esteem. The rosy retrospection bias makes us remember past experiences as more positive than they actually were. Together, these distortions create a narrative of the self that is coherent but not necessarily accurate.

Why Biases Persist

Cognitive biases persist because they serve deep psychological and emotional needs. They simplify complexity, protect our self-image, and provide comfort in an uncertain world. From an evolutionary standpoint, they often enhance survival and social cohesion, even at the expense of logical accuracy.

Moreover, biases are reinforced by social structures and technology. Algorithms on social media amplify confirmation bias by showing us content that aligns with our interests and beliefs. Marketing exploits anchoring and scarcity biases to influence purchasing behavior. Even scientific research is not immune: researchers may unintentionally favor results that confirm their hypotheses, and journals are more likely to publish positive findings than null results, a phenomenon known as publication bias.

Overcoming Cognitive Biases

Completely eliminating cognitive biases is impossible—they are built into our neural architecture. However, awareness and critical thinking can reduce their impact. The first step is recognizing that we are all biased, regardless of intelligence or education. Actively seeking opposing viewpoints, questioning initial impressions, and using data-driven decision-making can counteract intuitive errors.

Training in statistical reasoning, mindfulness, and slow, reflective thinking helps people override impulsive judgments. Daniel Kahneman described this contrast as the difference between System 1 (fast, intuitive) and System 2 (slow, analytical) thinking. By engaging the slower system more often—especially in important decisions—we can mitigate the influence of biases.

Institutions can also design environments that reduce bias. In business and policy-making, using blind evaluations, peer review, and diversity of perspectives helps ensure fairer decisions. On a personal level, humility, curiosity, and skepticism are powerful antidotes to the brain’s illusions.

Conclusion

Your brain is both your greatest ally and your most subtle deceiver. Cognitive biases are the invisible threads weaving through your thoughts, shaping how you perceive reality, remember the past, and imagine the future. They are a reminder that the mind is not a purely rational machine but an emotional, adaptive system shaped by evolution and experience.

Understanding these biases is not about condemning human thought—it is about mastering it. By recognizing how the brain tricks us, we can learn to question our assumptions, think more critically, and see the world with greater clarity. The challenge is not to eliminate bias but to understand its patterns, so that we can guide our thinking rather than be guided by illusion.

In the end, the study of cognitive biases reveals something deeply human: that the pursuit of truth begins with the courage to doubt our own minds.