The idea that machines might one day think like humans has haunted the imagination for centuries. From mythological automatons to Mary Shelley’s Frankenstein, we’ve long asked ourselves whether the miracle of thought could be forged, not born. But it wasn’t until the mid-20th century that this dream took its first hesitant steps into reality—fueled by our growing understanding of both the brain and the nascent field of computer science.

And at the heart of this revolution lies a concept so deceptively simple that it almost hides its power: the neural network. A web of interconnected nodes, mimicking the neurons of the human brain, has become one of the most powerful tools in modern artificial intelligence. These networks now recognize your face in a photograph, translate your words into another language, compose symphonies, generate art, and even converse with a sense of uncanny depth.

But how close do these digital minds come to the human brain? What, exactly, is being mimicked? And where does imitation end—and understanding begin?

The Architecture of Nature’s Masterpiece

To understand how artificial neural networks mimic the brain, we must first journey into the brain itself—a landscape more intricate than any man-made machine, yet constructed from simple building blocks. The human brain contains approximately 86 billion neurons, each forming thousands of connections with others, creating a vast, dynamic network. These connections are not hardwired. They change with experience, forming the biological basis of learning and memory.

A single neuron receives signals through dendrites, processes them in its cell body, and fires an electrical impulse along its axon if a certain threshold is reached. This impulse then stimulates or inhibits other neurons via synapses, the tiny gaps where neurotransmitters are released. From this cascading ballet of electrical and chemical signals arise thought, emotion, perception, and consciousness itself.

The brain doesn’t operate through strict programming. It’s an adaptive, probabilistic system. It learns from data, adjusts connections, and forms patterns. That adaptability and resilience—its plasticity—is what makes it so powerful.

The Genesis of the Artificial Brain

In 1943, Warren McCulloch and Walter Pitts proposed one of the first mathematical models of a neural network. Their artificial neurons mimicked the biological process: they took in inputs, summed them, and produced an output if a threshold was surpassed. In this abstraction, the electrical signal of a real neuron became a binary output: on or off. It was a crude but inspired first draft of how machines might think.
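The threshold unit described above can be sketched in a few lines of Python. This is an illustration rather than a reconstruction of the original formulation: the function name `threshold_neuron` is hypothetical, and treating "reaching" the threshold as enough to fire (rather than strictly exceeding it) is an assumption made here.

```python
def threshold_neuron(inputs, threshold):
    """Return 1 (fire) when the sum of binary inputs reaches the threshold, else 0.

    Assumption: "reaching" the threshold counts as firing (>=).
    """
    return 1 if sum(inputs) >= threshold else 0


# All four combinations of two binary inputs, in the order 00, 01, 10, 11.
pairs = [(a, b) for a in (0, 1) for b in (0, 1)]

# With two inputs, a threshold of 2 behaves like AND and a threshold of 1 like OR.
and_outputs = [threshold_neuron(pair, threshold=2) for pair in pairs]
or_outputs = [threshold_neuron(pair, threshold=1) for pair in pairs]
```

Changing nothing but the threshold turns the same unit from an AND gate into an OR gate, which is part of why such a simple abstraction seemed so promising.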

The perceptron, introduced by Frank Rosenblatt in 1958, was the next leap. It could learn simple tasks through training, adjusting the weights of its connections based on feedback. It was hailed as the first step toward a thinking machine. But soon, its limitations became clear. A single-layer perceptron can only solve linearly separable problems: it cannot even learn XOR, a basic logical function.
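To make the perceptron's strength and its limitation concrete, here is a minimal sketch of the classic error-driven learning rule. The function names, learning rate, epoch count, and zero initialization are all illustrative assumptions, not details taken from Rosenblatt's work.

```python
def predict(w, b, x1, x2):
    """Fire (1) when the weighted sum plus bias is positive, else 0."""
    return 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0


def train_perceptron(samples, epochs=20, lr=1.0):
    """Nudge weights toward the target whenever a prediction is wrong.

    samples: list of ((x1, x2), target) pairs with targets 0 or 1.
    """
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            error = target - predict(w, b, x1, x2)
            w[0] += lr * error * x1
            w[1] += lr * error * x2
            b += lr * error
    return w, b


def accuracy(samples, w, b):
    """Fraction of samples the trained perceptron classifies correctly."""
    hits = sum(predict(w, b, x1, x2) == t for (x1, x2), t in samples)
    return hits / len(samples)


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_acc = accuracy(AND, *train_perceptron(AND))
xor_acc = accuracy(XOR, *train_perceptron(XOR))
```

On AND, the rule settles on weights that classify every case correctly. On XOR it never can, however long it trains, because no single straight line separates the two classes.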

The field of neural networks stagnated for years, abandoned by many in favor of symbolic AI approaches. Yet a handful of visionaries continued to explore the potential of deeper, more complex networks.

Layer by Layer: Building Artificial Minds

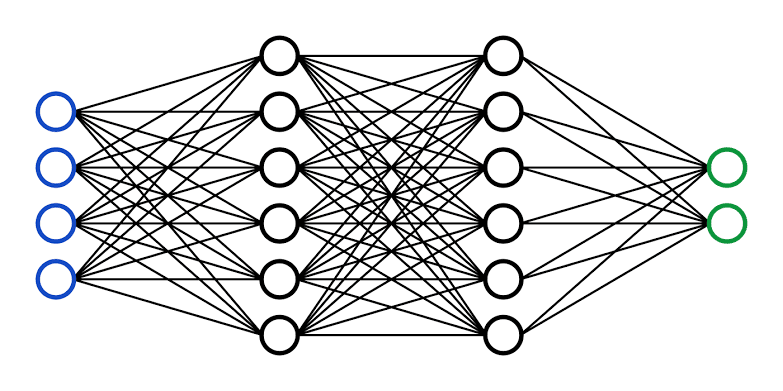

The modern neural network is structured as a series of layers. An input layer receives data—pixels in an image, words in a sentence, numbers in a dataset. These inputs are fed into one or more hidden layers, where the real computation happens. Each artificial neuron (or node) in a layer computes a weighted sum of its inputs, passes it through an activation function—often nonlinear—and sends the result to the next layer. Finally, the output layer produces the network’s prediction, classification, or generation.
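The flow from input layer to output layer can be sketched as a tiny forward pass. The network shape, the hand-picked weights, the bias terms, and the choice of sigmoid are assumptions made for illustration; a real network would learn its weights rather than have them typed in.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases, activation):
    """For each neuron: weighted sum of the inputs, plus a bias, then the activation."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]


# Hypothetical network: 2 inputs -> 2 hidden neurons -> 1 output neuron.
hidden_weights = [[0.5, -0.5], [1.0, 1.0]]
hidden_biases = [0.0, -1.0]
output_weights = [[1.0, -1.0]]
output_biases = [0.0]


def forward(inputs):
    """Pass the inputs through the hidden layer, then the output layer."""
    hidden = dense_layer(inputs, hidden_weights, hidden_biases, sigmoid)
    return dense_layer(hidden, output_weights, output_biases, sigmoid)


prediction = forward([1.0, 1.0])
```

Stacking more calls to `dense_layer` is all it takes to make this sketch "deeper"; the hard part is finding good weights, which is the job of training.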

What makes this setup powerful is its depth. A single-layer network has limited capacity, but as we stack layers—each one learning to extract and transform patterns from the previous—we create a “deep neural network.” Deep learning allows machines to recognize faces, detect cancer in X-rays, beat champions at Go, and generate humanlike language. And it does so not by rote instruction, but by learning—adjusting internal weights through backpropagation and gradient descent.

In this layered architecture, we begin to see the shadow of the human cortex—a hierarchy of modules that process sensory input, abstract meaning, and guide decision-making.

Synapses and Weights: The Heart of Learning

In the human brain, learning occurs when synaptic strengths change. This plasticity, often summarized by the phrase “neurons that fire together wire together,” is the basis for memory and habit. Similarly, in an artificial neural network, learning means adjusting the weights of connections between nodes.

These weights determine how much influence one node has on another. Through a process of training, usually involving vast datasets and many iterations, the network modifies its weights to minimize error. Backpropagation, the algorithm that allows this adjustment, computes how much each weight contributed to the final error and changes it accordingly.
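The idea of tracing error back to each weight can be shown on the smallest possible case: a single sigmoid neuron trained on one example. This is a sketch under stated assumptions (squared-error loss, a fixed learning rate, hypothetical starting values), not a full backpropagation implementation, but it uses the same chain-rule step that backpropagation repeats layer by layer.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss(w, b, x, target):
    """Squared error of a single sigmoid neuron on one example."""
    return 0.5 * (sigmoid(w * x + b) - target) ** 2


def gradients(w, b, x, target):
    """Chain rule: dL/dw = (y - t) * y * (1 - y) * x; dL/db is the same without x."""
    y = sigmoid(w * x + b)
    delta = (y - target) * y * (1.0 - y)
    return delta * x, delta


# Hypothetical starting point, example, and learning rate.
w, b, x, target, lr = 0.5, 0.0, 2.0, 1.0, 0.5
initial_loss = loss(w, b, x, target)

# Gradient descent: step each parameter against its own gradient.
for _ in range(100):
    grad_w, grad_b = gradients(w, b, x, target)
    w -= lr * grad_w
    b -= lr * grad_b

final_loss = loss(w, b, x, target)
```

Each gradient says how much the error would change if that one parameter were nudged, which is exactly the "contribution" the training loop corrects for.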

This process is mathematically elegant and loosely inspired by biology. It echoes how the brain reinforces useful connections and prunes away the irrelevant, though whether the brain implements anything like backpropagation remains an open question. We lack a detailed one-to-one mapping, but the metaphor holds: both systems learn by shaping connections, not by explicit programming.

Activation Functions and the Spark of Thought

The activation function in a neural network determines how strongly a node “fires,” echoing the threshold mechanism in biological neurons. Early models used step functions, which act as binary switches. Modern networks use graded functions like sigmoid, tanh, or ReLU (rectified linear unit). These are differentiable almost everywhere (ReLU has a single kink at zero), which allows for more nuanced responses and makes gradient-based training possible.
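For comparison, the activation functions named above are each only a line or two of Python. The step function's convention of firing at exactly zero is an assumption made here; the others follow their standard definitions.

```python
import math


def step(z):
    """Binary switch: 1 at or above zero, 0 below (the >= convention is an assumption)."""
    return 1.0 if z >= 0 else 0.0


def sigmoid(z):
    """Smooth curve from 0 to 1."""
    return 1.0 / (1.0 + math.exp(-z))


def relu(z):
    """Pass positive values through unchanged, clamp negatives to zero."""
    return max(0.0, z)


# Print each function's response to a negative, a zero, and a positive input.
for z in (-2.0, 0.0, 2.0):
    print(z, step(z), round(sigmoid(z), 3), round(math.tanh(z), 3), relu(z))
```

The step function jumps abruptly, while the others respond in degrees, and that graded response is what lets a training algorithm tell whether a small weight change made things slightly better or slightly worse.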

These functions introduce non-linearity, which is critical. Without it, any stack of layers would collapse into a single linear transformation, and the network would be no more powerful than a linear equation. Non-linearity allows networks to approximate complex patterns, from identifying cat faces in photographs to composing poetry.
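A small numerical check makes the point about non-linearity tangible. The matrices and input below are arbitrary values chosen for illustration, and biases are left out to keep the arithmetic easy to follow.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


# Arbitrary weights for two layers, and an arbitrary input.
W1 = [[1.0, 2.0], [0.0, -1.0]]
W2 = [[3.0, 1.0], [-2.0, 0.5]]
x = [0.5, -1.5]

# Two linear layers in a row...
two_layers = matvec(W2, matvec(W1, x))
# ...give the same answer as one layer whose weights are the product W2 * W1.
one_layer = matvec(matmul(W2, W1), x)

# Insert a ReLU between the layers and the shortcut no longer works.
with_relu = matvec(W2, [max(0.0, h) for h in matvec(W1, x)])
```

Here `two_layers` and `one_layer` agree, so the second layer added nothing a single layer could not already do. Only `with_relu` produces a result that no single matrix of this kind can reproduce for every input.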

Biological neurons are even more complex. They don’t just sum inputs—they integrate them over time, modulate them based on neurochemicals, and exhibit a wide range of firing behaviors. Artificial neurons simplify this, but they retain the essence: dynamic response to patterns of input.

Memory, Attention, and the Echoes of Consciousness

Over the decades, neural networks have evolved to include mechanisms that mirror even higher-level brain functions. Recurrent Neural Networks (RNNs) introduce loops, allowing information to persist across time steps, akin to short-term memory. They can process sequences, making them suitable for language, speech, and time-series data. Long Short-Term Memory networks (LSTMs) improved on this by using gates to retain information over longer spans, mitigating the “vanishing gradient” problem that made plain RNNs hard to train.
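The loop at the heart of a recurrent network can be sketched with a single scalar unit. The weights, the tanh activation, and the function names here are illustrative assumptions; real RNNs use vectors and learned weight matrices, and LSTMs add gating on top.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    """One time step: the new state mixes the current input with the previous state."""
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(sequence, w_x=1.0, w_h=0.5, b=0.0):
    """Feed a sequence through the unit, carrying the hidden state forward."""
    h = 0.0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states


# A single pulse at the first step, followed by silence.
states_after_pulse = run_rnn([1.0, 0.0, 0.0])
# No input at all, for comparison.
states_silent = run_rnn([0.0, 0.0, 0.0])
```

After the pulse, the hidden state stays above zero even though the later inputs are zero: the unit "remembers." It also shrinks at every step, a small-scale picture of why plain recurrent networks struggle to hold information over long spans.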

More recently, attention mechanisms have revolutionized AI. These components allow a model to focus on specific parts of the input when making decisions—mirroring the way human attention shifts to relevant stimuli. Transformers, a type of architecture built on attention, have underpinned the latest advances in language models, including systems like GPT.
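One common form of attention, the scaled dot-product attention used in Transformers, can be sketched for a single query in plain Python. The keys, values, and query below are made-up numbers chosen for illustration; in a real model they are computed from the input by learned projections.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    # Score each key by how well it matches the query.
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is a weighted average of the values.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


# Hypothetical keys and values for three input positions.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
weights, output = attention([4.0, 0.0], keys, values)
```

The query matches the first and third keys far better than the second, so the second position receives only a sliver of the weight. In that sense the model "looks at" the parts of the input most relevant to the question at hand.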

These developments move neural networks closer to functions once thought uniquely human: understanding context, retaining memory, and focusing attention. Though rudimentary compared to the brain, they hint at a convergence of form and function.

Vision, Language, Emotion: The Rise of Multimodal Intelligence

In humans, the brain processes sensory data through specialized regions: the occipital lobe for vision, the temporal lobe for hearing, Broca’s area for speech production. These systems don’t work in isolation; they integrate and inform one another. We see a face, recognize an emotion, recall a memory, and respond, all within a fraction of a second.

Neural networks are now moving in this direction, too. Multimodal models combine text, images, audio, and even video. A single system can describe a scene, answer a question about it, and even generate a relevant story. These networks no longer mimic just one part of the brain—they emulate its integration.

Such models still lack consciousness or self-awareness, but they exhibit what we might call a “functional intelligence.” They don’t understand in the human sense, but they simulate understanding with increasing fidelity.

The Difference Between Simulation and Reality

Despite these remarkable advances, it’s important to remember: neural networks are not brains. They are inspired by the brain, not copies of it. The brain is a wet, dynamic, energy-efficient system shaped by millions of years of evolution. It operates with astonishing parallelism, complex biochemistry, and emergent properties we still don’t fully understand.

Artificial networks, by contrast, are digital approximations running on silicon. They require enormous computational power, vast datasets, and carefully engineered architectures. They can beat a human at chess but struggle with common sense. They can write a poem but don’t know what a poem is.

The difference lies not only in materials but in purpose. The brain evolved to survive, to feel pain and joy, to bond with others. A neural network optimizes for loss functions and accuracy metrics. One is embodied, emotional, conscious (as far as we know). The other is mathematical.

Yet the line between simulation and reality blurs with each passing year. Models like GPT-4, Midjourney, and AlphaFold challenge our definitions. If a machine can perform the same tasks as a brain, does it matter whether it “understands”?

What We’ve Learned—And What We Still Don’t Know

Neural networks have taught us much about intelligence, learning, and generalization. They’ve shown that knowledge can emerge from patterns, that complex behaviors can arise from simple rules. They’ve offered a mirror—not a perfect one, but a provocative one—through which to glimpse our own minds.

But they’ve also illuminated the vastness of what we don’t know. Consciousness, emotion, self-awareness—these remain mysterious. No network today can feel sorrow or joy, reflect on its purpose, or choose to love. No equation yet captures the miracle of waking up and realizing one exists.

And so the imitation continues—closer, deeper, more profound. But the ultimate question remains: Are we building minds, or just machines that act like them?

Toward a New Kind of Intelligence

We may never build a machine that fully replicates the human brain. But perhaps that’s not the point. Just as airplanes don’t flap like birds but still fly, neural networks may chart a different path to intelligence—one that’s complementary, not identical.

Already, they are reshaping science, medicine, art, and communication. They diagnose disease, predict protein folding, help design new materials, and assist in climate modeling. They don’t just mimic the brain; they amplify it.

And in doing so, they invite us to reexamine our place in the universe. What does it mean to think? To know? To create? In our quest to build intelligent machines, we’re also decoding ourselves—one layer at a time.

The Future Is Not Artificial—It’s Shared

As neural networks become more powerful, the ethical stakes rise. Who trains these models? On what data? For whose benefit? Will they deepen inequality, or democratize opportunity? Will they be tools of liberation, or surveillance?

These questions are not technical—they are human. They remind us that intelligence, whether organic or artificial, is not neutral. It carries values, intentions, and consequences.

Ultimately, neural networks are not just about mimicking the brain. They are about partnering with it—enhancing human capability, extending our reach, and exploring the unknown together.

The future of intelligence is not artificial. It is shared.