In the pitch-black night, as we reach for a light switch, bats take flight without ever needing one. These nocturnal navigators glide, dart, and dance through the air, detecting prey and avoiding obstacles with pinpoint precision—all guided by sound alone. But it’s not just the echoes of the world around them that matter; bats, like many animals, must also make sense of the sounds made by others of their kind. In the wild, survival hinges not just on hearing, but on understanding. And understanding begins with categorization.

The ability to quickly distinguish between different types of sounds—especially vocalizations—is crucial to animal survival. This auditory sorting process, known as categorical perception, allows the brain to transform a continuous stream of sounds into neatly packaged, meaningful categories: threat calls, mating signals, navigational chirps, or cries for help. Despite decades of research into how animals manage this sonic miracle, scientists have only scratched the surface. Until now.

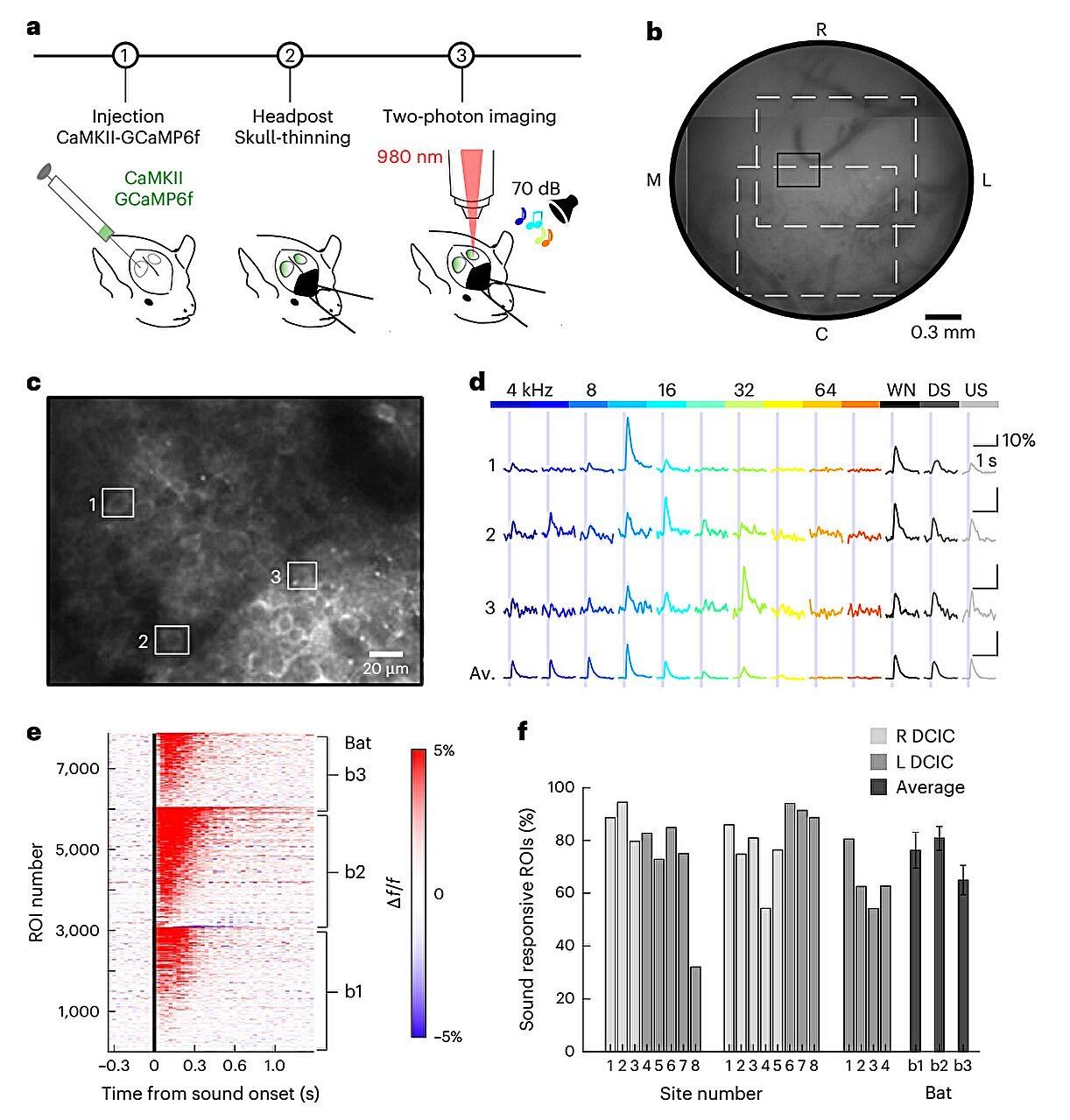

A groundbreaking study conducted by researchers at Johns Hopkins University, recently published in Nature Neuroscience, offers fresh insights that might rewrite what we know about how animals interpret sound. By peering deep into the brains of big brown bats (Eptesicus fuscus), the team found that sorting vocalizations into categories does not wait for the brain’s higher regions, as long assumed. It is already under way in a surprising mid-level player: the inferior colliculus, nestled in the midbrain.

This revelation doesn’t just enhance our understanding of bat biology. It may force neuroscientists to rethink how animals, possibly including humans, swiftly categorize the world of sound in real time, before conscious thought even begins.

The Science of Sound and Survival

To comprehend the importance of this discovery, it helps to understand the power of categorical perception. Imagine listening to a friend’s voice shift from a question to a command. Or hearing the difference between a bird’s mating call and its alarm cry. The underlying acoustics might change only subtly, perhaps with a slight rise in pitch or a shift in tone, but your brain doesn’t interpret them as a continuous gradient. It snaps the input into discrete categories with sharp boundaries, so that vital messages are rarely misread.

In animals, this is a life-saving skill. A misinterpreted signal might mean the difference between evading a predator and flying straight into danger. That pressure helps explain why brains have evolved mechanisms for lightning-fast interpretation of auditory information.

Traditionally, neuroscientists believed that this process took place primarily in the neocortex, the brain’s high-level processing center responsible for reasoning, perception, and consciousness. However, the latest study from Johns Hopkins proposes a startling twist: the bat’s brain may begin categorizing vocalizations before the signals ever reach the neocortex.

Meet the Echolocators: Big Brown Bats

The stars of this scientific drama are big brown bats, a common North American species known for their remarkable echolocation skills. These bats rely on frequency-modulated calls, which sweep rapidly through a range of pitches. They use these calls to map their environment, avoid obstacles, and interact socially, all in near-total darkness.

This species provided the perfect model for testing whether the brain categorizes vocal sounds at earlier stages of processing than previously thought. Unlike humans, who mostly use sound for communication, bats use it for both communication and navigation, making them an ideal organism for observing how different types of vocalizations are neurologically separated and processed.

Imaging the Mind in Motion: Two-Photon Calcium Imaging

To observe how these bats interpret vocal sounds, the researchers turned to a powerful neuroscience tool: two-photon calcium imaging. When a neuron fires, calcium ions flood into it. By equipping neurons with a fluorescent indicator that glows brighter as calcium levels rise, scientists can monitor the activity of many individual cells at once, almost in real time.
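Calcium signals like these are commonly summarized as a relative change in fluorescence, ΔF/F: how much brighter a cell gets compared with its resting level, F0. The short Python sketch below illustrates that general convention on a made-up trace. It assumes F0 is simply the mean of a few frames recorded before the sound; the function name, the trace values, and that baseline choice are illustrative assumptions, not the study’s actual analysis pipeline.

```python
import numpy as np


def delta_f_over_f(trace, baseline_frames):
    """Return (F - F0) / F0 for one neuron's raw fluorescence trace.

    F0 is estimated as the mean of the first `baseline_frames` samples,
    i.e. the frames recorded before the sound is played (an assumption
    made for this sketch).
    """
    trace = np.asarray(trace, dtype=float)
    f0 = trace[:baseline_frames].mean()  # resting fluorescence
    return (trace - f0) / f0


# Hypothetical trace: a flat baseline, then a calcium transient after a call is played.
trace = [100, 100, 100, 100, 150, 180, 140, 110]
dff = delta_f_over_f(trace, baseline_frames=4)
print(np.round(dff, 2))
```

Here the transient peaks at 0.8, an 80% rise over baseline. Real imaging pipelines typically also correct for motion, background (neuropil) signal, and slow drift, which this sketch omits.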

By applying this imaging to awake bats, the team could follow activity cell by cell. They were able to watch the brains of these animals as they listened to different vocalizations, essentially observing how the bats’ neural circuits sorted sounds into categories on the fly.

The target of this investigation was the inferior colliculus, a midbrain structure involved in early auditory processing. Located just two synapses away from the inner ear, it’s one of the brain’s first major hubs for interpreting sound. But until now, its role in categorical perception had been underappreciated.

The Brain’s Sonic Sorting Center

What the researchers found was nothing short of astonishing.

Individual neurons in the inferior colliculus responded selectively to certain categories of calls. Some lit up for social vocalizations, those used in communication between bats, while others responded to navigational echolocation signals. The neural activity wasn’t random: each neuron’s preference lined up with a particular kind of call.

To test this directly, the researchers morphed one type of vocalization into another in equidistant acoustic steps, creating a gradient from a social call to a navigation chirp. If the brain treated this as a smooth continuum, neural responses should change gradually from step to step. Instead, many neurons in the inferior colliculus flipped abruptly from one response to another, like a light switch, revealing an internal category boundary even though the acoustic differences between neighboring steps were gradual.
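The logic of the morphing experiment can be illustrated with a toy model. The Python sketch below compares two hypothetical neurons along an 11-step continuum from a social call (0.0) to an echolocation call (1.0): one whose response falls off linearly, and one with a steep sigmoid boundary at the midpoint. The boundary position, steepness, and number of steps are invented for illustration and are not fitted to the study’s data. The point is only that a categorical neuron concentrates almost all of its change into the steps around the boundary.

```python
import numpy as np

# Hypothetical morph continuum: 0.0 = pure social call, 1.0 = pure echolocation call,
# sampled in equidistant steps.
morph = np.linspace(0.0, 1.0, 11)


def graded_response(x):
    """A neuron whose response simply tracks the acoustic change (no category)."""
    return 1.0 - x


def categorical_response(x, boundary=0.5, steepness=30.0):
    """A neuron with a sharp sigmoid boundary between the two call categories.
    `boundary` and `steepness` are illustrative values, not fitted to data."""
    return 1.0 / (1.0 + np.exp(steepness * (x - boundary)))


def largest_step(responses):
    """Biggest change in response between neighboring morph steps."""
    return float(np.max(np.abs(np.diff(responses))))


for name, fn in [("graded", graded_response), ("categorical", categorical_response)]:
    r = fn(morph)
    print(f"{name:12s} largest step = {largest_step(r):.2f}")
```

The graded neuron changes by 0.10 at every step, while the categorical one changes by about 0.45 across each of the two steps flanking the boundary and barely at all elsewhere. Comparing step sizes is one simple way to tell a category boundary from a smooth gradient; analyses of categorical perception often fit a sigmoid curve to each neuron’s responses instead.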

Perhaps most intriguingly, neurons that responded to the same categories formed spatial clusters within the midbrain. These clusters were independent of tonotopy, the brain’s usual method of organizing sounds by frequency. In other words, the brain wasn’t just sorting by pitch; it was organizing by call type.
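Whether same-category neurons really sit together can be checked with a simple spatial statistic. The Python sketch below uses one generic approach, chosen here for illustration rather than taken from the paper: it divides the mean distance between neurons of different categories by the mean distance between neurons of the same category, then shuffles the labels to see how often chance alone produces that much clustering. The positions (in hypothetical micrometers), the two-category labels, and the function names are all made up. A full analysis would also need to show that the clusters do not simply follow the frequency map, which this sketch does not attempt.

```python
import numpy as np


def clustering_index(positions, labels):
    """Mean distance between differently labeled neurons divided by the mean
    distance between same-labeled neurons. Values above 1 mean neurons of the
    same category sit closer together than neurons of different categories."""
    labels = np.asarray(labels)
    # Pairwise Euclidean distances between every pair of neurons.
    dists = np.linalg.norm(positions[:, None, :] - positions[None, :, :], axis=-1)
    same = labels[:, None] == labels[None, :]
    not_self = ~np.eye(len(labels), dtype=bool)  # ignore each neuron's distance to itself
    return dists[~same].mean() / dists[same & not_self].mean()


def permutation_p_value(positions, labels, n_shuffles=1000, seed=0):
    """Share of random label shuffles that cluster at least as strongly as the real labels."""
    rng = np.random.default_rng(seed)
    observed = clustering_index(positions, labels)
    null = np.array([clustering_index(positions, rng.permutation(labels))
                     for _ in range(n_shuffles)])
    # +1 in numerator and denominator counts the observed labeling itself.
    p = (np.sum(null >= observed) + 1) / (n_shuffles + 1)
    return observed, p


# Hypothetical field of view: 10 "social" neurons around one spot and
# 10 "echolocation" neurons around another spot 60 micrometers away.
rng = np.random.default_rng(1)
social = rng.normal(loc=[0.0, 0.0], scale=10.0, size=(10, 2))
echo = rng.normal(loc=[60.0, 0.0], scale=10.0, size=(10, 2))
positions = np.vstack([social, echo])
labels = np.array(["social"] * 10 + ["echolocation"] * 10)

observed, p = permutation_p_value(positions, labels)
print(f"clustering index = {observed:.2f}, permutation p = {p:.3f}")
```

With well-separated toy groups the index comes out well above 1 and almost no shuffle matches it; if the two kinds of neurons were intermixed, the index would hover near 1.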

Early, Fast, and Purposeful: A New Model of Auditory Processing

This study challenges a conventional view in neuroscience. Categorical perception, once thought to be the domain of higher cognition in the neocortex, now appears to begin in the subcortical midbrain, earlier in the auditory pathway than previously believed. If that holds, the cortex may receive sounds that are already partly sorted rather than doing all of the sorting itself.

“Specified channels for ethologically relevant sounds are spatially segregated early in the auditory hierarchy,” the authors write, “enabling rapid subcortical organization into categorical primitives.”

Put simply, animals may not need to “think” to recognize a vocal cue. Their midbrains appear primed to sort it into a category almost as soon as it is heard. This kind of pre-conscious processing could allow faster reaction times, essential for survival in the wild.

Beyond Bats: Implications for Neuroscience and AI

While the study focused on bats, its implications are far-reaching. If categorical processing can happen in the midbrain of one species, could it also occur in others—including humans?

This challenges long-standing assumptions about the hierarchical nature of the brain’s auditory system. It raises the possibility that we, too, categorize certain sounds, such as a scream signaling danger or a loved one’s voice, before they reach conscious awareness.

Moreover, the findings may inspire advances in artificial intelligence. Many machine learning systems today attempt to mimic how humans categorize sensory data. Understanding how biology performs this task rapidly and efficiently could lead to smarter, more adaptable algorithms in speech recognition, robotics, and autonomous navigation.

Echoes of Insight: What Comes Next?

The study opens up exciting paths for future research. Could similar spatial clusters exist in other animals—or even in the human midbrain? How flexible are these categorical boundaries? Are they shaped by experience or hardwired by evolution?

Moreover, the discovery could shed light on auditory disorders. If categorical perception begins subcortically, impairments in midbrain processing might contribute to difficulties seen in conditions such as autism spectrum disorder or dyslexia, in which differences in sound categorization have been reported.

It also raises evolutionary questions: Did the ability to categorize sounds in the midbrain give early mammals a survival edge? And if so, how did this architecture evolve across different lineages?

Conclusion: Decoding the Symphony of Life

In the rhythmic pulse of bat calls, amidst the eerie clicks and fluttering wings, lies a story of extraordinary neural sophistication. The research from Johns Hopkins University has peeled back another layer of that mystery, revealing that the brain starts sorting sounds into meaningful categories at an earlier stage than long assumed, before they ever reach the cortex.

For the bat, this means navigating darkness with stunning agility, communicating across distance, and recognizing danger in a single sound. For science, it’s a glimpse into a previously hidden world—a world where the brain categorizes life’s soundtrack before thought even begins.

In this discovery, we see not only how bats survive, but how nature itself engineers intelligence—not in lofty cerebral heights, but in the deep, primal core of the brain. A core that listens, sorts, and reacts—not with deliberation, but with instinct.

The night may be silent to us, but for the bat, it is alive with meaning. And thanks to this new research, we now understand just a bit more of that hidden language.

Reference: Jennifer Lawlor et al., “Spatially clustered neurons in the bat midbrain encode vocalization categories,” Nature Neuroscience (2025). DOI: 10.1038/s41593-025-01932-3