In the vast timeline of human innovation, there are moments that change everything — the invention of the wheel, the harnessing of electricity, the decoding of DNA. And now, in our century, there is the rise of artificial intelligence powered by deep learning. It is a revolution not in metal and gears, but in invisible networks of numbers and code that allow machines to “see,” “hear,” “speak,” and, in a way, “imagine.”

Deep learning is often portrayed as a mysterious black box — a technology so complex that only a select few can truly understand it. But its story is not just about algorithms and mathematics. It is a story about how humanity learned to teach machines in a way that mirrors our own brains, about the decades of false starts and breakthroughs, about the people who refused to give up when the world thought they were chasing science fiction.

To understand deep learning, we must first step back and ask a deceptively simple question: how do we learn? For humans, it’s about experiences, patterns, and feedback. We see the sun rise every morning, and we learn to expect it. We hear a language spoken around us, and we absorb its patterns until words form naturally in our mouths. Deep learning is our attempt to give machines that same gift — to make them capable of discovering patterns in data, without being told every step of the way what to do.

The Brain as Blueprint

The inspiration for deep learning is rooted in neuroscience. In the 1940s, long before smartphones and cloud computing, scientists like Warren McCulloch and Walter Pitts began building mathematical models of neurons — the nerve cells that make up the brain. A neuron takes inputs, processes them, and decides whether to send a signal onward. These scientists imagined a network of artificial neurons that could perform logical tasks, much like a biological brain.
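To make the idea concrete, here is a minimal sketch of a threshold neuron in the spirit of those early models. The function names, the weights, and the threshold values are illustrative assumptions, not a reconstruction of the original 1940s formulation.

```python
def threshold_neuron(inputs, weights, threshold):
    """Return 1 (fire) when the weighted sum of the inputs reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def logical_and(a, b):
    # Both inputs must be active to reach a threshold of 2.
    return threshold_neuron([a, b], [1, 1], threshold=2)


def logical_or(a, b):
    # A single active input is enough to reach a threshold of 1.
    return threshold_neuron([a, b], [1, 1], threshold=1)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", logical_and(a, b), "OR:", logical_or(a, b))
```

The same unit performs different logical tasks depending only on its threshold, which hints at why networks of such units seemed so promising.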

This idea was intoxicating. If we could replicate the brain’s structure in silicon and code, perhaps machines could learn just like us. The first artificial neural networks were painfully simple — just a few “neurons” connected together. They could recognize extremely basic patterns, but nothing close to human intelligence. Still, the seed was planted.

In the 1950s and 60s, pioneers like Frank Rosenblatt built perceptrons — early neural network models that could learn from examples. They seemed magical: show them enough images of circles and squares, and they could tell the difference without being explicitly programmed. But then came the winter.

The Cold Years of AI

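In 1969, a devastating critique from Marvin Minsky and Seymour Papert showed that perceptrons had severe limitations. A single-layer perceptron can only separate its inputs with a straight line (or a flat plane), so it cannot learn even a function as simple as XOR, where the output is 1 only when exactly one of two inputs is 1. Solving such problems requires multiple layers, and at the time no one had a practical way to train them. Funding dried up, enthusiasm waned, and for more than a decade neural networks were widely dismissed as a dead end.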
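A classic instance of this limitation is the XOR function, whose output is 1 only when exactly one of its two inputs is 1. The sketch below trains a single-layer perceptron with the standard perceptron update rule on AND and on XOR. The helper names, learning rate, and epoch count are assumptions chosen for illustration.

```python
def predict(weights, bias, x):
    """Single-layer perceptron: fire if the weighted sum plus bias is positive."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train_perceptron(samples, epochs=50, lr=1.0):
    """Perceptron learning rule: shift the weights toward each misclassified example."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


def accuracy(samples, weights, bias):
    return sum(predict(weights, bias, x) == t for x, t in samples) / len(samples)


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_acc = accuracy(AND, *train_perceptron(AND))
xor_acc = accuracy(XOR, *train_perceptron(XOR))
print("AND accuracy:", and_acc)
print("XOR accuracy:", xor_acc)
```

AND can be separated by a single straight line, so the perceptron learns it perfectly. No straight line separates the XOR cases, so however long the training runs, at least one of the four examples is always misclassified.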

This was the first “AI winter” for deep learning. Researchers who believed in the idea kept working in the shadows, tinkering with mathematical improvements, but the mainstream AI world moved on to other methods — expert systems, symbolic reasoning, and hand-coded rules. The dream of machines that could learn like brains was shelved as an impractical fantasy.

Yet, quietly, progress was being made. In the 1980s, the rediscovery and popularization of a technique called backpropagation — a way for neural networks to adjust their internal connections using feedback from mistakes — reignited interest. Backpropagation allowed networks with multiple layers to learn complex patterns. This was the birth of deep neural networks, though the term “deep learning” had not yet entered the spotlight.

The Rise of Data and Computing Power

For all their promise, deep neural networks in the 1980s and 90s were still limited by the two great bottlenecks of the era: data and computing power. Training a large network required enormous amounts of both. At the time, computers were too slow, and large labeled datasets — the fuel for deep learning — were rare.

But technology does not stand still. The explosion of the internet in the 2000s changed everything. Humanity began generating data at a scale never seen before. Billions of examples of human knowledge and activity, in the form of images, videos, text, and speech, were being created and stored online.

At the same time, the gaming industry, in its quest for realistic graphics, had driven the development of powerful graphics processing units (GPUs). These chips turned out to be well suited to the massively parallel arithmetic that deep learning demands. Training runs that had once been impractically slow could now finish many times faster.

The stage was set for deep learning’s renaissance.

The Breakthrough That Changed Everything

The turning point came in 2012, when a deep learning model called AlexNet stunned the research community. In the ImageNet Large Scale Visual Recognition Challenge, an international competition to recognize objects in images, AlexNet achieved a top-5 error rate of about 15 percent, roughly ten percentage points better than the runner-up. Its creators, Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton, had trained a deep convolutional neural network on more than a million labeled images using GPUs.

The effect was electric. Overnight, deep learning went from an obscure corner of AI research to the method everyone wanted to use. Companies rushed to hire deep learning experts. Academic conferences were suddenly dominated by neural network papers. The black box was no longer a curiosity — it was a winning strategy.

How Deep Learning Works

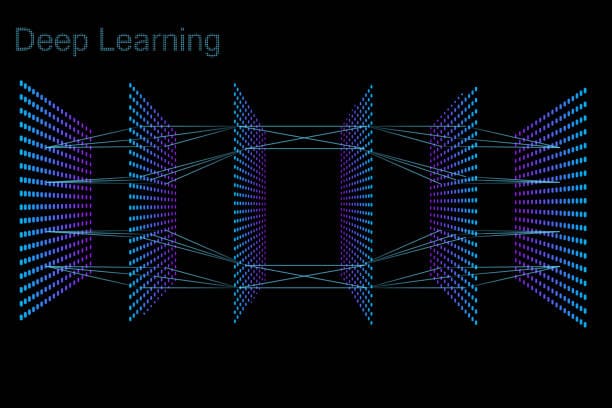

At its core, a deep learning system is a network of artificial neurons organized in layers. The first layer takes raw input: pixels of an image, words in a sentence, or samples of audio. Each neuron in the layers that follow computes a weighted sum of its inputs, applies a nonlinear function, and passes the result to the next layer. As the data flows through the network, the layers gradually extract more and more abstract features.
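The flow of data through layers can be sketched in a few lines of plain Python. Everything specific here is an assumption made for illustration: the layer sizes, the hand-picked weights, and the choice of ReLU as the mathematical function applied by each hidden neuron. A real network would learn its weights from data.

```python
def relu(values):
    """A common nonlinearity: keep positive values, replace negatives with zero."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One layer: each neuron computes a weighted sum of all inputs plus a bias."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def forward(x, layers):
    """Pass the input through every layer in order."""
    activation = x
    for i, (weights, biases) in enumerate(layers):
        activation = dense(activation, weights, biases)
        if i < len(layers) - 1:  # apply the nonlinearity on hidden layers only
            activation = relu(activation)
    return activation


# Hypothetical weights: 3 inputs -> 2 hidden neurons -> 1 output.
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),
    ([[1.0, -1.0]], [0.2]),
]

output = forward([1.0, 2.0, 3.0], layers)
print(output)
```

Each layer sees only the output of the one before it, which is what lets later layers build on the features extracted by earlier ones.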

For example, in an image recognition network, the first layers might detect simple edges and colors. The middle layers combine these into shapes and textures. The final layers identify whole objects — a cat, a car, a face. The magic is that the network learns these features automatically from data, without a human programmer deciding what to look for.
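To give a feel for what an edge-detecting feature computes, the sketch below slides a small filter across a tiny grayscale image. The filter is written by hand purely for illustration. In a trained network, filters like this one emerge from the data rather than from a programmer. The image values and function name are assumptions.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image and sum the elementwise products.

    As in most deep learning libraries, the kernel is not flipped, so this
    is technically a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# A tiny image: dark on the left (0), bright on the right (9).
image = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]

# Responds strongly where brightness increases from left to right.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

response = convolve2d(image, vertical_edge)
for row in response:
    print(row)
```

The response is zero over the flat regions and large only where the dark and bright halves meet, which is exactly the kind of signal later layers can combine into shapes and textures.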

The learning happens through a process of trial and error guided by a cost function (also called a loss function), a mathematical measure of how wrong the network’s predictions are. Backpropagation calculates how much each connection in the network contributed to the error, and gradient descent nudges each weight a small step in the direction that reduces it. Over many iterations, often millions, the network can become astonishingly good at its task.
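The loop of measuring error, tracing it backward, and adjusting weights can be shown on a deliberately tiny network: one input, one hidden neuron, one output. This is a sketch under stated assumptions. The mean squared error cost, the tanh hidden unit, the starting weights, the learning rate, and the toy dataset are all chosen for illustration.

```python
import math


def forward(params, x):
    """Tiny network: input -> one tanh hidden neuron -> linear output."""
    w1, b1, w2, b2 = params
    h = math.tanh(w1 * x + b1)
    return h, w2 * h + b2


def loss_and_grads(params, data):
    """Mean squared error, plus its gradient for each parameter via the chain rule."""
    w1, b1, w2, b2 = params
    n = len(data)
    loss = 0.0
    grads = [0.0, 0.0, 0.0, 0.0]
    for x, y in data:
        h, y_hat = forward(params, x)
        err = y_hat - y
        loss += err ** 2 / n
        d_yhat = 2 * err / n        # how the cost changes with the output
        d_h = d_yhat * w2           # propagate back through the output weight
        d_z = d_h * (1 - h * h)     # propagate back through tanh
        grads[0] += d_z * x         # gradient for w1
        grads[1] += d_z             # gradient for b1
        grads[2] += d_yhat * h      # gradient for w2
        grads[3] += d_yhat          # gradient for b2
    return loss, grads


def train(data, steps=500, lr=0.1):
    """Gradient descent: repeatedly step each parameter against its gradient."""
    params = [0.5, 0.0, 0.5, 0.0]   # arbitrary starting point
    history = []
    for _ in range(steps):
        loss, grads = loss_and_grads(params, data)
        history.append(loss)
        params = [p - lr * g for p, g in zip(params, grads)]
    return params, history


# Toy dataset: nine points sampled from the curve y = tanh(2x).
data = [(x / 4, math.tanh(2 * x / 4)) for x in range(-4, 5)]

params, history = train(data)
print("first loss:", history[0])
print("last loss: ", history[-1])
```

The cost recorded in `history` shrinks as training proceeds. Real networks follow the same recipe with millions of weights, and software libraries compute the gradients automatically instead of by hand.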

The Many Faces of Deep Learning

Today, deep learning is not a single technology but a family of architectures, each suited to different types of data. Convolutional neural networks (CNNs) have long dominated computer vision. Recurrent neural networks (RNNs), including variants such as long short-term memory (LSTM) networks, handle sequential data like speech and text. Transformers, a newer architecture introduced in 2017, have revolutionized natural language processing, enabling models like BERT and GPT to interpret and generate human-like text.

Generative adversarial networks (GANs) have opened the door to machines that can create, producing realistic images, music, and even deepfake videos. Reinforcement learning combined with deep networks has produced agents that play Go at superhuman levels and compete with top human players in StarCraft II.

Each breakthrough has come from researchers pushing the boundaries of what these networks can do, often inspired by both mathematics and biology, and always fueled by vast computational resources.

The Power and the Peril

Deep learning has given us machines that can match or, in some studies, outperform specialists at specific diagnostic tasks on medical images, translate between languages in real time, and generate artwork that is often hard to distinguish from human creations. It is the engine behind autonomous vehicles, voice assistants, and countless hidden systems that recommend what we watch, buy, and read.

But with this power comes danger. Deep learning models are often opaque — their decisions difficult to explain. They can be biased, amplifying the prejudices present in their training data. They can be fooled by adversarial examples — tiny changes to input data that cause absurdly wrong outputs. And their hunger for data raises serious privacy concerns.
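Adversarial examples are easier to believe once you see one. The sketch below uses a toy linear classifier rather than a deep network, and a perturbation in the style of the fast gradient sign method, where every input value is shifted slightly in whichever direction hurts the prediction most. The weights, the input, the labels, and the step size are all made up for illustration.

```python
def score(weights, x):
    return sum(w * xi for w, xi in zip(weights, x))


def classify(weights, x):
    """Toy linear classifier with two hypothetical labels."""
    return "cat" if score(weights, x) > 0 else "dog"


def sign(v):
    return (v > 0) - (v < 0)


def adversarial(weights, x, epsilon):
    """Shift every input value by at most epsilon, against the current decision."""
    direction = -1 if score(weights, x) > 0 else 1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]


# 100 input features with alternating positive and negative weights.
weights = [1.0 if i % 2 == 0 else -1.0 for i in range(100)]
x = [0.52 if i % 2 == 0 else 0.48 for i in range(100)]

x_adv = adversarial(weights, x, epsilon=0.05)
largest_change = max(abs(a - b) for a, b in zip(x, x_adv))

print("original: ", classify(weights, x))
print("perturbed:", classify(weights, x_adv))
print("largest change to any input value:", round(largest_change, 3))
```

No single input value moves by more than 0.05, yet the label flips, because a hundred tiny nudges that all push the same way add up to a large change in the score. Images have far more input values than this toy example, which is part of why changes invisible to a person can mislead a network.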

There is also the question of control. As models grow larger and more capable, who decides how they are used? The same technology that can detect tumors could also be used for mass surveillance. The same generative models that create art could also create convincing propaganda. The future of deep learning is as much an ethical challenge as a technical one.

Towards a More Transparent and Human-Centered AI

The next chapter in deep learning’s story may be less about raw performance and more about trust, transparency, and alignment with human values. Researchers are working on methods to make neural networks explain their reasoning, to ensure they remain fair and unbiased, and to reduce their energy consumption.

There is also a growing movement to combine deep learning with other forms of reasoning, creating hybrid systems that can both learn from data and apply logical rules. The goal is to build AI that not only recognizes patterns, but also understands them in a way that aligns with human thinking.

As we move forward, it’s worth remembering that deep learning is a tool — a powerful one, but still a tool. Its impact on the world depends on the hands that wield it.

The Human Spark in the Machine

In a sense, deep learning is not about making machines more like humans, but about reflecting the best of human curiosity in our creations. We have given computers the ability to learn from experience, to find meaning in oceans of data, to surprise us with their capabilities. But the spark that started it all — the refusal to stop asking questions, the drive to see patterns in chaos — is still deeply human.

The black box is slowly opening, revealing a technology that is both an achievement of engineering and a mirror of our own minds. Deep learning demystified is still awe-inspiring, because the mystery was never entirely in the algorithms — it was in the fact that we could dream them into being.