For centuries, the skies have been symbols of freedom, ambition, and power. From the first fragile aircraft lifting off the ground to the roaring engines of jet fighters and spacecraft, humanity has always looked upward with determination and imagination. Today, however, a new revolution is unfolding—one not driven by stronger engines or faster jets, but by intelligence itself. Artificial Intelligence (AI) is reshaping aerospace and defense in profound ways, introducing new dimensions of autonomy and assurance that are redefining how we explore, protect, and understand our world.

This is not just a story of technology; it is a story of trust, ethics, and responsibility. As AI takes on roles once reserved for human judgment—navigating, targeting, decision-making, and analyzing vast streams of data—the question becomes not only what AI can do, but how we can ensure it does so reliably, safely, and in alignment with human values.

The Fusion of AI and Aerospace

At its heart, aerospace has always been about pushing limits. The industry demands precision, efficiency, and innovation, operating in some of the most unforgiving environments imaginable: the thin upper atmosphere, the vacuum of space, and the high-pressure realities of defense missions. AI fits into this ecosystem naturally, offering tools to process data at speeds no human can match, recognize patterns invisible to the eye, and learn from experience to adapt in real time.

From air traffic management to satellite navigation, from predictive maintenance of aircraft to mission planning for defense systems, AI has become a co-pilot, analyst, and strategist. It is the silent force that enables pilots to fly safer, commanders to make faster decisions, and engineers to design smarter systems.

Autonomy in the Air

One of the most visible transformations AI brings to aerospace and defense is autonomy. Autonomous systems, whether unmanned aerial vehicles (UAVs, commonly called drones) or spacecraft, rely heavily on AI to operate without direct human control. But autonomy is not a simple concept; it spans a spectrum.

At the lower end are systems that assist humans, like autopilots or collision-avoidance systems that provide warnings and guidance. At the higher end are fully autonomous drones that can carry out reconnaissance missions, adjust their paths to avoid threats, and return home, all without human intervention. In between lies a rich landscape of shared control, where humans and machines collaborate, each contributing its own strengths.

The rise of autonomy raises both awe and anxiety. On one hand, autonomous drones can save lives by undertaking dangerous reconnaissance in hostile zones or delivering supplies in disaster-stricken areas. On the other, questions emerge about accountability: if an autonomous system makes a mistake, who is responsible? These are not technical questions alone—they touch on law, ethics, and philosophy, requiring societies to grapple with how much authority we entrust to machines.

Assurance: Building Trust in Intelligent Systems

Autonomy cannot exist without assurance. Assurance is the foundation of trust—confidence that a system will behave as expected, safely and securely, even in the most unpredictable environments. In aerospace and defense, assurance is not optional. A miscalculation in civilian air traffic management can endanger hundreds of lives; a malfunction in a defense drone can escalate into conflict.

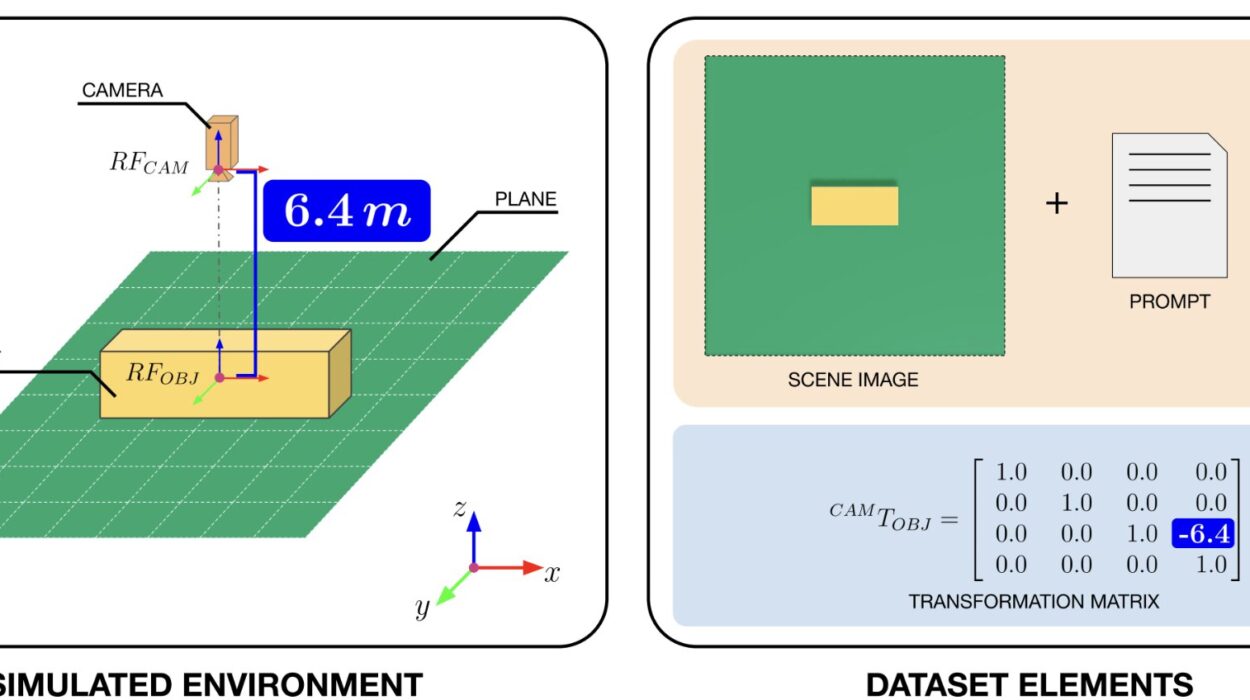

AI assurance involves multiple layers. It requires transparency, so that engineers and operators can understand how a system reaches its conclusions. It demands robustness, ensuring that AI can withstand cyberattacks or unexpected inputs without failing catastrophically. It needs rigorous testing, not just in simulations but in real-world scenarios, to prove reliability under stress.

Yet assurance also involves human psychology. People must feel comfortable trusting AI, not just because the system is technically reliable but because its purpose aligns with human intent. In defense contexts especially, this trust must extend to life-or-death decisions. The challenge is not simply to make AI intelligent, but to make it worthy of trust.

Predictive Maintenance: AI Behind the Scenes

Much of AI’s impact in aerospace is invisible to passengers and operators but critical nonetheless. Predictive maintenance is a perfect example. Modern aircraft are packed with sensors that record vibrations, temperatures, pressures, and countless other variables. These data streams are far too complex for humans to analyze in real time. AI steps in to recognize patterns that signal potential failures before they happen.

By predicting when a part will fail or when a system needs servicing, AI helps prevent costly breakdowns, reduce downtime, and enhance safety. Airlines can cut maintenance costs, defense forces can keep their fleets mission-ready, and lives are safeguarded by avoiding catastrophic malfunctions. It is AI not as a glamorous pilot but as an invisible guardian, quietly helping to keep the skies safe.
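
To make the idea of spotting a failure signature in sensor data concrete, here is a minimal sketch in Python. It assumes a single stream of vibration readings and flags any value that sits far outside the recent baseline. The function name `flag_anomalies`, the window size, the threshold, and the sample readings are all illustrative assumptions; production systems typically use far richer models and many sensors at once.

```python
from statistics import mean, stdev


def flag_anomalies(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate from the trailing-window
    mean by more than `threshold` standard deviations.

    Hypothetical helper for illustration only.
    """
    flagged = []
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # A perfectly flat baseline gives no scale to score against; skip it.
            continue
        if abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Made-up vibration amplitudes with one spike at index 7.
vibration = [1.00, 1.02, 0.98, 1.01, 0.99, 1.00, 1.01, 1.60, 1.02, 0.99]
anomalies = flag_anomalies(vibration)
print(anomalies)
```

A trailing window is used so that each reading is judged only against data that was available before it arrived, which is the situation an onboard monitor would be in.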

AI in Space Exploration

Aerospace is not limited to Earth’s atmosphere. AI has already traveled to Mars, where rovers like Perseverance use autonomous navigation to explore landscapes millions of miles from human controllers. Because a radio signal takes anywhere from a few minutes to more than twenty minutes to travel one way between Earth and Mars, controllers cannot steer a rover in real time. Onboard autonomy is therefore essential: the rover must decide for itself which paths to take and how to avoid hazards, and it can help choose which rocks merit a closer look.
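
As a rough illustration of what choosing a path around hazards can mean, the sketch below runs a breadth-first search over a small grid in which cells marked `1` are hazards. This is not the algorithm used by Perseverance or any real rover; the grid, the function name `plan_path`, and the four-direction movement model are assumptions chosen to keep the example short.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Breadth-first search on a grid; cells equal to 1 are hazards.

    Returns the shortest list of (row, col) cells from start to goal,
    or None if the goal cannot be reached. Hypothetical helper.
    """
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


# Made-up terrain: a wall of hazards forces a detour along the right edge.
terrain = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
route = plan_path(terrain, (0, 0), (2, 0))
print(route)
```

Real planners also weigh slope, wheel slip, and energy use, but the core idea is the same: the vehicle searches its own map rather than waiting for instructions.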

In future missions—whether to the Moon, Mars, or beyond—AI will play even greater roles. Autonomous spacecraft will chart courses through the stars, monitor life support systems for astronauts, and even assist in scientific research by identifying anomalies in data faster than humans could. In space, where help is impossibly far away, AI becomes not just an assistant but a partner in survival.

Defense and Decision-Making

In defense, AI’s role is both promising and controversial. Intelligent systems can analyze enormous amounts of surveillance data, detecting patterns of movement or activity that suggest threats. They can coordinate fleets of drones, manage logistics, and even simulate battlefield scenarios to aid commanders in planning.

Yet when AI enters the realm of decision-making about life and death, the ethical stakes soar. Autonomous weapons, often called “killer robots” in public debate, represent a frontier where autonomy and assurance collide. Can we allow machines to make lethal decisions? Should there always be a human “in the loop”? These are debates unfolding not just in laboratories and defense agencies but in the halls of the United Nations, where international negotiations attempt to balance technological possibility with moral responsibility.

Cybersecurity and Resilience

Aerospace and defense systems are increasingly digital, connected, and therefore vulnerable. AI can both strengthen and threaten cybersecurity. On one hand, AI can detect anomalies in networks, identifying cyber intrusions faster than human analysts. It can learn from attacks and adapt its defenses, making systems more resilient.

On the other hand, adversaries can also use AI to craft sophisticated cyberattacks, creating a new digital arms race. Assurance, in this sense, extends to ensuring that AI itself cannot be corrupted, manipulated, or fooled. Defending the digital frontier has become as critical as defending airspace.

Human-AI Collaboration

Despite the rise of autonomy, humans are not being replaced—they are being redefined. The future of aerospace and defense will be one of collaboration, where humans and AI work together, each complementing the other’s strengths. Humans bring judgment, values, and creativity; AI brings speed, precision, and pattern recognition.

For pilots, AI may serve as a trusted co-pilot, monitoring conditions and suggesting actions without removing ultimate authority. For commanders, AI may act as a strategic advisor, presenting options and outcomes without enforcing decisions. For astronauts, AI may function as a teammate, handling routine tasks so humans can focus on discovery and innovation.

The essence of assurance is not blind trust in machines, but confidence in the partnership between humans and AI.
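
The advisory roles described above can be sketched as a simple approval gate: the AI proposes an action with its rationale, and nothing is executed unless a human accepts it. The names `Recommendation` and `resolve` are hypothetical, and the lambda functions below merely stand in for a real operator's decision.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Recommendation:
    """What the AI proposes, with the reasoning a human needs to judge it."""
    action: str
    confidence: float
    rationale: str


def resolve(rec: Recommendation,
            operator_approves: Callable[[Recommendation], bool]) -> Optional[str]:
    """Return the action only if the human operator approves it.

    The AI never acts on its own here; a declined recommendation yields None.
    """
    return rec.action if operator_approves(rec) else None


advice = Recommendation(
    action="descend to 9000 m",
    confidence=0.87,
    rationale="turbulence reported ahead",
)

# Stand-ins for a human decision (assumptions for this example only).
accepted = resolve(advice, lambda rec: rec.confidence >= 0.8)
declined = resolve(advice, lambda rec: False)
print(accepted, declined)
```

Keeping the rationale alongside the action is a deliberate choice: the human can only exercise real authority if the system explains why it is suggesting something.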

Ethics and Responsibility

As AI assumes greater roles in aerospace and defense, the question of ethics grows ever more urgent. What principles guide the use of AI in warfare? How do we balance efficiency with humanity? How do we ensure AI does not exacerbate inequalities or become a tool of oppression?

International efforts are underway to define standards and guidelines, but technology often outpaces regulation. The responsibility falls on scientists, engineers, policymakers, and citizens alike to shape AI’s role in ways that reflect shared values. Autonomy without ethics is dangerous; assurance without accountability is hollow. Together, they must form a framework where AI strengthens security without undermining humanity.

The Future of Autonomy and Assurance

Looking forward, AI in aerospace and defense will become more sophisticated, more integrated, and more essential. Swarms of autonomous drones may patrol skies, guided by AI coordination. Satellites may use AI to adapt to threats in orbit. Spacecraft may chart courses to new worlds with minimal human oversight.

Yet no matter how advanced AI becomes, assurance will remain central. Trust cannot be assumed—it must be earned through transparency, testing, and ethical design. The ultimate measure of AI’s success in aerospace and defense will not be how autonomous it becomes, but how well it earns and keeps human trust.

A Sky Shared Between Minds and Machines

Artificial Intelligence in aerospace and defense is not about replacing human beings—it is about extending human reach. It allows us to fly further, react faster, and explore deeper than ever before. But more than that, it challenges us to reconsider what it means to trust, to decide, and to protect.

Autonomy without assurance is reckless. Assurance without autonomy is stagnant. Together, they define the path forward: a future where human and machine share the sky, the mission, and the responsibility.

In the end, AI in aerospace and defense is not only about machines learning to fly. It is about humanity learning to trust—wisely, cautiously, and with vision—so that the wonders of the sky remain not just accessible, but secure, for generations to come.