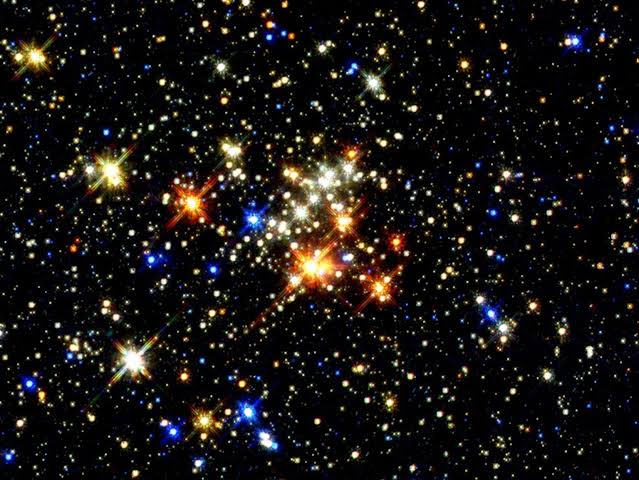

Night after night, the sky opens above us like an endless tapestry, stitched with light from billions of stars, galaxies, and mysterious objects that lie far beyond human reach. Yet beneath the beauty of this cosmic artwork lies an urgent scientific quest: to make sense of it all.

Every point of light represents a question. Is it a nearby star, burning its ancient fuel in quiet isolation? Is it a galaxy, spinning with billions of suns, evolving over eons? Or is it a quasar, a brilliant beacon from the young universe, powered by a supermassive black hole feeding furiously at its center?

Answering these questions isn’t just about satisfying cosmic curiosity. It’s about decoding the history of our universe, understanding how galaxies form and grow, how matter evolves, and ultimately, how we got here. But classifying millions—soon billions—of celestial objects is a task beyond human capacity. It demands not just telescopes, but new tools. And now, thanks to a breakthrough by scientists at the Yunnan Observatories of the Chinese Academy of Sciences, astronomy has just gained one.

Intelligence Born of Starlight

Published in The Astrophysical Journal Supplement Series, this new study introduces a neural network-based method that reimagines how we classify stars, galaxies, and quasars. At the heart of the effort is a sophisticated artificial intelligence (AI) model trained to interpret the complex visual and spectral fingerprints of the cosmos.

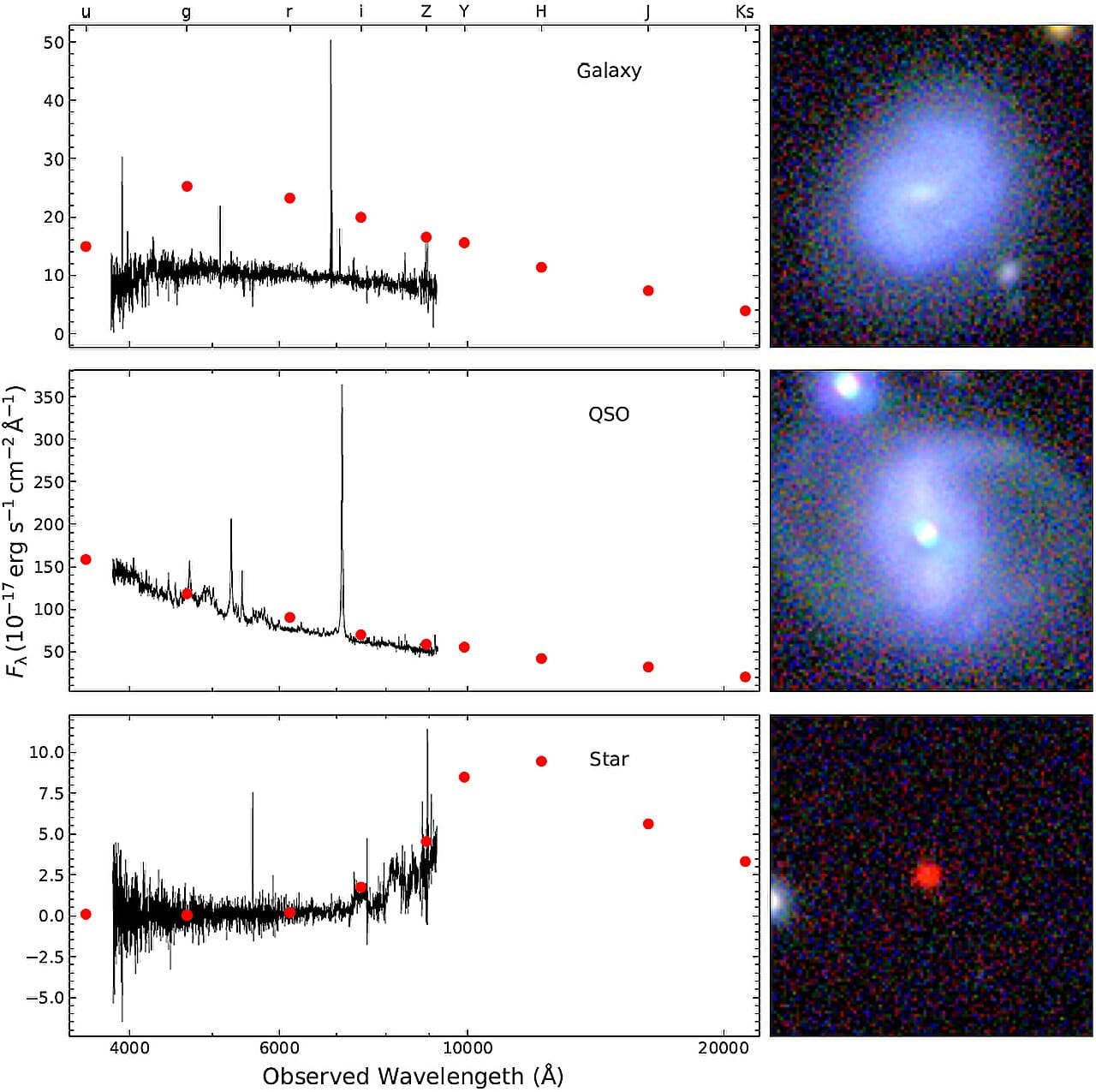

Until now, astronomers have had to choose between two imperfect paths: the precision of spectroscopy, and the scale of photometry. Spectroscopy—breaking light into its component colors—yields exquisite detail, like reading a star’s biography in full. But it’s slow, costly, and impractical for millions of faint objects.

Photometric imaging, in contrast, can capture vast swaths of the sky quickly, detecting light in broad bands and revealing faint sources otherwise invisible. But when limited to shape or brightness profiles, it often blurs the boundaries between stars, galaxies, and quasars—especially at great distances. A distant quasar and a nearby star can look deceptively alike: simple points of light, stripped of context.

The researchers knew that relying on either method alone wouldn’t be enough. So, they turned to the realm of machine learning—where patterns are not explicitly programmed, but learned.

Learning to See the Universe in Full Dimension

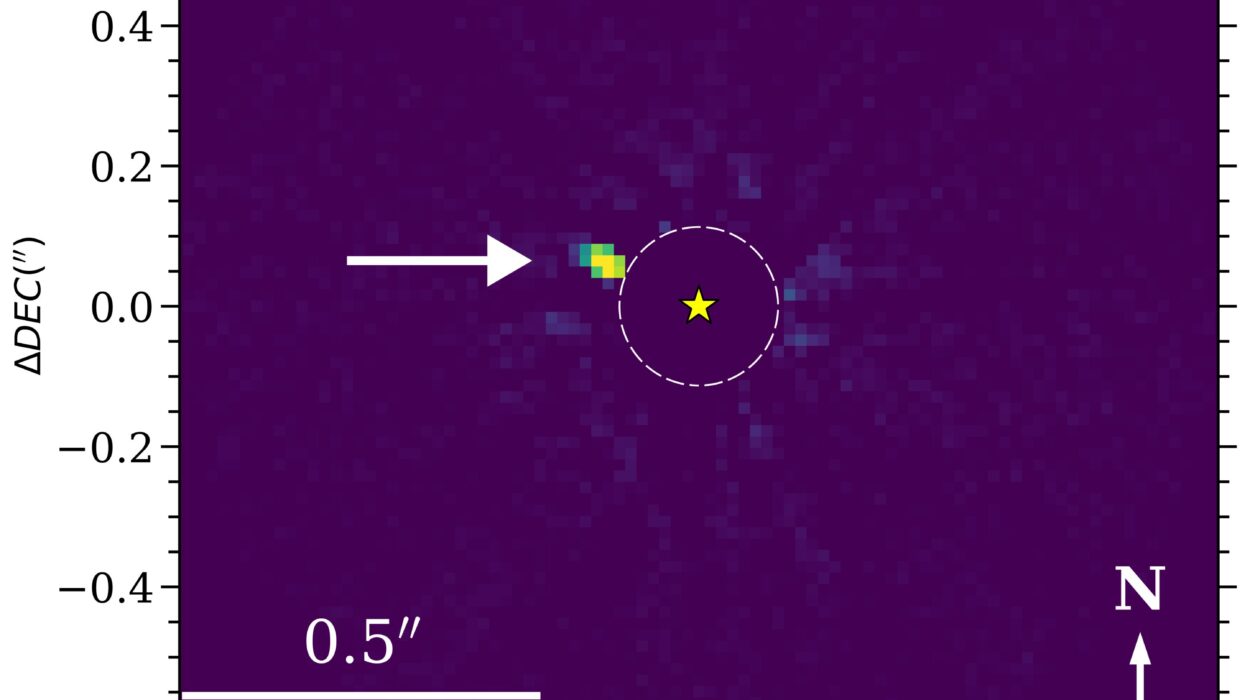

What sets this neural network apart is its multimodal design. Rather than analyzing an object’s shape (morphology) or color distribution (SED, or spectral energy distribution) in isolation, the model weaves both types of information into its decision-making process. Like a seasoned astronomer who considers not only what an object looks like but also how its light is spread across colors, this AI weighs both kinds of evidence at once.
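To make the idea of weaving two kinds of evidence together more concrete, here is a minimal sketch in Python. It is not the team's network: the layer sizes, the random untrained weights, and the names `classify`, `morph`, and `sed` are all assumptions made for illustration. It only shows the general pattern of giving each input its own branch and letting a final layer see both at once.

```python
import math
import random

CLASSES = ("star", "quasar", "galaxy")

def dense(inputs, weights, biases):
    """Fully connected layer with ReLU; weights holds one row per output unit."""
    return [max(0.0, sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def random_layer(rng, n_out, n_in):
    """Hypothetical untrained layer: small random weights, zero biases."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def classify(morph, sed, params):
    """Run each input through its own branch, then fuse by concatenation."""
    morph_hidden = dense(morph, *params["morph"])  # shape-related features
    sed_hidden = dense(sed, *params["sed"])        # brightness in several bands
    fused = morph_hidden + sed_hidden              # list concatenation
    weights, biases = params["head"]
    logits = [sum(w * x for w, x in zip(row, fused)) + b
              for row, b in zip(weights, biases)]
    return dict(zip(CLASSES, softmax(logits)))

rng = random.Random(0)
params = {
    "morph": random_layer(rng, 4, 3),  # 3 assumed morphology features
    "sed": random_layer(rng, 4, 5),    # 5 assumed photometric bands
    "head": random_layer(rng, 3, 8),   # 8 fused features, 3 classes
}
probs = classify(morph=[0.9, 0.1, 1.2], sed=[0.3, 0.5, 0.2, 0.1, 0.4], params=params)
```

Because the weights are random, the probabilities in `probs` mean nothing astronomically. The point is the structure: neither branch decides alone, and the final layer weighs both sets of features together.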

It was trained using spectroscopically confirmed data from the Sloan Digital Sky Survey (SDSS) Data Release 17, a goldmine of well-labeled cosmic examples. The AI was shown hundreds of thousands of examples of stars, galaxies, and quasars, learning the subtle differences in their light profiles, structures, and colors.

Armed with that knowledge, the model was unleashed on a far larger task: analyzing over 27 million sources from the fifth data release of the Kilo-Degree Survey (KiDS), focusing on all objects brighter than magnitude r = 23—far deeper than the naked eye could ever see. Across 1,350 square degrees of sky, the model classified objects with a clarity that would be impossible for any human or even a conventional algorithm.
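The phrase "brighter than magnitude r = 23" can be counterintuitive, because astronomical magnitudes run backwards: a larger number means a fainter object, and every 5 magnitudes corresponds to a factor of 100 in brightness. The short Python sketch below applies such a cut to a few made-up sources and estimates how faint the limit is compared with the naked eye. The naked-eye limit of about magnitude 6, the inclusive cut at exactly 23, the toy catalog, and the field name `r_mag` are assumptions for illustration, not values from the study.

```python
NAKED_EYE_LIMIT = 6.0  # assumed rough limit under a dark sky
KIDS_R_LIMIT = 23.0    # r-band limit quoted in the article

def flux_ratio(bright_mag, faint_mag):
    """How many times fainter the faint_mag source is than the bright_mag one."""
    return 10 ** (0.4 * (faint_mag - bright_mag))

def within_limit(sources, limit=KIDS_R_LIMIT):
    """Keep sources at or brighter than the limit (smaller magnitude = brighter)."""
    return [s for s in sources if s["r_mag"] <= limit]

# Invented example sources, not real catalog entries.
toy_sources = [
    {"name": "A", "r_mag": 18.2},
    {"name": "B", "r_mag": 22.9},
    {"name": "C", "r_mag": 23.4},  # too faint, dropped by the cut
]

kept = within_limit(toy_sources)
times_fainter = flux_ratio(NAKED_EYE_LIMIT, KIDS_R_LIMIT)
```

Under these assumptions, `times_fainter` comes out at roughly six million, which gives a sense of how far below naked-eye visibility the faintest classified sources sit.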

The Sky Speaks Clearly When AI Listens Closely

Testing the model’s performance wasn’t just about accuracy—it was about trust. After all, a misclassified star or galaxy isn’t just an error; it could distort our entire understanding of the cosmic web.

So the team tested their AI with datasets known for their reliability. When applied to 3.4 million sources from the Gaia space observatory that had high proper motion or parallax—hallmarks of nearby stars—the AI correctly identified 99.7% of them as stars. It was, in essence, able to “see” what Gaia, using completely different methods, already knew.

In another comparison using the Galaxy And Mass Assembly (GAMA) survey, the AI was just as accurate, correctly identifying 99.7% of galaxies and quasars. These results weren’t just good—they were extraordinary.
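Both checks boil down to the same arithmetic: take a set of objects whose nature is already known from an independent survey, and count how often the model's label agrees. A minimal Python sketch of that calculation follows. The function name `agreement_rate` and the toy label lists are hypothetical; the numbers are invented so the arithmetic is easy to follow and are not the study's data.

```python
def agreement_rate(predicted_labels, accepted_labels):
    """Fraction of predictions that fall in the set of accepted labels."""
    if not predicted_labels:
        raise ValueError("need at least one prediction")
    hits = sum(1 for label in predicted_labels if label in accepted_labels)
    return hits / len(predicted_labels)

# Toy stand-in for reference stars: 1,000 objects known to be stars,
# with the model's (invented) predictions for each.
star_sample_predictions = ["star"] * 997 + ["galaxy"] * 2 + ["quasar"]
star_rate = agreement_rate(star_sample_predictions, {"star"})

# Toy stand-in for an extragalactic reference sample: either label counts as correct.
extragalactic_predictions = ["galaxy"] * 950 + ["quasar"] * 47 + ["star"] * 3
extragalactic_rate = agreement_rate(extragalactic_predictions, {"galaxy", "quasar"})
```

A rate like this measures agreement with the reference catalog, so it is only as trustworthy as that catalog, which is why the team chose comparison samples selected by very different methods.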

What’s more, the model didn’t just match existing catalogs; it improved them. Random checks showed that many objects misclassified in SDSS—particularly galaxies mistaken for stars—were accurately reclassified by the neural network. It’s a subtle but critical correction. In cosmology, the difference between a star and a galaxy is a bit like the difference between a pebble and a mountain.

From Static Maps to Living Atlases

This isn’t just a leap in efficiency. It’s a philosophical shift in how we explore the sky.

Traditional astronomical surveys, for all their brilliance, create static maps—snapshots frozen in time, reliant on painstaking human validation. With AI-based methods like this one, those maps become living atlases—responsive, updatable, and scalable. They allow for faster discovery, more nuanced population studies, and the capacity to handle the coming tidal wave of data from next-generation observatories like the Vera C. Rubin Observatory’s Legacy Survey of Space and Time (LSST), which will catalog tens of billions of objects.

With neural networks that can learn and adapt, we can move beyond raw detection to deep understanding. We can probe galaxy evolution across cosmic time, discover rare classes of quasars, or find subtle anomalies that hint at unknown physics.

The Cosmos, Reimagined Through Machines

There is something profoundly human about building machines to help us see more clearly. The sky has always been our mirror, our map, our mythology. From the early days of navigating by starlight to today’s immense digital sky surveys, we’ve sought not only to chart the heavens, but to know them.

This neural network does not replace the astronomer’s eye—it augments it. It doesn’t remove the wonder of discovery; it magnifies it. By freeing scientists from the grinding task of manual classification, it allows more time for insight, theory, and exploration. And in doing so, it brings the universe closer.

The lead scientists—visionaries in both astrophysics and machine learning—have not just developed a tool. They’ve opened a new window, letting light flood in from millions of previously misunderstood or misclassified points in the sky. Behind each is a story—of a star being born, of a galaxy colliding, of a black hole feasting in the deep past.

We now have a sharper lens with which to read these stories.

The Future of Skywatching is Intelligent

The beauty of this development is its foundation for what comes next. As AI models become more refined, as training datasets grow richer, and as telescopes grow more powerful, this union between machine and cosmos will only deepen.

The same approach could be expanded to study variable stars, gravitational lenses, or transient events like supernovae. It could be tuned to recognize exotic or rare objects we don’t even fully understand yet—fast radio bursts, rogue planets, or candidates for dark matter signals. In time, AI may not just classify the cosmos but help redefine it.

In an age of data too vast for human minds to grasp alone, artificial intelligence becomes our co-pilot. It’s not the cold replacement of astronomers, but their amplifier. It brings structure to chaos, order to confusion. And in doing so, it allows the wonder of the night sky to be not only seen—but understood.

The stars have always been there. Now, at last, we can hear what they’ve been trying to tell us.

Reference: Hai-Cheng Feng et al., "Morpho-photometric Classification of KiDS DR5 Sources Based on Neural Networks: A Comprehensive Star–Quasar–Galaxy Catalog," The Astrophysical Journal Supplement Series (2025). DOI: 10.3847/1538-4365/adde5a