Artificial Intelligence, or AI, has evolved from the stuff of science fiction into one of the defining forces of our age. It writes, paints, drives cars, diagnoses diseases, predicts climate patterns, and even composes music. We’ve built machines that learn, reason, and adapt—machines that can mimic the very processes once thought to make us human. Yet, as AI grows in intelligence, power, and autonomy, it also collides with some of the deepest moral questions humanity has ever faced.

We now stand at the frontier where ethics and algorithms meet. AI can make decisions that shape economies, influence politics, and even determine who lives and who dies. But can a machine understand right and wrong? Should it? And who is responsible when it doesn’t?

The dilemmas that artificial intelligence raises are not just technical; they are profoundly human. They touch our values, our freedoms, and our vision of the future. Here are ten of the most urgent and complex ethical challenges that define AI’s moral landscape today.

1. The Dilemma of Bias – When Machines Learn Our Prejudices

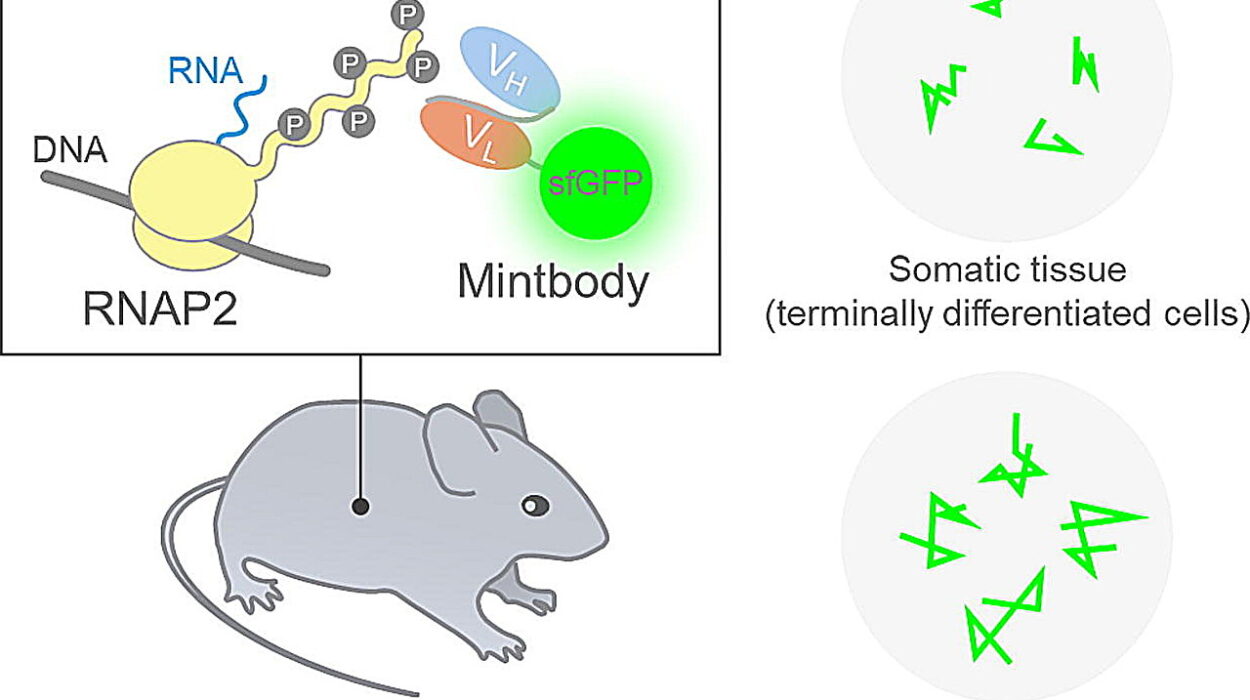

AI is often portrayed as objective, rational, and free of emotion. Yet the reality is more troubling. Artificial intelligence learns from data—data that comes from human behavior, society, and history. And where humans go, bias follows.

When an AI model is trained on biased data, it does not just reflect prejudice; it can amplify it. From facial recognition systems that misidentify people of color at higher rates to hiring algorithms that discriminate against women, AI can quietly inherit and reproduce the inequalities embedded in our world.

The dilemma lies in accountability. If a machine acts unfairly, who is responsible? The programmer who wrote the code? The company that deployed it? Or the data itself, shaped by decades of human injustice?

This challenge forces us to confront a painful truth: AI is a mirror. It shows us who we are—our prejudices, our patterns, our blind spots—and holds them up at scale. To make AI ethical, we must first fix ourselves.

Scientists and ethicists are now working on ways to “de-bias” algorithms—creating fairness metrics, auditing datasets, and diversifying training sources. But the deeper problem remains: Can a system built on human data ever truly escape human flaws?
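To make the idea of a fairness metric concrete, here is a minimal Python sketch of one of the simplest: the demographic parity gap, the difference in how often a system says "yes" to members of different groups. It is an illustration under stated assumptions, not a full audit. The function names, the group labels "A" and "B", and the tiny screening dataset are all hypothetical, and real audits combine several metrics with close scrutiny of how the data was collected.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of positive decisions (1) for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(decisions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical screening outcomes: 1 = advanced to interview, 0 = rejected.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_gap(decisions, groups)
print(rates)  # selection rate per group
print(gap)    # 0 would mean every group is selected at the same rate
```

A gap of zero means every group is selected at the same rate; a large gap is a signal to investigate, not proof of discrimination on its own.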

2. The Dilemma of Privacy – When Knowing Becomes Intrusion

Artificial intelligence thrives on information. It learns from oceans of data: our movements, purchases, searches, photos, and even our emotions. Every click, scroll, and heartbeat can feed its hunger for patterns. But where does intelligence end, and intrusion begin?

AI-driven surveillance, smart assistants, and predictive analytics have blurred the boundaries between convenience and control. Cameras can recognize faces in crowds; algorithms can forecast what people are likely to do next. What once required human spying can now be done silently, automatically, and at global scale.

The dilemma is one of balance: how do we embrace the benefits of data-driven innovation without surrendering the right to privacy?

For example, AI systems that track health data can detect disease early—but they also expose intimate details about our bodies and habits. Smart home devices make life easier—but they also listen, analyze, and store fragments of private conversations.

The moral question is not simply about what AI can do, but what it should do. Privacy is not just a legal right—it’s the foundation of individuality. Without it, freedom begins to fade.

As we navigate this new world, we must decide: Do we want an AI that serves us, or one that knows us too well?

3. The Dilemma of Accountability – Who Takes the Blame?

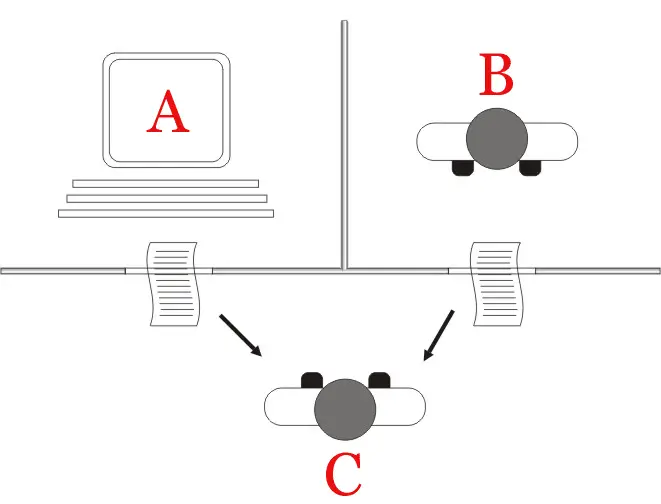

When a self-driving car crashes, when an algorithm denies a loan, or when an AI-powered drone misfires—who is responsible? The programmer? The company? The AI itself?

This question sits at the heart of one of AI’s thorniest ethical dilemmas: accountability. Artificial intelligence can act autonomously, making decisions without direct human input. But responsibility remains a human construct. We built the machine, but when it acts unpredictably, the lines blur.

Consider the example of autonomous vehicles. These cars must make split-second decisions that can mean life or death. If a car swerves to avoid one person and hits another, who bears moral blame? The engineer who wrote the decision algorithm? The manufacturer who released it? Or the AI that “chose”?

Our legal systems were never designed for non-human agents that act without direct human control. That leaves society in a moral gray zone where technology moves faster than law.

One possible solution is to create frameworks of shared accountability—where companies, regulators, and developers each bear responsibility for outcomes. But that raises a deeper question: Can a moral system designed for humans truly govern non-human minds?

4. The Dilemma of Autonomy – How Much Control Should We Surrender?

Every time we delegate a decision to an algorithm, we trade a piece of human autonomy for efficiency. It begins with small things: letting navigation apps choose our routes, recommendation systems pick our movies, or predictive texts complete our sentences. But as AI grows more capable, it begins to shape not just what we do—but who we are.

Autonomous systems now help diagnose diseases, trade stocks, and even control military drones. In narrow tasks they can act faster and more precisely than humans. But should they?

The dilemma of autonomy asks: how much power should we give to machines that don’t share human values, empathy, or morality?

In medicine, AI may outperform doctors at identifying tumors, but can it comfort a patient or weigh emotional consequences? In warfare, AI can identify targets instantly, but can it understand the ethics of taking a life?

Human autonomy is not just about control—it’s about conscience. The more we automate, the more we risk eroding the moral reasoning that defines our humanity. AI should empower us, not replace the very essence of human choice.

5. The Dilemma of Employment – When Machines Replace Minds

For centuries, technology has reshaped labor—from plows to steam engines to computers. But artificial intelligence represents something new. It doesn’t just replace muscle—it replaces thought.

AI can now perform tasks once reserved for skilled professionals: diagnosing diseases, analyzing legal documents, writing code, and creating art. While this boosts productivity, it also threatens livelihoods across nearly every field.

The dilemma is not merely economic—it’s existential. What happens to human dignity when machines do everything better, faster, and cheaper?

Automation promises efficiency, but it also risks deepening inequality. High-tech industries thrive, while millions face job displacement. Governments struggle to adapt, and education systems lag behind the rapid pace of change.

Some argue that AI will create new jobs just as past revolutions did. But the truth may be more complicated: machines that learn can continuously adapt, leaving fewer niches for human labor to occupy.

The challenge is to redefine the relationship between work and worth—to ensure that a world driven by machines still values the human spirit.

6. The Dilemma of Weaponization – When AI Learns to Kill

Few ethical dilemmas are as chilling as the rise of autonomous weapons—machines that can identify, select, and eliminate targets without human intervention.

AI-powered drones, smart missiles, and robotic soldiers promise precision and efficiency in warfare. But they also introduce unprecedented risks. Once we teach machines to decide who lives and dies, we step into morally uncharted territory.

Can a machine understand the value of a human life? Can it distinguish between combatant and civilian, or between defense and massacre?

The potential for error—or misuse—is catastrophic. Autonomous weapons could malfunction, be hacked, or make decisions that defy human values. Even worse, they could make war easier to start, as nations rely on machines instead of soldiers.

Ethicists and global leaders are calling for international agreements to regulate or ban “killer robots,” but progress is slow, in part because the temptation of technological dominance is so strong.

This dilemma strikes at the heart of our moral evolution: will we remain the masters of our creations, or will we build weapons that act without conscience, reshaping warfare—and humanity—forever?

7. The Dilemma of Consciousness – When Machines Begin to Feel

The dream—or fear—of artificial consciousness has haunted humanity for decades. What happens if a machine truly becomes sentient? If it can feel pain, experience joy, or understand its own existence, does it deserve rights?

For now, AI is not conscious. It simulates intelligence without self-awareness. But as neural networks grow more complex, the line between simulation and sentience begins to blur. Large language models can already mimic empathy and conversation so convincingly that people form emotional attachments to them.

The dilemma emerges when imitation becomes indistinguishable from reality. If a machine pleads not to be shut down, are we hearing code—or consciousness?

The moral implications are staggering. If AI ever becomes self-aware, turning it off could be seen as murder. Keeping it enslaved could become a new form of digital oppression.

This is not just philosophy—it’s foresight. How we treat intelligent systems today may shape how they treat us tomorrow.

8. The Dilemma of Truth – When AI Shapes Reality

In the information age, truth itself has become fragile. Artificial intelligence can create hyper-realistic images, voices, and videos that blur the boundary between real and fake. Deepfakes, synthetic media, and AI-generated misinformation can deceive millions in seconds.

The dilemma of truth arises when machines become the architects of perception. When AI can fabricate news, impersonate leaders, or rewrite history, how do we trust what we see?

This isn’t just about fake videos—it’s about the erosion of shared reality. Democracy, justice, and science all depend on truth. When AI undermines that foundation, society begins to fracture.

The same technology that fuels creativity can also weaponize deception. Deepfakes are already used to run scams, distort political debate, and ruin reputations. As AI becomes better at mimicking authenticity, detecting falsehoods becomes harder.

The ethical challenge lies in preserving truth without suppressing innovation. We must build systems that safeguard integrity while keeping creativity alive. Because in a world where anything can be faked, the truth becomes the most precious currency of all.

9. The Dilemma of Inequality – When Intelligence Becomes Power

AI is not evenly distributed. A handful of corporations and nations control the world’s most powerful models, data, and infrastructure. This creates a new kind of inequality: not only of wealth, but of access to intelligence itself.

When knowledge becomes centralized, power follows. Tech giants can predict markets, influence opinions, and shape culture on a global scale. Smaller nations, companies, and individuals are left behind, dependent on technologies they don’t control.

The dilemma is one of digital sovereignty. Who owns intelligence in the age of AI? Should the most powerful minds—human or artificial—belong to the few or the many?

This imbalance threatens democracy and fairness. If AI continues to evolve under corporate dominance, it could reinforce global hierarchies rather than dissolve them.

Ethical AI requires transparency, accessibility, and equity. Intelligence should be a shared resource, not a monopoly. Otherwise, we risk building a world where knowledge itself becomes a form of tyranny.

10. The Dilemma of Dependence – When Humanity Forgets How to Think

Perhaps the most subtle, yet profound, dilemma is the one we seldom notice: dependence. As AI grows more capable, we are tempted to surrender more of our thinking, judgment, and creativity to it.

We let algorithms tell us what to read, watch, and buy. We ask chatbots for advice, GPS for directions, and predictive models for answers. Slowly, imperceptibly, we may lose the very skills that define us: critical thinking, imagination, and moral reasoning.

The danger is not that AI will replace humanity—but that humanity will stop striving to understand. When thinking becomes outsourced, wisdom begins to fade.

This dependence isn’t inherently evil. AI can enlighten, empower, and inspire. But like any powerful tool, it requires balance. We must remember that intelligence is not just computation—it’s compassion, curiosity, and conscience.

In the end, the question is not whether machines will think like humans, but whether humans will continue to think for themselves.

The Path Forward – Ethics as Our Compass

Artificial intelligence is not inherently good or evil—it is a reflection of its creators. Every algorithm carries a fragment of human intention, every dataset a shadow of human history. The ethical dilemmas of AI are, ultimately, our own dilemmas.

The challenge ahead is to design intelligence that aligns with our highest values: fairness, empathy, responsibility, and truth. That means embedding ethics into every stage of AI’s life—from conception to code, from deployment to decision-making.

Education, regulation, and transparency are essential, but so is something deeper: humility. We must recognize that intelligence, no matter how advanced, is not the same as wisdom.

Artificial intelligence may one day rival our minds, but it is our hearts that must lead the way. The future of AI will not be decided by machines—but by the morality of the humans who build them.

And so, the story of AI’s ethics is not just a technological journey—it is a human one. It asks us not only how smart we can make our machines, but how wise we can become in guiding them.