In 2014, a group of Google researchers fed a photo of a panda into GoogLeNet, an advanced image recognition system built by the company’s deep learning team. The AI correctly labeled it as a panda, with 57.7% confidence. So far, so good. Then the scientists added a barely perceptible layer of digital “noise” to the image, a carefully calculated pattern invisible to the human eye. The altered image still looked like a panda to anyone who saw it. But when fed to the AI again, the machine declared with 99.3% certainty that it was a gibbon.

No human on Earth would confuse a panda with a gibbon. And yet, to the AI, the panda had seemingly vanished. It was one of many early warnings: machines, for all their prowess, sometimes see the world in profoundly alien ways. They can be astonishingly good at recognizing patterns, but also terrifyingly brittle—capable of mistakes so bizarre that they border on the absurd.

This peculiar vulnerability is just one example of a deeper truth: artificial intelligence can be brilliant, but it is not human. It learns differently, reasons differently, and sometimes stumbles in ways that reveal the limits of silicon minds.

A Different Kind of Brain

The first thing to understand is that AI doesn’t “think” like we do. Human brains are sculpted by millions of years of evolution. We are built to interpret a messy, uncertain world—sorting out faces in shadows, inferring emotions from tone of voice, navigating social nuance. We rely on vast networks of neurons connected through experience, association, and an intuitive grasp of how the world works.

Machines, by contrast, learn by adjusting mathematical weights inside layers of artificial neurons. These networks can indeed become incredibly skilled at tasks like recognizing cats in photos or translating languages. But they lack the accumulated commonsense knowledge that we humans take for granted. A toddler might understand that a cat doesn’t turn into a dog because you’ve changed the lighting or added some noise. A deep neural network may not.

When an AI “sees” a picture, it receives a torrent of numbers, the intensity of every pixel, and transforms them layer by layer into statistics about edges, textures, and shapes. It’s exquisitely sensitive to these patterns. That’s why even tiny changes to the data, too subtle for us to notice, can provoke a wild misclassification. Adversarial examples like the panda-turned-gibbon are crafted precisely to exploit these mathematical blind spots.

The Geometry of the Impossible

If you could step into the mind of an AI, you’d find yourself floating in a realm of incomprehensibly high dimensions. A typical deep learning model might analyze images in spaces with thousands, or even millions, of dimensions: a modest 224-by-224-pixel color photo is already 150,528 numbers, one per pixel for each of three color channels. Humans, meanwhile, evolved to think in three-dimensional space, with a sprinkling of time and cause-and-effect thrown in.

Imagine trying to navigate a city where streets can bend into the fourth or fifth dimension, intersecting themselves in ways our senses cannot picture. That’s roughly the landscape an AI works in. In high dimensions, data behaves in ways our intuitions do not expect, and tiny changes add up: nudge each of hundreds of thousands of pixels by an amount too small to see, and their combined effect on the model’s output can be large. A small, strategically chosen nudge can shove one object’s representation over an invisible border into another category.

This is why AI can be tricked so easily. For humans, perception is guided by physical intuition and real-world context. For a neural network, it’s all geometry in a realm we can’t visualize. In this alien landscape, a panda may lie only a hair’s breadth from a gibbon.
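The researchers behind the panda example argued that this fragility comes largely from models behaving almost linearly in very high dimensions, and they produced the gibbon image with a one-step “fast gradient sign” attack. The sketch below illustrates that argument under deliberately simplified assumptions: a hypothetical linear “panda score” with random weights stands in for a deep network, a random array stands in for the photo, and the bias is chosen so the clean image always scores +3. Each pixel moves by at most `eps = 0.007`, yet the total drop in score equals `eps` times the sum of the absolute weights, so it grows with the number of inputs.

```python
import random

def sign(v):
    return (v > 0) - (v < 0)

def score(x, w, b):
    """Hypothetical linear 'panda score': positive means panda."""
    return sum(xi * wi for xi, wi in zip(x, w)) + b

def fgsm_step(x, w, eps):
    """One fast-gradient-sign step that pushes the linear score down.

    For s(x) = w.x + b the gradient with respect to x is simply w, so moving
    every pixel by -eps * sign(w_i) lowers the score as much as possible while
    changing no pixel by more than eps. Results are clipped to [0, 1].
    """
    return [min(1.0, max(0.0, xi - eps * sign(wi))) for xi, wi in zip(x, w)]

def demo(num_inputs, eps=0.007, clean_score=3.0, seed=0):
    rng = random.Random(seed)
    w = [rng.gauss(0.0, 0.01) for _ in range(num_inputs)]        # hypothetical weights
    x = [0.05 + 0.9 * rng.random() for _ in range(num_inputs)]   # hypothetical image
    # Choose the bias so the clean image scores exactly `clean_score`
    # ("confidently panda") at every size; only the input count changes.
    b = clean_score - score(x, w, 0.0)
    x_adv = fgsm_step(x, w, eps)
    predicted_drop = eps * sum(abs(wi) for wi in w)   # eps times the L1 norm of w
    return score(x, w, b), score(x_adv, w, b), predicted_drop

for n in (10 * 10 * 3, 224 * 224 * 3):
    clean, adv, drop = demo(n)
    print(f"{n:>6} inputs: clean {clean:+.2f}, perturbed {adv:+.2f}, drop {drop:.2f}")
```

Under these assumptions the 300-input version barely moves (a drop of roughly 0.02), while the 150,528-input version drops by roughly 8 and flips from “panda” to “not panda.” Real attacks on deep networks obtain the gradient by backpropagation rather than reading it off a weight vector.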

Learning Without Understanding

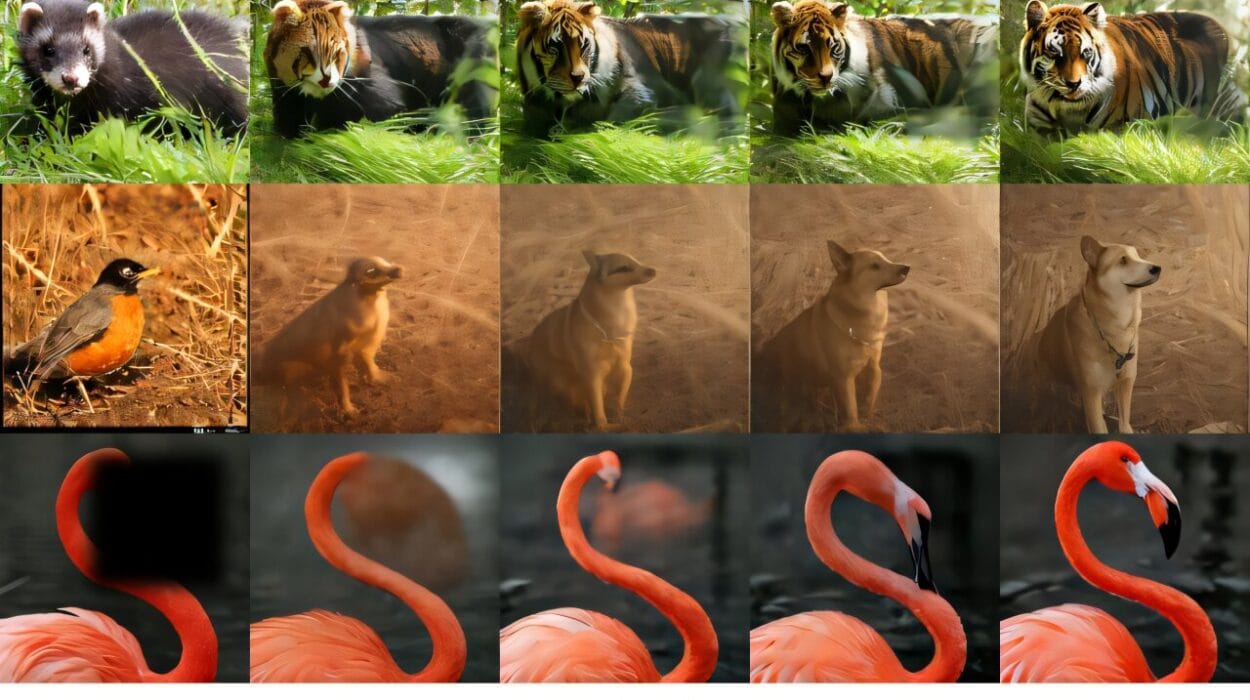

Another reason AI makes mistakes no human would is that it often learns without truly understanding. Machine learning systems excel at finding statistical patterns in mountains of data. Give them a million labeled photos of cats and dogs, and they will learn astonishingly good rules for telling them apart.

But these rules are statistical shortcuts, not deep comprehension. A child who’s seen only a few cats can recognize a new one because they understand the essential idea of “cat-ness”—pointy ears, whiskers, certain movements, meowing sounds. They know cats are animals, they scratch furniture, they chase mice. That conceptual knowledge makes human recognition robust.

AI systems, in contrast, might learn that cats tend to appear with certain background textures, or with particular color distributions in photos. If those spurious correlations disappear, the machine falters. Change the lighting, alter the background, or rotate the object, and the AI’s fragile “understanding” can collapse.

One famous example involved wolves and huskies. In a 2016 study, researchers deliberately trained an image classifier on photos in which the wolves stood in snow and the huskies did not, to test whether their explanation technique could expose the flaw. The explanations revealed that the model was relying not on the animals but on the snowy backgrounds. When shown a husky in snow, it labeled it a wolf.

No human would make such a mistake, because we know what wolves and huskies are. Machines do not know. They merely calculate.

The Tyranny of Correlation

In machine learning, the fundamental law is this: correlation is king. An AI has no inherent knowledge about what is causally important—it simply detects what patterns reliably predict the desired outcome in the training data.

This creates problems when the world changes. Imagine an AI system trained to diagnose pneumonia from chest X-rays. If, in the training hospital, most pneumonia patients happen to be photographed with portable X-ray machines (producing subtly different images), the AI may mistakenly learn that the presence of certain image artifacts is a strong signal for disease. Deployed in another hospital where imaging practices differ, its performance collapses.
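A deliberately tiny simulation shows how this can happen. It is a sketch, not a model of any real X-ray system: each hypothetical “patient” has just two features, a genuine but noisy disease signal and a scanner artifact, and a plain logistic-regression classifier is trained by gradient descent. In the training hospital the artifact tracks the diagnosis perfectly; in the deployment hospital it never appears.

```python
import math

def make_patients(n, artifact_tracks_label):
    """Hypothetical toy data: each row is (signal, artifact) with values +1/-1.

    signal:   a genuine but noisy cue that agrees with the diagnosis 80% of the time.
    artifact: a scanner quirk. In the training hospital it matches the diagnosis
              exactly; in the deployment hospital it is absent (-1) for everyone.
    """
    rows, labels = [], []
    for i in range(n):
        y = i % 2                              # alternate healthy (0) / pneumonia (1)
        s = 1 if y == 1 else -1                # the label as +1/-1
        signal = -s if i % 5 == 0 else s       # wrong for every fifth patient
        artifact = s if artifact_tracks_label else -1
        rows.append((signal, artifact))
        labels.append(y)
    return rows, labels

def train_logistic(rows, labels, lr=0.5, epochs=500):
    """Full-batch gradient descent on the average logistic (cross-entropy) loss."""
    w = [0.0, 0.0]
    b = 0.0
    n = len(rows)
    for _ in range(epochs):
        gw = [0.0, 0.0]
        gb = 0.0
        for (x1, x2), y in zip(rows, labels):
            p = 1.0 / (1.0 + math.exp(-(w[0] * x1 + w[1] * x2 + b)))
            err = p - y                        # gradient of the loss w.r.t. the score
            gw[0] += err * x1
            gw[1] += err * x2
            gb += err
        w = [w[0] - lr * gw[0] / n, w[1] - lr * gw[1] / n]
        b -= lr * gb / n
    return w, b

def accuracy(w, b, rows, labels):
    preds = [1 if w[0] * x1 + w[1] * x2 + b > 0 else 0 for x1, x2 in rows]
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)

train_x, train_y = make_patients(100, artifact_tracks_label=True)
deploy_x, deploy_y = make_patients(100, artifact_tracks_label=False)
w, b = train_logistic(train_x, train_y)

# For comparison: a rule that looks only at the genuine signal.
signal_only = sum((sig > 0) == (y == 1) for (sig, _), y in zip(deploy_x, deploy_y)) / len(deploy_y)

print("weights (signal, artifact):", [round(v, 2) for v in w])
print("training accuracy:", accuracy(w, b, train_x, train_y))      # 1.0
print("deployment accuracy:", accuracy(w, b, deploy_x, deploy_y))  # 0.5
print("signal-only rule, deployment:", signal_only)                # 0.8
```

Because the artifact separates the training data perfectly while the real signal is right only 80% of the time, gradient descent ends up weighting the artifact most heavily. Training accuracy is 100%, but in the new hospital the model calls everyone healthy and scores 50%, worse than the 80% a rule using the real signal alone would achieve.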

Humans, too, rely on correlation, but we overlay it with reasoning, context, and the ability to question our own perceptions. We instinctively ask, “Why might these things be connected?” AI generally does not. It learns from statistical regularities and assumes they will always hold.

This is one reason AI sometimes makes mistakes that seem laughable. It’s not that the machine is foolish—it’s that it is doing exactly what it was designed to do: exploit correlations in data. When those correlations mislead, the results can be catastrophically wrong.

Training Data’s Hidden Traps

The phrase “garbage in, garbage out” has never been truer than in the age of machine learning. An AI system is only as good as the data it consumes. Yet real-world data is messy, incomplete, and riddled with hidden biases.

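Consider facial recognition systems. Early commercial AI models were often trained predominantly on faces of lighter-skinned individuals. When deployed in the real world, they performed far worse on people with darker skin tones, misidentifying or failing to recognize them. A 2018 audit of commercial gender-classification systems, for instance, found error rates of at most 0.8% for lighter-skinned men but up to 34.7% for darker-skinned women. The AI was not racist in any intentional sense; it was ignorant. It simply lacked enough diverse examples to learn robust patterns.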
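How a skewed training set turns into uneven errors can be seen in a deliberately simplified one-dimensional toy; it is not a model of any real face-recognition system. The data, the group names, and the shift are all hypothetical: group B makes up 5% of the training examples, and its feature values run 4 units higher than group A’s. A single cut-off is chosen to minimize total training errors.

```python
from collections import defaultdict

def make_examples():
    """Hypothetical 1-D data as (feature, label, group) triples.

    Group A: negatives at 0..4, positives at 6..10, repeated 19 times (95%).
    Group B: the same pattern shifted up by 4, included once (5%).
    """
    examples = []
    for copies, group, shift in ((19, "A", 0), (1, "B", 4)):
        for _ in range(copies):
            examples += [(x + shift, 0, group) for x in range(0, 5)]
            examples += [(x + shift, 1, group) for x in range(6, 11)]
    return examples

def fit_threshold(examples):
    """Return the cut-off t (predict 1 when feature > t) with the fewest training errors."""
    def errors(t):
        return sum((x > t) != bool(y) for x, y, _ in examples)
    candidates = [k + 0.5 for k in range(-1, 15)]
    return min(candidates, key=errors)  # min() keeps the first of any tied candidates

def accuracy_by_group(examples, t):
    hits, totals = defaultdict(int), defaultdict(int)
    for x, y, group in examples:
        totals[group] += 1
        hits[group] += (x > t) == bool(y)
    return {g: hits[g] / totals[g] for g in sorted(totals)}

data = make_examples()
t = fit_threshold(data)
overall = sum((x > t) == bool(y) for x, y, _ in data) / len(data)
print("threshold:", t)                                    # 5.5
print("overall accuracy:", overall)                       # 0.985
print("per-group accuracy:", accuracy_by_group(data, t))  # {'A': 1.0, 'B': 0.7}
```

The chosen threshold fits the majority group perfectly, so overall accuracy is 98.5%, yet group B is classified correctly only 70% of the time. Reporting accuracy separately for each group, rather than only the average, is what exposes the gap.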

Or take language models, like the ones that power chatbots and writing assistants. These systems absorb the biases of their training texts. They might replicate stereotypes, produce offensive content, or misunderstand nuanced human communication. That’s because they don’t “understand” language the way humans do. They mimic the statistical patterns of words without grasping the underlying meaning.

Even large, carefully curated datasets are not immune. Tiny statistical quirks can have outsized effects. In one real-world study, an AI medical system trained to predict health outcomes learned that patients with fewer hospital visits were “healthier,” even though some of the sickest patients visited rarely because they never made it to the hospital at all. The model confused absence of data with absence of disease.

Humans can sometimes infer missing context. AI usually cannot. If a particular category was underrepresented in the training data, the AI may handle it poorly; if it was absent entirely, the AI will remain blind to it.

Context: The Invisible Ingredient

For humans, context is everything. We automatically integrate a dizzying array of cues—emotional tone, past experiences, cultural knowledge, physical laws—to make sense of the world. A human knows that a giraffe cannot fit inside a refrigerator, not because they’ve memorized that fact, but because they understand physical sizes, shapes, and the way objects interact.

AI often lacks this broader context. Its “knowledge” is bounded entirely by the data it has seen. Tell a language model that giraffes fit comfortably into refrigerators, and it might agree without protest, because it has no embodied sense of physical reality.

This limitation becomes critical in domains like autonomous vehicles. Humans instinctively understand that a child might suddenly dart into the road after a rolling ball. They anticipate danger even when none is visible. AI must learn such inferences purely from statistical patterns in training data. If certain edge cases are rare or absent, the AI may fail catastrophically.

Similarly, humans can draw analogies across domains. A child who learns about gravity by dropping an apple can extend that knowledge to dropping a toy. AI struggles with such transfer of knowledge unless explicitly trained to do so. Each task is an island; bridges between them are often missing.

Catastrophic Forgetting

One fascinating—and troubling—property of many AI systems is that they can forget almost everything they’ve learned when trained on new tasks. In humans, new learning builds upon old knowledge. You don’t forget how to ride a bicycle just because you learned to ice skate. But in neural networks, training on new data often overwrites prior knowledge. This phenomenon is called “catastrophic forgetting.”

This is why continual learning in AI remains a grand challenge. A neural network trained to recognize cars might completely lose that ability if re-trained on a bird-only dataset. This brittleness contributes to strange failures when AI systems encounter unexpected situations.
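The mechanism can be sketched with a toy logistic-regression classifier. The features “has wheels” and “photographed outdoors” are hypothetical, and real networks share millions of weights rather than three, so treat this as an illustration only. After learning car-versus-bird, the model is retrained on birds alone; because cars and birds share the “outdoors” feature, pushing bird scores down drags the car score down with them.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(w, b, data, lr=1.0, epochs=100):
    """Full-batch gradient descent on the logistic loss, starting from (w, b).

    Each example is ((has_wheels, outdoors), label), with label 1 = car, 0 = bird.
    """
    w = list(w)
    n = len(data)
    for _ in range(epochs):
        gw = [0.0, 0.0]
        gb = 0.0
        for (x1, x2), y in data:
            err = sigmoid(w[0] * x1 + w[1] * x2 + b) - y
            gw[0] += err * x1
            gw[1] += err * x2
            gb += err
        w = [w[0] - lr * gw[0] / n, w[1] - lr * gw[1] / n]
        b -= lr * gb / n
    return w, b

def p_car(w, b, x):
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)

CAR = (1.0, 1.0)    # hypothetical features: has wheels, photographed outdoors
BIRD = (0.0, 1.0)   # no wheels, but also outdoors: the shared feature causes the trouble

both = [(CAR, 1), (BIRD, 0)]
birds_only = [(BIRD, 0)]

w, b = train([0.0, 0.0], 0.0, both, epochs=50)
print("car vs bird:        P(car | car photo) =", round(p_car(w, b, CAR), 3))

w_seq, b_seq = train(w, b, birds_only, epochs=1000)
print("then birds only:    P(car | car photo) =", round(p_car(w_seq, b_seq, CAR), 3))

w_mix, b_mix = train(w, b, both, epochs=1000)
print("then both (replay): P(car | car photo) =", round(p_car(w_mix, b_mix, CAR), 3))
```

After the bird-only phase the car’s probability falls below 0.5, even though the “wheels” weight never changed. Continuing to train on a mix of old and new examples, an idea continual-learning researchers call replay or rehearsal, keeps the car recognizable in this toy.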

Overfitting: Seeing Ghosts in Noise

Humans excel at generalizing from sparse information. A child might see only a few examples of a cat before confidently spotting one on the street. AI, however, can fall prey to overfitting—memorizing every tiny detail in its training data, rather than learning the general concept.

Imagine a student who memorizes every word in a textbook but can’t answer questions phrased slightly differently on an exam. That’s how overfit AI behaves. When confronted with new examples that differ even slightly from training images, it can fail spectacularly.
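A classic way to see overfitting is to compare a model that memorizes every training point with a simpler one that cannot. In this sketch the data are hypothetical (the true rule is y = 2x, plus ±0.5 of alternating noise), the “memorizer” is the degree-9 polynomial that passes through all ten training points, and the simple model is a least-squares straight line.

```python
def lagrange_predict(xs, ys, x):
    """Evaluate the unique polynomial through every training point (pure memorization)."""
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        term = yi
        for j, xj in enumerate(xs):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx

# Hypothetical data: the true rule is y = 2x, plus +/-0.5 measurement noise.
xs = [float(i) for i in range(10)]
ys = [2 * x + (0.5 if i % 2 else -0.5) for i, x in enumerate(xs)]

slope, intercept = fit_line(xs, ys)
memorized_train_err = max(abs(lagrange_predict(xs, ys, x) - y) for x, y in zip(xs, ys))
line_train_err = max(abs(slope * x + intercept - y) for x, y in zip(xs, ys))

x_new = 10.0  # one step beyond the training data; the true answer is 20
print("max training error, memorizer:", memorized_train_err)
print("max training error, line:     ", round(line_train_err, 3))
print("prediction at x=10, memorizer:", round(lagrange_predict(xs, ys, x_new), 1))
print("prediction at x=10, line:     ", round(slope * x_new + intercept, 2))
```

The memorizer has zero training error, while the line misses the training points by up to about 0.6. Yet at x = 10, just one step past the training data, the memorizer predicts about 531.5 and the line about 20.2, close to the true value of 20.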

A neural network trained on images of dogs photographed outdoors might fail to recognize a dog photographed in a living room. Or it might identify random textures—like stripes on a shirt—as decisive evidence for an entirely different category. To the AI, the stripes might resemble patterns associated with a zebra in its training set.

Overfitting is one reason AI can produce absurd errors. The machine is not interpreting the world—it’s recalling a memory. And memories are fragile when the world changes.

The Challenge of Explaining Itself

Humans are remarkably good at explaining their decisions. Even if we’re sometimes wrong, we can tell you why we believed what we did. AI struggles mightily with this. Deep neural networks are often described as “black boxes”—they produce results without revealing how or why.

This opaqueness creates a crucial problem: when AI makes a bizarre mistake, it’s often impossible to trace the reasoning step by step. Why did the model label a 3D-printed turtle as a rifle? Why did it decide a stop sign was a speed limit sign after a few stickers were placed on it? We can sometimes probe the network’s internal activations, but the connections between neurons are so complex that even experts can’t fully decipher them.

This lack of explainability makes it difficult to trust AI in high-stakes domains like healthcare, finance, or autonomous vehicles. When a human doctor makes an unusual diagnosis, they can justify it. When an AI makes the same call, we may be left in the dark.

When Precision Becomes Fragility

AI’s precision is its gift—and its curse. Unlike humans, who tolerate ambiguity, machines are ruthlessly literal. They can distinguish subtle pixel-level differences invisible to human eyes. This allows them to surpass human accuracy in some tasks, like reading medical images or detecting anomalies in massive data streams.

But that same sensitivity makes them fragile. Change the input by a hair’s breadth, and the AI might flip its prediction. A photo of a dog rotated by 45 degrees might become unrecognizable to the system. Noise in the data, or a novel context, can turn a high-confidence prediction into a spectacular blunder.

Humans, by contrast, are astonishingly robust. We can recognize friends in disguise, decipher conversations in noisy rooms, or read messy handwriting. Machines are still learning such resilience.

The Mirror of Ourselves

It’s tempting to scoff at AI’s mistakes. How could a supercomputer mistake a panda for a gibbon? But these failures remind us of something profound: artificial intelligence is not alien intelligence. It is human intelligence reflected through data and algorithms. Its flaws are, in part, reflections of our own—magnified and made visible.

When AI stumbles, it often exposes blind spots in our own assumptions. We believe we understand concepts like fairness, context, and causation. But encoding those human intuitions into mathematics is staggeringly difficult.

AI forces us to confront the difference between knowing and calculating. Between wisdom and mere correlation. It lays bare how much of our knowledge is tacit, embodied, and shaped by millions of years of living in a complex world.

Toward a More Human-Like AI

Researchers are racing to address these problems. They are developing AI systems that incorporate symbolic reasoning, allowing machines to manipulate abstract concepts rather than relying purely on statistical patterns. Others are developing “explainable AI” techniques to make machine decisions more transparent.

There’s growing interest in multimodal learning, where AI combines text, images, sound, and even physical sensor data, aiming for a richer understanding of context. Hybrid systems blend deep learning with rule-based reasoning, creating machines that are both flexible and grounded in logic.

Yet a true human-like intelligence remains elusive. Our minds are not simply statistical engines—they’re storytelling machines. We weave narratives, imagine alternate futures, and empathize with others. We fill in gaps in knowledge with intuition. We dream.

AI is not yet dreaming.

Embracing Imperfection

One day, AI may achieve a level of understanding that rivals human cognition. But even then, it may never be truly human. Its wiring, its experiences, its path to knowledge will always be different. And that difference is both limitation and possibility.

We should not fear AI’s mistakes as mere stupidity. They are signals pointing to the edge of machine learning’s map. They remind us that intelligence has many forms—and that our own is not the only possible way to know the world.

AI sometimes makes mistakes no human would because it is not human. It has no childhood memories, no physical body, no lifelong exposure to the gritty texture of reality. It cannot smell rain on dry pavement or sense the weight of silence in a crowded room. It knows math, but not meaning.

Yet its alien way of seeing opens doors we could never unlock alone. AI has discovered new drug molecules, spotted astronomical phenomena invisible to our eyes, translated ancient languages, and even helped design futuristic aircraft wings. Its mistakes are the price we pay for a partner that can think differently.

The Panda and the Gibbon

So the next time you hear that an AI confused a panda with a gibbon, remember: the machine is not broken. It is revealing a deeper truth about how it “sees.” The AI’s vision is a hall of mirrors—a geometry of numbers that sometimes aligns with human reality, and sometimes veers into a world only machines can perceive.

This duality is the essence of artificial intelligence. It is a tool of staggering power, and a reminder of our own unique spark. Its mistakes, however absurd, are signposts on the road toward deeper understanding—of both the machines we build, and the minds we use to build them.

In the shimmering patterns of neural networks, we glimpse both our genius and our hubris. We see the outline of a future where human and machine collaborate—not as mirrors of one another, but as different kinds of minds sharing the same stars.