Imagine a world where some of the limits of classical computing dissolve, where machines no longer represent information only as definite 0s and 1s but work with superpositions of many possibilities at once. That world is no longer confined to the imagination of science fiction authors or the theoretical musings of physicists. It is unfolding in research labs, startups, and tech giants across the globe. Welcome to the frontier of quantum computing, an intersection of mind-bending physics and revolutionary computational power.

To understand what a quantum computer is, we must embark on a journey into the heart of quantum mechanics, the science of the very small, where particles defy everyday intuition, exist in combinations of states at once, and remain correlated with each other across vast distances. From this strange, counterintuitive world arises a new kind of computer, one that holds the promise of solving problems that would take any classical computer impractically long. But how does it work? Why is it so powerful? And what does it mean for the future of technology, science, and humanity?

Let’s dive in.

Classical Computers: The Baseline

To appreciate the revolution, we first need to understand the status quo. Classical computers (the laptops, smartphones, and servers we use daily) operate using bits. A bit is the smallest unit of data, and it can have one of two values: 0 or 1. Everything we do on a classical computer, from typing an email to streaming a movie, boils down to manipulating billions of these bits by following step-by-step procedures called algorithms.

Under the hood, bits are implemented using physical switches, usually transistors, that can be either on or off, corresponding to the binary values. With millions to billions of transistors packed into modern processors, classical computers can perform astonishing feats. Yet even the most powerful supercomputers have their limits. Certain problems, like simulating complex molecules or factoring very large numbers into their prime factors, grow in difficulty so quickly that even the fastest machines become impractically slow.

That’s where quantum computers come in—not as replacements for classical computers, but as tools designed to tackle a completely different class of problems.

Into the Quantum Realm: Superposition and Qubits

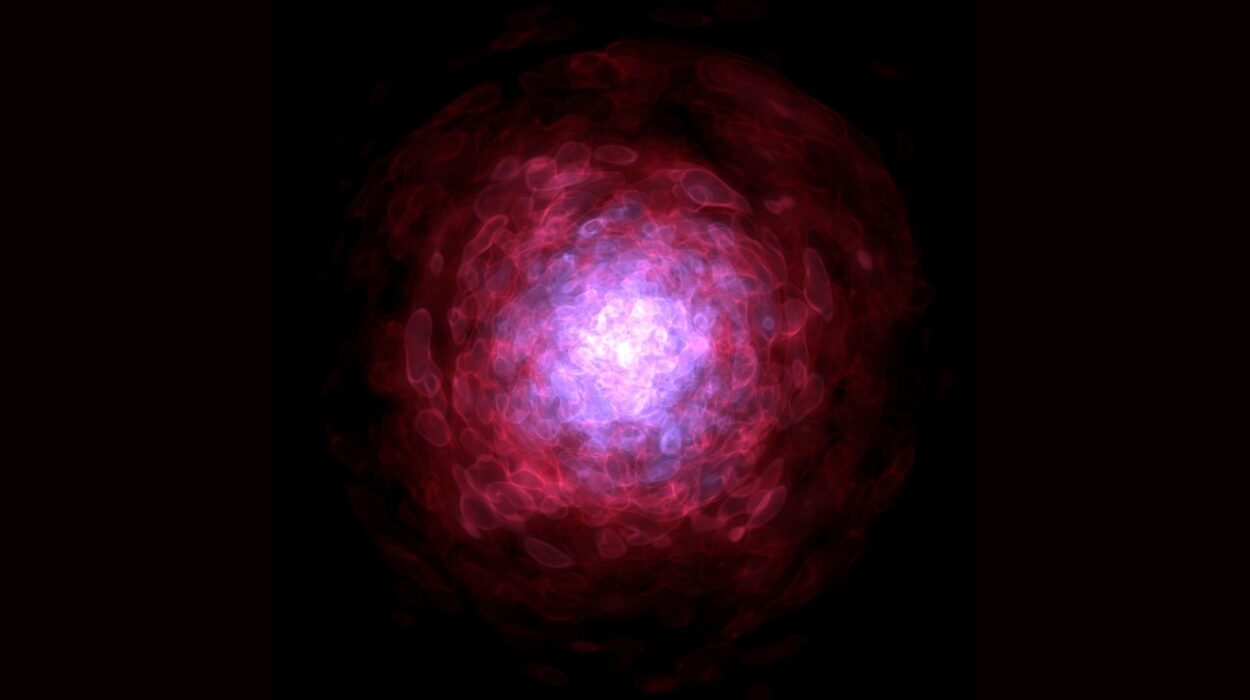

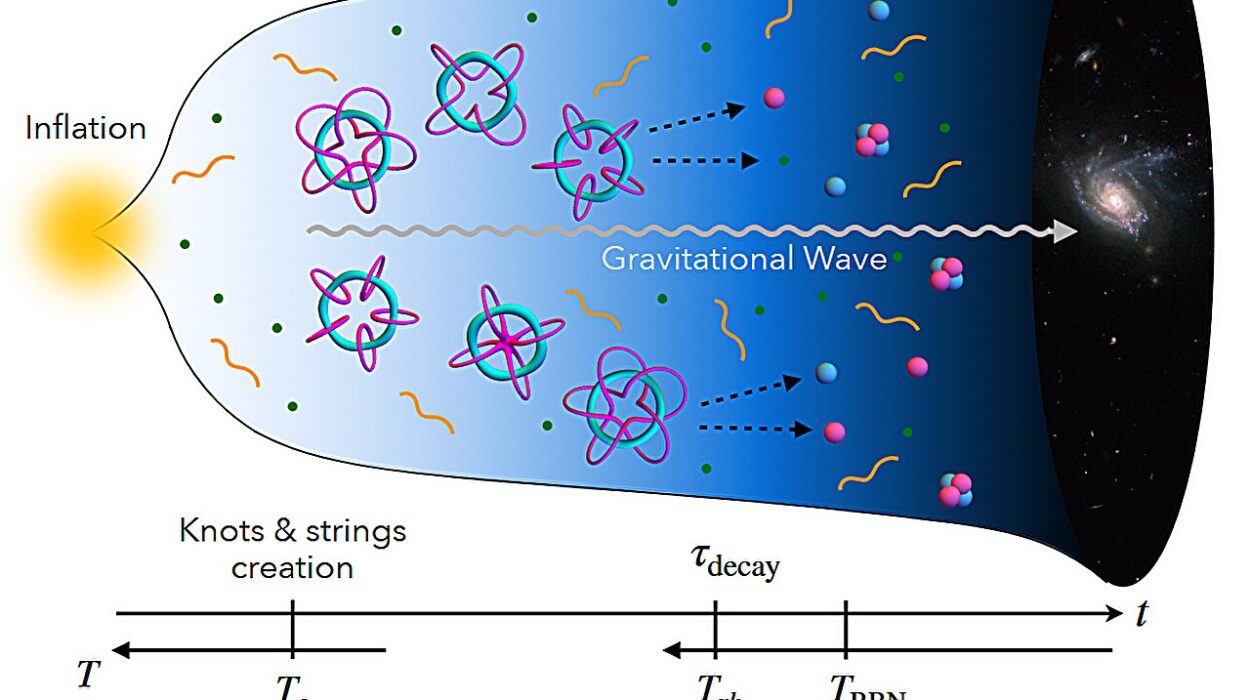

Quantum mechanics tells us that at a fundamental level, particles like electrons and photons don’t have definite positions, energies, or spins until they’re measured. Instead, they exist in a haze of probabilities—a state known as a superposition. A quantum computer leverages this principle by replacing classical bits with quantum bits, or qubits.

Unlike a bit, which is either 0 or 1, a qubit can be in a superposition: a weighted combination of 0 and 1, often described loosely as being both at once. With each additional qubit, the number of values needed to describe the system doubles, so the state space grows exponentially. Two qubits can be in a superposition of four states: 00, 01, 10, and 11. Three qubits span eight. Ten qubits? 1,024 states. By the time you reach just 300 qubits, the number of states in the superposition (2^300, roughly 10^90) exceeds the estimated number of atoms in the observable universe. There is a catch, though: measuring the qubits yields only one of those states, so a quantum algorithm must be arranged so that the useful answer is the likely one.
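To make the doubling concrete, here is a minimal Python sketch that counts how many complex amplitudes a classical computer would need to store in order to describe a register of qubits. The function names, the 16 bytes per amplitude (two 64-bit floats), and the figure of 10^80 atoms are assumptions chosen for illustration; the atom count in particular is only a rough order-of-magnitude estimate.

```python
# Rough order-of-magnitude estimate, used only for comparison (assumption).
ATOMS_IN_OBSERVABLE_UNIVERSE = 10 ** 80


def num_amplitudes(n_qubits: int) -> int:
    """Number of complex amplitudes in the state of n qubits."""
    return 2 ** n_qubits


def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory a classical simulator would need to store every amplitude."""
    return num_amplitudes(n_qubits) * bytes_per_amplitude


for n in (2, 3, 10, 30, 300):
    more_than_atoms = num_amplitudes(n) > ATOMS_IN_OBSERVABLE_UNIVERSE
    print(f"{n:>3} qubits: {num_amplitudes(n):.3e} amplitudes, "
          f"more than atoms in the universe: {more_than_atoms}")
```

Under these assumptions, 30 qubits already need 16 GiB just to write the state down, which is why classical simulation of quantum machines runs out of road so quickly.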

But superposition is only part of the magic.

The Quantum Tango: Entanglement

Another strange feature of quantum mechanics is entanglement, the phenomenon behind what Albert Einstein famously derided as “spooky action at a distance.” When two qubits become entangled, their states are linked so that measuring one immediately tells you something about the outcome of measuring the other, no matter how far apart they are. These correlations are stronger than anything classical physics allows, although they cannot be used to send a signal faster than light. Entangled qubits form the backbone of quantum algorithms, enabling operations that have no efficient classical counterpart.

With superposition, a quantum computer can hold many possible solutions within a single quantum state. With entanglement, it weaves those solutions together, allowing their amplitudes to interfere constructively or destructively, amplifying the correct answers and canceling out the wrong ones. This is not parallel computing in the classical sense; it is computing with probabilities, correlations, and waves of information.
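Entanglement is easier to see in a tiny simulation. The sketch below, written in plain Python with hypothetical helper names, prepares the simplest entangled state of two qubits (often called a Bell state) and then samples measurement outcomes from it. It is a classical simulation of the arithmetic, not code for real quantum hardware.

```python
import math
import random

LABELS = ["00", "01", "10", "11"]


def hadamard_on_first_qubit(state):
    """Put the first (left-hand) qubit into an equal superposition."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]


def cnot(state):
    """Flip the second qubit whenever the first qubit is 1."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def probabilities(state):
    """Chance of each outcome: the squared magnitude of its amplitude."""
    return [abs(a) ** 2 for a in state]


def measure(state, shots, rng):
    """Sample measurement outcomes according to the probabilities."""
    return rng.choices(LABELS, weights=probabilities(state), k=shots)


# Start in 00, superpose the first qubit, then entangle it with the second.
bell = cnot(hadamard_on_first_qubit([1, 0, 0, 0]))
outcomes = measure(bell, shots=1000, rng=random.Random(0))

print(dict(zip(LABELS, probabilities(bell))))
print({label: outcomes.count(label) for label in LABELS})
```

Each qubit on its own looks like a fair coin, yet the two results always agree: the outcomes 01 and 10 have zero probability. That perfect agreement, with no way to predict either result in advance, is the correlation the text describes.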

Quantum Gates and Algorithms

Just as classical computers perform operations with logic gates (AND, OR, NOT), quantum computers use quantum gates. These gates manipulate qubits through carefully calibrated physical interactions, rotating their states, entangling them, and guiding their evolution through quantum circuits. Unlike classical gates, which operate on binary inputs, quantum gates act on amplitudes: complex numbers whose squared magnitudes give the probabilities of the possible measurement outcomes.
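A small example may help show what "acting on amplitudes" means. In this plain-Python sketch (the names `HADAMARD`, `NOT_GATE`, and `apply_gate` are illustrative choices, not part of any library), a gate is a 2-by-2 matrix and a qubit is a pair of amplitudes. Applying the Hadamard gate once creates an equal superposition; applying it a second time makes the amplitudes for 1 cancel, returning the qubit to 0. That cancellation is interference.

```python
import math

S = 1 / math.sqrt(2)
HADAMARD = [[S, S], [S, -S]]   # creates and undoes equal superpositions
NOT_GATE = [[0, 1], [1, 0]]    # quantum counterpart of the classical NOT


def apply_gate(gate, state):
    """Multiply a 2x2 gate matrix by a single-qubit state vector."""
    return [sum(gate[row][col] * state[col] for col in range(2))
            for row in range(2)]


def probabilities(state):
    """Measurement probabilities: squared magnitudes of the amplitudes."""
    return [abs(a) ** 2 for a in state]


zero = [1 + 0j, 0j]                          # the qubit state "0"
superposed = apply_gate(HADAMARD, zero)      # equal mix of 0 and 1
restored = apply_gate(HADAMARD, superposed)  # amplitudes for 1 cancel out
flipped = apply_gate(NOT_GATE, zero)         # plain bit flip to "1"

print("after one Hadamard: ", probabilities(superposed))
print("after two Hadamards:", probabilities(restored))
print("after NOT:          ", probabilities(flipped))
```

A classical coin flipped twice is still random. The qubit is not, because amplitudes can be negative and therefore cancel, which probabilities alone never do.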

The most famous quantum algorithm is Shor’s algorithm, which can factor large numbers dramatically faster than any known classical method, in a time that grows only polynomially with the number of digits. This has profound implications for cryptography, as widely used public-key encryption systems such as RSA rely on the difficulty of factoring as a security foundation. Then there’s Grover’s algorithm, which can search an unsorted database of N items in roughly √N steps instead of the roughly N steps a classical search needs. That is a quadratic speedup: more modest than Shor’s, but applicable to a much wider range of problems.
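The square-root behavior of Grover’s algorithm can be checked with a short classical simulation. The sketch below is a simplified illustration under stated assumptions: it tracks one amplitude per database item, uses a hypothetical function name `grover_search`, and assumes exactly one marked item. Each round flips the sign of the marked item's amplitude and then reflects every amplitude about the average, which steadily pumps probability toward the marked item.

```python
import math


def grover_search(n_items, marked_index):
    """Simulate Grover's algorithm; return (success probability, rounds)."""
    # Start in an equal superposition over every item.
    state = [1 / math.sqrt(n_items)] * n_items
    # About (pi / 4) * sqrt(N) rounds bring the marked amplitude near 1.
    rounds = math.floor(math.pi / 4 * math.sqrt(n_items))
    for _ in range(rounds):
        # Oracle: flip the sign of the marked item's amplitude.
        state[marked_index] = -state[marked_index]
        # Diffusion: reflect every amplitude about the average amplitude.
        mean = sum(state) / n_items
        state = [2 * mean - amplitude for amplitude in state]
    return state[marked_index] ** 2, rounds


for n in (64, 1024):
    probability, rounds = grover_search(n, marked_index=5)
    print(f"N={n}: {rounds} rounds, success probability {probability:.4f}")
```

For 1,024 items the simulation needs only 25 rounds to find the marked item almost every time, whereas a classical search would check about half the items on average. Note that the simulation itself still does work proportional to N in every round; the saving applies to a real quantum computer, where each round is a single operation on the whole superposition.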

But designing quantum algorithms is notoriously hard. The algorithms must not only harness quantum effects but also survive the fragile, error-prone nature of quantum systems.

The Hardware Revolution: From Atoms to Ions to Superconductors

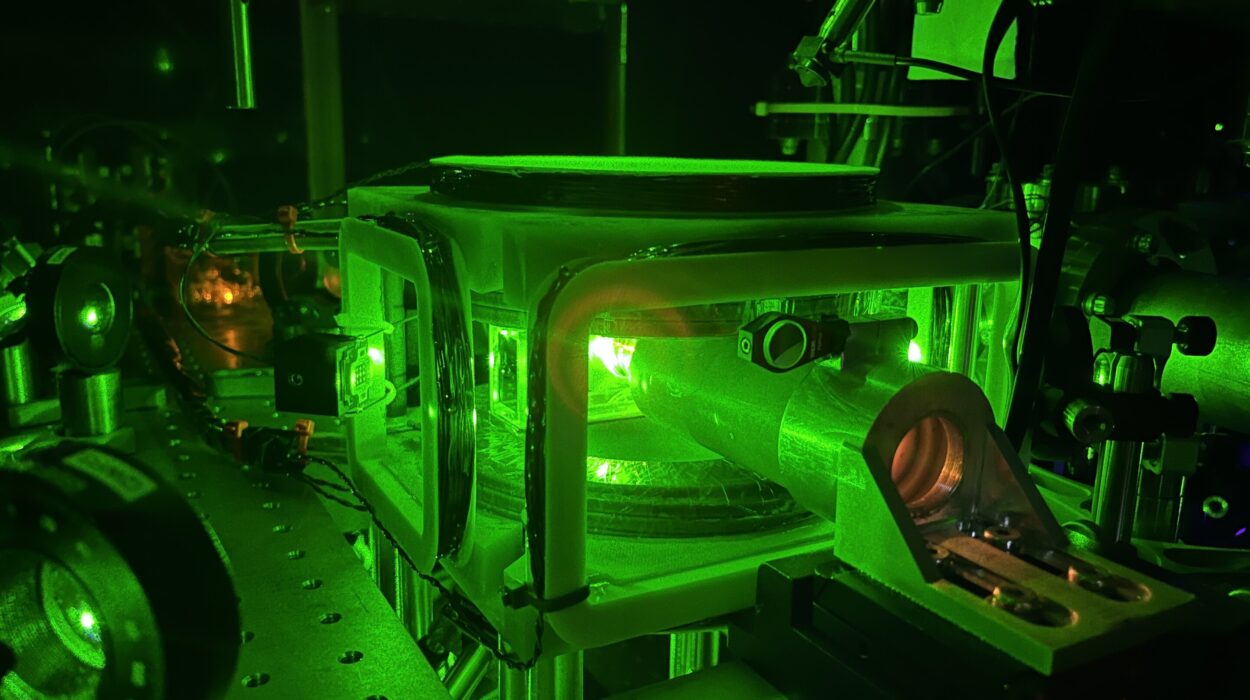

Quantum computing isn’t just about abstract theory—it’s a hardware revolution too. Creating and controlling qubits requires extreme precision, exotic materials, and often bizarre environments.

There are several leading technologies competing in the race to build scalable quantum machines:

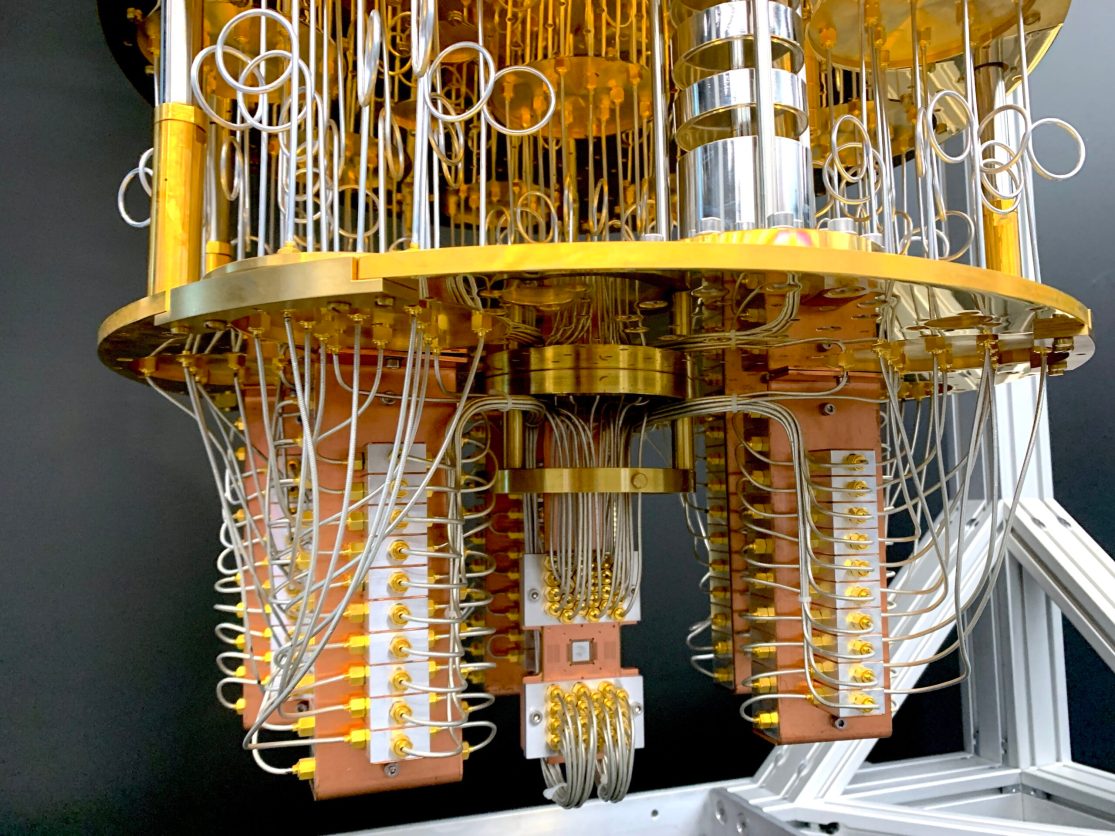

Superconducting qubits, used by companies like IBM and Google, rely on tiny loops of superconducting material chilled to near absolute zero. These circuits exhibit quantum behavior, allowing them to act as qubits. They are relatively easy to integrate with existing electronics, but suffer from short coherence times, meaning they can lose their quantum state quickly.

Trapped ions, favored by companies like IonQ, use individual charged atoms suspended in electromagnetic fields. Lasers manipulate the ions to implement quantum gates. These systems are incredibly stable and precise but are difficult to scale.

Photonic qubits use particles of light, manipulated through waveguides and beam splitters. This approach benefits from low interference and room-temperature operation, but faces challenges in reliably generating and detecting individual photons.

Topological qubits, a more exotic approach pursued by Microsoft and others, aim to encode qubits in “braids” of quantum information resistant to errors. This method is still largely theoretical but holds the promise of more robust computation.

Each technology has trade-offs in terms of fidelity, scalability, error rates, and coherence times. No clear winner has yet emerged.

Quantum Error Correction: The Fragile Dance

One of the biggest challenges in quantum computing is error correction. Unlike classical bits, which can be copied and backed up, quantum information is fundamentally uncloneable due to the no-cloning theorem. Moreover, qubits are extraordinarily sensitive to their environment—tiny fluctuations in temperature, radiation, or electromagnetic noise can cause decoherence, destroying the delicate quantum state.

To address this, researchers have developed quantum error correction codes that spread information across multiple physical qubits to create a more stable “logical qubit.” But this comes at a cost. Creating a single reliable logical qubit may require hundreds or even thousands of physical qubits. As a result, the quantum computers of today—known as Noisy Intermediate-Scale Quantum (NISQ) devices—are still far from the fault-tolerant machines needed for large-scale quantum applications.
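The idea of trading many unreliable units for one reliable unit can be sketched with a classical stand-in. The Python example below simulates a three-bit repetition code with majority voting, which is the classical analogue of the simplest quantum code that protects against bit flips. It is only an analogy: a real quantum code cannot copy the qubit or read it directly, and it must also handle phase errors, so it relies on indirect check measurements instead. The function names and the 5% error rate are assumptions chosen for illustration.

```python
import random


def encode(bit):
    """Spread one logical bit across three physical bits."""
    return [bit, bit, bit]


def apply_bit_flips(bits, flip_probability, rng):
    """Flip each physical bit independently with the given probability."""
    return [b ^ 1 if rng.random() < flip_probability else b for b in bits]


def decode(bits):
    """Majority vote: corrects any single flipped bit."""
    return 1 if sum(bits) >= 2 else 0


def logical_error_rate(flip_probability, trials, seed=0):
    """Estimate how often the decoded bit differs from the one sent."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        sent = rng.randint(0, 1)
        received = decode(apply_bit_flips(encode(sent), flip_probability, rng))
        failures += received != sent
    return failures / trials


def predicted_error_rate(p):
    """Decoding fails only when two or three of the bits flip."""
    return 3 * p ** 2 * (1 - p) + p ** 3


p = 0.05
print("physical error rate: ", p)
print("predicted logical:   ", predicted_error_rate(p))
print("simulated logical:   ", logical_error_rate(p, trials=20000))
```

With a 5% chance of error per physical bit, the encoded bit fails well under 1% of the time. The same logic explains the overhead mentioned above: pushing the logical error rate low enough for long computations takes far more than three physical qubits per logical qubit.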

Nonetheless, progress is accelerating. Every year brings higher fidelities, longer coherence times, and more qubits. The dream of practical quantum computation is inching closer to reality.

What Can Quantum Computers Do?

Quantum computers are not simply faster versions of classical computers. They’re specialized engines for certain kinds of problems. So what are they good for?

First and foremost, quantum computers excel at simulating quantum systems. This makes them invaluable for chemistry, materials science, and pharmacology. Designing new drugs, understanding enzyme behavior, modeling superconductors, or discovering new materials could become dramatically more efficient with quantum simulations.

They may also help with optimization problems, the kind that arise in logistics, finance, machine learning, and supply chains. Quantum algorithms offer new ways to explore vast solution spaces, although a clear practical advantage over the best classical methods has yet to be demonstrated.

Then there’s cryptography, where quantum computers pose both a threat and an opportunity. On one hand, they could break widely used encryption systems, endangering cybersecurity. On the other, they could enable new forms of quantum encryption that are theoretically unbreakable, like quantum key distribution.

Finally, in machine learning, quantum-enhanced algorithms could someday tackle high-dimensional data sets and uncover patterns that elude classical methods. This field—quantum machine learning—is still in its infancy, but the potential is vast.

The Race for Quantum Supremacy

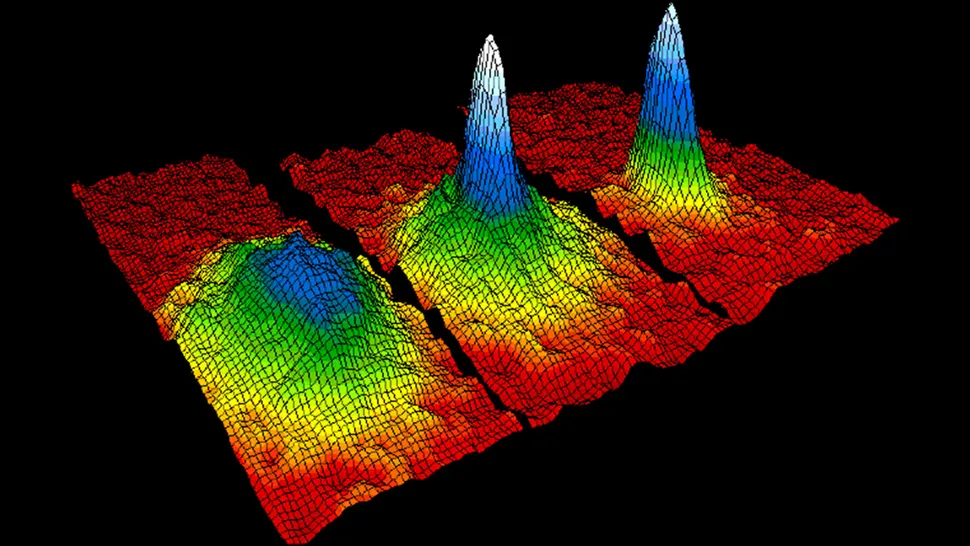

In 2019, Google claimed to have achieved quantum supremacy—the point at which a quantum computer performs a task no classical computer can do in a reasonable time. Using their 53-qubit Sycamore processor, they executed a complex random sampling task in about 200 seconds, which they claimed would take the fastest classical supercomputer 10,000 years.

The announcement was met with both excitement and skepticism. IBM, among others, challenged the claim, suggesting that clever classical algorithms could reduce the task to a matter of days. Still, the experiment marked a milestone: quantum computers had crossed a symbolic threshold.

Since then, other companies and labs have been racing to build more powerful machines, scale up qubits, and demonstrate useful quantum advantage—where quantum computers solve practical problems faster than classical ones. This race includes not just tech giants, but also startups, universities, and national governments, all investing heavily in quantum research.

Quantum Computing and the Future of Civilization

Quantum computing is not merely a scientific curiosity—it is a transformative technology with the potential to reshape entire industries and perhaps even civilization itself.

In healthcare, quantum simulations could accelerate drug discovery, personalize treatments, and decode the complex mechanisms of diseases. In climate science, quantum models could simulate atmospheric and oceanic systems with unprecedented precision, aiding efforts to combat global warming. In finance, quantum algorithms might revolutionize risk analysis, trading strategies, and market prediction.

Even in the arts and humanities, the implications are profound. Quantum computing challenges our very notions of knowledge, causality, and information. It forces us to rethink the boundaries between the known and the unknown, the deterministic and the probabilistic.

But with great power comes great responsibility. The same quantum algorithms that promise breakthroughs in medicine could also undermine global cybersecurity. The same quantum models that help stabilize economies could be used to manipulate markets or data. Managing the ethical, political, and economic implications of quantum technology will be one of the defining challenges of the 21st century.

Will We All Have Quantum Laptops?

One common question is whether quantum computers will ever become personal devices like laptops or smartphones. The answer, for now, is no. Quantum computers are incredibly complex machines that require extreme conditions—cryogenic temperatures, vacuum systems, and sophisticated error correction protocols.

Instead, quantum computing is likely to follow the model of cloud computing. Already, companies like IBM, Google, and Amazon offer access to quantum processors via the cloud, allowing researchers and developers to write and test quantum code without needing physical access to the hardware.

In the future, quantum processors may become accelerators, much like GPUs, integrated into larger hybrid systems that combine classical and quantum computing. The classical machine handles general tasks; the quantum core takes on the hard problems.

Quantum Literacy: A New Kind of Knowledge

As quantum technology matures, understanding the basics of quantum computing will become as essential as knowing how to use a spreadsheet today. Quantum literacy doesn’t require a PhD in physics—but it does require curiosity, humility, and a willingness to think in new ways.

Educational programs are emerging to teach quantum principles in high schools and colleges. New programming tools, such as the Q# language and the Python frameworks Qiskit and Cirq, are lowering the barrier to entry. The next generation of scientists, engineers, and even artists will grow up with quantum ideas as part of their intellectual toolkit.

Conclusion: Embracing the Quantum Future

Quantum computing represents a profound shift—not just in how we compute, but in how we understand the universe. It takes us from a world of definite outcomes to one of probabilities, interference, and entanglement. It merges the philosophical with the practical, the theoretical with the technological.

It’s still early days. The field faces enormous hurdles: building scalable hardware, improving error correction, developing new algorithms, and ensuring ethical deployment. But the progress is undeniable, and the momentum is growing.

In the coming decades, quantum computers may help cure diseases, crack the mysteries of the cosmos, protect our data, and solve problems we haven’t even dreamed of yet. They may also challenge our assumptions about reality, mind, and information itself.

In embracing quantum computing, we are not just building faster machines. We are opening a new chapter in the story of human curiosity—a chapter where physics meets computing, and together, they explore the very limits of what is possible.