The machines are not rising; they are whispering, learning, evolving quietly in the background of our lives. Whenever you unlock your phone, get a movie recommendation, avoid a traffic jam, or receive a diagnosis for a rare disease, machine learning is there, unseen and yet omnipresent. It’s no longer just the realm of scientists and engineers. It works alongside your doctor and your financial advisor. It is your invisible assistant.

But behind the breathtaking magic of modern AI lies something far more grounded: algorithms. These are not mystical codes from the future; they are formulas, systems, and mathematical models that allow machines to learn from data. And while there are thousands of algorithms out there, a powerful few are reshaping the world at this very moment.

This article is a deep dive — not just into what these algorithms are, but into how they’re changing your world, quietly but radically. Prepare to meet the ten most influential machine learning algorithms alive today.

1. Deep Neural Networks (DNNs): The Thinking Web of the Digital Brain

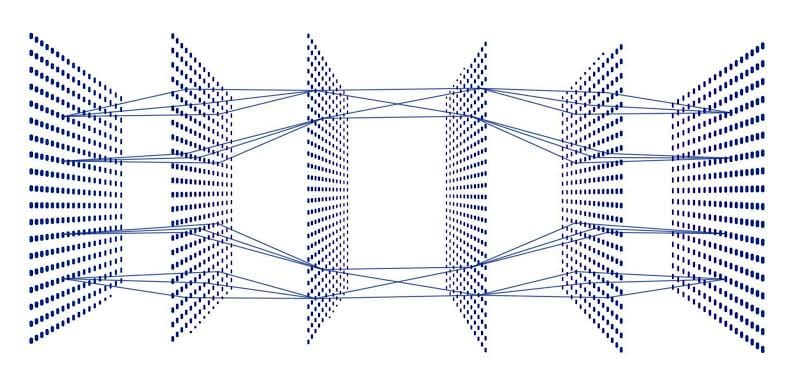

When machines began to see, speak, translate, and even dream, it was because of deep neural networks. These are the algorithms behind today’s most astonishing feats in artificial intelligence. Inspired (loosely) by the structure of the human brain, DNNs are composed of multiple layers of neurons that work together to learn complex patterns.

It’s how your phone knows your face. How autonomous cars read the road. How medical AI spots tumors with an accuracy that, in some studies, rivals that of seasoned radiologists.

DNNs have revolutionized image recognition, speech processing, and natural language understanding. Their strength lies in depth — more layers mean more abstraction. At lower levels, a DNN can detect lines and edges in a photo; at higher levels, it can recognize a smile, a building, or a danger.
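To make the idea of stacked layers concrete, here is a minimal sketch of a forward pass through a tiny network in plain Python. The layer sizes, the random (untrained) weights, and the helper names `dense`, `relu`, `random_layer`, and `forward` are illustrative assumptions, not taken from any particular library; a real DNN would learn its weights from data rather than draw them at random.

```python
import math
import random

def relu(values):
    # Non-linearity: negative activations are clipped to zero.
    return [max(0.0, v) for v in values]

def dense(inputs, weights, biases):
    # One fully connected layer: each output neuron is a weighted
    # sum of all inputs plus a bias.
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def softmax(values):
    # Turn raw scores into probabilities that sum to 1.
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def random_layer(rng, n_in, n_out):
    # Hypothetical untrained layer: random weights, zero biases.
    weights = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def forward(inputs, layers):
    # Pass the input through every hidden layer, then a softmax output layer.
    activations = inputs
    for weights, biases in layers[:-1]:
        activations = relu(dense(activations, weights, biases))
    weights, biases = layers[-1]
    return softmax(dense(activations, weights, biases))

rng = random.Random(0)
layers = [random_layer(rng, 4, 8), random_layer(rng, 8, 8), random_layer(rng, 8, 3)]
probabilities = forward([0.5, -1.2, 3.0, 0.1], layers)
print(probabilities)
```

Each call to `dense` followed by `relu` is one "layer" in the sense used above; depth simply means repeating that step more times before the final output.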

Think of it as evolution on fast forward. The more data a network is trained on, the better it tends to perform. In little more than a decade, DNNs have leaped from academic curiosity to engines of our technological renaissance.

2. Gradient Boosting Machines (GBMs): The Relentless Problem-Solvers

Where deep learning thrives on unstructured data like images or text, Gradient Boosting Machines dominate structured data — the kind found in spreadsheets, bank records, or hospital charts. GBMs are ensemble learning methods that build a strong predictive model by combining many weak ones, each correcting the mistakes of the last.
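The "each correcting the mistakes of the last" idea can be sketched in a few lines. The example below is a simplified illustration, assuming squared-error loss, one-split trees ("stumps") as the weak learners, and a made-up toy dataset; the function names and the `learning_rate` and `n_rounds` values are hypothetical choices, and production libraries add many refinements on top of this.

```python
def fit_stump(xs, residuals):
    # Weak learner: a single split that best predicts the residuals.
    # Assumes xs contains at least two distinct values.
    best = None
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    return best[1:]

def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean

def fit_boosting(xs, ys, n_rounds=50, learning_rate=0.3):
    # Start from the mean, then repeatedly fit a stump to what is still wrong.
    base = sum(ys) / len(ys)
    predictions = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        predictions = [p + learning_rate * predict_stump(stump, x)
                       for p, x in zip(predictions, xs)]
    return base, learning_rate, stumps

def predict(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)

def mean_squared_error(ys, predictions):
    return sum((y - p) ** 2 for y, p in zip(ys, predictions)) / len(ys)

xs = list(range(10))
ys = [x * x for x in xs]  # toy target: y = x squared
model = fit_boosting(xs, ys)
error_before = mean_squared_error(ys, [sum(ys) / len(ys)] * len(ys))
error_after = mean_squared_error(ys, [predict(model, x) for x in xs])
print(error_before, error_after)
```

No single stump can describe a curve, but the sum of many small corrections can, which is the essence of boosting.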

These algorithms don’t just learn — they refine. They compete with themselves, iteratively reducing their own error like an artist perfecting a sculpture.

In the business world, GBMs are workhorses. They drive fraud detection systems in banks, predict customer churn, and help optimize logistics, pricing strategies, and even energy consumption in smart grids.

XGBoost, LightGBM, and CatBoost (names that sound like sci-fi devices but are in fact implementations of gradient boosting) have dominated machine learning competitions on tabular data for a reason. They are not glamorous, but they are astonishingly effective.

They are the quiet champions in a world that needs precise answers.

3. Convolutional Neural Networks (CNNs): The Eyes of the Machine

In 2012, a neural network called AlexNet entered the ImageNet Large Scale Visual Recognition Challenge and beat every other entry by a wide margin. The world of computer vision changed almost overnight, thanks to the power of CNNs.

Convolutional Neural Networks are specialized deep networks designed to process pixel data. They slide small filters across an image, so each layer responds to local patterns wherever they appear. Stacking such layers preserves spatial hierarchies: edges combine into shapes, and shapes into objects.
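The core operation inside a CNN, sliding a small filter over an image, can be sketched as follows. This is a bare-bones illustration with a hand-written vertical-edge filter and a made-up 5×6 image; in a real CNN the filter values are learned during training, and the function name `convolve2d` is just a label chosen for this example.

```python
def convolve2d(image, kernel):
    # Slide the kernel over every position where it fits entirely
    # inside the image and record the weighted sum at each position.
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kernel_h):
                for dj in range(kernel_w):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

# Toy image: dark (0) on the left half, bright (1) on the right half.
image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]

# Hand-written filter that responds to dark-to-bright vertical edges.
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

feature_map = convolve2d(image, vertical_edge)
for row in feature_map:
    print(row)  # each row is [0, 3, 3, 0]: strong response only at the edge
```

The filter stays silent over flat regions and fires where brightness changes, which is exactly the kind of low-level feature early CNN layers tend to pick up.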

In healthcare, CNNs detect diabetic retinopathy, lung cancer, and skin lesions. In security, they power facial recognition systems used in airports and public surveillance. In agriculture, they spot crop diseases from drone images.

CNNs are why your social media app knows it’s a dog and not a hot dog. But beyond the memes, they are the watchful eyes of modern AI: perceiving, monitoring, protecting.

4. Recurrent Neural Networks (RNNs) and LSTMs: The Memory-Keepers

Human thought is temporal. We don’t just see — we remember what came before. That’s what makes a sentence meaningful or a melody beautiful. To machines, this used to be an impossible challenge — until Recurrent Neural Networks were born.

RNNs are designed to handle sequential data: at each step they combine the current input with a hidden state carried over from the previous step. But traditional RNNs struggled with long-term memory, because the training signal fades over long sequences. Enter LSTMs (Long Short-Term Memory networks), a type of RNN with gates that let it remember for much longer and forget when appropriate.
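Here is a small sketch of that feedback loop for a plain (non-LSTM) recurrent network. The weights are arbitrary hand-picked numbers used only for illustration, and the names `rnn_step` and `run_rnn` are hypothetical; an LSTM would add gating on top of the same basic recurrence.

```python
import math

def rnn_step(x, hidden, w_in, w_rec, bias):
    # New hidden state = tanh(input contribution + memory of the past + bias).
    new_hidden = []
    for i in range(len(hidden)):
        total = w_in[i] * x + bias[i]
        total += sum(w_rec[i][j] * hidden[j] for j in range(len(hidden)))
        new_hidden.append(math.tanh(total))
    return new_hidden

def run_rnn(sequence, w_in, w_rec, bias):
    # Start with an empty memory and feed the sequence in one item at a time.
    hidden = [0.0] * len(bias)
    for x in sequence:
        hidden = rnn_step(x, hidden, w_in, w_rec, bias)
    return hidden

# Arbitrary, untrained weights for a hidden state of size 2.
w_in = [0.5, -0.3]
w_rec = [[0.8, 0.1], [-0.2, 0.6]]
bias = [0.0, 0.1]

forward_state = run_rnn([1.0, 2.0, 3.0], w_in, w_rec, bias)
reversed_state = run_rnn([3.0, 2.0, 1.0], w_in, w_rec, bias)
print(forward_state)
print(reversed_state)
```

The two runs see the same numbers in a different order and end in different states, which is what it means for the network to be sensitive to sequence rather than just content.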

These algorithms gave machines the ability to write poetry, generate music, translate languages, and forecast time series data like stock prices or weather patterns.

For years, when you spoke to a voice assistant like Siri, dictated a message, or asked for real-time translation, you were likely tapping into the fluid memory of an RNN or LSTM. They were the closest thing machines had to time-consciousness.

5. Transformers: The Language Revolution

The year was 2017. A paper titled “Attention Is All You Need” quietly appeared in an academic archive. It proposed a new architecture: the Transformer. It turned the world of natural language processing upside down.

Transformers don’t process sentences word by word. They process all words at once, using a mechanism called “attention” to decide what matters most. This makes them far easier to parallelize during training, and better at capturing long-range context, than the recurrent models that came before.
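The attention mechanism itself is compact enough to sketch. The example below implements scaled dot-product self-attention over three toy "token" vectors. It is a simplification under stated assumptions: real Transformers first pass tokens through learned query, key, and value projections and use many attention heads, whereas here the raw vectors play all three roles and the numbers are invented.

```python
import math

def softmax(values):
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    # For each query: score it against every key, normalize the scores
    # into weights, and return the weighted average of the values.
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for query in queries:
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
        weights = softmax(scores)
        output = [sum(w * value[d] for w, value in zip(weights, values))
                  for d in range(len(values[0]))]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors; every token attends to every token at once.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

In this toy run the first token gives more weight to itself and to the third token (which overlaps with it) than to the second, unrelated one. That is "deciding what matters most" in miniature.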

Nearly every modern language model, from OpenAI’s ChatGPT to Google’s BERT and Meta’s LLaMA, is built on transformers. They are what allow machines to write articles, code, stories, and even emails that feel uncannily human.

Transformers can summarize books, translate poetry, answer questions, and hold conversations. They are the first algorithm to make language — that most human of faculties — a shared ground between people and machines.

We don’t just use them. We talk to them.

6. K-Means Clustering: Finding Patterns in Chaos

Not all learning is supervised. Sometimes, we don’t know the answers — only the data. In those cases, we turn to algorithms like K-Means, a simple yet powerful unsupervised machine learning method.

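K-Means works by finding clusters: groups of similar things in a sea of data. You choose how many clusters to look for; the algorithm then repeatedly assigns each point to its nearest cluster center and moves each center to the average of its points. It doesn’t tell you what those groups mean, only that they exist. But that’s enough to unlock powerful insights.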
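A minimal sketch of the standard K-Means loop looks like this. The six 2-D points and the choice of starting centers are made up for illustration, and the function names are hypothetical; real implementations usually pick starting centers more carefully and stop once the assignments no longer change.

```python
def nearest(point, centroids):
    # Index of the centroid closest to the point (squared Euclidean distance).
    distances = [sum((a - b) ** 2 for a, b in zip(point, c)) for c in centroids]
    return distances.index(min(distances))

def kmeans(points, initial_centroids, n_iterations=10):
    centroids = list(initial_centroids)
    for _ in range(n_iterations):
        # Step 1: assign every point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for point in points:
            clusters[nearest(point, centroids)].append(point)
        # Step 2: move each centroid to the mean of its assigned points
        # (an empty cluster keeps its old centroid).
        centroids = [
            tuple(sum(axis) / len(cluster) for axis in zip(*cluster)) if cluster else old
            for cluster, old in zip(clusters, centroids)
        ]
    labels = [nearest(point, centroids) for point in points]
    return centroids, labels

points = [(1, 1), (1, 2), (2, 1), (8, 8), (9, 8), (8, 9)]
centroids, labels = kmeans(points, initial_centroids=[(1, 1), (8, 8)])
print(labels)     # [0, 0, 0, 1, 1, 1]
print(centroids)
```

Note that the algorithm returns only group numbers. Deciding that group 0 means "bargain hunters" and group 1 means "loyal regulars" is still a human job.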

Retailers use K-Means to group customers by behavior, tailoring marketing strategies to each segment. Biologists use it to classify species. Cybersecurity firms use it to detect anomalies in network traffic — signs of possible breaches.

K-Means is not flashy, but it’s profoundly useful. In a chaotic world, it helps machines discover the hidden order.

7. Random Forests: The Democratic Oracle

What happens when hundreds of decision trees come together and vote? You get a Random Forest — a machine learning algorithm that’s both robust and reliable.

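Random Forests are ensemble models that train many decision trees, each on a random sample of the data and a random subset of the features, and then combine their predictions: a majority vote for classification, an average for regression. This reduces overfitting and usually improves accuracy over a single tree.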
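The voting idea can be sketched with a deliberately tiny forest. This is a simplified illustration, not a full implementation: each "tree" here is a single-split stump trained on a bootstrap sample using one randomly chosen feature, whereas real random forests grow deeper trees and re-sample features at every split. The dataset, seed, and function names are all invented for the example.

```python
import random
from collections import Counter

def majority(labels):
    return Counter(labels).most_common(1)[0][0]

def fit_stump(rows, labels, feature):
    # Find the threshold on one feature that misclassifies the fewest rows.
    best = None
    for threshold in sorted({row[feature] for row in rows}):
        left = [y for row, y in zip(rows, labels) if row[feature] <= threshold]
        right = [y for row, y in zip(rows, labels) if row[feature] > threshold]
        if not right:
            continue
        left_label, right_label = majority(left), majority(right)
        errors = (sum(y != left_label for y in left)
                  + sum(y != right_label for y in right))
        if best is None or errors < best[0]:
            best = (errors, threshold, left_label, right_label)
    if best is None:  # every sampled row has the same value for this feature
        label = majority(labels)
        return feature, rows[0][feature], label, label
    return (feature,) + best[1:]

def predict_stump(stump, row):
    feature, threshold, left_label, right_label = stump
    return left_label if row[feature] <= threshold else right_label

def fit_forest(rows, labels, n_trees=25, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        # Bootstrap: sample rows with replacement, then pick a random feature.
        indices = [rng.randrange(len(rows)) for _ in rows]
        sample_rows = [rows[i] for i in indices]
        sample_labels = [labels[i] for i in indices]
        feature = rng.randrange(len(rows[0]))
        forest.append(fit_stump(sample_rows, sample_labels, feature))
    return forest

def predict_forest(forest, row):
    # Every tree votes; the most common answer wins.
    return majority([predict_stump(stump, row) for stump in forest])

rows = [(1, 2), (2, 1), (3, 3), (2, 4), (7, 8), (8, 6), (6, 7), (9, 9)]
labels = [0, 0, 0, 0, 1, 1, 1, 1]
forest = fit_forest(rows, labels)
print(predict_forest(forest, (0, 0)), predict_forest(forest, (10, 10)))
```

Because each stump sees a slightly different slice of the data, an unlucky sample can mislead one tree without swaying the committee as a whole.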

They are particularly valued in medicine, where stakes are high and interpretability matters. A Random Forest can predict a patient’s likelihood of disease based on a mix of genetic, environmental, and behavioral data — and show which factors were most influential.

In finance, they assess credit risk and detect fraud. In ecology, they help predict species extinction risks under climate change.

They are machines that think like committees — diverse, redundant, and surprisingly wise.

8. Support Vector Machines (SVM): The Classifier with a Razor’s Edge

If machine learning were fencing, Support Vector Machines would be the rapier — fast, sharp, and precise. SVMs are used for classification tasks, especially in high-dimensional spaces.

They work by finding the optimal hyperplane — the line (or surface) that separates two classes with the maximum margin. This precision makes them ideal for things like text classification (e.g., spam vs. not spam), handwriting recognition, or even bioinformatics.
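One common way to find such a hyperplane is to minimize the "hinge loss" with a small penalty on the size of the weights, which pushes the boundary away from both classes. The sketch below does this with simple gradient-style updates on a made-up, linearly separable dataset. The hyperparameters and function names are illustrative assumptions; library implementations typically use more sophisticated solvers and support kernels for curved boundaries.

```python
def train_linear_svm(points, labels, epochs=100, learning_rate=0.1, regularization=0.01):
    weights = [0.0] * len(points[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(points, labels):
            margin = y * (sum(w * xi for w, xi in zip(weights, x)) + bias)
            if margin < 1:
                # Point is inside the margin (or misclassified): push the
                # boundary away from it while still shrinking the weights.
                weights = [w + learning_rate * (y * xi - regularization * w)
                           for w, xi in zip(weights, x)]
                bias += learning_rate * y
            else:
                # Point is safely outside the margin: only shrink the weights,
                # which corresponds to preferring a wider margin.
                weights = [w - learning_rate * regularization * w for w in weights]
    return weights, bias

def predict(model, x):
    weights, bias = model
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score >= 0 else -1

# Toy data: labels are +1 or -1, as is conventional for SVMs.
points = [(2, 2), (3, 3), (2, 3), (-2, -2), (-3, -3), (-2, -3)]
labels = [1, 1, 1, -1, -1, -1]
model = train_linear_svm(points, labels)
print(model)
print([predict(model, p) for p in points])
```

Only the points closest to the boundary ever trigger the first branch once training settles; those are the "support vectors" that give the method its name.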

SVMs may not scale as well as deep learning models, but they often perform brilliantly on smaller datasets with clear margins. In courtrooms, cancer labs, and border control systems, SVMs quietly do their job with clean mathematical elegance.

They are the algorithmic equivalent of Occam’s razor — simple, but deadly effective.

9. Naive Bayes: The Unsung Linguist

If there were a Hall of Fame for underestimated algorithms, Naive Bayes would be enshrined in gold. It rests on Bayes’ Theorem and the “naive” assumption that features are independent of one another given the class, which sounds simplistic. But in the world of text, where features like words can number in the tens of thousands, Naive Bayes thrives.
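A word-count version of the idea fits in a short script. The sketch below is a minimal multinomial Naive Bayes with add-one (Laplace) smoothing, trained on four invented messages; the `train` and `classify` names and the tiny dataset are assumptions made for illustration only.

```python
import math
from collections import Counter, defaultdict

def train(documents):
    # Count how often each class occurs and how often each word
    # appears within each class.
    class_counts = Counter()
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for text, label in documents:
        class_counts[label] += 1
        for word in text.lower().split():
            word_counts[label][word] += 1
            vocabulary.add(word)
    return class_counts, word_counts, vocabulary

def classify(model, text):
    class_counts, word_counts, vocabulary = model
    total_docs = sum(class_counts.values())
    scores = {}
    for label in class_counts:
        # Start from the class prior, then add one log-probability per word.
        # Treating words as independent is the "naive" part.
        log_prob = math.log(class_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in text.lower().split():
            if word not in vocabulary:
                continue  # ignore words never seen in training
            count = word_counts[label][word]
            # Add-one smoothing keeps unseen words from zeroing out a class.
            log_prob += math.log((count + 1) / (total_words + len(vocabulary)))
        scores[label] = log_prob
    return max(scores, key=scores.get)

documents = [
    ("win free money now", "spam"),
    ("free prize claim now", "spam"),
    ("meeting agenda for monday", "ham"),
    ("lunch on monday with the team", "ham"),
]
model = train(documents)
print(classify(model, "free money"))      # spam
print(classify(model, "monday meeting"))  # ham
```

Training is nothing more than counting, which is why the method stays fast even with very large vocabularies.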

It powers spam filters that protect your inbox. It enables sentiment analysis of tweets and reviews. It can diagnose diseases based on symptoms and history.

Its secret is speed. In a world where data grows faster than insight, Naive Bayes is often the first line of defense — good enough, fast enough, and often surprisingly accurate.

10. Reinforcement Learning: Teaching Machines to Learn Like Humans

Most machine learning algorithms are like students handed a fixed dataset and told to study it. Reinforcement Learning (RL) is different. It’s like raising a child, one who learns by doing, failing, and trying again.

RL algorithms don’t just consume data; they interact with environments. They make decisions, receive rewards or punishments, and adjust accordingly. It’s a large part of how AlphaGo beat world champion Lee Sedol at Go in 2016. It’s how robots learn to walk. It’s how systems learn to optimize traffic lights, manage energy in smart buildings, and even trade stocks.
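The decide, get rewarded, adjust loop can be sketched with tabular Q-learning, one of the simplest RL methods. The environment here is an invented five-cell corridor in which the agent starts at the left end and is rewarded only for reaching the right end. The constants `alpha`, `gamma`, and `epsilon` and all function names are illustrative assumptions; systems like AlphaGo combine the same basic idea with deep networks and search.

```python
import random

N_STATES = 5                  # corridor cells 0..4; cell 4 is the goal
ACTIONS = ["left", "right"]   # action index 0 = left, 1 = right

def step(state, action):
    # The environment: move one cell, bump into the wall at cell 0,
    # and pay out a reward of 1 only on reaching the goal.
    next_state = max(state - 1, 0) if action == 0 else state + 1
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward

def choose_action(q_row, epsilon, rng):
    # Explore at random some of the time (and whenever the agent has
    # no preference yet); otherwise exploit the best known action.
    if rng.random() < epsilon or q_row[0] == q_row[1]:
        return rng.randrange(2)
    return 0 if q_row[0] > q_row[1] else 1

def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            action = choose_action(q[state], epsilon, rng)
            next_state, reward = step(state, action)
            # Nudge the estimate toward reward + discounted future value.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q

q_table = train()
policy = [ACTIONS[0 if row[0] > row[1] else 1] for row in q_table[:-1]]
print(policy)
```

Nobody tells the agent that "right" is the correct move. It works that out from the rewards alone, which is the sense in which RL learns from experience rather than instruction.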

In essence, RL brings machines closer to autonomous intelligence. Not just recognizing patterns — but developing strategies.

It’s still an emerging field, full of ethical questions and wild frontiers. But make no mistake: reinforcement learning is the closest thing we have to machines that learn not by instruction — but by experience.

The Symphony of Algorithms in Everyday Life

These ten algorithms are not isolated. They often work together in hybrid systems. A face recognition app might use CNNs for detection, DNNs for understanding, and GBMs for user behavior prediction. Your personalized content feed might be curated using clustering (K-Means), optimized using reinforcement learning, and filtered by Naive Bayes.

They live in your phone, your hospital, your car. They shape elections, forecast pandemics, recommend treatments, optimize factories, detect natural disasters, and translate lost languages.

And they’re just getting started.

Toward a More Human Future

At their best, these machine learning algorithms do not replace us — they amplify us. They hold a mirror to our complexity, make sense of our chaos, and help us see what we could not on our own.

But they also raise hard questions. About fairness. About control. About transparency and bias. The same algorithms that heal can harm. The same power that personalizes can also polarize.

So we must wield them wisely. We must ask not only what these algorithms can do — but what they should do.

In the end, the most important algorithm will not be one that mimics us — but one that reminds us what it means to be human.