Quantum computers represent one of the most revolutionary technological frontiers of the 21st century, a convergence of physics, computer science, and mathematics that promises to redefine computation itself. Unlike classical computers, which rely on bits that exist in one of two possible states—0 or 1—quantum computers operate using the strange and counterintuitive principles of quantum mechanics, allowing them to process information in ways that are fundamentally beyond the reach of classical systems.

The physics behind quantum computing is rooted in the laws that govern the microscopic world, where particles such as electrons, photons, and atoms exhibit behaviors that defy classical intuition. Quantum superposition, entanglement, and interference—phenomena that once seemed purely theoretical—form the bedrock of how quantum computers encode, manipulate, and extract information. Understanding these principles is essential not only to grasp how quantum computers function but also to appreciate why they represent such a profound shift in the way we think about information and computation.

In this article, we explore the deep physical foundations of quantum computers, tracing the principles of quantum mechanics that enable them, the challenges of realizing such machines in practice, and the extraordinary implications they hold for science, technology, and society.

The Foundation of Quantum Mechanics

Quantum mechanics is the physical theory that describes nature at the smallest scales, governing the behavior of matter and energy in the realm of atoms, molecules, and subatomic particles. Unlike classical mechanics, which offers deterministic predictions of motion and outcomes, quantum mechanics introduces inherent uncertainty and probabilistic behavior.

At its core, quantum theory posits that particles generally do not have definite values of properties such as position or momentum until they are measured. Instead, they exist in a superposition of states, represented mathematically by a wavefunction. This wavefunction encodes all the possible outcomes of a measurement, with the probability of each outcome given by the squared magnitude of the corresponding amplitude (the Born rule). When a measurement is performed, the wavefunction “collapses” to one of the possible states.

This probabilistic nature, along with the linear algebra of complex vector spaces, provides the mathematical foundation for quantum computation. In quantum computers, manipulating quantum states amounts to controlling how wavefunctions evolve, so that a single operation acts on every component of a superposition at once.

Classical Bits vs. Quantum Bits

To understand quantum computers, it is crucial to compare their basic units of information with those of classical computers. Classical computers process information in bits—binary digits that can exist in one of two distinct states: 0 or 1. These bits are typically represented physically by voltage levels in circuits, magnetic polarization in storage media, or optical signals in fiber networks.

Quantum computers, in contrast, use quantum bits, or qubits. A qubit is a two-level quantum system that can exist in a superposition of both 0 and 1 states simultaneously. This means that a qubit can be described as a linear combination of both basis states, written as:

|ψ⟩ = α|0⟩ + β|1⟩,

where α and β are complex probability amplitudes that satisfy the normalization condition |α|² + |β|² = 1. The squared magnitudes of these amplitudes give the measurement probabilities: |α|² is the probability of finding the qubit in the 0 state and |β|² the probability of finding it in the 1 state.
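
As a minimal sketch of this rule, the following Python snippet checks normalization and computes the two measurement probabilities. The function name and the example amplitudes are illustrative choices, not part of any particular quantum library.

```python
import math

def measurement_probabilities(alpha, beta):
    """Return (P(0), P(1)) for the state alpha|0> + beta|1>."""
    norm_sq = abs(alpha) ** 2 + abs(beta) ** 2
    if not math.isclose(norm_sq, 1.0, rel_tol=1e-9):
        raise ValueError("state is not normalized")
    return abs(alpha) ** 2, abs(beta) ** 2

# Illustrative amplitudes: an unequal superposition with a relative phase on |1>.
alpha = math.sqrt(0.2)
beta = 1j * math.sqrt(0.8)
p0, p1 = measurement_probabilities(alpha, beta)
print(p0, p1)  # approximately 0.2 and 0.8
```

The phase factor on β does not change the probabilities here, since only the magnitudes enter. Phases matter once amplitudes are combined by later gates.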

This superposition property means that the state of n qubits is described by 2ⁿ complex amplitudes, one for each classical bit string. A classical simulation must store and update every one of these amplitudes explicitly, whereas a quantum gate acts on all of them in a single physical operation. This is the parallelism inherent in quantum mechanics.

However, this does not mean that quantum computers can instantly solve all problems exponentially faster than classical ones. The power of quantum computation arises not simply from superposition but from the way interference and entanglement can be harnessed to manipulate these parallel states constructively and destructively to reach specific outcomes.

Superposition: The Heart of Quantum Computation

Superposition is one of the defining principles of quantum mechanics. It refers to the ability of a quantum system to exist in multiple states simultaneously until a measurement collapses it into one. This property is what gives qubits their extraordinary potential for parallel information processing.

To illustrate, consider the famous thought experiment known as Schrödinger’s cat. In this scenario, the fate of a cat in a sealed box is tied to a quantum event such as the decay of a radioactive atom, so that, taken literally, the cat is in a superposition of alive and dead. Only when the box is opened, that is, when the system is measured, does the cat’s state collapse into one definite outcome. Similarly, a qubit can exist in a combination of the 0 and 1 states until measurement determines its final value.

In a quantum computer, operations (known as quantum gates) manipulate these superpositions coherently. Instead of simply flipping bits, quantum gates rotate the qubit’s state vector on a geometrical construct known as the Bloch sphere, whose surface represents every possible pure state of a single qubit. These rotations change both the probabilities and the relative phase associated with the 0 and 1 states, which is what quantum algorithms build on.
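
The picture of gates as rotations can be sketched in a few lines of Python. The helper names below (`apply_gate`, `bloch_vector`, `ry`) are illustrative and not taken from any library. The Bloch coordinates use the standard formulas x = 2 Re(α*β), y = 2 Im(α*β), z = |α|² − |β|².

```python
import math

def apply_gate(gate, state):
    """Multiply a 2x2 gate matrix by a two-component state vector."""
    return [
        gate[0][0] * state[0] + gate[0][1] * state[1],
        gate[1][0] * state[0] + gate[1][1] * state[1],
    ]

def bloch_vector(state):
    """Return (x, y, z) on the Bloch sphere for a normalized state [alpha, beta]."""
    alpha, beta = complex(state[0]), complex(state[1])
    overlap = alpha.conjugate() * beta
    return (2 * overlap.real, 2 * overlap.imag, abs(alpha) ** 2 - abs(beta) ** 2)

def ry(theta):
    """Rotation by angle theta about the y axis of the Bloch sphere."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    return [[c, -s], [s, c]]

S = 1 / math.sqrt(2)
HADAMARD = [[S, S], [S, -S]]

zero = [1 + 0j, 0j]
plus = apply_gate(HADAMARD, zero)            # equal superposition of 0 and 1
rotated = apply_gate(ry(math.pi / 2), zero)  # quarter turn about the y axis

print(bloch_vector(zero))     # north pole: z is 1
print(bloch_vector(plus))     # on the equator: x is approximately 1
print(bloch_vector(rotated))  # approximately the same point as the Hadamard result
```

Starting from the 0 state, the Hadamard gate and a quarter-turn about the y axis both move the state vector from the pole to the same point on the equator, where the two outcomes are equally likely.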

Entanglement: The Quantum Connection

Entanglement is another cornerstone of quantum physics and one of the most perplexing phenomena in science. When two or more qubits become entangled, their states become correlated in such a way that the outcome of measuring one immediately fixes what a corresponding measurement of the other will show, no matter how far apart they are. These correlations cannot be reproduced by classical physics, and Albert Einstein famously referred to the idea as “spooky action at a distance.”

In mathematical terms, an entangled state cannot be expressed as the product of individual qubit states. For example, the entangled Bell state

|Φ⁺⟩ = (|00⟩ + |11⟩) / √2

means that if one qubit is measured to be in the 0 state, the other will also be in the 0 state, and if one is in 1, the other is in 1. These correlations persist even across large distances, a property that has been experimentally verified countless times.
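
Both claims can be checked numerically for two qubits. The sketch below assumes amplitudes are listed in the order 00, 01, 10, 11. It uses the fact that a two-qubit pure state is a product state exactly when a00·a11 − a01·a10 = 0. The function names are illustrative.

```python
import math
import random

LABELS = ["00", "01", "10", "11"]

def is_product_state(amps, tol=1e-12):
    """True if the two-qubit state factors into two single-qubit states."""
    a00, a01, a10, a11 = amps
    return abs(a00 * a11 - a01 * a10) < tol

def sample_outcomes(amps, shots, seed=0):
    """Draw joint measurement outcomes with probabilities |amplitude|^2."""
    rng = random.Random(seed)
    weights = [abs(a) ** 2 for a in amps]
    return rng.choices(LABELS, weights=weights, k=shots)

S = 1 / math.sqrt(2)
bell = [S, 0.0, 0.0, S]      # (|00> + |11>) / sqrt(2)
product = [S, 0.0, S, 0.0]   # (|0> + |1>)/sqrt(2) on the first qubit, |0> on the second

print(is_product_state(bell))            # False: the Bell state is entangled
print(is_product_state(product))         # True
print(set(sample_outcomes(bell, 1000)))  # only "00" and "11" ever occur
```

Each individual outcome is random, yet the two bits always agree. That perfect correlation is the signature of this Bell state.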

Entanglement enables quantum computers to perform operations on many qubits in a coordinated fashion, effectively linking their computational states. Without entanglement, an n-qubit register could be described by n independent single-qubit states and simulated efficiently on a classical computer. Entanglement is what makes the full 2ⁿ-dimensional state space accessible, and it forms the basis for key quantum protocols such as quantum teleportation and error correction.

Quantum Interference and Computational Power

While superposition allows a quantum system to explore multiple possibilities at once, interference determines how these possibilities combine to yield measurable outcomes. Interference arises because quantum amplitudes are complex numbers; they can add or cancel each other out depending on their phase relationships.
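
A small worked example, assuming the standard Hadamard gate applied twice to a qubit that starts in the 0 state, makes the cancellation concrete. Each final outcome can be reached through two intermediate paths, and it is the path amplitudes, not probabilities, that are summed. The function name is illustrative.

```python
import math

S = 1 / math.sqrt(2)
HADAMARD = [[S, S], [S, -S]]

def path_amplitudes(final):
    """Amplitudes of the two paths |0> -> |mid> -> |final> through two Hadamard gates."""
    return [HADAMARD[final][mid] * HADAMARD[mid][0] for mid in (0, 1)]

paths_to_0 = path_amplitudes(0)  # both approximately +0.5
paths_to_1 = path_amplitudes(1)  # approximately +0.5 and -0.5

amp0 = sum(paths_to_0)  # constructive interference, approximately 1
amp1 = sum(paths_to_1)  # destructive interference, 0

# For contrast: adding path probabilities, as for a classical coin flipped twice.
classical_p1 = sum(abs(a) ** 2 for a in paths_to_1)  # approximately 0.5

print(abs(amp0) ** 2, abs(amp1) ** 2, classical_p1)
```

The quantum result is certain (the qubit returns to 0), whereas adding probabilities would predict a 50/50 outcome. The difference comes entirely from the minus sign carried by one path.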

Quantum algorithms are designed to exploit constructive and destructive interference so that the correct solutions to a problem are amplified while incorrect ones are canceled out. This principle is at the heart of many quantum speedups. For example, in Grover’s algorithm for unstructured search, interference enhances the probability amplitude of the correct answer, allowing it to be found in roughly √N steps rather than N steps as in a classical search.
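
The amplitude amplification behind Grover’s algorithm can be simulated classically for small N. The sketch below tracks all N amplitudes directly, so it shows the interference pattern but not the quantum speedup. The function name and the choice of N = 64 with marked item 37 are illustrative.

```python
import math

def grover_success_probability(n_items, marked, iterations=None):
    """Simulate Grover iterations on a list of n_items real amplitudes."""
    amps = [1 / math.sqrt(n_items)] * n_items  # uniform superposition
    if iterations is None:
        iterations = math.floor(math.pi / 4 * math.sqrt(n_items))
    for _ in range(iterations):
        amps[marked] = -amps[marked]           # oracle: flip the sign of the marked item
        mean = sum(amps) / n_items
        amps = [2 * mean - a for a in amps]    # diffusion: reflect every amplitude about the mean
    return abs(amps[marked]) ** 2, iterations

probability, steps = grover_success_probability(64, marked=37)
print(steps, probability)  # 6 iterations, success probability above 0.99
```

After only 6 iterations the marked item holds almost all of the probability, compared with an average of about 32 lookups for a classical search over 64 unsorted items.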

Thus, interference serves as a form of quantum parallelism control, enabling a quantum computer to manipulate and refine its superpositions toward specific results without having to examine every possibility individually.

Quantum Measurement and the Collapse of the Wavefunction

Measurement is a critical and subtle process in quantum mechanics. When a qubit is measured, its wavefunction collapses from a superposition of states into one definite outcome—either 0 or 1—with probabilities determined by the squared magnitudes of its amplitudes.

This collapse is irreversible, and it is the only point at which quantum computation produces a definite classical result. Until measurement, all computation within a quantum processor remains in the realm of probability and superposition. The challenge of designing effective quantum algorithms lies in ensuring that the interference pattern of amplitudes is such that, upon measurement, the desired outcome appears with high probability.
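
The following sketch models an idealized single-qubit measurement: one outcome is drawn at random, and the state is replaced by the corresponding basis state. It assumes a noiseless, projective measurement. The function names and the example state are illustrative.

```python
import math
import random

def measure(state, rng):
    """Sample one outcome and return (outcome, collapsed_state)."""
    p0 = abs(state[0]) ** 2
    if rng.random() < p0:
        return 0, [1 + 0j, 0j]
    return 1, [0j, 1 + 0j]

def estimate_p1(state, shots, seed=0):
    """Estimate P(1) by preparing and measuring the same state many times."""
    rng = random.Random(seed)
    ones = sum(measure(state, rng)[0] for _ in range(shots))
    return ones / shots

state = [math.sqrt(0.75), 0.5j]     # P(0) = 0.75, P(1) = 0.25
print(estimate_p1(state, 20000))    # close to 0.25

rng = random.Random(1)
outcome, collapsed = measure(state, rng)
repeat, _ = measure(collapsed, rng)
print(outcome == repeat)            # True: a repeated measurement gives the same result
```

A single run yields only one bit, so the amplitudes themselves are never read out directly. They can only be estimated from the statistics of many repeated preparations.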

In practical quantum computing systems, measurements are carried out by coupling qubits to readout devices, such as microwave resonators for superconducting qubits or photodetectors that collect state-dependent fluorescence from trapped ions. These measurements must be performed delicately to avoid prematurely disturbing the quantum state, which is a major engineering and physical challenge.

Physical Realizations of Qubits

Theoretical models of qubits must ultimately be realized in physical systems to build a functioning quantum computer. Several platforms have been developed, each leveraging different physical phenomena to represent and manipulate qubits while minimizing errors due to decoherence.

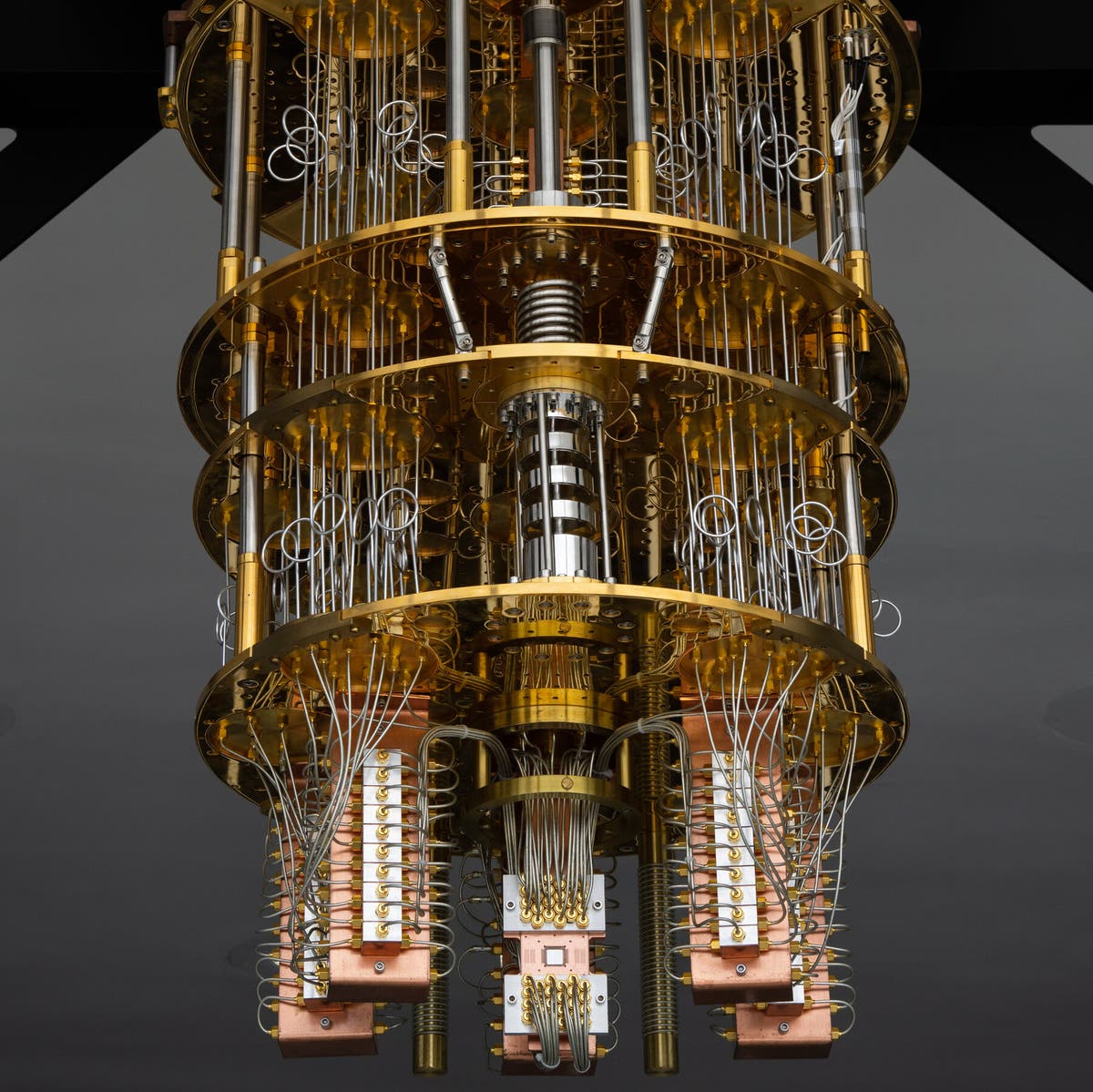

Superconducting qubits, used by companies such as IBM and Google, rely on macroscopic electrical circuits that behave quantum mechanically at cryogenic temperatures. These circuits consist of Josephson junctions, which allow quantum states of electrical current to form and be manipulated with microwave pulses.

Trapped ion qubits, employed by organizations like IonQ and Quantinuum (which grew out of Honeywell’s quantum computing division), use individual charged atoms confined by electromagnetic fields in vacuum chambers. Their quantum states are encoded in the electronic energy levels of the ions and manipulated using lasers.

Photon-based qubits use the polarization or phase of light particles as information carriers. These systems have advantages for communication and scalability, particularly in quantum networks and cryptography.

Other approaches, such as spin qubits in semiconductor quantum dots, topological qubits, and neutral atom systems, are being actively explored. Each platform has unique advantages and challenges, but all rely fundamentally on the quantum mechanical behavior of matter and energy.

Quantum Decoherence and Error Correction

One of the most significant challenges in building quantum computers is decoherence—the process by which quantum information is lost to the environment. Quantum states are delicate and easily disturbed by external noise, thermal fluctuations, or imperfect control. When a qubit interacts with its surroundings, it loses its superposition and entanglement, effectively reverting to classical behavior.

Quantum error correction (QEC) is the solution to this problem, but it presents formidable complexity. Unlike classical bits, which can be copied to create backups, quantum information cannot be cloned due to the no-cloning theorem. Instead, QEC encodes logical qubits into entangled states of multiple physical qubits, allowing errors to be detected and corrected indirectly.

One of the most promising methods is the surface code, which arranges qubits on a two-dimensional lattice and detects both bit-flip and phase-flip errors through repeated measurements of stabilizers, multi-qubit parity checks that reveal errors without revealing the encoded data. However, QEC requires significant overhead: hundreds or even thousands of physical qubits may be needed to represent a single logical qubit reliably.
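
The idea of correcting errors indirectly can be illustrated with the three-qubit bit-flip code, which is far simpler than the surface code and protects against a single bit flip only. The sketch below is a toy model: it stores the state as a dictionary from basis-state labels to amplitudes, and all function names are illustrative.

```python
def encode(alpha, beta):
    """Encode alpha|0> + beta|1> as alpha|000> + beta|111>."""
    return {(0, 0, 0): alpha, (1, 1, 1): beta}

def apply_x(state, qubit):
    """Flip one qubit in every basis component (a bit-flip error or its correction)."""
    flipped_state = {}
    for bits, amp in state.items():
        flipped = list(bits)
        flipped[qubit] ^= 1
        flipped_state[tuple(flipped)] = amp
    return flipped_state

def syndrome(state):
    """Parities (q0 xor q1, q1 xor q2), identical across all components of the state."""
    parities = {(b[0] ^ b[1], b[1] ^ b[2]) for b in state}
    if len(parities) != 1:
        raise ValueError("state is outside the model handled by this sketch")
    return parities.pop()

# Which qubit to flip back for each syndrome (None means no error detected).
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def correct(state):
    qubit = CORRECTION[syndrome(state)]
    return state if qubit is None else apply_x(state, qubit)

logical = encode(0.6, 0.8)
damaged = apply_x(logical, 1)        # bit-flip error on the middle qubit
print(syndrome(damaged))             # (1, 1)
print(correct(damaged) == logical)   # True
```

The syndrome identifies which qubit flipped but is the same for both components of the superposition, so reading it reveals nothing about the encoded amplitudes 0.6 and 0.8. This is how the code avoids both cloning and collapsing the logical state.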

Quantum Gates and Circuits

In quantum computation, operations on qubits are performed through quantum gates, analogous to logic gates in classical computing. However, unlike most classical gates, such as AND and OR, which discard information and cannot be run backwards, quantum gates are reversible unitary transformations that preserve the norm of the state vector and therefore the total probability of the system.

Basic single-qubit gates include the Pauli-X (analogous to a classical NOT gate), Pauli-Y, and Pauli-Z gates, as well as rotation gates that change the state vector’s orientation on the Bloch sphere. Multi-qubit gates such as the Controlled-NOT (CNOT) and Toffoli gates create entanglement and enable complex computation.

Quantum circuits are built by combining these gates into sequences that manipulate qubit states coherently. The outcome of a quantum algorithm depends on how these gates shape the interference pattern of amplitudes across the qubit register. In practical implementations, these gates are realized through precisely timed electromagnetic pulses, laser interactions, or other forms of quantum control, depending on the physical platform.
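
A two-gate circuit is enough to see these ideas at work. The sketch below assumes a two-qubit register whose amplitudes are listed in the order 00, 01, 10, 11, applies a Hadamard gate to the first qubit, and then a CNOT with the first qubit as control. The function names are illustrative.

```python
import math

def hadamard_on_first(state):
    """Apply a Hadamard gate to the first qubit of a two-qubit state."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]

def cnot_first_controls_second(state):
    """Flip the second qubit when the first is 1: swaps the 10 and 11 amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]

def norm_squared(state):
    return sum(abs(a) ** 2 for a in state)

start = [1.0, 0.0, 0.0, 0.0]                                  # |00>
bell = cnot_first_controls_second(hadamard_on_first(start))   # (|00> + |11>) / sqrt(2)

# Both gates are their own inverse, so running them in reverse order undoes the circuit.
undone = hadamard_on_first(cnot_first_controls_second(bell))

print(bell)                # amplitudes of about 0.707 on 00 and 11
print(norm_squared(bell))  # approximately 1: total probability is preserved
print(undone)              # approximately [1, 0, 0, 0]
```

The forward pass creates entanglement from an unentangled input, and the reverse pass recovers the input, which illustrates the reversibility of unitary gates.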

Quantum Algorithms and Their Physical Implications

The power of quantum computers is best illustrated through quantum algorithms that exploit physical phenomena like superposition and entanglement to achieve computational advantages.

One of the most celebrated examples is Shor’s algorithm for integer factorization, which threatens public-key encryption schemes such as RSA whose security rests on the difficulty of factoring large numbers. It does so by using superposition and interference to find the period of a modular exponentiation function, a task for which no efficient classical algorithm is known. The resulting speedup over the best known classical factoring methods is superpolynomial.
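
The link between period finding and factoring can be shown with a purely classical sketch. Here the period is found by brute force, which is exactly the step a quantum computer would accelerate. The rest is the standard number-theoretic post-processing. The function names and the example N = 15, a = 7 are illustrative.

```python
import math

def find_period(a, n):
    """Smallest r > 0 with a**r % n == 1, found by brute force."""
    if math.gcd(a, n) != 1:
        raise ValueError("a must be coprime to n")
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factors_from_period(a, n):
    """Try to split n using the period of a modulo n; return None if this a fails."""
    r = find_period(a, n)
    if r % 2 == 1:
        return None                   # odd period: pick a different a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None                   # a**(r/2) is -1 mod n: pick a different a
    return math.gcd(half - 1, n), math.gcd(half + 1, n)

print(find_period(7, 15))          # 4
print(factors_from_period(7, 15))  # (3, 5)
```

For large numbers the brute-force loop takes time exponential in the number of digits, which is why replacing it with quantum period finding matters.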

Grover’s algorithm, another milestone, accelerates search problems quadratically by amplifying the probability of correct solutions through interference. Quantum simulation algorithms allow physicists to model quantum systems directly—something that classical computers struggle with due to the exponential growth of the Hilbert space.

These algorithms are not merely mathematical tricks; they embody physical processes that harness the linearity and coherence of quantum evolution. Each step of a quantum algorithm corresponds to a precise manipulation of quantum states governed by Schrödinger’s equation.

Quantum Entanglement and Nonlocality in Computation

One of the most intriguing aspects of quantum computers is how they use entanglement to perform distributed computation. When qubits are entangled, their joint state contains correlations that cannot be explained by any local hidden variable theory, as demonstrated by violations of Bell’s inequalities.
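
One common form of Bell’s inequality is the CHSH inequality, which bounds a particular combination S of four correlations by |S| ≤ 2 for any local hidden variable theory. The sketch below computes S for the Bell state (|00⟩ + |11⟩)/√2, assuming each party measures an observable of the form cos θ · Z + sin θ · X. The angles are a standard choice that maximizes the violation, and the function names are illustrative.

```python
import math

def observable(theta):
    """cos(theta) * Z + sin(theta) * X as a real 2x2 matrix with eigenvalues +1 and -1."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, s], [s, -c]]

def correlation(theta_a, theta_b):
    """Expectation value of A(theta_a) tensor B(theta_b) in the state (|00> + |11>)/sqrt(2)."""
    a_op, b_op = observable(theta_a), observable(theta_b)
    amp = 1 / math.sqrt(2)
    state = {(0, 0): amp, (1, 1): amp}  # real amplitudes, so no complex conjugation is needed
    total = 0.0
    for (i, j), left in state.items():
        for (k, l), right in state.items():
            total += left * a_op[i][k] * b_op[j][l] * right
    return total

a, a_prime = 0.0, math.pi / 2
b, b_prime = math.pi / 4, 3 * math.pi / 4

chsh = (correlation(a, b) - correlation(a, b_prime)
        + correlation(a_prime, b) + correlation(a_prime, b_prime))
print(chsh)  # approximately 2.828, above the classical bound of 2
```

The computed value equals 2√2, the largest violation quantum mechanics allows for this inequality.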

In a quantum processor, entanglement gives qubits correlations that have no classical counterpart. These correlations cannot be used to transmit information faster than light, but they are a resource that quantum protocols can consume to achieve tasks that would otherwise be impossible or less efficient. Quantum teleportation, for instance, enables the transfer of a quantum state from one location to another using entangled qubits and classical communication. This process is a fundamental building block of quantum communication and distributed quantum computing networks.

The Quantum Hardware Landscape

Building a scalable quantum computer is one of the greatest engineering challenges of our time. Superconducting circuits, trapped ions, and photonic systems each require advanced control technologies and extreme operating conditions.

Superconducting qubits operate at temperatures close to absolute zero, where electrical resistance vanishes and quantum coherence can persist for microseconds or milliseconds. Maintaining such environments demands dilution refrigerators and ultra-low-noise electronics.

Trapped ion systems, while slower, exhibit longer coherence times and high-fidelity gate operations. They rely on precision lasers to manipulate internal energy levels and motional states of ions. Photonic systems, in contrast, can operate at room temperature and excel at transmitting quantum information over long distances, making them ideal for quantum networks.

The scalability of quantum hardware depends on overcoming decoherence, minimizing gate errors, and implementing efficient quantum error correction. Hybrid systems that combine different qubit types may eventually provide the optimal path toward large-scale, fault-tolerant quantum computing.

Quantum Coherence and the Time Evolution of Qubits

At the heart of quantum computation is the controlled time evolution of quantum states. According to Schrödinger’s equation, the time evolution of a closed quantum system is governed by a unitary operator determined by its Hamiltonian, the operator that represents the total energy of the system.
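
As a minimal sketch of such evolution, consider a single qubit with the illustrative Hamiltonian H = (ħω/2)·Z, for which the unitary U(t) = exp(−iHt/ħ) simply multiplies the two basis amplitudes by opposite phases. The value of ω and the function names are assumptions made for the example.

```python
import cmath
import math

def evolve(state, omega, t):
    """Apply U(t) = exp(-i H t / hbar) for H = (hbar * omega / 2) * Z."""
    return [
        cmath.exp(-0.5j * omega * t) * state[0],
        cmath.exp(+0.5j * omega * t) * state[1],
    ]

def prob_plus(state):
    """Probability of finding the qubit in (|0> + |1>)/sqrt(2)."""
    amplitude = (state[0] + state[1]) / math.sqrt(2)
    return abs(amplitude) ** 2

S = 1 / math.sqrt(2)
plus = [S, S]
omega = 1.0  # angular frequency in arbitrary units (illustrative)

for t in (0.0, math.pi / 2, math.pi):
    psi = evolve(plus, omega, t)
    # Probabilities in the 0/1 basis stay at 0.5; only the relative phase changes.
    print(t, abs(psi[0]) ** 2, prob_plus(psi))
```

The probability of finding the qubit in the equal superposition follows cos²(ωt/2): it starts at 1, falls to 0.5 at ωt = π/2, and reaches 0 at ωt = π, while the norm of the state is preserved throughout.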

Maintaining coherence over time is essential for quantum computation. Coherence time measures how long a qubit remains in a well-defined quantum state before interactions with the environment cause decoherence. Improving coherence times requires isolating qubits from noise while maintaining the ability to perform fast, accurate operations.
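
A simple way to picture coherence time is a pure dephasing model, in which the off-diagonal elements of the qubit’s density matrix decay exponentially with a time constant T2. The sketch below assumes this idealized model, with no energy relaxation and no other dynamics. The T2 value of 100 microseconds is a hypothetical figure chosen for illustration.

```python
import math

def dephased_density_matrix(t, t2):
    """Density matrix of an initial (|0> + |1>)/sqrt(2) state after pure dephasing for time t."""
    coherence = 0.5 * math.exp(-t / t2)
    return [[0.5, coherence], [coherence, 0.5]]

def prob_plus(rho):
    """Probability of finding the qubit in (|0> + |1>)/sqrt(2), for a real 2x2 density matrix."""
    return 0.5 * (rho[0][0] + rho[0][1] + rho[1][0] + rho[1][1])

T2_SECONDS = 100e-6  # hypothetical coherence time

for t in (0.0, 50e-6, 100e-6, 500e-6):
    rho = dephased_density_matrix(t, T2_SECONDS)
    print(t, prob_plus(rho))
```

The populations on the diagonal never change, yet the probability of recovering the original superposition decays from 1 toward 0.5, the value for a classical coin flip. This is the sense in which decoherence turns a quantum state into a classical mixture.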

Researchers employ a variety of techniques to enhance coherence, such as using error-protected subspaces, implementing dynamic decoupling pulse sequences, and engineering materials with reduced magnetic impurities. These efforts lie at the intersection of physics and materials science, pushing the limits of experimental precision.

Quantum Thermodynamics and Energy Considerations

Quantum computation also introduces new perspectives on thermodynamics and energy. In classical systems, computation consumes energy primarily through irreversible operations, as described by Landauer’s principle. Quantum computers, however, operate through reversible unitary evolution, which in theory can be energy-efficient because information is not destroyed.
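
Landauer’s principle sets the minimum heat dissipated when one bit of information is erased at temperature T to k_B · T · ln 2. The short calculation below evaluates this bound at two temperatures. The 15 mK figure is an illustrative value for a cryogenic environment, not a measurement from any specific system.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # exact value in the SI since 2019

def landauer_limit_joules(temperature_kelvin):
    """Minimum energy dissipated by erasing one bit at the given temperature."""
    return BOLTZMANN_J_PER_K * temperature_kelvin * math.log(2)

room = landauer_limit_joules(300.0)   # about 2.87e-21 J
cold = landauer_limit_joules(0.015)   # about 1.4e-25 J at 15 mK
print(room, cold)
```

The bound applies only to logically irreversible steps such as erasure, which is why reversible unitary operations are not subject to it in principle.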

In practice, however, maintaining coherence, cooling systems to millikelvin temperatures, and performing error correction require substantial energy. Understanding the thermodynamic cost of quantum operations is an emerging area of research known as quantum thermodynamics. It seeks to quantify how quantum information processing interacts with entropy, heat, and energy flow at the quantum level.

Quantum Communication and Cryptography

Beyond computation, the physics of quantum systems enables secure communication methods based on the principles of quantum mechanics. Quantum key distribution (QKD), for example, relies on the no-cloning theorem and the collapse of quantum states upon measurement to establish shared secret keys whose security, in the ideal case, does not depend on an adversary’s computing power.

In QKD protocols such as BB84, one party sends the other photons prepared in randomly chosen polarization states, and the receiver measures each photon in a randomly chosen basis. An eavesdropper who intercepts and measures the photons unavoidably disturbs some of them, introducing errors that the two parties can detect by comparing a sample of their results. Such systems demonstrate how quantum mechanics can be harnessed to base security on physical laws rather than computational assumptions.
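
The effect of eavesdropping can be seen in a toy simulation of BB84. The sketch below assumes ideal single photons, no channel noise, and the simplest attack, in which the eavesdropper measures every photon in a random basis and resends what she observed. Measuring in the wrong basis is modeled as a uniformly random outcome. All names are illustrative.

```python
import random

def bb84_error_rate(n_photons, eavesdrop, seed=0):
    """Fraction of mismatched bits in the sifted key (positions where both bases agree)."""
    rng = random.Random(seed)
    kept = errors = 0
    for _ in range(n_photons):
        bit = rng.randint(0, 1)
        sender_basis = rng.randint(0, 1)
        photon_bit, photon_basis = bit, sender_basis

        if eavesdrop:
            eve_basis = rng.randint(0, 1)
            if eve_basis != photon_basis:
                photon_bit = rng.randint(0, 1)  # wrong basis: random outcome
            photon_basis = eve_basis            # the photon is resent in the eavesdropper's basis

        receiver_basis = rng.randint(0, 1)
        if receiver_basis == photon_basis:
            received_bit = photon_bit
        else:
            received_bit = rng.randint(0, 1)    # wrong basis: random outcome

        if receiver_basis == sender_basis:      # sifting: keep matching-basis rounds only
            kept += 1
            errors += int(received_bit != bit)
    if kept == 0:
        raise ValueError("no rounds survived sifting")
    return errors / kept

print(bb84_error_rate(20000, eavesdrop=False))  # 0.0
print(bb84_error_rate(20000, eavesdrop=True))   # close to 0.25
```

In this model, intercepting every photon raises the error rate in the sifted key from zero to about 25 percent, which is what the two parties look for when they compare a sample of their bits.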

Entanglement-based quantum networks and future “quantum internets” will likely integrate with quantum computers to enable distributed computation, teleportation of quantum states, and novel forms of information sharing.

The Quest for Quantum Supremacy

The term “quantum supremacy” refers to the point at which a quantum computer performs a calculation that no classical computer can feasibly replicate within a reasonable time. In 2019, Google claimed to have achieved this milestone when its 53-qubit processor, Sycamore, completed a specific sampling task in 200 seconds that, by Google’s estimate, would have taken a state-of-the-art classical supercomputer about 10,000 years. IBM disputed that estimate at the time, and improved classical simulation methods have since narrowed the gap considerably.

While such demonstrations are specialized and do not yet represent practical applications, they support the fundamental physics of quantum computation and suggest that quantum processors can perform some tasks that are impractical for classical machines. The challenge now is to extend this advantage to useful, real-world problems in chemistry, materials science, cryptography, and optimization.

The Future of Quantum Physics and Computation

The path ahead for quantum computing remains both challenging and promising. On the physical front, researchers continue to seek more robust qubits, improved coherence times, and scalable architectures. On the theoretical side, new algorithms, error correction codes, and hybrid quantum-classical approaches are being developed to harness the unique strengths of quantum systems.

Perhaps most importantly, quantum computers will serve as tools for exploring quantum physics itself. They can simulate complex quantum systems, revealing insights into high-temperature superconductivity, quantum phase transitions, and the fundamental structure of space-time. In this way, quantum computers are not only built upon the principles of quantum mechanics but also act as laboratories for probing its deepest mysteries.

As we continue to master the delicate balance between coherence and control, the dream of practical quantum computation comes closer to reality. The physics that underlies these machines—once confined to thought experiments and theoretical papers—has become the foundation of an entirely new era of science and technology.

Conclusion

Quantum computers are more than advanced machines; they are physical embodiments of the laws of quantum mechanics. Their power arises from principles that challenge our everyday understanding of reality—superposition, entanglement, interference, and measurement. Each qubit, each gate, and each algorithm reflects a dance of probabilities governed by the fundamental equations of physics.

The journey to harness these phenomena is as much a scientific exploration as a technological one. It demands mastery over materials, precision engineering, and deep theoretical insight into the quantum world. Yet, the reward is immense: a new paradigm of computation that could revolutionize industries, unlock the secrets of complex systems, and deepen our understanding of the universe.

The physics behind quantum computers stands as one of humanity’s most ambitious endeavors—a testament to our ability to transform the abstract principles of the quantum realm into the engines of future discovery.