Artificial intelligence (AI) has traditionally been the domain of powerful servers and massive data centers, where machine learning models are trained and executed using thousands of CPUs and GPUs. However, a major transformation is underway. The rise of neural chips (specialized hardware designed to accelerate AI computation) is moving intelligence closer to where data is generated. This paradigm shift is enabling a new era of smart devices, from phones and cars to industrial robots and medical equipment, capable of performing advanced AI operations locally without constant reliance on cloud computing. Neural chips, an umbrella term that covers AI accelerators, neural processing units (NPUs), and the more brain-inspired neuromorphic processors, are redefining the boundaries between software and hardware, making artificial intelligence faster, more efficient, and more pervasive than ever before.

The Evolution of AI Hardware

To understand how neural chips are revolutionizing the field, it is important to trace the evolution of AI hardware. In the early days of computing, general-purpose processors such as CPUs handled all forms of computation, including the primitive machine learning algorithms of the time. However, as neural networks grew in complexity, traditional processors struggled to keep up with the massively parallel arithmetic required for training and inference. Graphics processing units (GPUs) changed the landscape. Originally developed for rendering graphics, they became programmable for general computation in the 2000s and proved ideal for parallel matrix operations; by the early 2010s they were accelerating deep learning training by an order of magnitude or more compared with CPUs.

Yet even GPUs, though powerful, were not specifically designed for neural computation. Their general-purpose nature and high energy consumption limited their deployment in smaller devices. This led to the creation of a new generation of hardware optimized for the structure and operations of neural networks. Companies began developing application-specific integrated circuits (ASICs), field-programmable gate arrays (FPGAs), and eventually neuromorphic processors, all tailored for AI workloads. These chips are designed to process large amounts of data simultaneously while minimizing energy use, enabling AI to move from centralized data centers into edge devices.

Understanding Neural Chips

Neural chips are hardware architectures built to mimic, accelerate, or efficiently execute neural network computations. Unlike conventional processors, which execute a largely sequential stream of instructions, neural chips are designed for massively parallel computation, typically performing thousands of arithmetic operations per clock cycle, or trillions per second. The fundamental unit of operation is often a multiply-and-accumulate (MAC): multiply an input by a weight and add the product to a running sum, which is exactly how an artificial neuron combines its inputs during both training and inference.
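
To make the multiply-and-accumulate idea concrete, here is a minimal Python sketch of a single artificial neuron computed the way a MAC unit would compute it: one multiply and one add per input, summed into an accumulator. The function names and the example weights are hypothetical, chosen only for illustration.

```python
def mac_neuron(inputs, weights, bias=0.0):
    """Pre-activation of one artificial neuron, computed as a chain of MACs."""
    acc = bias  # plays the role of the hardware accumulator register
    for x, w in zip(inputs, weights):
        acc += x * w  # one multiply-and-accumulate step
    return acc


def relu(z):
    """A common activation function applied after the MACs."""
    return max(0.0, z)


# A neuron with three inputs performs three MACs (hypothetical values).
out = relu(mac_neuron([1.0, 2.0, 3.0], [0.5, -0.25, 0.125], bias=0.25))
print(out)  # → 0.625
```

A layer with many neurons repeats this loop for every output, which is why neural chips replicate MAC units in large numbers and run them in parallel rather than looping as this sketch does.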

There are several types of neural chips, each with a distinct design philosophy. Some are digital accelerators that speed up existing neural network architectures by optimizing memory access and data flow. Others are neuromorphic chips inspired by the human brain, which use spiking neural networks (SNNs) and, in some designs, analog circuits to emulate biological neurons and synapses. Regardless of design, the goal remains the same: to enable fast, energy-efficient execution of AI models, often in environments where power and space are limited.

These chips also differ from CPUs and GPUs in how they handle data. Traditional processors spend significant time and energy moving data between memory and computation units, a limitation known as the “von Neumann bottleneck.” Neural chips address this by bringing computation and memory closer together: many place memory right next to the compute units, and some perform operations directly where the data resides. This latter approach, known as “in-memory computing,” can substantially reduce energy consumption and latency, making real-time AI processing feasible in edge devices.
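
One way in-memory computing has been realized, particularly in research designs built from resistive memory cells such as memristors, is an analog crossbar. Weights are stored as cell conductances, inputs are applied as row voltages, Ohm's law gives each cell's current, and Kirchhoff's current law sums those currents along each column, so a matrix-vector product appears as column currents without ever moving the weights. The sketch below is an idealized model under that assumption: it ignores device noise, wire resistance, and the analog-to-digital converters a real chip needs, and its values are in arbitrary units.

```python
def crossbar_mvm(voltages, conductances):
    """Idealized crossbar: column current I_j = sum over rows i of V_i * G[i][j]."""
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for v, row in zip(voltages, conductances):
        for j, g in enumerate(row):
            # Ohm's law gives each cell's current; adding it into the column
            # total models Kirchhoff's current law at the column wire.
            currents[j] += v * g
    return currents


# Two input rows, two output columns (hypothetical values).
currents = crossbar_mvm([1.0, 0.5], [[2.0, 1.0], [4.0, 0.0]])
print(currents)  # → [4.0, 1.0]
```

The point of the design is that the "weights" never travel: the physics of the array performs the multiply-accumulate where the data is stored.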

The Architecture of Neural Chips

At the heart of a neural chip is an architecture optimized for parallel computation and high data throughput. Many neural chips use a grid-like array of processing elements (PEs) that work in concert to perform the mathematical operations of neural networks. Each PE handles a small part of a large computation, and partial results are passed between neighboring PEs and combined across the array to produce each layer's output. This structure enables massive parallelism, which is essential for the large matrix multiplications found in deep learning.
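
A classic way to organize such a PE grid is a systolic array, in which operands flow rhythmically between neighboring PEs. The Python sketch below models an output-stationary variant: PE (i, j) keeps output C[i][j] in its own accumulator, row i of A enters from the left delayed by i cycles, and column j of B enters from the top delayed by j cycles, so A[i][k] and B[k][j] meet at PE (i, j) on cycle i + j + k. It is a timing model rather than a register-level simulation (it computes when operands meet instead of shifting them cycle by cycle), and the function name is hypothetical. Google's TPU, for example, is built around a systolic matrix unit, though it uses a weight-stationary arrangement rather than the one sketched here.

```python
def systolic_matmul(A, B):
    """Output-stationary systolic array model: returns (C, cycles)."""
    M, K, N = len(A), len(B), len(B[0])
    acc = [[0] * N for _ in range(M)]  # one accumulator inside each PE
    cycles = M + N + K - 2  # the last operand pair reaches PE (M-1, N-1) on cycle M+N+K-3
    for t in range(cycles):
        # Every PE works in the same cycle; the nested loops only emulate that.
        for i in range(M):
            for j in range(N):
                k = t - i - j  # which operand pair reaches PE (i, j) on cycle t
                if 0 <= k < K:
                    acc[i][j] += A[i][k] * B[k][j]  # one MAC in PE (i, j)
    return acc, cycles


C, cycles = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
print(C, cycles)  # → [[19, 22], [43, 50]] 4
```

Because every PE only talks to its neighbors, the wiring stays short and regular, which is what lets such arrays scale to many thousands of MAC units.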

A key architectural innovation is the reduction of data movement. In neural network workloads, much of the energy, often most of it, is spent not on computation itself but on transferring data between memory and compute units. Neural chips employ various techniques to mitigate this, such as local buffering, hierarchical memory, and near-memory processing. Some architectures embed small memory banks within the computational fabric so that each processing element can reach its data within a few cycles instead of waiting on long off-chip memory fetches.
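
The payoff of local buffering can be shown by counting memory traffic. The sketch below multiplies two n x n matrices twice: once with no on-chip reuse (every MAC fetches both operands from off-chip memory) and once with tiling, where T x T blocks of A and B are copied into a local buffer and each loaded element is reused T times. It rests on simplifying assumptions of its own: the output tile is assumed to stay in local storage, stores are not counted, and the tile size must divide n. The function name is hypothetical.

```python
def matmul_count_loads(A, B, tile=None):
    """Multiply square matrices and count element loads from off-chip memory."""
    n = len(A)
    C = [[0] * n for _ in range(n)]
    loads = 0
    if tile is None:
        # No reuse: each MAC fetches A[i][k] and B[k][j] from off-chip memory.
        for i in range(n):
            for j in range(n):
                for k in range(n):
                    loads += 2
                    C[i][j] += A[i][k] * B[k][j]
        return C, loads
    T = tile
    assert n % T == 0, "this sketch assumes the tile size divides n"
    for i0 in range(0, n, T):
        for j0 in range(0, n, T):
            for k0 in range(0, n, T):
                # Copy one T x T tile of A and one of B into the local buffer.
                a_tile = [row[k0:k0 + T] for row in A[i0:i0 + T]]
                b_tile = [row[j0:j0 + T] for row in B[k0:k0 + T]]
                loads += 2 * T * T
                # All MACs below read only from the local tiles.
                for i in range(T):
                    for j in range(T):
                        for k in range(T):
                            C[i0 + i][j0 + j] += a_tile[i][k] * b_tile[k][j]
    return C, loads


n = 8
A = [[i + j for j in range(n)] for i in range(n)]
B = [[i - j for j in range(n)] for i in range(n)]
C_naive, loads_naive = matmul_count_loads(A, B)
C_tiled, loads_tiled = matmul_count_loads(A, B, tile=4)
print(loads_naive, loads_tiled)  # → 1024 256
```

Both versions do the same n³ MACs and produce the same result, but off-chip loads drop from 2n³ to 2n³/T. Larger local buffers allow larger tiles and proportionally less traffic, which is the trade-off hardware designers tune.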

Moreover, neural chips use specialized interconnects, on-chip networks that efficiently route data between processing units. These interconnects are often designed to support sparse computation, in which many neurons are inactive (or many values are zero) at a given time, so work can be skipped to further improve efficiency. By focusing on the structure of neural network operations rather than general-purpose computation, neural chips can deliver large gains in performance per watt over CPUs, and often over GPUs, for the specific AI tasks they target.
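
Sparse computation is easiest to see at the level of a single dot product. Activation functions such as ReLU output exact zeros for many inputs, and a zero-gating processing element can skip the multiply (and the weight fetch) whenever an input is zero. The sketch below counts how many MACs actually run; the function name and example values are hypothetical.

```python
def sparse_dot(activations, weights):
    """Dot product that skips zero activations; returns (result, MACs performed)."""
    acc = 0
    macs = 0
    for a, w in zip(activations, weights):
        if a == 0:
            continue  # inactive input: no multiply, no weight fetch
        acc += a * w
        macs += 1
    return acc, macs


# Typical post-ReLU activations: most entries are zero (hypothetical values).
print(sparse_dot([0, 3, 0, 0, 2, 0], [5, 1, 7, 2, 4, 9]))  # → (11, 2)
```

Here only 2 of 6 MACs execute. The result is identical to a dense dot product, so the savings come with no loss of accuracy; the hardware cost is the logic needed to detect zeros and keep the array busy.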

The Rise of Edge AI

One of the most significant impacts of neural chips is their role in enabling edge AI: bringing artificial intelligence capabilities directly to devices rather than relying on cloud servers. Traditionally, running complex AI models required high computational power and abundant memory, resources found mainly in centralized data centers. However, edge devices such as smartphones, drones, and IoT sensors often operate under strict power and latency constraints. Neural chips bridge this gap by providing efficient local computation.

Edge AI offers several advantages. First, it reduces latency by processing data locally instead of transmitting it to a remote server, which is crucial for real-time applications like autonomous driving and augmented reality. Second, it enhances privacy because sensitive data can be analyzed on-device without being sent to the cloud. Third, it improves reliability by enabling operation even when connectivity is poor or unavailable.
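
A back-of-the-envelope calculation shows why latency matters for something like autonomous driving: a vehicle keeps moving while it waits for a result. The latencies below (100 ms for a cloud round trip, 10 ms for on-device inference) are illustrative assumptions, not measurements of any particular system.

```python
def distance_during_latency(speed_kmh, latency_ms):
    """Metres a vehicle travels before an inference result arrives."""
    speed_m_per_s = speed_kmh * 1000 / 3600  # convert km/h to m/s
    return speed_m_per_s * latency_ms / 1000


# Assumed latencies, for illustration only.
for label, latency_ms in [("cloud round trip (assumed)", 100), ("on-device (assumed)", 10)]:
    print(f"{label}: {distance_during_latency(100, latency_ms):.2f} m")
# → cloud round trip (assumed): 2.78 m
# → on-device (assumed): 0.28 m
```

At 100 km/h, every 10 ms of delay corresponds to roughly 0.28 m of travel, so under these assumptions moving inference on-device removes about 2.5 m of "blind" distance per decision.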

Modern neural chips like Apple’s Neural Engine, Google’s Edge TPU, and Qualcomm’s Hexagon DSP exemplify this shift. These processors allow mobile and embedded devices to perform complex tasks such as image recognition, natural language processing, and predictive analytics locally. As a result, features like facial recognition, voice assistants, and computational photography have become fast and responsive.

Neuromorphic Computing: Mimicking the Brain

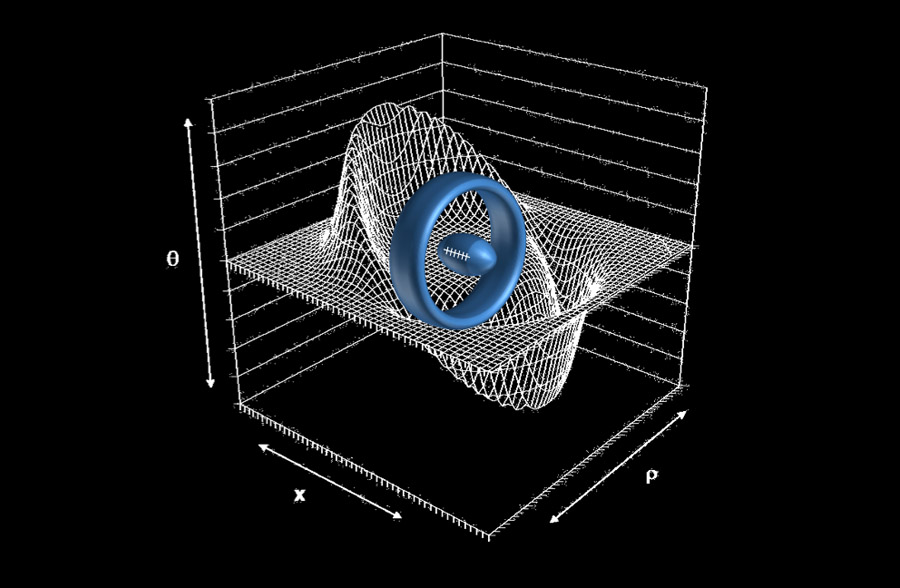

A particularly fascinating branch of neural chip development is neuromorphic computing, which seeks to emulate the structure and function of the human brain. Unlike conventional digital systems, neuromorphic chips use spiking neural networks, in which information is transmitted via discrete electrical spikes, similar to how biological neurons communicate. This approach promises very high energy efficiency for suitable workloads and the ability to process information in a more adaptive, event-driven manner.
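
The basic building block of many spiking neural networks is the leaky integrate-and-fire (LIF) neuron, a simplified model widely used in neuromorphic research. Its membrane potential leaks toward zero, integrates incoming current, and emits a spike when it crosses a threshold, after which it resets. The discrete-time sketch below uses hypothetical parameter values; real neuromorphic chips implement their own neuron models and parameters.

```python
def lif_neuron(input_currents, threshold=1.0, leak=0.9, v_reset=0.0):
    """Discrete-time leaky integrate-and-fire neuron; returns a 0/1 spike train."""
    v = v_reset  # membrane potential
    spikes = []
    for i_t in input_currents:
        v = leak * v + i_t  # leak a fraction of the potential, then integrate input
        if v >= threshold:
            spikes.append(1)  # fire a spike...
            v = v_reset       # ...and reset the membrane potential
        else:
            spikes.append(0)
    return spikes


print(lif_neuron([0.5, 0.5, 0.5, 0.0, 0.0, 1.2]))  # → [0, 0, 1, 0, 0, 1]
```

The output is sparse in time: information is carried by when spikes occur, and on steps without spikes no signal needs to be sent to downstream neurons.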

Neuromorphic processors such as Intel’s Loihi and IBM’s TrueNorth implement large populations of artificial neurons (about one million per chip for TrueNorth and roughly 130,000 for the first-generation Loihi) connected by programmable synapses. Their computation is event-driven: a neuron does work, and consumes significant energy, mainly when it receives or emits a spike, mirroring the sparse activity of the brain. This allows neuromorphic systems to perform sensory processing tasks, such as visual or auditory recognition, at a fraction of the power consumed by traditional processors.

The potential of neuromorphic computing extends beyond efficiency. Because these systems operate in ways analogous to biological brains, they can support forms of learning that are more flexible and adaptive. They can potentially enable machines to learn from continuous streams of sensory data in real time, an ability that current deep learning systems struggle with. Although still in experimental stages, neuromorphic chips represent a vision of computing where AI systems are not just faster but fundamentally more intelligent in how they process information.
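
One widely studied example of the adaptive learning this paragraph describes is spike-timing-dependent plasticity (STDP), a local rule in which a synapse strengthens when the presynaptic neuron fires shortly before the postsynaptic one and weakens when the order is reversed. Because the update depends only on the spike times of the two neurons it connects, rules of this kind suit on-chip learning from streaming data. The pair-based form below is a common textbook version; the amplitudes and time constant are hypothetical, and specific chips may implement different rules.

```python
import math


def stdp_delta(t_pre, t_post, a_plus=0.01, a_minus=0.012, tau=20.0):
    """Pair-based STDP weight change for one pre/post spike pair (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:   # pre fired before post: potentiation (strengthen)
        return a_plus * math.exp(-dt / tau)
    if dt < 0:   # post fired before pre: depression (weaken)
        return -a_minus * math.exp(dt / tau)
    return 0.0   # simultaneous spikes: no change in this simple form


print(stdp_delta(10.0, 15.0), stdp_delta(15.0, 10.0))
```

The exponential terms make the update largest for spikes that are close together in time and negligible for distant ones, so the synapse learns from causal-looking correlations without any global error signal.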

AI Training vs. Inference in Hardware

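AI computation can be broadly divided into two phases: training and inference. Training adjusts the parameters of a neural network using large datasets: the network repeatedly makes predictions, measures its error, and updates its weights via backpropagation. This process is computationally intensive and typically uses higher-precision arithmetic (often 32-bit or 16-bit floating point) than inference needs. Inference, on the other hand, is the application of a trained model to new data, such as recognizing objects in a photo or translating speech, and requires only a forward pass through the network.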
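
The difference in workload between the two phases shows up even for a single linear neuron. In the sketch below, inference is one forward pass with fixed parameters, while training runs many forward passes, computes gradients of a squared-error loss, and updates the parameters after every sample (plain stochastic gradient descent). The toy dataset, learning rate, and epoch count are hypothetical choices for illustration.

```python
def predict(w, b, x):
    """Inference: one forward pass with fixed parameters."""
    return w * x + b


def train(data, lr=0.05, epochs=200):
    """Training: repeated forward passes, gradient computation, and updates."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, y in data:
            err = predict(w, b, x) - y  # forward pass and error
            w -= lr * 2 * err * x       # gradient of err**2 with respect to w
            b -= lr * 2 * err           # gradient of err**2 with respect to b
    return w, b


data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0)]  # noiseless samples of y = 2x + 1
w, b = train(data)
print(round(w, 3), round(b, 3), round(predict(w, b, 3.0), 3))
```

Training here performs 600 forward passes plus gradient updates to learn two numbers; inference afterwards costs a single multiply-add. Scaled up to millions or billions of parameters, that asymmetry is why training hardware and inference hardware are built so differently.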

Neural chips are increasingly specialized for these two functions. High-performance accelerators like NVIDIA’s A100 GPU or Google’s Cloud TPU are optimized primarily for training large-scale models in data centers, offering massive parallelism and high memory bandwidth. In contrast, edge-oriented chips prioritize inference, focusing on low power consumption and reduced-precision arithmetic to fit within the energy constraints of mobile or embedded devices.

The trend toward hardware specialization is creating a diverse ecosystem where different chips handle different stages of the AI pipeline. This division allows for more efficient resource allocation—complex model training occurs in the cloud, while inference and adaptation happen on local devices using compact neural accelerators.

The Role of Quantization and Pruning

To make neural networks run efficiently on hardware, engineers employ optimization techniques such as quantization and pruning. Quantization reduces the numerical precision of weights and activations, for example converting 32-bit floating-point numbers to 8-bit integers or even fewer bits. Moving from 32-bit to 8-bit values cuts memory usage by a factor of four and replaces floating-point arithmetic with cheaper integer arithmetic, often with only a small loss of accuracy, although the impact depends on the model and task. Neural chips are designed to exploit such low-precision arithmetic, allowing them to achieve high performance while consuming less energy.
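
A minimal sketch of 8-bit quantization, assuming a symmetric, per-tensor scheme (one of several in common use; toolchains for specific chips may use asymmetric or per-channel variants instead). Each float is divided by a scale and rounded to an integer in [-127, 127]; the dot product then runs entirely on integer MACs with a wide accumulator, and a single floating-point rescale recovers the result. Function names and values are hypothetical.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: returns (int8 codes, scale)."""
    max_abs = max(abs(v) for v in values) or 1.0  # avoid a zero scale for all-zero input
    scale = max_abs / 127.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def int8_dot(qa, sa, qb, sb):
    """Integer MACs into a wide accumulator, then one floating-point rescale."""
    acc = 0  # on hardware this would typically be a 32-bit integer accumulator
    for x, y in zip(qa, qb):
        acc += x * y
    return acc * sa * sb


weights = [0.42, -1.37, 0.05, 0.88]  # hypothetical values
inputs = [1.0, 0.25, -0.6, 0.3]
qw, sw = quantize_int8(weights)
qx, sx = quantize_int8(inputs)
exact = sum(w * x for w, x in zip(weights, inputs))
approx = int8_dot(qw, sw, qx, sx)
print(qw, round(exact, 4), round(approx, 4))
```

Each weight now occupies one byte instead of four, and each quantized value differs from the original by at most half a scale step, which is the source of the small accuracy loss.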

Pruning, on the other hand, removes redundant or insignificant connections within a neural network, effectively simplifying the model. By eliminating weights that contribute little to the output, pruning reduces the number of computations required. Neural chips can take advantage of these sparse networks by activating only relevant processing elements, further improving speed and energy efficiency.
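
One common pruning criterion is weight magnitude: zero out the fraction of weights with the smallest absolute values, on the assumption that they contribute least to the output. The sketch below applies this to a flat list of weights. The function name and values are hypothetical, and in practice pruned models are usually fine-tuned afterward to recover accuracy.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude `sparsity` fraction of the weights."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)  # leave the input list unchanged
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


w = [0.8, -0.05, 0.3, 0.01, -0.9, 0.02, 0.6, -0.1]
print(magnitude_prune(w, 0.5))  # → [0.8, 0.0, 0.3, 0.0, -0.9, 0.0, 0.6, 0.0]
```

At 50% sparsity, half of the MACs involving these weights become multiplications by zero, which hardware that detects zeros can skip entirely.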

Together, quantization and pruning make deep learning models more suitable for deployment on compact, power-constrained hardware. These techniques help many advanced models, including convolutional neural networks and compact transformers, run in real time on mobile and embedded devices.

The Integration of AI and Hardware Design

The emergence of neural chips has blurred the line between software and hardware engineering. Designing efficient AI systems now requires co-optimization—developing algorithms and hardware in tandem to maximize performance. This integration has given rise to hardware-aware neural network design, where the structure of models is tailored to fit specific chip architectures.

For instance, neural architecture search (NAS) can automatically design networks that match the computational characteristics of a target chip, balancing accuracy and efficiency. Similarly, hardware designers create custom dataflows that minimize memory movement and adapt to the layer structure of neural networks. This symbiosis ensures that AI workloads are executed with maximal throughput and minimal energy waste.
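
Real hardware-aware NAS systems train or estimate the accuracy of many candidate networks and score them against measured latency or learned cost models for the target chip. The sketch below shows only the simplest ingredient: filtering candidate layer widths by a hardware budget. It assumes the MAC count of a convolution layer as a stand-in for latency and treats "widest layer that fits" as a crude stand-in for accuracy; both proxies, the function names, and the search space are hypothetical.

```python
def conv_macs(h, w, c_in, c_out, k):
    """MACs for a stride-1, 'same'-padded k x k convolution on an h x w feature map."""
    return h * w * c_in * c_out * k * k


def feasible_widths(h, w, c_in, k, candidates, mac_budget):
    """Keep candidate output-channel counts whose layer cost fits the budget."""
    return [c for c in candidates if conv_macs(h, w, c_in, c, k) <= mac_budget]


# Hypothetical search space and budget, for illustration only.
options = feasible_widths(32, 32, 16, 3, [16, 32, 64, 128], mac_budget=5_000_000)
chosen = max(options)  # crude stand-in for "most accurate model that fits"
print(options, chosen)  # → [16, 32] 32
```

Swapping in a different target chip changes the budget (or the cost model), and the search lands on a different architecture, which is the essence of co-designing models with the hardware that runs them.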

The concept of “AI-native hardware” is gaining traction, where processors are no longer general-purpose but are built from the ground up to support the operations most common in AI, such as matrix multiplications and activation functions. These architectures are now central to innovation in everything from consumer electronics to industrial automation.

The Expansion of AI Accelerators

Beyond neuromorphic and edge processors, AI accelerators are appearing across all levels of the computing hierarchy. In cloud environments, data centers deploy massive arrays of neural chips to handle deep learning workloads for millions of users simultaneously. These accelerators, whether built into GPUs or deployed as standalone ASICs, enable faster training of large language models and multimodal AI systems that power tools like virtual assistants, chatbots, and generative models.

At the edge, AI accelerators embedded in IoT devices, cameras, and sensors allow for real-time decision-making without cloud connectivity. In autonomous vehicles, neural chips process data from cameras, radar, and LiDAR in milliseconds, supporting safe navigation. In healthcare, neural processors inside portable devices analyze biosignals and imaging data on the spot, supporting early diagnosis and continuous monitoring.

The proliferation of AI accelerators is also reshaping industries such as manufacturing, agriculture, and energy. Smart factories use embedded neural chips to optimize production in real time, drones equipped with onboard AI systems monitor crops, and power grids utilize predictive algorithms to balance energy loads efficiently. As neural chips become more accessible and cost-effective, their integration into everyday devices will continue to expand, democratizing the benefits of AI.

Challenges in Neural Chip Development

Despite their promise, neural chips face significant technical and practical challenges. One major issue is the rapid pace of AI model evolution. Neural network architectures evolve faster than hardware design cycles, which can take years to complete. This mismatch means that chips optimized for one type of model may become less efficient as new algorithms emerge. To address this, designers are exploring reconfigurable architectures that can adapt to changing AI workloads.

Another challenge lies in balancing precision and performance. While low-precision arithmetic saves energy, it can introduce errors in sensitive applications like medical diagnostics or financial forecasting. Ensuring reliability and accuracy across a wide range of AI tasks requires careful calibration of hardware and software parameters.

Thermal management and scalability also present hurdles. As neural chips pack more transistors and processing units into small areas, heat dissipation becomes a major concern. Efficient cooling and packaging solutions are necessary to maintain stability without compromising energy efficiency. Furthermore, manufacturing advanced neural chips demands cutting-edge semiconductor technology, which is expensive and limited to a few foundries worldwide.

Ethical and Environmental Considerations

The widespread adoption of neural chips also raises ethical and environmental questions. On one hand, moving AI processing to edge devices can improve privacy by keeping data local. On the other, it enables pervasive surveillance if misused in cameras and monitoring systems. Balancing innovation with privacy protection requires transparent design and regulatory oversight.

From an environmental perspective, neural chips offer both opportunities and challenges. For AI workloads, they can be far more energy-efficient than general-purpose processors, which can reduce the carbon footprint of each AI computation. However, the production and disposal of semiconductor devices contribute to electronic waste and resource consumption. Sustainable chip design, recycling, and energy-efficient manufacturing processes will be essential to mitigate these effects.

The Future of Neural Chips and AI Hardware

The future of neural chips lies in continued miniaturization, integration, and innovation. As fabrication technologies advance toward smaller transistor nodes, neural chips will become more powerful and energy-efficient. We can expect to see heterogeneous computing platforms where CPUs, GPUs, and neural accelerators coexist seamlessly, each handling the tasks best suited to their architecture.

Research is also moving toward three-dimensional chip stacking, where multiple layers of computation and memory are integrated vertically, reducing data movement and increasing bandwidth. This could lead to substantial improvements in performance and energy efficiency for AI workloads. At the same time, advances in materials science, such as memristors and phase-change materials, are enabling new forms of analog computing that further blur the distinction between memory and logic.

In the longer term, the convergence of neuromorphic computing, quantum computing, and traditional AI accelerators may usher in a new era of hybrid intelligence. Such systems could combine the adaptability of biological brains, the precision of digital logic, and the power of quantum parallelism to tackle problems that are currently beyond reach. Neural chips are likely to play a central role in this evolution, potentially serving as a bridge between artificial and biological intelligence.

Conclusion

Neural chips represent one of the most transformative developments in the history of computing. By embedding intelligence directly into hardware, they are enabling machines to think, perceive, and act with unprecedented speed and efficiency. From smartphones and autonomous vehicles to healthcare devices and industrial systems, these specialized processors are reshaping the landscape of technology and bringing AI from the cloud to the physical world.

Their impact extends beyond performance gains. Neural chips are redefining how we design algorithms, architectures, and even entire computing systems. They embody the convergence of hardware and intelligence, signaling a future where computation is no longer a distant process but an intrinsic part of the environment around us. As neural chip technology continues to evolve, it promises not only to enhance artificial intelligence but also to transform the very nature of how humans interact with machines.

In this emerging era of intelligent hardware, the boundaries between data, computation, and decision-making are dissolving. The world is moving toward devices that not only sense and respond but also learn and reason—ushering in a new age of embedded intelligence where AI is no longer confined to digital abstraction but becomes a tangible, integrated element of reality itself.