For nearly two centuries, photography has been both an art and a science: light captured on plates and film, moments frozen in time. From the daguerreotype to the DSLR, cameras evolved dramatically, but the act of taking a photo remained fundamentally human. A person framed the shot, adjusted the settings, clicked the shutter. Even as digital cameras and smartphones emerged, the photographer remained at the center of the creative process.

Then came artificial intelligence.

In the space of just a few years, AI has done more than upgrade photography—it has redefined it. Photos are no longer just captured; they are interpreted, enhanced, even imagined by machines. What we now call a “photograph” might be part memory, part reality, and part machine-made vision. AI doesn’t just help us take better pictures—it challenges us to rethink what a picture even is.

We’re witnessing the birth of a new photographic era, one shaped not just by lenses and light sensors, but by algorithms, neural networks, and billions of data points.

Seeing Through Silicon Eyes

At the heart of this transformation is a simple but profound truth: cameras today no longer “see” the way they used to.

Traditional cameras are passive observers. They take in light, record color, and store a static image. But AI-driven cameras are active interpreters. They understand scenes. They recognize faces. They can isolate objects, track motion, and even anticipate what you might want to photograph next. When you lift a smartphone to take a photo, AI is already at work—adjusting exposure in real time, identifying people, removing noise, enhancing detail, even correcting lens distortions on the fly.

What was once the domain of professional photographers and manual post-processing is now built into the camera itself, operating silently in the background. Computational photography, increasingly powered by AI, fuses multiple images taken at different exposures, focus distances, and even moments to create a single, “perfect” shot, one that no single exposure could capture.
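To make “fusing exposures” concrete, here is a minimal sketch of one simple technique: a per-pixel weighted average in which each frame counts most where it is neither crushed to black nor blown out to white. It is loosely modeled on the “well-exposedness” weight from Mertens, Kautz, and Van Reeth’s exposure fusion, stripped of that method’s multi-scale blending and its contrast and saturation terms. It assumes the frames are already aligned, grayscale, flattened into lists, and normalized to [0, 1]. The function names and the sigma of 0.2 are illustrative choices, and this is not what any Pixel, iPhone, or Galaxy camera actually runs.

```python
import math
from typing import List


def well_exposedness(value: float, sigma: float = 0.2) -> float:
    """Gaussian weight that favors mid-tone pixels (values near 0.5)."""
    return math.exp(-((value - 0.5) ** 2) / (2 * sigma ** 2))


def fuse_exposures(frames: List[List[float]], sigma: float = 0.2) -> List[float]:
    """Blend aligned frames pixel by pixel, weighting each by how well exposed it is.

    Assumes every frame is aligned, grayscale, flattened, and normalized to [0, 1].
    """
    if not frames:
        raise ValueError("need at least one frame")
    n = len(frames[0])
    if any(len(f) != n for f in frames):
        raise ValueError("all frames must have the same number of pixels")

    fused = []
    for i in range(n):
        weights = [well_exposedness(f[i], sigma) for f in frames]
        total = sum(weights)  # strictly positive for inputs in [0, 1]
        # Weighted average: the result always lies between the darkest and brightest input.
        fused.append(sum(w * f[i] for w, f in zip(weights, frames)) / total)
    return fused


dark = [0.05, 0.10, 0.45]
bright = [0.55, 0.90, 0.98]
print(fuse_exposures([dark, bright]))
```

In this example the first fused pixel comes out at roughly 0.51: the bright frame’s near-mid-tone 0.55 receives far more weight than the dark frame’s nearly black 0.05. Real pipelines also align handheld frames and blend at several scales to avoid halos, which this sketch deliberately leaves out.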

Google’s Pixel phones popularized this concept with features like Night Sight, which merges a burst of frames and uses machine learning to pull light out of darkness without a flash. Apple’s iPhones analyze each scene with their A-series chips to decide which frames to merge and which details to enhance. Samsung’s Galaxy line can now remove reflections from glass or erase photobombers with a tap.

This is photography not as documentation, but as intelligent synthesis.

The Camera That Understands You

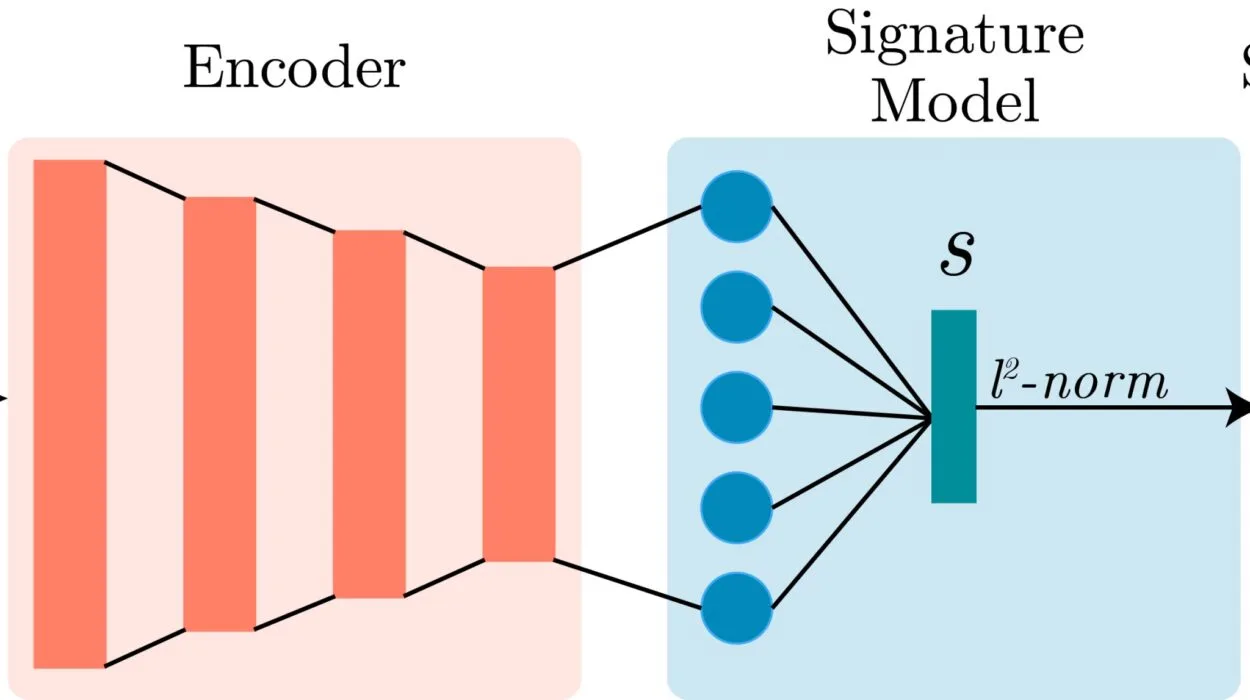

One of AI’s most profound impacts on photography is personalization. Modern AI-enhanced cameras don’t just capture better images—they learn from you.

Your habits, your preferences, your style—all can be modeled by algorithms. Do you prefer portraits with shallow depth of field? Landscapes with high saturation? Black-and-white street shots with heavy contrast? The system adapts. AI can apply those preferences in real time or learn over weeks to adjust post-processing to match your taste.

This level of personalization doesn’t just live in smartphones. Professional editing platforms like Adobe Lightroom and Photoshop now include features powered by Adobe Sensei, the company’s AI and machine-learning technology, which can analyze your photos and suggest adjustments.

Even camera manufacturers are adapting. Sony’s latest mirrorless cameras include AI-based subject tracking that can lock onto a bird’s eye or a dancer’s hand with uncanny precision. Nikon and Canon have developed deep-learning autofocus systems trained on vast datasets of movement and anatomy.

Photography, once an external expression of human vision, is becoming an internal feedback loop—cameras learning how we see, and then helping us see more clearly.

A New Language of Light

AI doesn’t just enhance what’s visible—it reveals what we can’t see.

In scientific and medical fields, AI-powered imaging has pushed the boundaries of what’s observable. Super-resolution microscopy, increasingly aided by neural networks, can visualize structures within living cells that are finer than the diffraction limit that constrains conventional light microscopes. In astronomy, AI helps process petabytes of raw data from telescopes to build composite images of distant galaxies and nebulae: photographs not of what the human eye could see, but of what exists beyond our natural senses.

In environmental science, AI-enhanced drones use multispectral imaging to detect deforestation, soil erosion, and illegal mining—producing “photographs” that reveal data invisible to the naked eye. In wildlife conservation, trail cameras equipped with AI can differentiate between species, count populations, and even identify individual animals by their markings.
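One widely used way multispectral data becomes a meaningful “photograph” is the Normalized Difference Vegetation Index (NDVI), which compares near-infrared and red reflectance. Healthy vegetation reflects strongly in near-infrared and absorbs red light, so cleared or degraded land shows a lower value. The sketch below is illustrative only. It assumes two co-registered bands given as flat lists of non-negative reflectance values, and the loss-flagging helper and its 0.2 threshold are hypothetical. It does not describe any particular drone’s software.

```python
from typing import List


def ndvi(nir: List[float], red: List[float]) -> List[float]:
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red); lies in [-1, 1] for non-negative inputs."""
    if len(nir) != len(red):
        raise ValueError("bands must have the same number of pixels")
    values = []
    for n, r in zip(nir, red):
        total = n + r
        # A pixel with no signal in either band gets 0 instead of a division error.
        values.append(0.0 if total == 0 else (n - r) / total)
    return values


def flag_vegetation_loss(before: List[float], after: List[float], drop: float = 0.2) -> List[int]:
    """Indices of pixels whose NDVI fell by more than `drop` (hypothetical threshold)."""
    return [i for i, (b, a) in enumerate(zip(before, after)) if b - a > drop]


before = ndvi([0.50, 0.45, 0.30], [0.08, 0.10, 0.25])
after = ndvi([0.50, 0.20, 0.30], [0.08, 0.18, 0.25])
print(flag_vegetation_loss(before, after))
```

In this toy example only the middle pixel, whose near-infrared reflectance collapses between the two captures, is flagged. Comparing NDVI maps of the same area over time is one simple way a sudden loss of vegetation can stand out in imagery that looks unremarkable to the naked eye.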

These are not just pictures. They are visual data narratives.

Even in art, AI is changing the visual vocabulary. Applications like Runway ML, Artbreeder, and DALL·E use deep generative models to create “photos” of people who don’t exist, places that never were, and moments that were never captured. These aren’t composites or Photoshop tricks. They’re entirely machine-generated, pixel by pixel, from textual prompts or sketches. The lines between photograph, painting, and synthetic image have all but vanished.

The camera is no longer a tool of representation—it has become a tool of imagination.

Editing Without Touching

Perhaps the most radical shift AI brings to photography is the ease and speed of post-processing.

What once took hours in the darkroom, or in Lightroom, can now happen in seconds. Remove shadows? Done. Replace skies? One click. Change the time of day? AI can do it. Swap expressions in a group photo so everyone is smiling? Easy. Upscale a low-resolution image? AI can synthesize plausible fine detail, often convincingly enough to pass for a high-resolution original, even though that detail is inferred rather than recovered.

Companies like Skylum, with their AI-powered Luminar software, offer features like “Accent AI” that enhance photos automatically by understanding the content of the image. Adobe’s Generative Fill uses AI to expand photos beyond their borders or remove large objects while filling in the background seamlessly.

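Forensic photography benefits, too. AI tools can sharpen blurry surveillance images, enhance facial details, and even reconstruct partially damaged photographs from historical archives. Because these tools infer missing detail rather than recover it, however, their output must be treated with caution as evidence.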

But such power also raises ethical questions. At what point does enhancement become manipulation? When does convenience cross into deception?

The Ethics of the Artificial Image

The very nature of truth in photography is being reshaped. Historically, photographs were trusted as “proof” of a moment’s existence. But in the AI age, the line between real and fake grows faint.

Deepfakes, AI-generated videos and images of real people saying or doing things they never did, have already begun to erode public trust. Entire faces can now be replaced in real-time video. Backgrounds can be altered, lighting changed, expressions modified, all automatically and often convincingly enough to escape casual detection.

This isn’t just a concern for politics and media. In personal photography, apps offer “beautification” features that smooth skin, alter body shapes, and brighten eyes by default. What we present to the world, even in casual selfies, is increasingly curated by algorithms.

The result is a tension between authenticity and aesthetics. Are we documenting reality, or creating a pleasing illusion of it?

Photographers now grapple with questions that once belonged to philosophers. Is a machine-enhanced image less true? Does the intent of the photographer matter if the result is AI-generated? Can synthetic images carry the same emotional weight as real ones?

There are no simple answers—only a growing need for transparency and visual literacy in a world where seeing is no longer believing.

Photography as Collaboration

Despite the philosophical unease, many artists and photographers are embracing AI not as a threat, but as a collaborator.

Photographer Trevor Paglen uses AI to explore how machines “see” the world, creating haunting images based on facial recognition algorithms. Artist Refik Anadol turns neural network data into swirling visual tapestries that blend photography, memory, and machine hallucination.

Photographers today can train their own models, feed them datasets of images, and let the AI generate new compositions. The process is less about control and more about dialogue. The artist proposes a seed; the machine grows it into something unexpected.

This collaborative creativity opens new doors. AI can imagine alternate realities, explore new aesthetics, and challenge human assumptions about composition, lighting, and form. It becomes not just a tool, but a muse.

As with any technology, what matters is the hand—and the mind—behind it.

Memory in the Age of Algorithms

Photography has always been about memory. From faded Polaroids in dusty albums to digital galleries on our phones, we use images to hold onto what we’ve lived. But AI is altering even that.

Google Photos now uses AI to recognize faces across decades, group events by theme, and suggest “memories” to revisit. Meta’s AI generates highlight reels of your life from thousands of scattered images. Your phone might remind you: “This day, 5 years ago…” complete with AI-curated music and edits.

We are no longer the sole curators of our past. Algorithms decide which moments matter most, which images deserve resurrection. They can stitch together slideshows of people who are gone. They can animate still images of loved ones. They can make memories speak.

This automation brings both comfort and discomfort. AI can help preserve and recall forgotten joys—but it can also reframe, edit, or omit parts of our history without us even noticing.

What happens when we outsource our memory to machines?

A Glimpse into the Future Frame

Where is AI-driven photography headed?

Emerging technologies suggest a future that borders on the surreal. Smart glasses, like the Ray-Ban Meta glasses, are beginning to incorporate AI assistants that can identify objects, translate text, and take photos with voice commands. In the near future, such devices may record not just images but context (sound, emotion, even biometric data), layering photos with dimensions we’ve never had before.

Augmented reality (AR) will blur the line between photograph and experience. You might look at an image and hear the sounds it captured, feel the temperature of that day, see other angles rendered by generative AI. Photos could become immersive time capsules—360-degree memory palaces built from neural reconstructions.

AI may also enable “predictive photography”: cameras that know what you’re likely to shoot next and prepare settings accordingly, or that capture moments retroactively by constantly recording and saving only the best, most meaningful seconds.

In such a world, the shutter button may disappear entirely. Photography becomes not something you do, but something that happens around you—an ambient act of memory-making, orchestrated by machines.

Conclusion: When Machines Dream in Light

The story of photography has always been one of evolution. But with AI, the shift feels less like a step and more like a metamorphosis.

We are moving from cameras that see the world to cameras that understand it. From editing pixels to generating realities. From capturing moments to anticipating them.

Yet amid this transformation, the essence of photography endures. It is still, at its core, a way of seeing—a way of feeling. AI may change the tools, but the desire to preserve, to express, to explore, remains profoundly human.

Perhaps the future of photography is not about choosing between man and machine, but learning how they can dream together. Because in the hands of both, light becomes language. And in that language, new stories are waiting to be told.