In the earliest flickers of civilization, humans built tools from stone and fire to shape their world. Today, we’re building something far more elusive—an intelligence that is not our own. Artificial Intelligence, or AI, has moved from the pages of science fiction into the fabric of everyday life. From virtual assistants whispering directions to cars that drive themselves, AI is no longer a curiosity. It is a revolution.

But what exactly is AI? Is it a machine that thinks like a human? A piece of code that mimics decision-making? A sentient robot with dreams of world domination? The answer is more nuanced, and far more interesting.

To understand AI, we must first strip away the mystique. AI is not magic, nor is it consciousness in a box. At its core, AI is mathematics, data, and algorithms. It is a system that learns patterns from information and uses those patterns to make predictions or decisions. But from this foundation emerges something profound—a machine that, in specific ways, can outperform even the human brain.

The Birth of Machine Intelligence

The idea of artificial intelligence isn’t new. Philosophers and inventors have speculated for centuries about mechanical minds. In the 1950s, that dream began to solidify into science. Mathematician Alan Turing proposed a question that still haunts the field: Can machines think?

Turing’s “Imitation Game,” proposed in his 1950 paper and now known as the Turing Test, became one of the first serious explorations of whether a machine could convincingly mimic human responses. A few years later, John McCarthy coined the term “Artificial Intelligence” in the proposal for the 1956 Dartmouth Conference, the event widely considered the birth of the field.

In those early days, optimism was rampant. Researchers believed human-level AI was just around the corner. But intelligence proved harder to engineer than anticipated. Progress stalled, funding dried up, and the field entered what became known as the “AI Winter.”

That winter did not come only once. Over the following decades the field cooled and thawed repeatedly, with each brief thaw sparked by a new breakthrough. Chess-playing programs, expert systems for medical diagnosis, and basic natural language tools all promised to fulfill the AI dream, but each eventually revealed its limits.

Then came a renaissance.

The Learning Machine

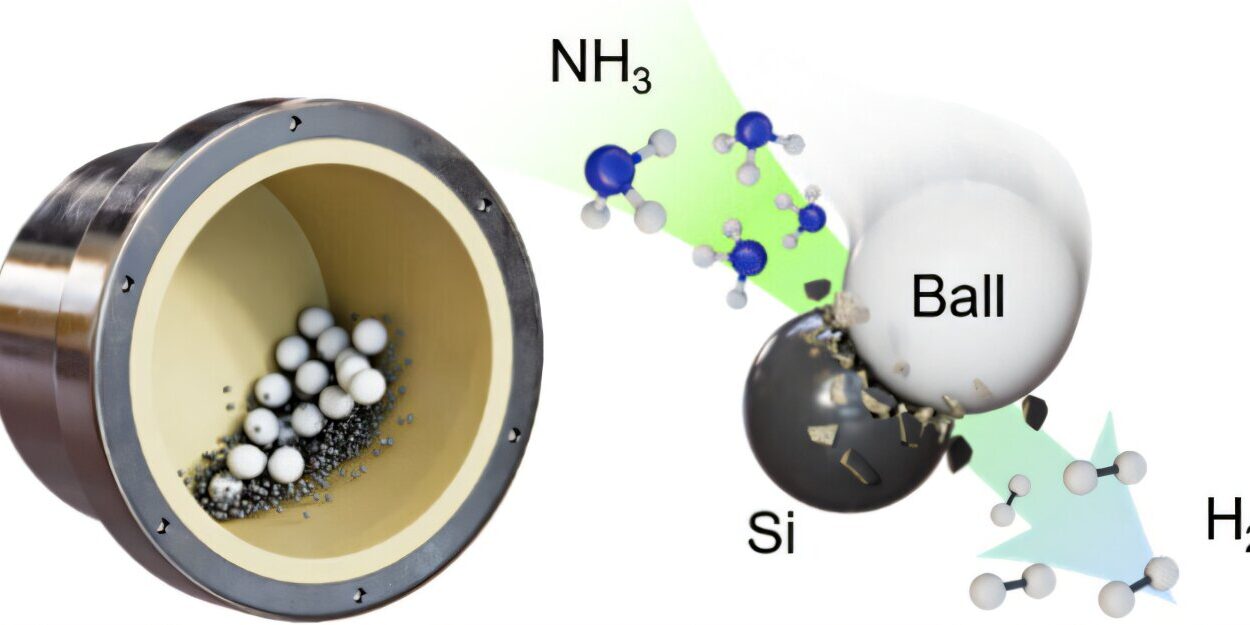

The key that unlocked AI’s modern power was not better rules—it was learning. Instead of telling machines exactly what to do in every situation, researchers began teaching them how to learn from data. This shift gave rise to machine learning: a subset of AI where algorithms improve automatically through experience.

Rather than hard-coding knowledge, developers fed systems vast datasets. A machine learning algorithm sifts through this data, finds patterns, and builds models that generalize to new situations. Show it thousands of cat photos, and it learns to recognize cats—even ones it has never seen before.
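To make “learning from data” concrete, here is a minimal sketch in Python of one of the oldest learning algorithms, the perceptron. It is far simpler than the systems that recognize cats in photos, and the two features and every training value below are invented for illustration. Nobody writes the rule for telling the classes apart: the program nudges its weights whenever it gets a training example wrong, then classifies examples it has never seen.

```python
# Toy training data: each example is (features, label).
# The features are hypothetical, e.g. (ear pointiness, whisker length) on a 0..1 scale.
# Label 1 means "cat", 0 means "not cat". All numbers are invented for illustration.
TRAINING_DATA = [
    ((0.9, 0.8), 1),
    ((0.8, 0.9), 1),
    ((0.7, 0.7), 1),
    ((0.2, 0.1), 0),
    ((0.1, 0.3), 0),
    ((0.3, 0.2), 0),
]


def predict(weights, bias, features):
    """Return 1 if the weighted sum of the features is above zero, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if total > 0 else 0


def train(data, epochs=20, learning_rate=0.1):
    """Perceptron learning rule: adjust the weights only when a prediction is wrong."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for features, label in data:
            # error is 0 for a correct prediction, +1 or -1 for a wrong one
            error = label - predict(weights, bias, features)
            weights = [w + learning_rate * error * x for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


weights, bias = train(TRAINING_DATA)

# Examples the model never saw during training.
print(predict(weights, bias, (0.85, 0.75)))  # → 1 ("cat")
print(predict(weights, bias, (0.15, 0.2)))   # → 0 ("not cat")
```

The decision rule the program ends up with came from the examples, not from a programmer. Modern systems broadly follow the same loop (predict, measure the error, adjust) with millions or billions of weights instead of two.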

This approach exploded in effectiveness with the arrival of faster processors, especially graphics processing units (GPUs), and, more crucially, the internet. Suddenly, machines could be trained on massive amounts of data: millions of images, voices, emails, and more. The age of deep learning had arrived.

Deep learning uses artificial neural networks, layered systems loosely inspired by the brain’s structure. These networks learn hierarchical patterns: in an image, early layers respond to edges, later layers to shapes, and the deepest layers to whole objects. They can translate languages, help drive cars, even generate music. In general, larger networks trained on more data tend to be more capable.
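The idea of layers building on one another can be sketched with a tiny two-layer network in Python. This is a toy that assumes hand-picked weights rather than learned ones: the hidden layer computes two simple features (roughly “either input is on” and “both inputs are on”), and the output layer combines them into “exactly one input is on” (XOR), a pattern that no single neuron of this kind can represent on its own.

```python
import math


def sigmoid(z):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weights, biases):
    """One fully connected layer: each neuron takes a weighted sum of all inputs, then a sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


# Hand-picked weights (NOT learned) so the example is easy to follow.
# Hidden layer: neuron 0 acts like OR, neuron 1 acts like AND.
HIDDEN_WEIGHTS = [[20.0, 20.0], [20.0, 20.0]]
HIDDEN_BIASES = [-10.0, -30.0]

# Output layer: "OR but not AND", i.e. XOR, built from the hidden features.
OUTPUT_WEIGHTS = [[20.0, -20.0]]
OUTPUT_BIASES = [-10.0]


def network(x1, x2):
    """Run both layers and round the final activation to 0 or 1."""
    hidden = layer([x1, x2], HIDDEN_WEIGHTS, HIDDEN_BIASES)
    output = layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)
    return round(output[0])


for a in (0, 1):
    for b in (0, 1):
        print(a, b, network(a, b))
# prints:
# 0 0 0
# 0 1 1
# 1 0 1
# 1 1 0
```

In a real deep learning system the weights are not chosen by hand. They are learned from data, typically with backpropagation, and the intermediate features are whatever the training process finds useful.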

By the 2010s, deep learning had transformed AI from a promising concept into a juggernaut of industry. Virtual assistants like Siri and Alexa became household names. Google Translate leapt in fluency. Image recognition matched or beat human accuracy on some benchmarks. AI was no longer a dream. It was in your pocket.

What AI Can (and Can’t) Do Today

Despite its impressive feats, today’s AI remains narrow. It can perform specific tasks with incredible accuracy—like diagnosing cancer from medical scans or recommending movies you’re likely to enjoy—but it doesn’t understand these tasks the way a human does.

This kind of AI is known as narrow AI or weak AI. It’s called “weak” not because it’s ineffective, but because it lacks general understanding. A deep learning model trained to identify skin cancer can outperform doctors in some cases, but ask it to explain its reasoning or understand the concept of illness, and it draws a blank.

Still, narrow AI is transforming industries.

In healthcare, AI reads radiology images, sifts through genomic data, and flags early signs of disease. In finance, it detects fraud, automates trades, and analyzes risk. In agriculture, it predicts crop yields, optimizes irrigation, and monitors livestock.

AI also fuels entertainment—from TikTok’s addictive algorithm to Netflix’s eerily accurate recommendations. In manufacturing, robots equipped with AI inspect quality, predict failures, and assemble products with precision. In logistics, AI finds optimal routes, forecasts demand, and keeps global supply chains humming.

But the limits are real. AI systems often fail in unexpected situations, require enormous datasets, and can be shockingly brittle. A self-driving car might perform flawlessly for months—until a plastic bag is mistaken for a rock, or a snowy road throws off its sensors.

Even more troubling is the black-box nature of many AI systems, especially deep neural networks: we don’t always know why an AI made a particular decision. In high-stakes areas like criminal justice or medicine, this lack of transparency can be dangerous.

Bias, Ethics, and the Human Factor

AI is not immune to the biases of the world it learns from. If a training dataset reflects societal inequality, the AI will too. This has already led to serious consequences—facial recognition systems misidentifying people of color, hiring algorithms penalizing women, and predictive policing tools disproportionately targeting minority communities.

These failures aren’t just technical glitches—they are ethical landmines.

AI reflects our values, our priorities, and our blind spots. That means building ethical AI requires more than engineers. It demands input from sociologists, ethicists, legal experts, and affected communities.

Transparency, fairness, and accountability are not optional. They must be baked into the very structure of AI systems. This is a new kind of responsibility, one that grows more urgent as AI weaves itself deeper into the machinery of society.

The question isn’t just “What can AI do?” but “What should AI do?” And who decides?

The Dream of General Intelligence

Beneath all this lies the grand question: Can we build a machine that thinks like a human—not just in one domain, but across all of them?

Artificial General Intelligence (AGI) is the idea of an AI that can reason, learn, and adapt across a broad range of tasks, just like you and me. AGI could solve problems it hasn’t seen before, transfer knowledge from one domain to another, and even pursue its own goals.

We are not there yet.

Despite huge leaps in capability, today’s most advanced systems, including the large language models behind chatbots like ChatGPT, still lack true understanding. They don’t have beliefs, desires, or common sense in the way humans do. They process symbols and patterns, not meaning.

But the line is blurring. Some argue that models with billions of parameters and exposure to enormous datasets are developing an emergent kind of intelligence—not quite human, but not entirely alien either.

Others caution that we’re still far from true AGI, and that scaling up current methods will hit diminishing returns. Real intelligence, they argue, requires grounding in the physical world, emotions, embodiment, and a richer internal life than any model has today.

Whether AGI arrives in a decade or a century—or never at all—remains one of the most profound open questions of our time.

The Future: From Partner to Creator

Even without AGI, the future of AI is staggering. In the coming decades, AI is expected to become more deeply embedded in every corner of life.

In science, AI will help discover new materials, accelerate drug development, and even assist in designing spacecraft or decoding the brain. In education, personalized tutors powered by AI could transform how we learn. In the workplace, AI could automate routine tasks, freeing humans for more creative and strategic work—while also raising fears of job displacement and economic inequality.

In art and creativity, AI has already begun composing symphonies, painting portraits, writing stories, and designing buildings. The boundary between human and machine creativity is shifting. Are these tools? Collaborators? Competitors?

One thing is clear: AI is becoming a co-pilot in the human story. Whether that story becomes a dystopia of surveillance and control or a renaissance of possibility depends not on the machines, but on us.

The Human in the Loop

As AI grows in power, so must our wisdom in guiding it. We must design systems that keep humans in the loop—able to intervene, understand, and shape outcomes.

This includes not just technical safeguards but cultural ones. We need education systems that teach not just coding, but ethics. We need policy frameworks that balance innovation with oversight. We need public engagement, not just private profit.

The future of AI is not inevitable. It is a design challenge, a societal experiment, a collective moral question. It is shaped by the decisions we make today.

A Mirror and a Flame

Artificial Intelligence holds a mirror to humanity. It reflects our intellect, our values, our flaws. But it also holds a flame—a spark of something new. A machine that learns, adapts, and evolves opens a door to a kind of mind the universe has never seen before.

Will it be wise? Will it be compassionate? Will it serve us, surpass us, or ignore us?

The answers are not written in code. They will be written in choices—in law, in design, in culture, and in courage.

The age of AI is not a chapter in a textbook. It is a chapter in our evolution.

And we are still writing it.