At first glance, the world seems to be a place of order. We wake up, dress ourselves, brew coffee, and step into cities that function—more or less—according to plan. Nature, too, appears to operate with elegance: birds migrate in formation, seasons cycle with precision, and the stars maintain their orbits in the night sky. Yet beneath this apparent harmony lies a deeper truth whispered by one of the most profound laws in all of physics. This truth is called entropy, and it tells a sobering, startling story: that everything, from the steam of your morning coffee to the galaxies themselves, is sliding inevitably toward chaos.

Entropy is not just an abstract principle tucked away in physics textbooks. It is a concept that permeates every facet of reality, shaping the flow of time, the behavior of systems, and even the structure of information and life itself. To understand entropy is to glimpse the arrow of time, to perceive why decay is more likely than perfection, and to come to terms with the inexorable tendency of the universe to fall apart.

A Brief History of a Difficult Idea

Entropy made its debut in the 19th century, during the birth of thermodynamics—the science of heat, energy, and motion. Engineers and scientists were trying to understand how steam engines worked. They knew that heat could be converted into mechanical energy, but there seemed to be unavoidable inefficiencies. Even the best engines lost some energy as wasted heat.

Among these early pioneers was Rudolf Clausius, a German physicist who, in 1865, coined the term “entropy” from the Greek word “tropē” (τροπή), meaning “transformation.” He observed that in any energy exchange, the total amount of energy remained constant (the first law of thermodynamics), but in every real process the energy available to do work decreased. This unavailable energy, this “waste,” seemed to grow inevitably. Clausius defined entropy to quantify this effect, and in doing so, captured one of the most counterintuitive realities of nature.

Clausius defined a change in entropy as the heat a system absorbs in a reversible process divided by its absolute temperature. Today entropy is often described as a measure of how dispersed a system’s energy is or, more loosely, as a measure of disorder: it quantifies how much randomness exists in a physical system. And most crucially, the second law of thermodynamics tells us that in any isolated system, one that exchanges neither energy nor matter with its surroundings, entropy never decreases. It always stays the same or increases.
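In symbols, Clausius’s definition and the second law can be written as follows, where δQ_rev is a small amount of heat absorbed reversibly and T is absolute temperature. The second line is a worked example under a simplifying assumption: two bodies large enough that their temperatures T_h > T_c stay fixed while a quantity of heat Q passes from the hot one to the cold one.

```latex
\begin{aligned}
dS &= \frac{\delta Q_{\text{rev}}}{T}, \qquad \Delta S_{\text{isolated}} \ge 0 \\
\Delta S_{\text{total}} &= -\frac{Q}{T_h} + \frac{Q}{T_c}
  = Q\,\frac{T_h - T_c}{T_h\,T_c} > 0
  \quad \text{for } Q > 0,\; T_h > T_c
\end{aligned}
```

Running the heat the other way (Q < 0) would make the total negative, which is exactly what the second law rules out.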

This was revolutionary. While Newton’s laws suggested a clockwork universe that could run backward or forward in time without contradiction, entropy introduced a preferred direction. Time, it said, flows in only one direction: from order to disorder, from usable energy to waste, from structure to chaos.

Ice Cubes, Coffee, and the Direction of Time

Let’s bring entropy down to Earth. Imagine you drop an ice cube into a hot cup of coffee. What happens? The ice melts, the coffee cools, and the whole system reaches a uniform temperature. Heat flows from the hotter coffee to the colder ice, never the reverse. The process is irreversible. You never see the ice cube spontaneously re-forming or the coffee heating itself back up.

Why not? Because such a reversal would involve a decrease in entropy. It would mean going from a more disordered state—lukewarm coffee—to a more ordered one—hot coffee and frozen ice. The second law of thermodynamics forbids this. Entropy must increase or, at best, stay constant in an idealized, reversible process.
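To put rough numbers on the coffee example, here is a minimal Python sketch. It simplifies the ice cube to ice-cold liquid water at 0 °C (273.15 K), ignoring the latent heat of melting, and assumes a perfectly insulated cup and a constant specific heat; the function name `mixing_entropy_change` and the chosen masses are illustrative, not measurements.

```python
import math

# Specific heat of liquid water in J/(kg*K), treated as constant (an approximation).
C_WATER = 4186.0


def mixing_entropy_change(m_hot, t_hot, m_cold, t_cold, c=C_WATER):
    """Return (final temperature, entropy change of the hot water, entropy change
    of the cold water, total entropy change) for two masses of water (kg) at
    absolute temperatures t_hot and t_cold (K) mixing in an insulated cup.
    Assumes no phase change and no heat exchanged with the surroundings."""
    # Energy balance with equal specific heats: the final temperature is the mass-weighted mean.
    t_final = (m_hot * t_hot + m_cold * t_cold) / (m_hot + m_cold)
    # With constant c, dS = m c dT / T integrates to m c ln(T_final / T_initial).
    ds_hot = m_hot * c * math.log(t_final / t_hot)     # negative: the hot water cools
    ds_cold = m_cold * c * math.log(t_final / t_cold)  # positive: the cold water warms
    return t_final, ds_hot, ds_cold, ds_hot + ds_cold


if __name__ == "__main__":
    # 250 g of coffee (treated as water) at 80 °C plus 50 g of water at 0 °C.
    t_f, ds_h, ds_c, ds_total = mixing_entropy_change(0.25, 353.15, 0.05, 273.15)
    print(f"final temperature: {t_f:.2f} K")
    print(f"hot: {ds_h:+.2f} J/K, cold: {ds_c:+.2f} J/K, total: {ds_total:+.2f} J/K")
```

The cold water gains more entropy than the hot water loses, because the same heat counts for more at a lower temperature, so the total is always positive.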

This mundane example reveals something cosmic. The fact that we remember the past but not the future, that we age rather than grow younger, that we can destroy a sandcastle with a kick but can’t build one with a puff of wind: all of these are tied to the growth of entropy. Entropy is the arrow of time.

Microstates, Macrostates, and the Game of Probabilities

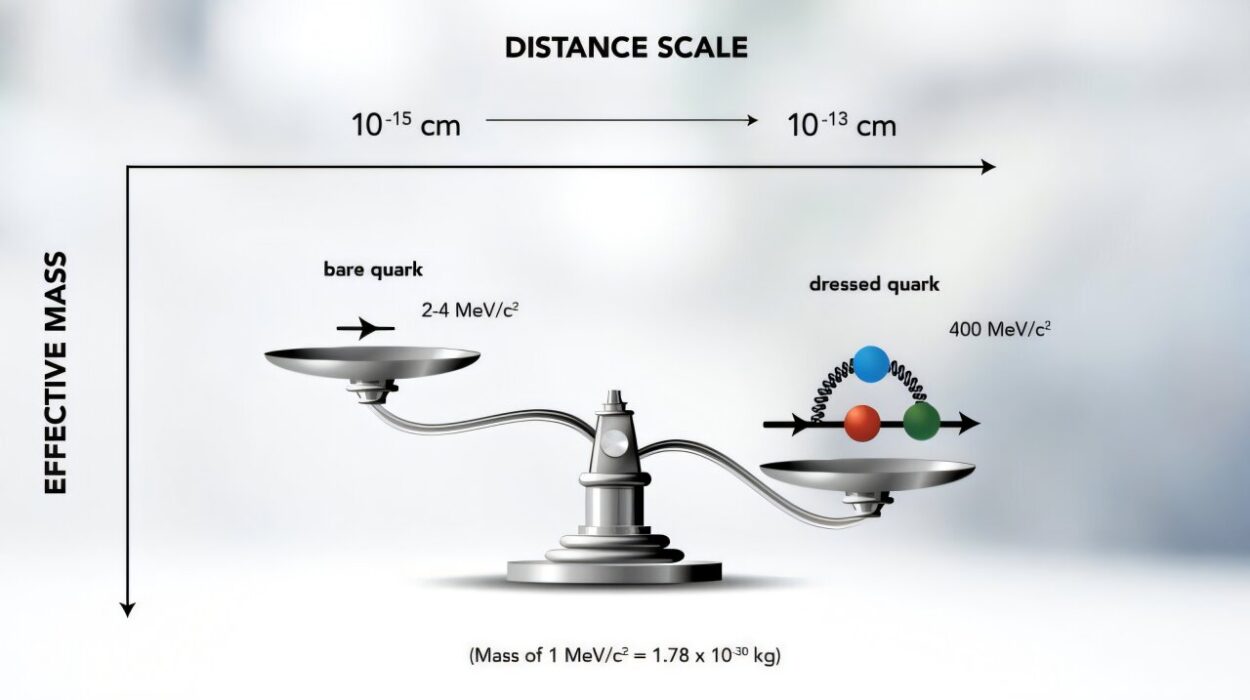

To understand why entropy increases, we need to dive into the world of statistical mechanics, pioneered by Ludwig Boltzmann. Boltzmann asked: what is entropy, really, at a microscopic level? His answer changed physics forever.

Imagine a box filled with gas molecules. You can describe the box in two ways. First, the macrostate: things like temperature, pressure, and volume—what we can observe. Second, the microstate: the exact position and velocity of every single molecule in the box—information we cannot realistically obtain.

Boltzmann realized that a macrostate (say, the gas uniformly spread throughout the box) corresponds to a vast number of possible microstates. Another macrostate (say, all the gas molecules huddled in one corner) corresponds to far fewer microstates. So statistically speaking, the uniform distribution is vastly more probable. It has higher entropy.
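A toy version of Boltzmann’s box makes the counting concrete. This Python sketch assumes distinguishable molecules and tracks only which half of the box each one occupies, ignoring velocities; `microstates` is a hypothetical helper name used for illustration.

```python
from math import comb


def microstates(n_molecules, n_left):
    """Count the microstates of the macrostate 'n_left molecules in the left half':
    the number of ways to choose which distinguishable molecules sit on the left."""
    return comb(n_molecules, n_left)


N = 100
corner = microstates(N, N)        # every molecule on the left: exactly 1 way
uniform = microstates(N, N // 2)  # an even split: roughly 1.01e29 ways
total = 2 ** N                    # each molecule independently left or right

print(f"all on one side: {corner} microstate(s), probability {corner / total:.2e}")
print(f"even split:      {uniform:.3e} microstates, probability {uniform / total:.3f}")
```

Even the single most likely macrostate, the exact 50/50 split, holds only about 8% of all microstates; the uniform look dominates because nearly all microstates lie close to an even split.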

From this insight comes a simple yet profound equation, the one engraved on Boltzmann’s tombstone: S = k log W, where S is entropy, k is Boltzmann’s constant, log is the natural logarithm, and W is the number of microstates corresponding to a given macrostate. The greater the number of microstates, the greater the entropy. Disorder is simply more probable than order.
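Because W is astronomically large for real systems, it is easier to work with ln W directly. The Python sketch below applies S = k ln W to a textbook case under simplifying assumptions: an ideal gas whose molecules each gain twice as many available positions when the volume of the box doubles. The function names are illustrative.

```python
import math

K_B = 1.380649e-23   # Boltzmann constant in J/K (exact by definition in the 2019 SI)
N_A = 6.02214076e23  # Avogadro constant in 1/mol (exact by definition in the 2019 SI)


def boltzmann_entropy_from_log_w(ln_w):
    """S = k_B ln W, taking ln W as input because W itself is far too large
    to store as a number for any macroscopic system."""
    return K_B * ln_w


def entropy_change_volume_doubling(n_molecules):
    # Doubling the volume doubles the positions available to each molecule,
    # so W grows by a factor of 2**N and ln W grows by N ln 2.
    return boltzmann_entropy_from_log_w(n_molecules * math.log(2))


print(f"{entropy_change_volume_doubling(N_A):.3f} J/K")  # one mole: R ln 2, about 5.76 J/K
```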

This explains why entropy increases. Systems tend to move from less probable states (low entropy) to more probable ones (high entropy). The increase is not a strict mechanical necessity but a statistical one: for macroscopic systems, the odds against a spontaneous decrease are so overwhelming that it is never observed. The universe is rolling dice, and the odds are always in favor of chaos.
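The drift toward the most probable macrostate can be watched in the Ehrenfest urn model, a classic toy model standing in here for a real gas. This Python sketch assumes that at each step one molecule, chosen at random, hops to the other half of the box. Nothing in the rule favors either half, yet the count in the left half slides from 1,000 toward about 500 and then merely fluctuates around it.

```python
import random


def ehrenfest(n_molecules=1000, steps=5000, seed=0):
    """Ehrenfest urn model: start with every molecule in the left half; at each
    step pick one molecule uniformly at random and move it to the other half.
    Returns the number of molecules in the left half after each step."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    left = n_molecules
    history = [left]
    for _ in range(steps):
        # The chosen molecule is on the left with probability left / n_molecules.
        if rng.random() < left / n_molecules:
            left -= 1
        else:
            left += 1
        history.append(left)
    return history


h = ehrenfest()
print(h[0], h[500], h[2000], h[-1])
```

Every individual hop could in principle be undone by the next one; the one-way drift comes purely from there being vastly more ways to be near 500 than near 1,000.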

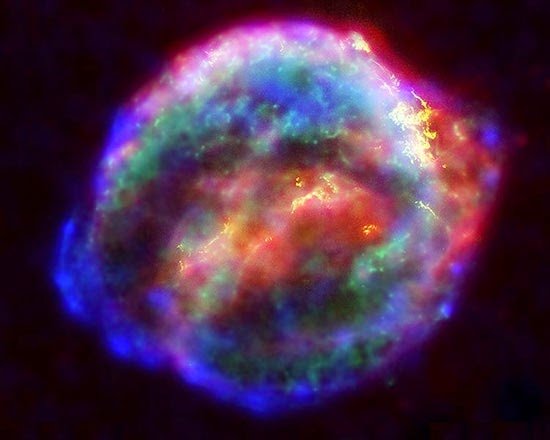

Entropy and the Heat Death of the Universe

If entropy always increases, where does it end? This brings us to one of the most haunting ideas in cosmology: the heat death of the universe.

In the distant future, assuming the laws of physics as we know them remain unchanged, all stars will burn out, galaxies will dissipate, and black holes will evaporate via Hawking radiation. The universe will become a cold, dark, featureless expanse of uniform temperature and maximum entropy. There will be no usable energy left to perform work, no gradients, no life. Just the silent stillness of thermodynamic equilibrium.

This ultimate fate, predicted by the second law, is called the heat death. It is not an explosion or a collapse but a fading away—a descent into perfect disorder, where everything is homogenized and nothing ever changes again. Time itself, in a sense, will cease to have meaning, for all processes that mark the passage of time will have stopped.

Entropy, then, is not merely a law of physics. It is the curtain call of existence.

Life vs. Entropy: The Great Rebellion

And yet, here we are—living beings who create order, build civilizations, write symphonies, and ponder the stars. Doesn’t life defy entropy?

At first glance, it might seem so. Life appears to generate order: a single fertilized egg becomes a structured organism; seeds grow into trees; brains organize thoughts. But this order doesn’t violate the second law, because a living organism is not an isolated system. Organisms take in energy (from food, sunlight, and so on) and expel waste and heat. In doing so, they decrease entropy locally, within themselves, while increasing it by a greater amount in their surroundings.

Think of it like cleaning your room. You can organize it, reducing entropy locally, but you must expend energy to do so, perhaps by burning calories or consuming electricity. Generating that energy, whether through chemical reactions in your body or in a power plant, produces more entropy than your tidying removes, so entropy increases overall. The second law remains unbroken.
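The bookkeeping behind this argument fits in a few lines. The Python sketch below assumes the surroundings act as a large reservoir at a fixed temperature, and its function names are illustrative. It shows that lowering a system’s entropy by ΔS requires releasing at least T_env · ΔS of heat into the surroundings.

```python
def total_entropy_change(delta_s_local, q_released, t_env):
    """Entropy change (J/K) of system plus surroundings when a system lowers its
    own entropy by delta_s_local (J/K, given as a positive number) while releasing
    q_released joules of heat into surroundings held at t_env kelvin."""
    return -delta_s_local + q_released / t_env


def min_heat_to_release(delta_s_local, t_env):
    # The second law requires total_entropy_change(...) >= 0,
    # which rearranges to q_released >= t_env * delta_s_local.
    return t_env * delta_s_local


# Example: a system lowering its entropy by 1 J/K in 300 K surroundings.
q_min = min_heat_to_release(1.0, 300.0)
print(q_min, total_entropy_change(1.0, q_min, 300.0))
```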

Life thrives in this delicate balance: riding the flow of entropy, capturing energy before it degrades, and using it to build momentary islands of order. Evolution, metabolism, and thought itself are thermodynamic processes—magnificent, ephemeral eddies in the river of increasing disorder.

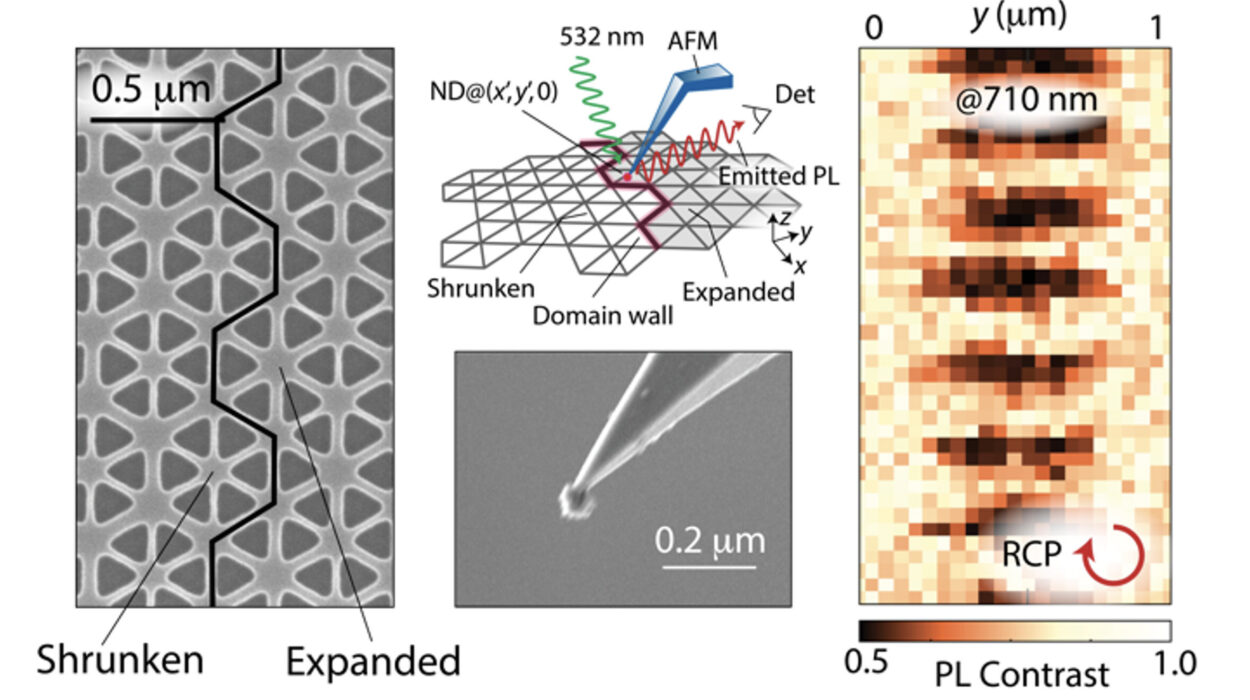

Information, Entropy, and the Digital World

Entropy is not just a physical concept; it also intersects with information theory. In the mid-20th century, Claude Shannon developed a mathematical framework for quantifying information, drawing surprising parallels to thermodynamic entropy.

In Shannon’s formulation, the entropy of a message reflects its unpredictability or uncertainty. A completely random string of characters has high entropy—it’s hard to compress or predict. A repetitive, structured message has low entropy.
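Shannon’s measure is H = −Σ p log₂ p, summed over the probability p of each symbol. The Python sketch below assumes each symbol’s probability can be estimated from its frequency within the message itself; `shannon_entropy` is an illustrative name.

```python
import math
from collections import Counter


def shannon_entropy(message):
    """Shannon entropy in bits per symbol, H = sum(p * log2(1 / p)), where p is
    each symbol's relative frequency within the message (an empirical estimate)."""
    if not message:
        return 0.0
    n = len(message)
    counts = Counter(message)
    return sum((c / n) * math.log2(n / c) for c in counts.values())


for text in ["aaaaaaaa", "abababab", "abcdefgh"]:
    print(f"{text!r}: {shannon_entropy(text):.3f} bits per symbol")
```

This frequency-only estimate ignores symbol order, so the repetitive "abababab" scores a full bit per symbol, the same as a random mix of equally frequent a’s and b’s. Capturing that kind of structure requires models that condition on the preceding symbols.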

This concept reveals an intimate connection between entropy and information. To store or transmit information reliably, we must reduce the uncertainty in our signals, which means lowering their entropy. But doing so requires energy, which in turn increases entropy somewhere else. Even erasing a bit from memory, resetting it to a known state regardless of whether it held a 1 or a 0, has an unavoidable entropic cost: at least k T ln 2 of heat must be dissipated, as Rolf Landauer showed in 1961.
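Landauer’s bound is easy to evaluate. This Python sketch assumes an operating temperature of 300 K, roughly room temperature; `landauer_limit` is an illustrative name, and the result is a theoretical floor, not what real hardware dissipates.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact by definition in the 2019 SI)


def landauer_limit(temperature_k):
    """Minimum heat in joules that must be dissipated to erase one bit of
    information at the given absolute temperature: k_B * T * ln 2."""
    return K_B * temperature_k * math.log(2)


e_bit = landauer_limit(300.0)  # roughly room temperature
print(f"one bit at 300 K: {e_bit:.3e} J")  # about 2.87e-21 J
print(f"one gigabyte (8e9 bits) at the limit: {8e9 * e_bit:.3e} J")
```

Present-day chips dissipate many orders of magnitude more than this per operation, so the limit matters today mainly as a statement of principle: erasure always costs something.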

Thus, in our digital age, entropy is everywhere—in our computers, our smartphones, our data networks. Every click, every upload, every calculation dances to the tune of thermodynamic constraints. Information is not abstract. It is physical. And it is costly.

Entropy in Economics, Art, and Philosophy

The idea of entropy has seeped into fields far beyond physics. In economics, entropy has been used to model the flow of goods, energy, and wealth. Some ecological economists argue that unlimited growth is impossible because it conflicts with the second law; the more we produce, the more we degrade the environment.

In art and literature, entropy often symbolizes decay, fragmentation, or the collapse of meaning. The disintegration of narrative, the abstraction of form, and the embrace of randomness in modernist and postmodernist works echo the entropic dissolution of structure.

Philosophically, entropy raises profound questions. If the universe is moving toward maximum disorder, what is the point of struggle, of creation, of life? Some see this as nihilistic. Others see it as ennobling: to create beauty in the face of inevitable chaos is a heroic act.

Entropy is not a villain, but a mirror. It reflects the nature of reality—not as a static picture, but as a dynamic story of transformation, complexity, and impermanence.

The Mystery of Low Entropy Beginnings

One of the deepest puzzles in physics today is why the universe began in such a low-entropy state. If high entropy is the natural, likely condition, why did the Big Bang produce a cosmos of extraordinary order?

This mystery touches on the origins of time itself. Some physicists, like Roger Penrose, argue that the low entropy of the early universe is what gives rise to the arrow of time. Others speculate that our universe might be just one bubble in a multiverse, with different regions having different entropy histories.

Still others, including cosmologist Sean Carroll, have explored more radical ideas, such as models in which time itself emerges from entanglement between quantum systems, or in which the arrow of time depends on how observers coarse-grain what they see. These are deep, speculative waters. But they underscore one thing: entropy is not merely a thermodynamic quantity. It is a key to understanding why time flows, why events unfold, and why we remember the past but not the future.

Chaos Is Not the End

Despite entropy’s association with disorder, it is not synonymous with ruin. Chaos, in the scientific sense, is not randomness but sensitive dependence on initial conditions. Complexity can arise from entropy, not in spite of it.
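Sensitive dependence on initial conditions can be seen in the logistic map, a standard textbook example of deterministic chaos, used here as an illustration rather than a model of any physical system. This Python sketch runs the map with r = 4 from two starting points that differ by only 1e-10; the rule contains no randomness, yet the gap roughly doubles each step until the two trajectories become unrelated.

```python
def logistic_trajectory(x0, r=4.0, steps=60):
    """Iterate the logistic map x -> r * x * (1 - x) starting from x0.
    For r = 4 the map is fully deterministic yet chaotic."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1 - xs[-1]))
    return xs


a = logistic_trajectory(0.2)
b = logistic_trajectory(0.2 + 1e-10)  # start one part in two billion away
for step in (0, 10, 20, 30, 40, 50):
    print(step, abs(a[step] - b[step]))
```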

The cosmos, in its relentless drive toward equilibrium, generates astonishing patterns: stars, galaxies, weather systems, ecosystems, minds. These are not violations of the second law. They are its consequences—complex, structured intermediates in the unfolding entropic drama.

We are part of this story. Born of stars, forged in chaos, we reflect the universe’s own striving for momentary order. And in that striving—in art, in science, in love—we defy not the laws of thermodynamics, but their emptiness. We give meaning to the drift.

Final Reflections: Embracing the Inevitable

Entropy teaches us many things. It tells us why ice melts, why memories fade, why buildings crumble, why life is fragile. But it also reveals the sublime unity of existence: from atoms to galaxies, from heat to thought, everything flows along a thermodynamic tide.

This is not a cause for despair. It is a call to cherish what we build, to honor the fleeting order we create, to marvel at the improbable miracle of consciousness.

Entropy will win in the end. But in the meantime, there is light, motion, music, and thought. And perhaps that is the most meaningful rebellion of all.